Framepack AI

Revolutionary AI for long video generation with uncompromised quality

What is Framepack AI? Complete Overview

Framepack AI is a groundbreaking neural network structure, developed by researchers at Stanford University, that changes how next-frame-prediction video models handle long-form content. It tackles the 'forgetting-drifting dilemma': improving a model's memory of earlier frames tends to speed up error accumulation (drifting), while suppressing drift tends to weaken that memory (forgetting). FramePack addresses this by compressing input frames according to their importance, so the transformer's context length stays fixed regardless of video duration. As a result, the model can condition on many more frames without a matching increase in computation, which makes long video generation practical and efficient. Target users include content creators, filmmakers, AI researchers, and enterprises that need high-quality, consistent long-form video.

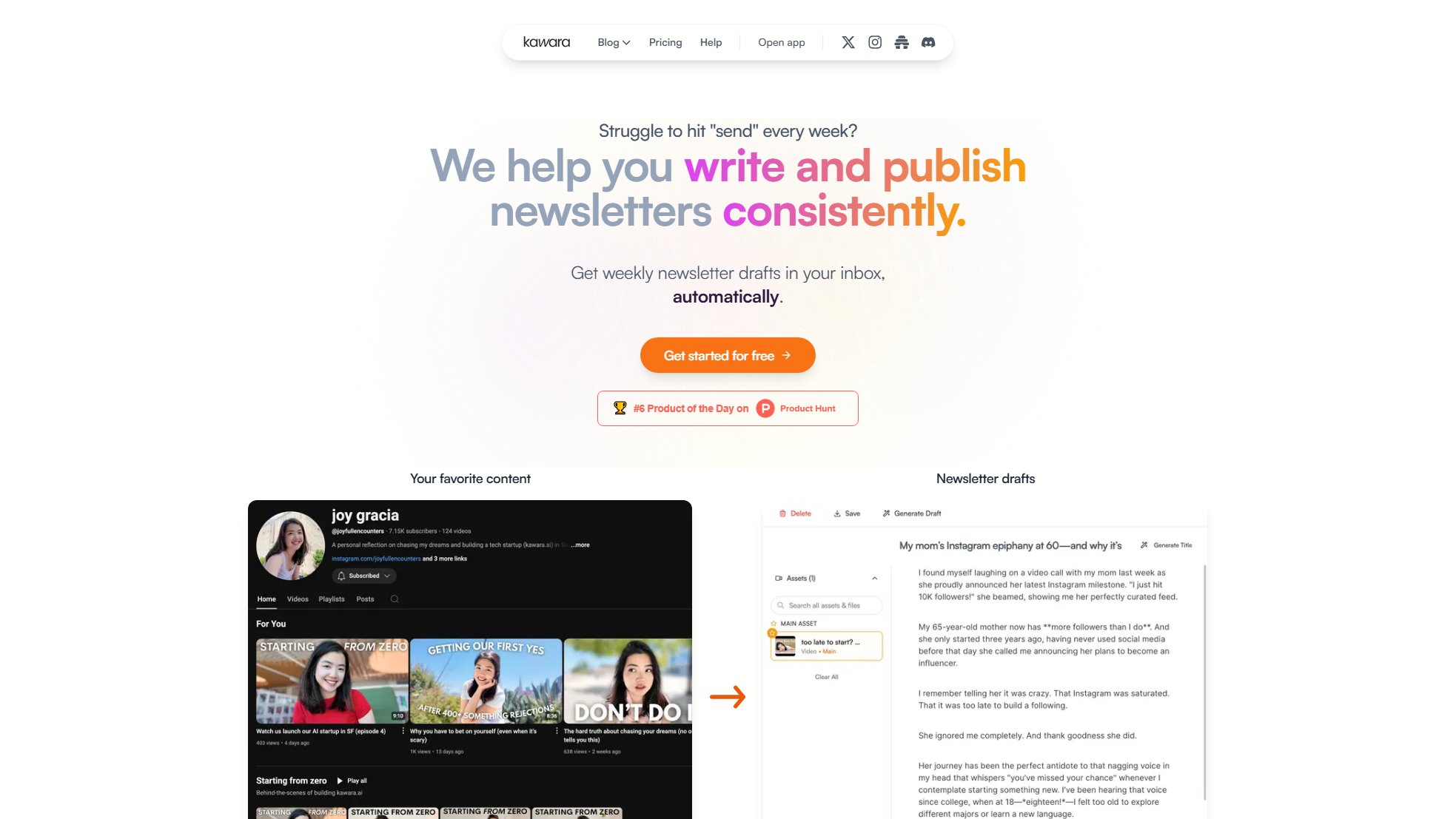

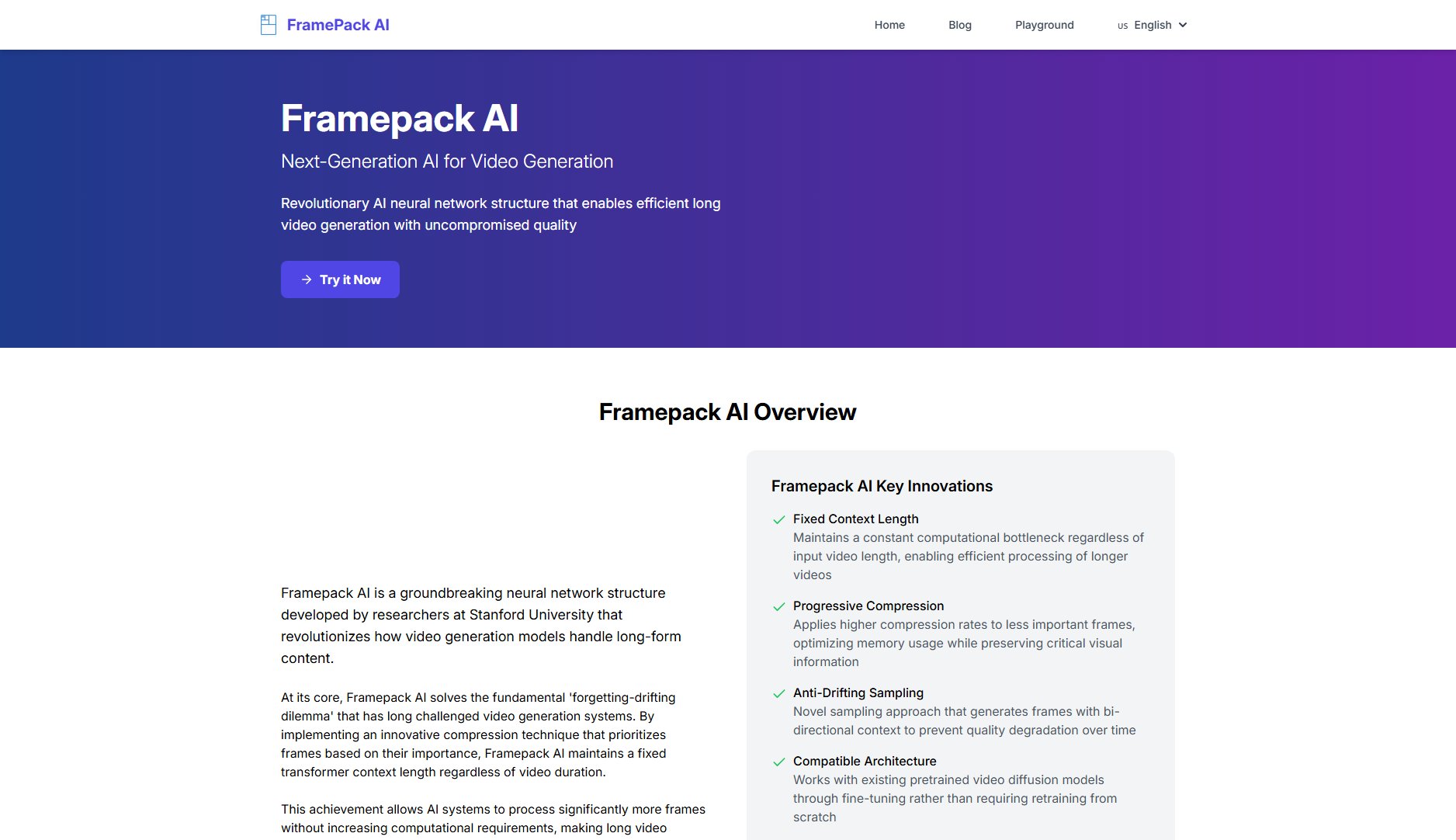

Framepack AI Interface & Screenshots

Official screenshot of the Framepack AI interface.

What Can Framepack AI Do? Key Features

Fixed Context Length

Keeps the transformer's context length constant regardless of input video length, so the compute cost of each generation step stays bounded and longer videos can be processed without extra resources.
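One way to see why the context length stays fixed: assume a geometric compression schedule in which the i-th most recent of T history frames keeps L_f / λ^i tokens, where L_f is the token count of an uncompressed frame, λ > 1 is the compression parameter, and S frames are being predicted (this notation is illustrative). The total context is then bounded by a constant that does not depend on T:

```latex
L = S\,L_f + \sum_{i=0}^{T-1} \frac{L_f}{\lambda^{i}}
  = S\,L_f + L_f \, \frac{1 - \lambda^{-T}}{1 - \lambda^{-1}}
  < \left(S + \frac{\lambda}{\lambda - 1}\right) L_f
```

With λ = 2, for example, the entire history never costs more than 2L_f tokens, however long the video grows.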

Progressive Compression

Applies higher compression to less important frames (typically those farther in time from the frame being predicted), reducing memory usage while preserving the visual information that matters most for consistent output.
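To make the importance ordering concrete, here is a minimal sketch that assigns each history frame a token budget by temporal distance, assuming a geometric schedule (budget L_f / λ^i for the i-th most recent frame). The function name `frame_token_budgets`, the 1536-token frame size, and the rule of dropping frames whose budget rounds to zero are illustrative assumptions, not FramePack's actual implementation.

```python
import math
from typing import List


def frame_token_budgets(num_history: int, tokens_per_frame: int, lam: float) -> List[int]:
    """Return a token budget for each history frame, most recent first.

    Hypothetical sketch: the i-th most recent frame (i = 0, 1, ...) keeps
    floor(tokens_per_frame / lam**i) tokens. Once a budget rounds down to
    zero, that frame and every older one are dropped (an assumption).
    """
    if lam <= 1:
        raise ValueError("lam must be greater than 1 so budgets shrink with distance")
    budgets: List[int] = []
    for i in range(num_history):
        budget = math.floor(tokens_per_frame / lam ** i)
        if budget == 0:
            break  # every older frame would also round to zero
        budgets.append(budget)
    return budgets


if __name__ == "__main__":
    # Total context for growing history lengths; with lam = 2 it levels off
    # just under 2 * 1536 = 3072 tokens.
    for n in (1, 4, 16, 256):
        print(n, sum(frame_token_budgets(n, 1536, 2.0)))
```

Recent frames keep most of their detail while older ones shrink toward a single token, so adding more history barely changes the total.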

Anti-Drifting Sampling

A novel sampling approach that fixes anchor frames (such as the start and end of the video) early, so that later frames are generated with bi-directional context. This limits quality degradation over time and mitigates the exposure bias problem.

Compatible Architecture

Works with existing pretrained video diffusion models like HunyuanVideo and Wan through fine-tuning rather than requiring complete retraining from scratch.

Higher Batch Sizes

Enables training with batch sizes comparable to image diffusion models (up to 64 samples per batch), making training about 5x faster than with traditional methods.

Best Framepack AI Use Cases & Applications

Extended Narrative Video Creation

Content creators can generate multi-minute narrative videos with consistent characters and scene continuity, avoiding the drift that limits conventional AI video models.

Photo Animation

Transform still images into smooth, consistent video sequences while preserving original details and identity, perfect for memorial videos or historical recreations.

Educational Content Generation

Produce long-form educational videos where conceptual consistency and gradual visual development are crucial for effective learning.

How to Use Framepack AI: Step-by-Step Guide

1. Prepare your input source (text prompt, image, or existing video) and select the desired output length and resolution.

2. Choose a sampling method (vanilla, anti-drifting, or inverted anti-drifting) based on your quality and temporal-coherence requirements.

3. Configure the compression parameter (λ) and context-length settings to suit your hardware and quality needs.

4. Start generation. FramePack progressively compresses older frames so that quality stays consistent throughout the video.

5. Review and, if needed, refine the output. Because generation is stable, targeted adjustments can be made without regenerating the whole video.

Framepack AI Pros and Cons: Honest Review

Pros

Considerations

Is Framepack AI Worth It? FAQ & Reviews

FramePack is efficient: training uses 8×A100-80GB GPUs, while inference runs on a single A100-80GB or two RTX 4090s, using about 40 GB of memory for 480p video generation.

FramePack supports multi-resolution training with aspect-ratio bucketing, using a minimum unit size of 32 pixels and a set of resolution buckets at 480p.
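A small sketch of how 32-pixel aspect-ratio bucketing can work. It assumes "480p" corresponds to a target area of 640×480 pixels and restricts each side to 256 to 1024 pixels; `make_buckets` and `nearest_bucket` are hypothetical helpers, not FramePack's own bucketing code.

```python
from typing import List, Tuple

UNIT = 32  # minimum bucket granularity in pixels, as stated above


def make_buckets(target_area: int = 640 * 480, min_side: int = 256,
                 max_side: int = 1024) -> List[Tuple[int, int]]:
    """Hypothetical bucket list of (height, width) pairs.

    Both sides are multiples of UNIT and each bucket's area stays close to
    target_area, so every bucket costs roughly the same to process.
    """
    buckets: List[Tuple[int, int]] = []
    for h in range(min_side, max_side + 1, UNIT):
        w = round(target_area / h / UNIT) * UNIT  # nearest multiple of UNIT
        if min_side <= w <= max_side:
            buckets.append((h, w))
    return buckets


def nearest_bucket(h: int, w: int, buckets: List[Tuple[int, int]]) -> Tuple[int, int]:
    """Choose the bucket whose aspect ratio (height / width) best matches the input."""
    return min(buckets, key=lambda b: abs(b[0] / b[1] - h / w))


if __name__ == "__main__":
    buckets = make_buckets()
    print(len(buckets), "buckets")
    print(nearest_bucket(1080, 1920, buckets))  # a 16:9 source frame
```

Keeping the area roughly constant means samples from different buckets have similar token counts, which keeps batches balanced across aspect ratios.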

FramePack trains about 5x faster (48 vs. 240 hours for a 13B model), allows 4x larger batch sizes (64 vs. 16), and maintains better quality in longer videos through its compression approach.

Although FramePack is primarily designed for high-quality generation, its fixed context length makes it promising for real-time applications with further optimization, especially streaming scenarios.

FramePack offers three sampling methods: vanilla (sequential, forward in time), anti-drifting (anchor frames first, then the frames in between), and inverted anti-drifting (generation in reverse temporal order), with the last being the most effective for quality.
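The three generation orders can be sketched as schedules over equal-length video sections. This is a simplified, hypothetical model: the function name `generation_schedule` and the section indexing are illustrative, anti-drifting is reduced to "generate both endpoints first, then fill the middle", and inverted anti-drifting is reduced to "generate from the end back toward the input frame".

```python
from typing import List


def generation_schedule(method: str, num_sections: int) -> List[List[int]]:
    """Return the order in which video sections are generated.

    Each inner list is one generation step; section 0 is the start of the
    video. Simplified, hypothetical model of the three sampling orders.
    """
    if num_sections < 1:
        raise ValueError("num_sections must be >= 1")
    last = num_sections - 1
    if method == "vanilla":
        # Strictly causal: each section is conditioned only on earlier ones.
        return [[i] for i in range(num_sections)]
    if method == "anti_drifting":
        # Step 1 fixes both endpoints; later steps fill the gap and can see
        # context on both sides (bi-directional).
        first_step = [0] if last == 0 else [0, last]
        return [first_step] + [[i] for i in range(1, last)]
    if method == "inverted_anti_drifting":
        # Generate from the end of the video back toward section 0, which
        # sits next to the user's input frame.
        return [[i] for i in range(last, -1, -1)]
    raise ValueError(f"unknown method: {method!r}")


if __name__ == "__main__":
    for m in ("vanilla", "anti_drifting", "inverted_anti_drifting"):
        print(m, generation_schedule(m, 4))
```

In both anti-drifting orders of this sketch, the frame the generator is heading toward (the far endpoint, or the user's input image) is fixed before the in-between sections are produced, which is the intuition behind their resistance to drift.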