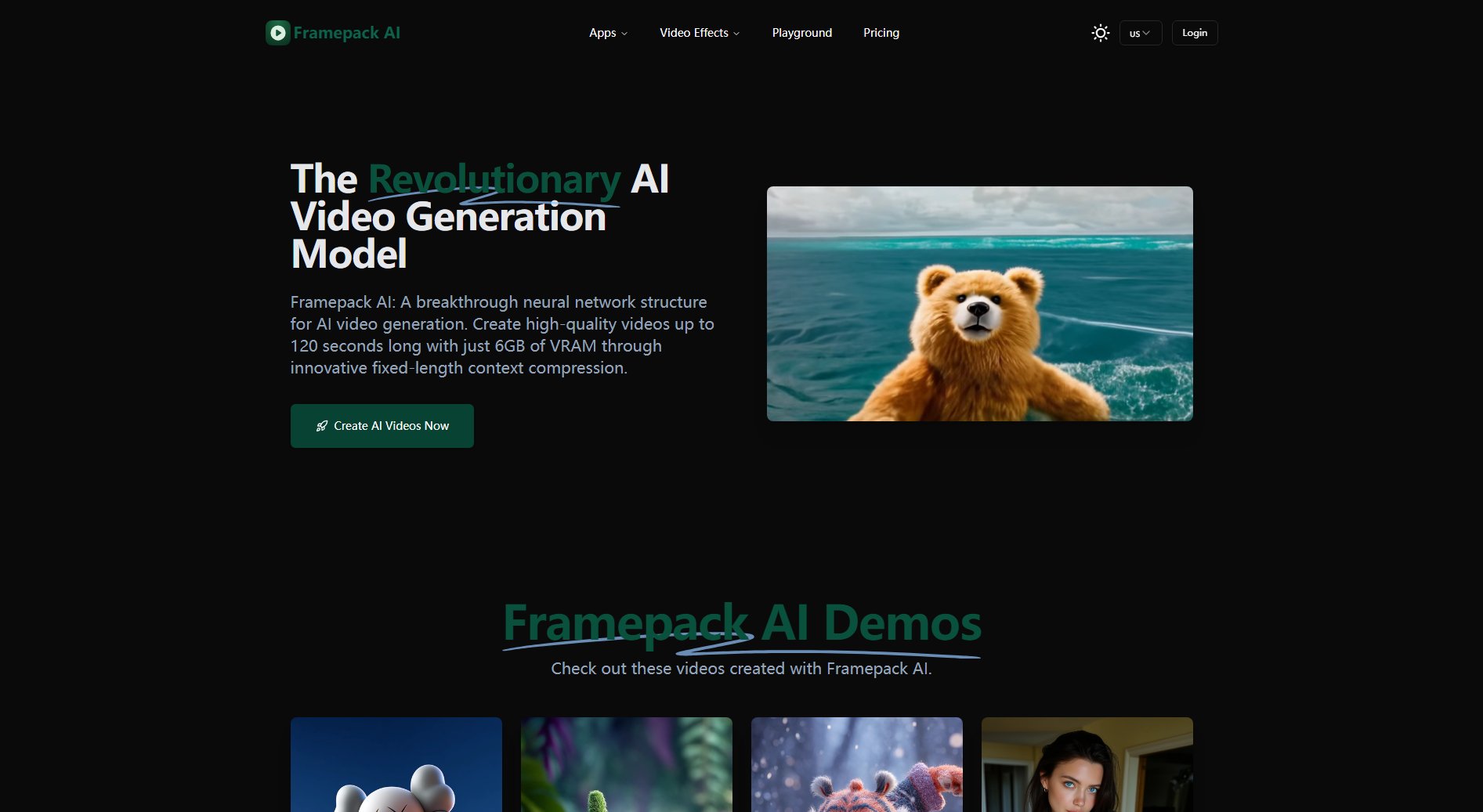

Framepack AI

Advanced neural network for high-quality, long-form video generation

What is Framepack AI? Complete Overview

Framepack AI is a neural network structure for AI video generation based on next-frame prediction. Its fixed-length context compression lets users generate high-quality videos of 60 to 120 seconds with as little as 6GB of VRAM. Developed by ControlNet creator Lvmin Zhang and Stanford professor Maneesh Agrawala, the open-source project targets three key challenges in AI video generation: memory constraints, computational cost, and quality consistency over long videos. It suits content creators, animators, researchers, and developers who want to produce long-form video without prohibitive hardware requirements.

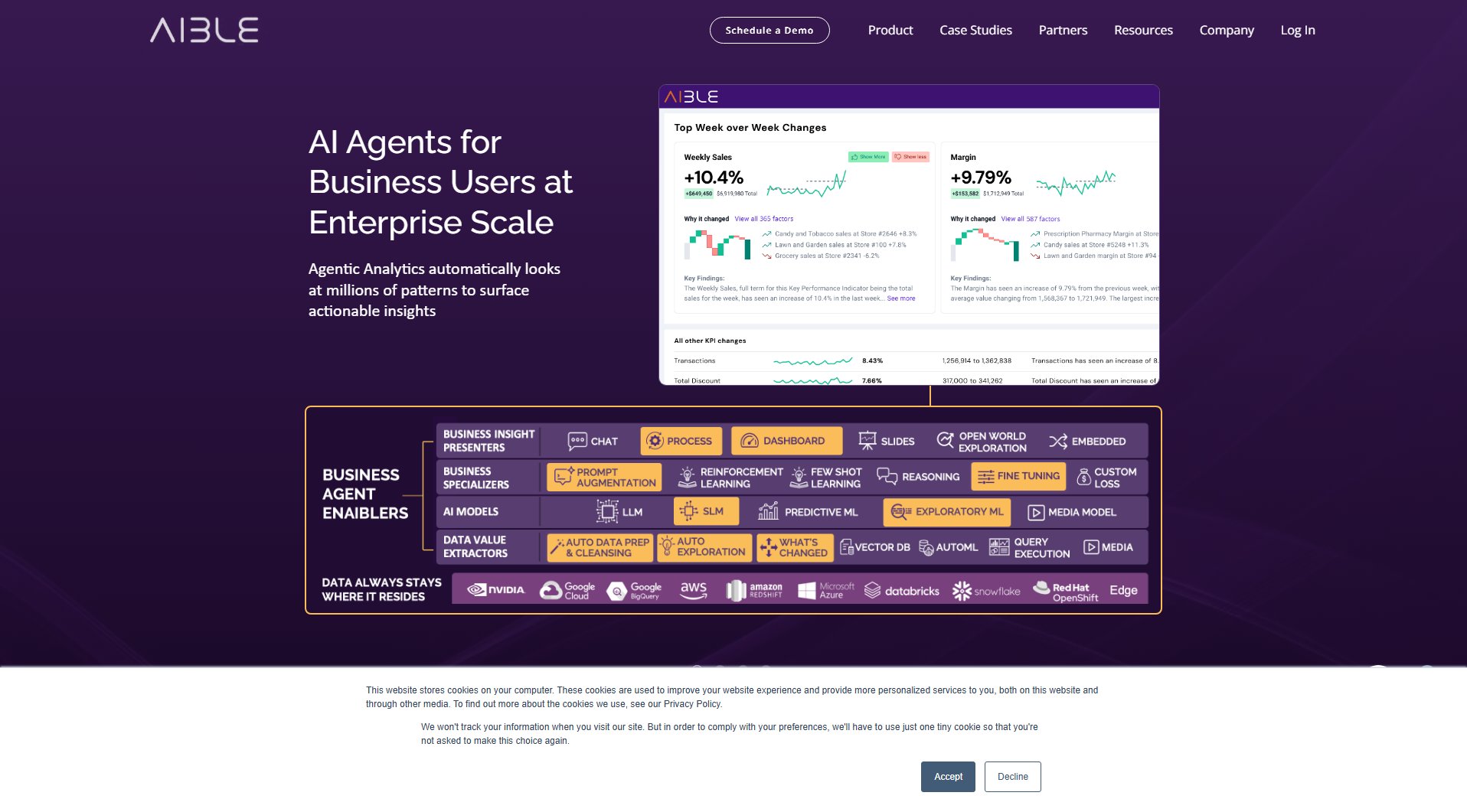

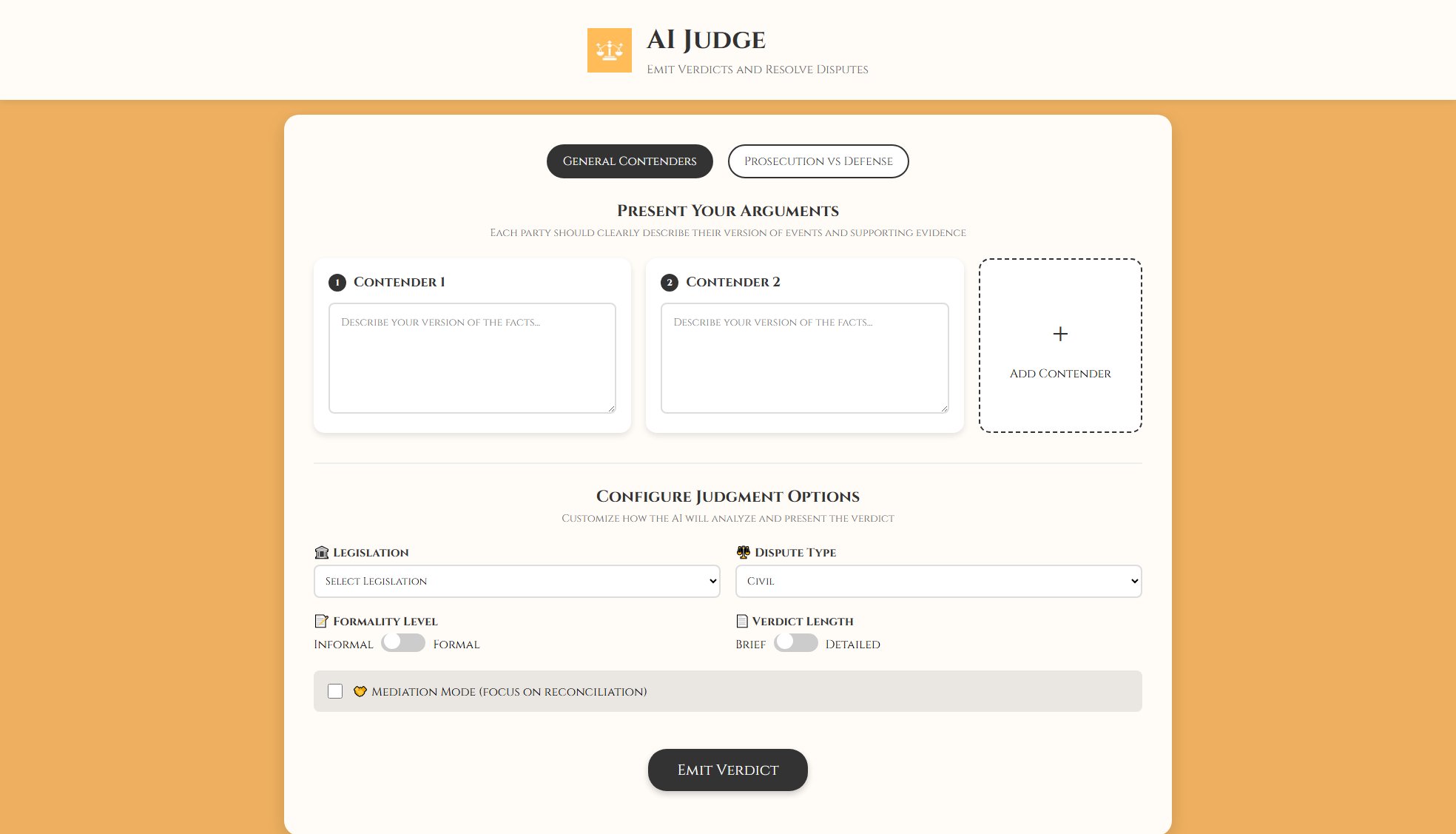

Framepack AI Interface & Screenshots

Official screenshot of the Framepack AI tool interface.

What Can Framepack AI Do? Key Features

Fixed-Length Context Compression

Framepack compresses all input frames into fixed-length context 'notes', so memory usage does not scale with video length. This sharply reduces VRAM requirements while maintaining video quality, making long-form video generation feasible on consumer-grade GPUs.
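To see why a fixed-length context keeps memory flat, consider a toy model in which each older frame is compressed more aggressively than the frame after it. The sketch below is not Framepack's actual code: the per-frame token count (`tokens_per_frame=1536`) and the halving schedule (`rate=2.0`) are illustrative assumptions, chosen only to show that the total context length approaches a ceiling instead of growing with the number of frames.

```python
def context_length(num_frames: int, tokens_per_frame: float = 1536, rate: float = 2.0) -> float:
    """Total context tokens when each older frame is compressed by a further factor of `rate`.

    tokens_per_frame and rate are illustrative assumptions, not Framepack's real values.
    """
    total = 0.0
    term = float(tokens_per_frame)  # the most recent frame is kept at full size
    for _ in range(num_frames):
        total += term
        term /= rate  # the next-older frame gets a smaller share of the context
    return total


def context_ceiling(tokens_per_frame: float = 1536, rate: float = 2.0) -> float:
    """Limit of the geometric series above as num_frames grows (requires rate > 1)."""
    return tokens_per_frame * rate / (rate - 1)


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        uncompressed = n * 1536
        print(f"{n:>5} frames: compressed={context_length(n):.1f} "
              f"uncompressed={uncompressed} ceiling={context_ceiling():.1f}")
```

Under these assumptions the compressed context never exceeds twice the size of a single frame, while an uncompressed context would grow in proportion to the frame count.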

Minimal Hardware Requirements

With only 6GB of VRAM, Framepack AI can generate high-quality videos of 60 to 120 seconds at 30fps. It is compatible with NVIDIA RTX 30XX, 40XX, and 50XX series GPUs, so it does not require expensive professional hardware.

Efficient Generation

Framepack AI takes approximately 2.5 seconds per frame on an RTX 4090 desktop GPU. With optimization techniques such as teacache, this drops to about 1.5 seconds per frame, roughly a 40% reduction in generation time.
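These per-frame figures translate into total render time with simple arithmetic. The sketch below combines them with the 30fps and 60 to 120 second figures quoted earlier. It assumes the per-frame rate stays constant for the whole video, which is a simplification, and the function name is hypothetical.

```python
def generation_hours(video_seconds: float, fps: int = 30, seconds_per_frame: float = 2.5) -> float:
    """Estimated wall-clock hours to generate a video at a constant per-frame rate."""
    frames = video_seconds * fps
    return frames * seconds_per_frame / 3600


if __name__ == "__main__":
    for length in (60, 120):
        base = generation_hours(length)                             # 2.5 s/frame
        optimized = generation_hours(length, seconds_per_frame=1.5)  # with teacache
        print(f"{length}s video: {base:.2f} h unoptimized, {optimized:.2f} h with teacache")
```

For a 60-second video this works out to 1,800 frames, or about 1.25 hours at 2.5 seconds per frame and 0.75 hours at 1.5 seconds per frame.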

Strong Anti-Drift Capabilities

The system employs progressive compression and differential handling of frames by importance to mitigate the 'drift' phenomenon common in long video generation. This ensures consistent quality throughout extended sequences, maintaining visual coherence from start to finish.

Multiple Attention Mechanisms

Framepack supports various attention mechanisms including PyTorch attention, xformers, flash-attn, and sage-attention. This flexibility allows users to optimize performance for different hardware setups and achieve the best possible results with their specific configuration.
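Before picking an attention mechanism, it can help to check which optional packages are installed. The sketch below does this without importing them. The import names (`sageattention`, `flash_attn`, `xformers`) are assumptions about how these packages are usually installed, and the ordering is a hypothetical preference rather than anything Framepack prescribes.

```python
import importlib.util

# Label -> import name. Import names are assumptions; adjust if your install differs.
OPTIONAL_BACKENDS = {
    "sage-attention": "sageattention",
    "flash-attn": "flash_attn",
    "xformers": "xformers",
}


def available_backends(find_spec=importlib.util.find_spec) -> list:
    """List attention backends whose packages can be located, without importing them."""
    found = [label for label, module in OPTIONAL_BACKENDS.items()
             if find_spec(module) is not None]
    # PyTorch's built-in attention is available whenever torch itself is installed.
    if find_spec("torch") is not None:
        found.append("PyTorch attention")
    return found


if __name__ == "__main__":
    print("Detected attention backends:", available_backends() or "none")
```

The `find_spec` parameter exists only so the lookup can be replaced in tests. In normal use, call `available_backends()` with no arguments.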

Open-Source Ecosystem

As an open-source project, Framepack AI benefits from an active community of developers and researchers. The code and models are freely available on GitHub, with ongoing improvements and a growing ecosystem of plugins and integrations with other AI tools.

Best Framepack AI Use Cases & Applications

Content Creation

Digital creators can use Framepack AI to produce long-form video content for platforms like YouTube or TikTok, generating consistent, high-quality videos without expensive production equipment.

Animation Production

Animators can leverage Framepack's efficient generation to create extended animated sequences, with the anti-drift capabilities ensuring character consistency throughout scenes.

Research and Development

AI researchers can utilize Framepack's open-source architecture to experiment with new video generation techniques or develop specialized applications for scientific visualization.

Educational Content

Educators can create dynamic video lessons and demonstrations that maintain visual quality throughout extended explanations, enhancing student engagement.

How to Use Framepack AI: Step-by-Step Guide

Download and install Framepack AI from the official GitHub repository, ensuring your system meets the minimum hardware requirements (NVIDIA GPU with at least 6GB VRAM).

Prepare your input frames or initial video sequence that will serve as the foundation for your AI-generated video content.

Configure Framepack's settings according to your project needs, selecting appropriate attention mechanisms and optimization options for your hardware.

Run the generation process, monitoring the progressive frame creation through Framepack's fixed-length context compression system.

Review and refine the output, using Framepack's anti-drift capabilities to ensure consistent quality throughout the entire video sequence.

Export your final video in your preferred format, ready for sharing or further post-processing with other tools.
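The hardware check in the first step can be sketched as follows. This is a minimal example, not part of Framepack itself: it assumes PyTorch with CUDA support is installed, and it treats "6GB" as 6 × 10^9 bytes because drivers can report slightly less than a card's nominal capacity. Both function names are hypothetical.

```python
MIN_VRAM_BYTES = 6 * 10**9  # "6GB" read as decimal gigabytes (an assumption)


def meets_vram_minimum(total_bytes: int, minimum: int = MIN_VRAM_BYTES) -> bool:
    """True if the reported GPU memory meets the stated 6GB minimum."""
    return total_bytes >= minimum


def detect_vram_bytes():
    """Return total memory of GPU 0 in bytes, or None if PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory


if __name__ == "__main__":
    vram = detect_vram_bytes()
    if vram is None:
        print("No CUDA GPU detected (or PyTorch is not installed).")
    else:
        status = "meets" if meets_vram_minimum(vram) else "is below"
        print(f"GPU 0 has {vram / 10**9:.1f} GB, which {status} the 6GB minimum.")
```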

Is Framepack AI Worth It? FAQ & Reviews

Framepack AI is a specialized neural network structure for AI video generation using 'next frame prediction' technology. It compresses input context information to a fixed length, making computational load independent of video length and significantly reducing resource requirements.

Framepack AI requires an NVIDIA RTX 30XX, 40XX, or 50XX series GPU with at least 6GB of VRAM. It supports FP16 and BF16 data formats and is compatible with both Windows and Linux operating systems.

Framepack AI can generate high-quality videos of 60 to 120 seconds at 30fps, depending on your hardware configuration and the optimization techniques used.

Framepack AI's fixed-length context compression evaluates the importance of each frame and compresses the frames into fixed-length 'notes'. This prevents the context from growing linearly with video duration, which significantly reduces VRAM requirements and computational cost.

Framepack AI is open source. It was developed by ControlNet creator Lvmin Zhang and Stanford professor Maneesh Agrawala, and the project code and models are publicly available on GitHub, with an active community.

Framepack AI can be integrated with platforms like ComfyUI, and the community has developed plugins for easier integration with other tools.