Wan2.2

Open Source MoE Video Generation - Every Shot, Wan Take

What is Wan2.2? Complete Overview

Wan2.2 is billed as the world's first open-source Mixture-of-Experts (MoE) video generation model. Developed by Alibaba Tongyi Lab, it generates cinematic video from text or images at 480P or 720P resolution. It offers advanced motion understanding, stable video synthesis, fewer unrealistic camera movements, and fine-grained control over lighting, color, and composition. Because the model is fully open-source, filmmakers, content creators, and AI researchers can customize and modify it to suit their specific needs.

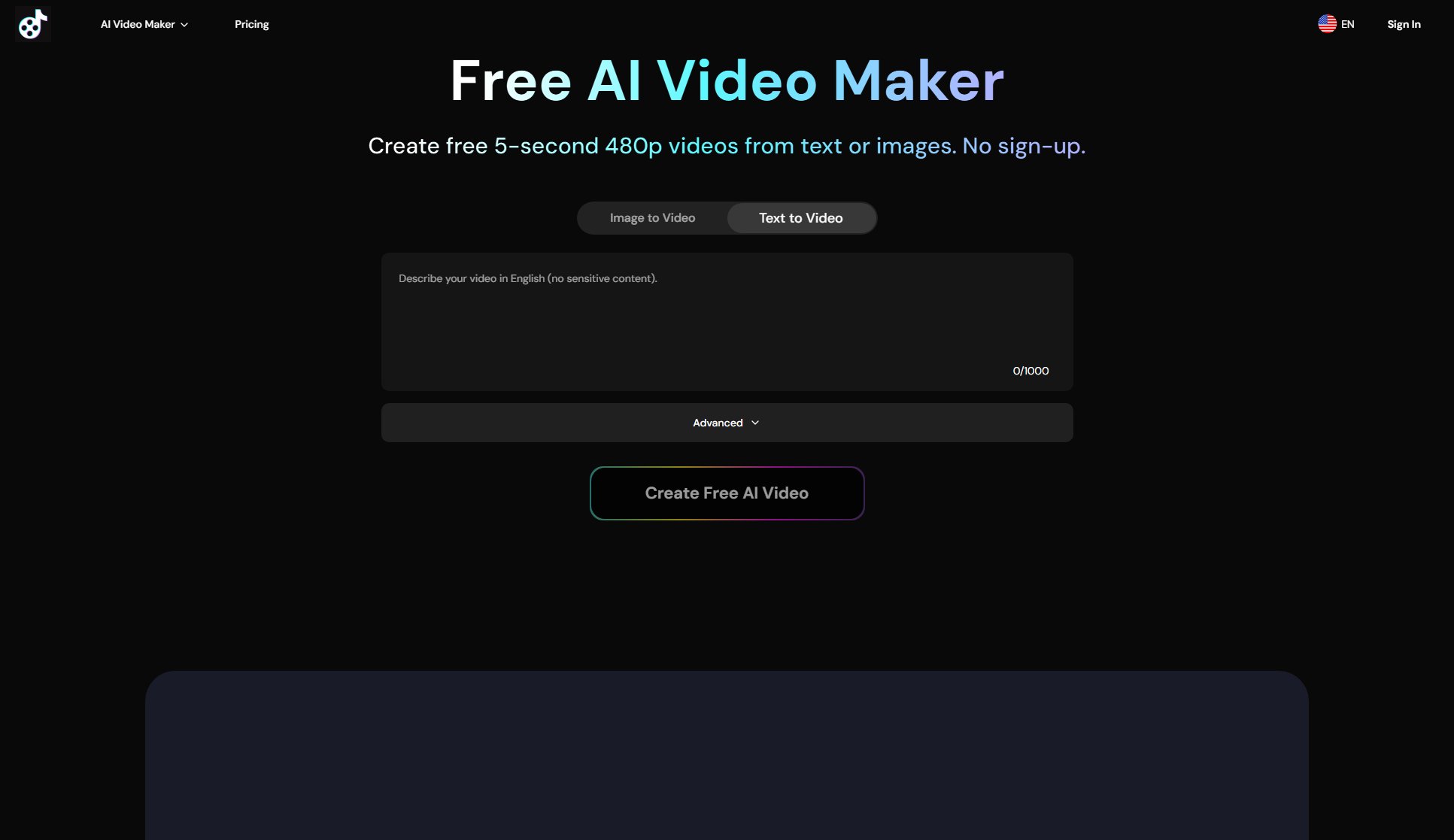

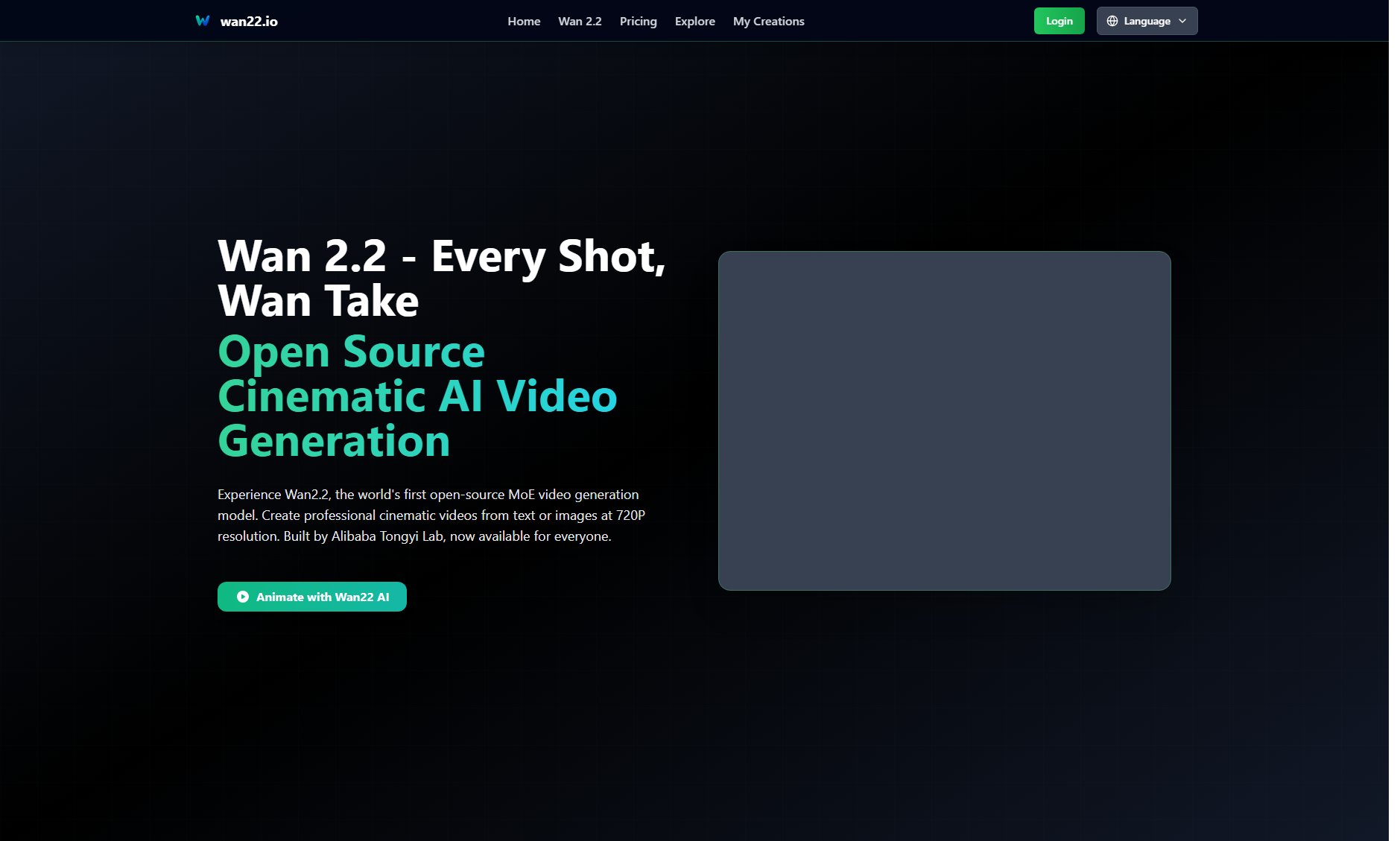

What Can Wan2.2 Do? Key Features

Advanced Motion Understanding

Wan2.2 recreates complex motion, such as hip-hop dancing, street parkour, and figure skating, with smooth and natural-looking movement.

Stable Video Synthesis

The image-to-video model, I2V-A14B, uses the MoE architecture to deliver stable results with realistic physics, natural movement patterns, and minimal artifacts.

Image-to-Video Excellence

Wan2.2 transforms static images into dynamic cinematic sequences with fewer unrealistic camera movements. It supports both 480P and 720P output.

Cinematic Vision Control

Achieve professional cinematic narratives with fine-grained control over lighting, color, and composition. Wan2.2's MoE architecture allows for versatile styles and delicate detail in every frame.

MoE Architecture Innovation

Wan2.2 introduces a Mixture-of-Experts architecture into video diffusion models, enlarging model capacity while maintaining computational efficiency.
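To make the capacity-versus-compute point concrete, here is a minimal sketch of timestep-routed experts in plain Python. It is not Wan2.2's actual code: the `Expert` class, the `boundary` value, the toy `denoise` functions, and the parameter counts are all hypothetical, and the two-expert split is an assumption for illustration. The idea it shows is that each denoising step runs exactly one expert, so total capacity grows with the number of experts while the cost of a single step does not.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical names throughout: this illustrates timestep-routed experts,
# not Wan2.2's real implementation.


@dataclass
class Expert:
    name: str
    param_count: int                   # illustrative size, not a real figure
    denoise: Callable[[float], float]  # toy stand-in for a denoising network


def pick_expert(t: int, boundary: int, high_noise: Expert, low_noise: Expert) -> Expert:
    """Route a timestep to exactly one expert (larger t means a noisier input)."""
    return high_noise if t >= boundary else low_noise


def run_schedule(x: float, timesteps: List[int], boundary: int,
                 high_noise: Expert, low_noise: Expert) -> Tuple[float, List[str]]:
    """Apply one expert per step and record which expert handled each step."""
    used = []
    for t in timesteps:
        expert = pick_expert(t, boundary, high_noise, low_noise)
        x = expert.denoise(x)
        used.append(expert.name)
    return x, used


high = Expert("high_noise", 10, lambda x: x * 0.5)  # early, noisy steps
low = Expert("low_noise", 10, lambda x: x * 0.9)    # later, cleaner steps

final, used = run_schedule(1.0, [900, 700, 500, 300, 100], boundary=600,
                           high_noise=high, low_noise=low)

total_params = high.param_count + low.param_count        # capacity of both experts
active_params = max(high.param_count, low.param_count)   # cost of any single step
```

In this toy setup the model holds 20 units of parameters in total, but no step ever runs more than 10 of them.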

Best Wan2.2 Use Cases & Applications

Independent Filmmaking

Independent filmmakers can use Wan2.2 to create high-quality cinematic videos without expensive equipment. The tool's open-source nature allows for full customization, enabling unique visual styles and narratives.

Content Creation for Social Media

Content creators can leverage Wan2.2 to produce engaging videos for platforms like Instagram, YouTube, and TikTok. The tool's 720P resolution and advanced motion control ensure professional-grade results.

AI Research and Development

Researchers can study and modify Wan2.2's MoE architecture to advance video diffusion models. The open-source codebase provides a valuable resource for innovation in AI video generation.

Pre-visualization for Studios

Video studios can integrate Wan2.2 into their production pipelines for pre-visualization. The tool's cinematic control features help in planning shots and sequences before full-scale production.

How to Use Wan2.2: Step-by-Step Guide

1. Download the Wan2.2 models from GitHub or access ready-to-use deployments on platforms like Hugging Face. Ensure your hardware meets the requirements for optimal performance.

2. Prepare your input, whether it's text prompts or static images, ensuring they are optimized for video generation. For images, consider using Wan2.2's cinematic enhancement pipeline for best results.

3. Input your text or image into the Wan2.2 model. Use the provided tools to fine-tune parameters such as resolution (480P or 720P), frame rate (24fps), and cinematic controls like lighting and composition.

4. Generate the video and review the output. Wan2.2 provides real-time previews, allowing you to make adjustments before finalizing the video.

5. Export the final video in your desired format. Wan2.2 supports various output formats suitable for professional use, social media, or further post-processing.
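The settings named in the steps above (480P or 720P resolution, 24fps) can be checked before starting a long generation run. The sketch below is a hypothetical helper, not part of Wan2.2: the `GenerationSettings` class, its field names, and the 5-second default duration are assumptions made for illustration. It validates the inputs and works out how many frames a clip of a given length needs at 24fps.

```python
from dataclasses import dataclass

# Hypothetical helper: Wan2.2 does not ship this class. It only encodes the
# settings named in the guide (480P/720P resolution, 24 fps).

SUPPORTED_RESOLUTIONS = {"480P", "720P"}
FPS = 24


@dataclass(frozen=True)
class GenerationSettings:
    prompt: str
    resolution: str = "720P"
    duration_seconds: float = 5.0  # illustrative default, not from the source

    def validate(self) -> None:
        """Raise ValueError if any setting falls outside the documented options."""
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if self.resolution not in SUPPORTED_RESOLUTIONS:
            raise ValueError(f"unsupported resolution: {self.resolution}")
        if self.duration_seconds <= 0:
            raise ValueError("duration must be positive")

    def frame_count(self) -> int:
        """Number of frames needed for the requested duration at 24 fps."""
        return round(self.duration_seconds * FPS)


settings = GenerationSettings("a figure skater at dusk", "720P", 5.0)
settings.validate()
print(settings.frame_count())  # 5 seconds * 24 fps = 120 frames
```

Failing fast on an unsupported resolution or an empty prompt is cheaper than discovering the problem after the model has loaded.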

Is Wan2.2 Worth It? FAQ & Reviews

Wan2.2 is the world's first open-source MoE video generation model with full cinematic control. Unlike closed-source alternatives, it gives you complete access to the source code and model weights, and you can run it on your own hardware.

Wan2.2 generates video at up to 720P resolution and 24fps. The T2V-A14B and I2V-A14B models support both 480P and 720P, while the TI2V-5B model focuses on efficient 720P generation.
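The model and resolution pairings listed above can be written as a small lookup table, which makes it easy to see which models are candidates for a given output size. This is only a sketch: the dictionary and the `models_supporting` function are hypothetical names, and the table contains nothing beyond what this page states.

```python
from typing import Dict, List, Set

# Resolution support as described on this page; the structure and function
# name are illustrative, not part of Wan2.2.
MODEL_RESOLUTIONS: Dict[str, Set[str]] = {
    "T2V-A14B": {"480P", "720P"},
    "I2V-A14B": {"480P", "720P"},
    "TI2V-5B": {"720P"},
}


def models_supporting(resolution: str) -> List[str]:
    """Return the model names that list the given resolution, sorted by name."""
    return sorted(
        name for name, supported in MODEL_RESOLUTIONS.items()
        if resolution in supported
    )


print(models_supporting("480P"))  # ['I2V-A14B', 'T2V-A14B']
print(models_supporting("720P"))  # ['I2V-A14B', 'T2V-A14B', 'TI2V-5B']
```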

Wan2.2 can run on consumer hardware. The TI2V-5B model is optimized to run on a single consumer-grade GPU such as the RTX 4090, and it is one of the fastest 720P@24fps models available for personal use.

Wan2.2 introduces a Mixture-of-Experts architecture that splits the denoising process across timesteps, with specialized expert models handling different stages. Because each step runs only the expert assigned to it, model capacity grows while computational efficiency is maintained.

Wan2.2 is free to use: it is fully open-source with no licensing fees for most use cases. Commercial licensing options are available for enterprise solutions requiring additional support and features.