Claude Code Router

Flexible AI model routing for enhanced coding workflows

What is Claude Code Router? Complete Overview

Claude Code Router is a third-party tool built on top of Anthropic's Claude Code that routes coding requests to different AI models. Instead of being locked into a single model, you can switch dynamically between models such as Claude, Gemini, DeepSeek, and local Ollama models. This is most useful when different tasks call for different models, for example background processing, complex reasoning, or long contexts. Routing is configured in a single JSON file, so it can be adapted to a project's needs.

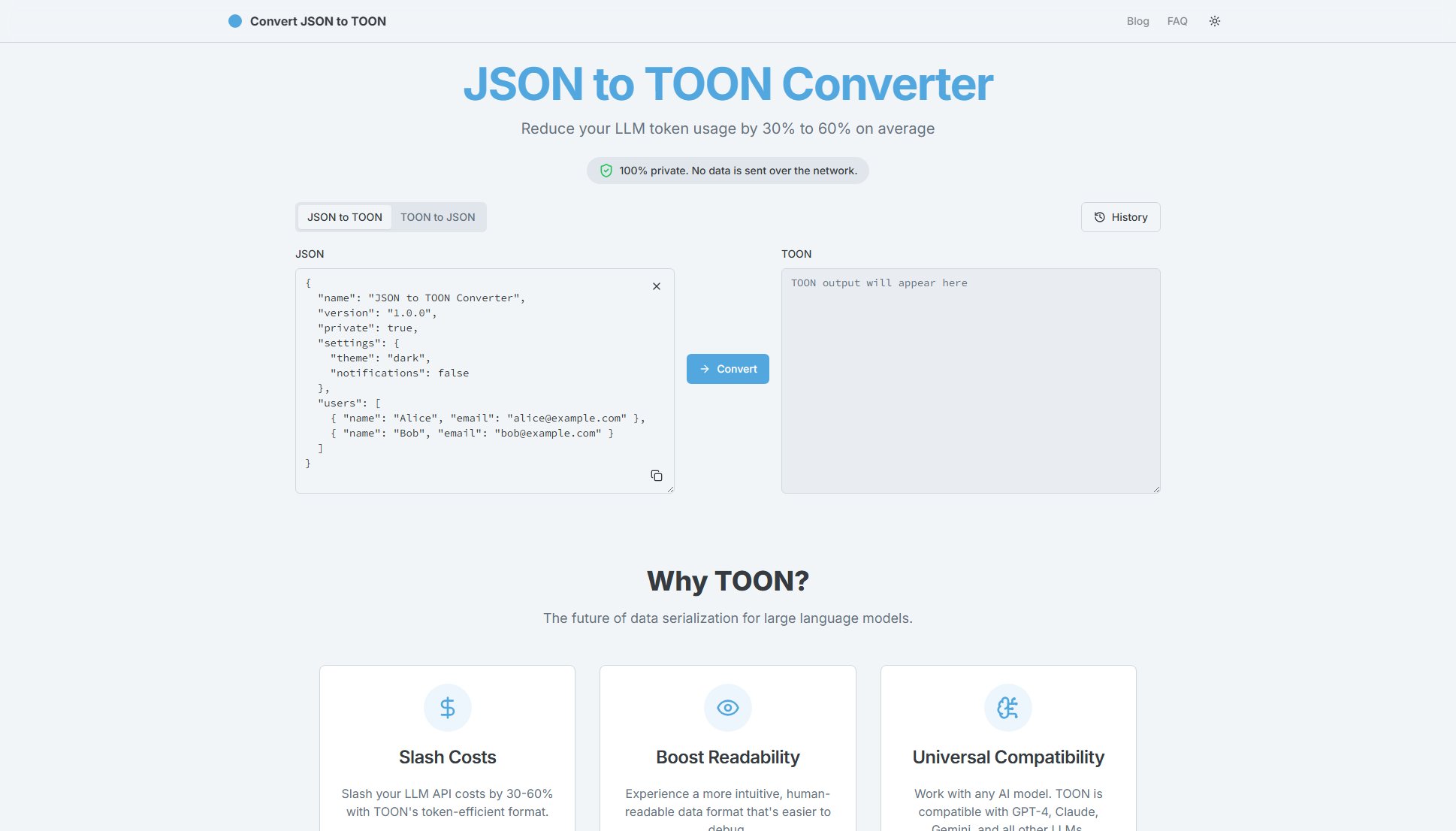

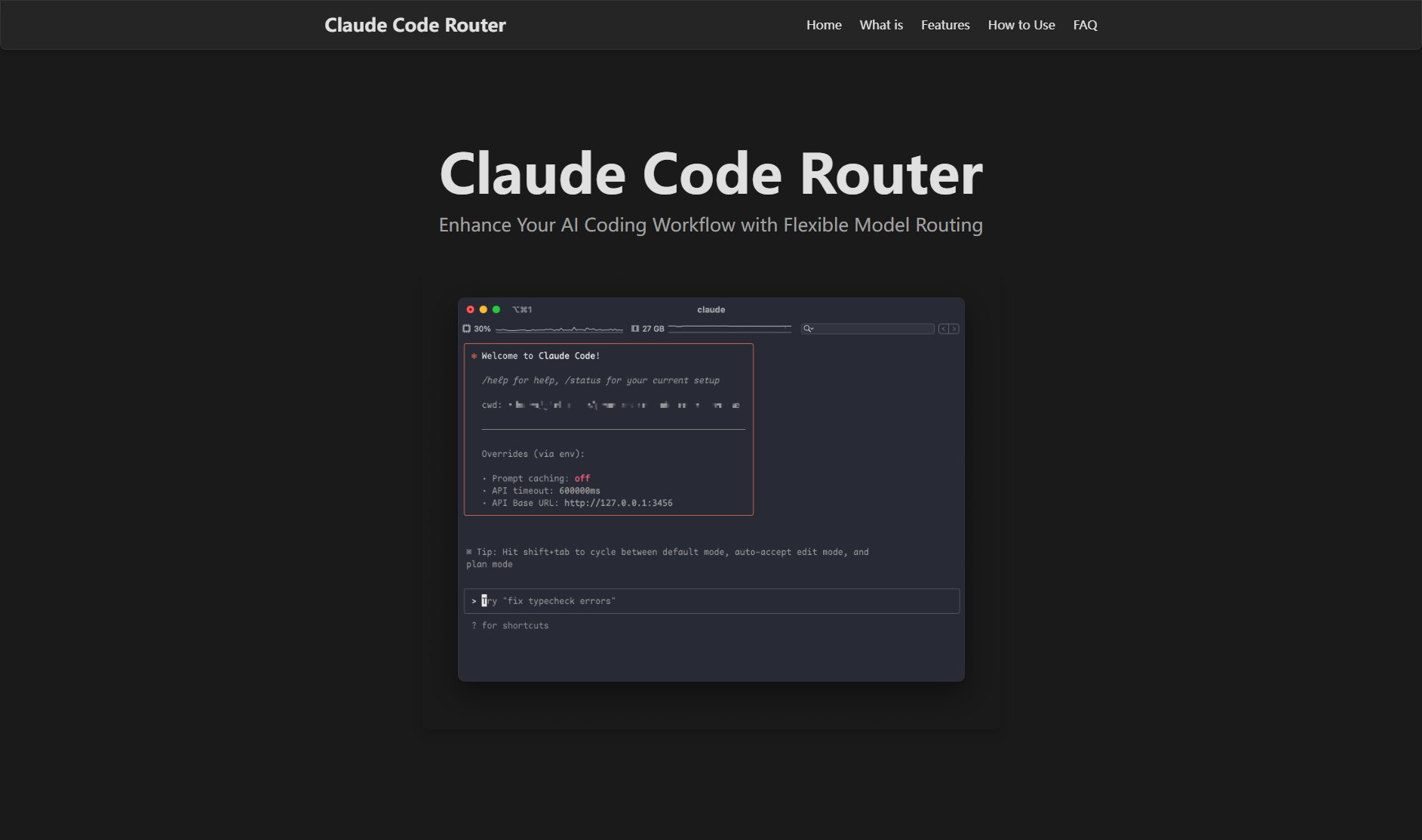

Claude Code Router Interface & Screenshots

Official screenshot of the Claude Code Router interface.

What Can Claude Code Router Do? Key Features

Multi-model Routing

Route coding queries to any supported provider or model, including OpenRouter, DeepSeek, Ollama, Gemini, and Volcengine. Supports dynamic switching using simple commands like `/model provider,model`.
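To make the `/model provider,model` syntax concrete, here is a minimal Python sketch of how such a command could be split into its two parts. It is not the router's own parser: the names `ModelTarget` and `parse_model_command` are hypothetical, and splitting on the first comma only is an assumption made so that model names containing `/` or `:` pass through unchanged.

```python
from typing import NamedTuple


class ModelTarget(NamedTuple):
    """A provider name plus a model name (hypothetical helper type)."""
    provider: str
    model: str


def parse_model_command(line: str) -> ModelTarget:
    """Parse '/model provider,model' into a ModelTarget.

    Illustrative only: the real tool may accept other forms.
    """
    prefix = "/model"
    text = line.strip()
    if not text.startswith(prefix + " "):
        raise ValueError(f"not a /model command: {line!r}")
    # Split on the first comma only, so the model part is kept intact.
    provider, sep, model = text[len(prefix):].strip().partition(",")
    provider, model = provider.strip(), model.strip()
    if not sep or not provider or not model:
        raise ValueError("expected '/model provider,model'")
    return ModelTarget(provider, model)


if __name__ == "__main__":
    print(parse_model_command("/model openrouter,anthropic/claude-3.5-sonnet"))
```

The provider comes first because it selects which configured endpoint and API key to use; the model name is then interpreted by that provider.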

Specialized Model Roles

Designate specific models for different tasks: background processing, high-reasoning tasks (think mode), and long contexts (conversations exceeding 32K tokens). This lets you balance performance and cost for each kind of work.
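The role idea can be sketched as a small decision function. This is a simplified illustration, not the router's actual logic: the role names (`default`, `background`, `think`, `longContext`), the order in which the checks run, the reading of "32K" as 32,000 tokens, and the model identifiers in `ROUTES` are all assumptions made for the example.

```python
from dataclasses import dataclass

# Assumption: "32K" is treated as 32,000 tokens for this sketch.
LONG_CONTEXT_THRESHOLD = 32_000

# Hypothetical role -> "provider,model" table; identifiers are illustrative.
ROUTES = {
    "default": "deepseek,deepseek-chat",
    "background": "ollama,your-local-model",
    "think": "openrouter,anthropic/claude-3.5-sonnet",
    "longContext": "openrouter,google/gemini-2.5-pro",
}


@dataclass(frozen=True)
class Request:
    """The few facts about a request that this sketch routes on."""
    token_count: int
    is_background: bool = False
    wants_thinking: bool = False


def pick_role(req: Request) -> str:
    """Choose a role for the request (assumed priority order)."""
    # Context size is checked first: a model that cannot fit the
    # conversation cannot serve it, whatever the task type.
    if req.token_count > LONG_CONTEXT_THRESHOLD:
        return "longContext"
    if req.is_background:
        return "background"
    if req.wants_thinking:
        return "think"
    return "default"


def pick_model(req: Request, routes: dict = ROUTES) -> str:
    """Map the chosen role to a target, falling back to 'default'."""
    return routes.get(pick_role(req), routes["default"])


if __name__ == "__main__":
    print(pick_model(Request(token_count=50_000)))
    print(pick_model(Request(token_count=2_000, is_background=True)))
```

Falling back to the `default` entry means a partially filled routing table still yields a usable model for every request.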

Extensive Provider Support

Supports multiple AI providers including OpenRouter (for Claude models), DeepSeek, local Ollama models, Google Gemini, and Volcengine. Configuration is flexible through a JSON file.

GitHub Actions Integration

Integrates with GitHub Actions for automated workflows such as code reviews and issue bots, using any configured AI model.

Flexible Configuration

All settings are configurable via a JSON file (`~/.claude-code-router/config.json`) that defines providers, API keys, available models, and routing rules for different use cases.
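As a rough picture of what such a file might contain, the Python sketch below builds a configuration as a dictionary, checks that every routing rule points at a declared provider and model, and prints the result as JSON. The key names (`Providers`, `Router`, `api_base_url`, `api_key`, `models`, and the role names), the endpoint URLs, and the model identifiers are assumptions for illustration; consult the project's README for the exact schema before copying anything into `~/.claude-code-router/config.json`.

```python
import json


def build_config() -> dict:
    """Return an illustrative config; all keys and values are assumed."""
    return {
        "Providers": [
            {
                "name": "openrouter",
                "api_base_url": "https://openrouter.ai/api/v1/chat/completions",
                "api_key": "YOUR_OPENROUTER_KEY",  # placeholder
                "models": [
                    "anthropic/claude-3.5-sonnet",
                    "google/gemini-2.5-pro",
                ],
            },
            {
                "name": "deepseek",
                "api_base_url": "https://api.deepseek.com/chat/completions",
                "api_key": "YOUR_DEEPSEEK_KEY",  # placeholder
                "models": ["deepseek-chat"],
            },
            {
                "name": "ollama",
                "api_base_url": "http://localhost:11434/v1/chat/completions",
                "api_key": "ollama",  # local server; value is a placeholder
                "models": ["your-local-model"],
            },
        ],
        "Router": {
            "default": "deepseek,deepseek-chat",
            "background": "ollama,your-local-model",
            "think": "openrouter,anthropic/claude-3.5-sonnet",
            "longContext": "openrouter,google/gemini-2.5-pro",
        },
    }


def unknown_routes(config: dict) -> list:
    """List Router roles whose target is not a declared provider/model."""
    declared = {
        (provider["name"], model)
        for provider in config["Providers"]
        for model in provider["models"]
    }
    problems = []
    for role, target in config["Router"].items():
        provider, _, model = target.partition(",")
        if (provider, model) not in declared:
            problems.append(role)
    return problems


if __name__ == "__main__":
    cfg = build_config()
    bad = unknown_routes(cfg)
    if bad:
        raise SystemExit("routes with unknown targets: " + ", ".join(bad))
    print(json.dumps(cfg, indent=2))
```

Checking the routing table against the provider list before writing the file catches the most likely mistake, a typo in a `provider,model` pair, before it shows up as a failed request.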

Best Claude Code Router Use Cases & Applications

Multi-stage Code Review

Route initial code reviews to faster, less expensive models for basic checks, then use more sophisticated models like Claude-3.5-Sonnet for complex analysis, optimizing both cost and quality.

Local Development with Ollama

Configure background tasks to use local Ollama models while reserving cloud-based models for primary coding tasks, reducing API costs and improving privacy for certain operations.

Long Context Handling

Automatically route conversations exceeding 32K tokens to a model suited to long contexts (such as Gemini-2.5-Pro), so they do not overflow or degrade on models with smaller context windows.

Automated GitHub Workflows

Integrate with GitHub Actions to create automated code review bots that use different models based on repository size, complexity, or other factors specified in your routing configuration.

How to Use Claude Code Router: Step-by-Step Guide

Install prerequisites: Ensure Node.js and npm/yarn are installed on your system.

Install Claude Code CLI: Run `npm install -g @anthropic-ai/claude-code` to install Anthropic's base tool.

Install Claude Code Router: Run `npm install -g @musistudio/claude-code-router` to add the routing functionality.

Configure routing: Create or edit `~/.claude-code-router/config.json` to define your providers, API keys, and routing rules.

Start coding: Run `ccr code` to begin using Claude Code with your configured routing. Use `/model provider,model` commands to switch models dynamically.
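Before running `ccr code`, it can help to confirm that the tools from the steps above are actually on your `PATH`. The following Python sketch does that with the standard library's `shutil.which`. The function name `missing_tools` is hypothetical, and the executable name `claude` for the Claude Code CLI is an assumption; `node`, `npm`, and `ccr` are taken from the steps above.

```python
import shutil
from typing import Callable, Iterable, List, Optional

# "claude" as the Claude Code executable name is an assumption.
REQUIRED_TOOLS = ("node", "npm", "claude", "ccr")


def missing_tools(
    tools: Iterable[str] = REQUIRED_TOOLS,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return the tools that cannot be found on PATH, in input order."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing from PATH: " + ", ".join(missing))
    else:
        print("All tools found; try running: ccr code")
```

Passing the lookup function as a parameter keeps the check easy to test without touching the real environment.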

Is Claude Code Router Worth It? FAQ & Reviews

Claude Code Router enhances Claude Code by allowing routing of coding requests to various AI models, providing greater flexibility and customization in AI coding workflows.

While Claude Code is Anthropic's command-line tool for agentic coding, Claude Code Router adds model routing capabilities that the base tool doesn't natively support.

It supports models from OpenRouter, DeepSeek, Ollama (local models), Google Gemini, and Volcengine, including Claude-3.5-Sonnet, Gemini-2.5-Pro, and DeepSeek-Chat.

Configuration is done through a JSON file (`~/.claude-code-router/config.json`) that defines providers, API keys, available models, and routing rules.

You can switch models during a coding session with commands like `/model openrouter,anthropic/claude-3.5-sonnet`.

Routing specific tasks to more cost-effective models can reduce costs compared with using a single expensive model for everything.