Echo

User-pays AI SDK for seamless LLM integration

What is Echo? Complete Overview

Echo is an AI SDK that simplifies integrating Large Language Models (LLMs) into applications while having end users pay for their own usage, so developers do not have to front the bill for AI services. It provides a unified gateway to frontier models from OpenAI, Anthropic, and Google (Gemini), making it easy to switch between providers. The SDK is aimed at developers building Next.js and React applications and offers drop-in components for authentication, balance monitoring, and usage tracking, so they can build without managing API keys, metering, or Stripe integrations. Developers can also set a markup on token usage, earning revenue on every token their users consume.

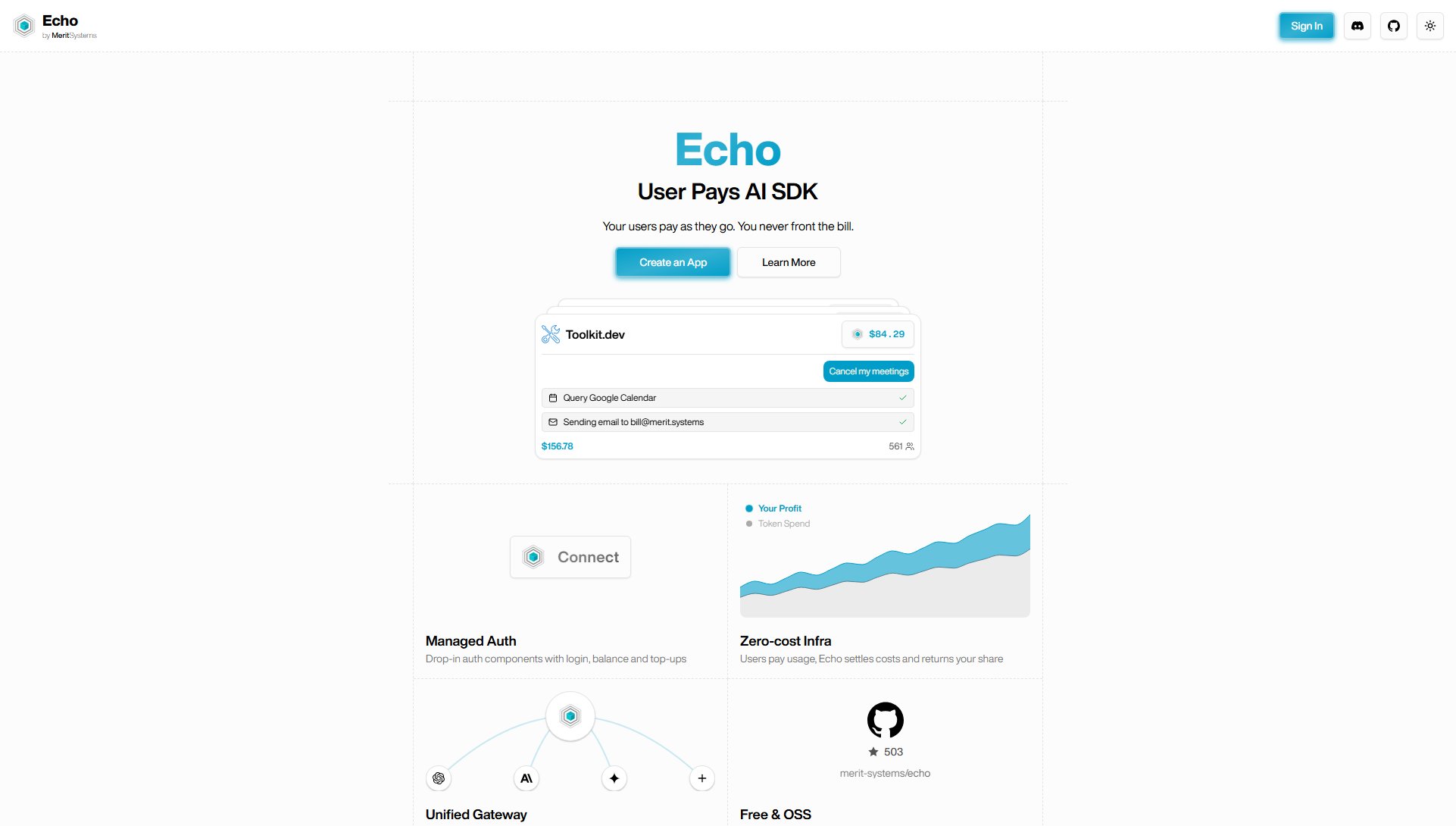

Echo Interface & Screenshots

Official screenshot of the Echo interface

What Can Echo Do? Key Features

User Pays Model

Echo's unique user-pays model ensures that your users cover their own LLM usage costs. This means you never have to front the bill for AI services, making it financially sustainable to offer AI-powered features in your applications. The system automatically handles balance tracking and top-ups, providing a seamless experience for both developers and end-users.

Unified Gateway

Echo serves as a single gateway to frontier AI models from multiple providers, including OpenAI, Anthropic, and Google (Gemini). This unified approach simplifies integration and lets you switch between providers without rewriting your integration code. The gateway handles the complexities of API communication, leaving you more time for your application's core functionality.
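
As a rough illustration of the gateway idea, the TypeScript sketch below routes a request to a provider based on a prefix in the model identifier. Everything in it is hypothetical: the `Gateway` class, the `provider/model` identifier format, and the stub clients are assumptions made for illustration and are not Echo's actual API.

```typescript
// Hypothetical sketch of a unified gateway; none of these names come from the Echo SDK.
const PROVIDERS = ["openai", "anthropic", "google"] as const;
type Provider = (typeof PROVIDERS)[number];

interface ModelClient {
  complete(prompt: string): string;
}

// Type guard so an arbitrary string can be narrowed to a known provider.
function isProvider(value: string): value is Provider {
  return (PROVIDERS as readonly string[]).indexOf(value) !== -1;
}

// Stub client that tags its output with the provider name instead of calling a real API.
function stubClient(provider: Provider): ModelClient {
  return { complete: (prompt: string) => `[${provider}] ${prompt}` };
}

class Gateway {
  private readonly clients = new Map<Provider, ModelClient>();

  register(provider: Provider, client: ModelClient): void {
    this.clients.set(provider, client);
  }

  // Assumed identifier format: "<provider>/<model-name>".
  complete(modelId: string, prompt: string): string {
    const slash = modelId.indexOf("/");
    const provider = slash === -1 ? modelId : modelId.slice(0, slash);
    if (!isProvider(provider)) {
      throw new Error(`unknown provider in model id "${modelId}"`);
    }
    const client = this.clients.get(provider);
    if (client === undefined) {
      throw new Error(`no client registered for "${provider}"`);
    }
    return client.complete(prompt);
  }
}

// One gateway instance with a stub client per provider.
const gateway = new Gateway();
for (const provider of PROVIDERS) {
  gateway.register(provider, stubClient(provider));
}
```

Under this sketch, switching providers is a one-string change at the call site: `gateway.complete("openai/example-model", prompt)` becomes `gateway.complete("anthropic/example-model", prompt)`.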

Managed Auth Components

Echo provides ready-to-use authentication components that handle login, balance monitoring, and top-ups. These drop-in components significantly reduce development time and ensure a consistent user experience across applications. The auth system is designed to work seamlessly with both Next.js and React applications.

Revenue Generation

With Echo, you can set a markup on token usage, turning every token your users consume into instant revenue. The platform provides detailed analytics on profit, token consumption, and transactions, giving you clear visibility into your earnings. This model creates a sustainable way to monetize AI features in your applications.
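
To make the markup arithmetic concrete, here is a minimal TypeScript sketch. It assumes the markup is expressed as a percentage on top of the base token cost; the field names, the micro-dollar unit, and the prices are invented for illustration and do not reflect Echo's real rates or configuration format.

```typescript
// Hypothetical pricing sketch; field names and numbers are illustrative only.
interface Pricing {
  baseMicroUsdPerToken: number; // assumed provider price, in millionths of a dollar per token
  markupPercent: number;        // developer-chosen markup over the base cost
}

interface UsageCharge {
  baseCostMicroUsd: number; // what the underlying model usage costs
  profitMicroUsd: number;   // the developer's share from the markup
  userPaysMicroUsd: number; // total deducted from the user's balance
}

function priceUsage(tokens: number, pricing: Pricing): UsageCharge {
  if (!Number.isInteger(tokens) || tokens < 0) {
    throw new Error("tokens must be a non-negative integer");
  }
  const baseCostMicroUsd = tokens * pricing.baseMicroUsdPerToken;
  // Round to whole micro-dollars so the amounts stay integers.
  const profitMicroUsd = Math.round((baseCostMicroUsd * pricing.markupPercent) / 100);
  return {
    baseCostMicroUsd,
    profitMicroUsd,
    userPaysMicroUsd: baseCostMicroUsd + profitMicroUsd,
  };
}

// Example: 2,000 tokens at 5 micro-dollars each with a 20% markup.
const charge = priceUsage(2000, { baseMicroUsdPerToken: 5, markupPercent: 20 });
```

With these made-up numbers, the base cost is 10,000 micro-dollars, the 20% markup adds 2,000, and the user is charged 12,000 micro-dollars ($0.012).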

Zero-cost Infrastructure

Echo eliminates the need for you to manage or pay for AI infrastructure. Users pay for their own usage, and Echo handles all the backend costs, including model access and API management. This approach removes financial risk and allows you to scale your AI features without worrying about infrastructure costs.

Cross-app Balance

Echo provides a universal balance system that works across all Echo-powered applications. Users can maintain a single balance that's shared between different apps, simplifying the payment process. This feature enhances user convenience and encourages engagement across multiple Echo-integrated platforms.
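
One way to picture a cross-app balance is a single ledger keyed by user, with each deduction tagged by the app that triggered it. The TypeScript sketch below is an illustration under that assumption; `SharedLedger` and its methods are hypothetical and are not Echo's actual data model.

```typescript
// Hypothetical sketch of a shared balance; not Echo's actual data model.
interface Spend {
  userId: string;
  appId: string;
  amountCents: number;
}

class SharedLedger {
  private readonly balances = new Map<string, number>();
  private readonly spends: Spend[] = [];

  balanceOf(userId: string): number {
    return this.balances.get(userId) ?? 0;
  }

  topUp(userId: string, amountCents: number): void {
    this.balances.set(userId, this.balanceOf(userId) + amountCents);
  }

  // Deducts from the user's single balance, whichever app initiated the spend.
  // Returns false (and changes nothing) when the balance is too low.
  spend(userId: string, appId: string, amountCents: number): boolean {
    const current = this.balanceOf(userId);
    if (amountCents > current) return false;
    this.balances.set(userId, current - amountCents);
    this.spends.push({ userId, appId, amountCents });
    return true;
  }

  // Total spent inside one app, across all users.
  spentIn(appId: string): number {
    return this.spends
      .filter((s) => s.appId === appId)
      .reduce((sum, s) => sum + s.amountCents, 0);
  }
}
```

In this model a user tops up once, and two different apps draw from the same balance while each app's usage can still be reported separately.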

Best Echo Use Cases & Applications

AI-Powered Chat Applications

Developers can build chat applications that leverage various LLMs without worrying about API costs. Users pay for their own message generations, making it financially viable to offer high-quality AI conversations. The unified gateway allows for easy switching between different models based on performance or cost considerations.

Content Generation Tools

Content creation platforms can integrate AI-assisted writing features where users pay per generation. This model works particularly well for tools that generate marketing copy, blog posts, or social media content. The revenue markup feature allows platform owners to earn from every piece of generated content.

Educational Applications

Learning platforms can offer AI tutors or homework helpers where students or institutions cover the usage costs. The balance system allows for institutional accounts with shared budgets across multiple users or applications, making it ideal for educational environments.

Developer Tools

Coding assistants and developer productivity tools can leverage Echo to provide AI-powered features without burdening the tool creators with infrastructure costs. The SDK's easy integration makes it perfect for adding AI capabilities to existing developer tools with minimal changes.

How to Use Echo: Step-by-Step Guide

Install the Echo SDK in your Next.js or React application using the provided npm packages. For Next.js, use '@merit-systems/echo-next-sdk', and for React, use '@merit-systems/echo-react-sdk'. The installation process is straightforward and well-documented on the Echo GitHub repository.

Configure the Echo provider in your application by adding your unique app ID. This typically involves wrapping your application or specific components with the EchoProvider component in React or setting up the API handlers in Next.js. The configuration requires minimal code changes from standard AI SDK implementations.

Integrate the pre-built auth components into your application's UI. These components handle user authentication, balance display, and top-up functionality. The components are designed to be customizable while providing out-of-the-box functionality that works seamlessly with Echo's backend.

Implement AI features using Echo's streamlined API calls. The SDK provides simple methods like 'streamText' that abstract away the complexity of dealing with different AI providers. You can specify the model and prompt, and Echo handles the rest, including cost calculation and balance deduction.
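
The idea of metering a streamed response against a user's balance can be sketched in plain TypeScript. This example does not call Echo or `streamText`: the `Wallet` type, the `fakeModelStream` generator, and the flat per-chunk price are all assumptions, and a synchronous generator stands in for what would be an asynchronous network stream in a real application.

```typescript
// Hypothetical metering sketch; it does not use the Echo SDK or any real model API.
interface Wallet {
  balanceMicroUsd: number;
}

// Stand-in for a model response: yields the reply one word at a time.
function* fakeModelStream(prompt: string): Generator<string> {
  for (const word of `echo: ${prompt}`.split(" ")) {
    yield word;
  }
}

// Wraps a stream of chunks and deducts a flat price per chunk before yielding it.
// Stops with an error as soon as the wallet cannot cover the next chunk.
function* meteredStream(
  wallet: Wallet,
  chunks: Iterable<string>,
  microUsdPerChunk: number,
): Generator<string> {
  for (const chunk of chunks) {
    if (wallet.balanceMicroUsd < microUsdPerChunk) {
      throw new Error("insufficient balance");
    }
    wallet.balanceMicroUsd -= microUsdPerChunk;
    yield chunk;
  }
}

// Usage: the caller only iterates; the balance is updated as chunks arrive.
const demoWallet: Wallet = { balanceMicroUsd: 10 };
const reply = Array.from(meteredStream(demoWallet, fakeModelStream("hello world"), 2));
```

Charging per chunk rather than once at the end means a user who runs out of balance mid-response is cut off at that point instead of going negative. Whether Echo meters this way is not stated in the source.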

Monitor usage and revenue through Echo's built-in analytics. The platform provides real-time data on token consumption, user balances, and your earnings from markups. This information is accessible both through the SDK and potentially through a dashboard interface.
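
The kind of rollup such analytics imply can be sketched as a simple aggregation over usage records. The record shape and the `summarize` function below are hypothetical; the source does not describe the format in which Echo exposes this data.

```typescript
// Hypothetical analytics sketch; the record shape is an assumption, not Echo's format.
interface UsageRecord {
  userId: string;
  tokens: number;
  baseCostMicroUsd: number; // underlying model cost
  chargedMicroUsd: number;  // amount deducted from the user, including markup
}

interface UsageSummary {
  transactions: number;
  totalTokens: number;
  revenueMicroUsd: number; // sum of what users were charged
  profitMicroUsd: number;  // revenue minus base cost
}

function summarize(records: readonly UsageRecord[]): UsageSummary {
  const summary: UsageSummary = {
    transactions: 0,
    totalTokens: 0,
    revenueMicroUsd: 0,
    profitMicroUsd: 0,
  };
  for (const record of records) {
    summary.transactions += 1;
    summary.totalTokens += record.tokens;
    summary.revenueMicroUsd += record.chargedMicroUsd;
    summary.profitMicroUsd += record.chargedMicroUsd - record.baseCostMicroUsd;
  }
  return summary;
}

// Two made-up records, each with a 20% markup over base cost.
const report = summarize([
  { userId: "user-1", tokens: 1000, baseCostMicroUsd: 5000, chargedMicroUsd: 6000 },
  { userId: "user-2", tokens: 500, baseCostMicroUsd: 2500, chargedMicroUsd: 3000 },
]);
```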

Deploy your application with confidence, knowing that users will pay for their own AI usage. Echo handles all the billing and infrastructure aspects, allowing you to focus on improving your application's features and user experience.

Echo Pros and Cons: Honest Review

Pros

Considerations

Is Echo Worth It? FAQ & Reviews

How does Echo's user-pays model work?

Your application's users cover their own LLM usage costs. They maintain a balance within your app (or across multiple Echo-powered apps) that is deducted with each AI interaction. You never pay for their usage, which removes that financial risk.

Which AI models does Echo support?

Echo currently provides a unified gateway to multiple frontier models, including OpenAI's models, Anthropic's Claude, and Google's Gemini. The SDK abstracts away the differences between these providers, so you can switch between them in your application.

How do developers earn revenue with Echo?

You can set a markup on the base cost of token usage. This markup becomes your revenue whenever users consume tokens in your application. Echo provides detailed analytics to track your earnings from these markups.

Is Echo free to use?

Yes. Echo offers a free, open-source version with no mandatory fees. An optional 2.5% fee on your profits applies only if you choose to use certain premium features. The core functionality is available at no cost.

How long does integration take?

Integration is designed to be fast. For Next.js applications, you can often be production-ready by changing just 5 lines of code from the Vercel AI SDK. React integration is similarly straightforward, with pre-built components available.

Can users share one balance across multiple apps?

Yes. One of Echo's key features is the universal balance system: a user's Echo balance can be used in any Echo-powered application, which gives users a consistent experience and encourages engagement with multiple services in the ecosystem.