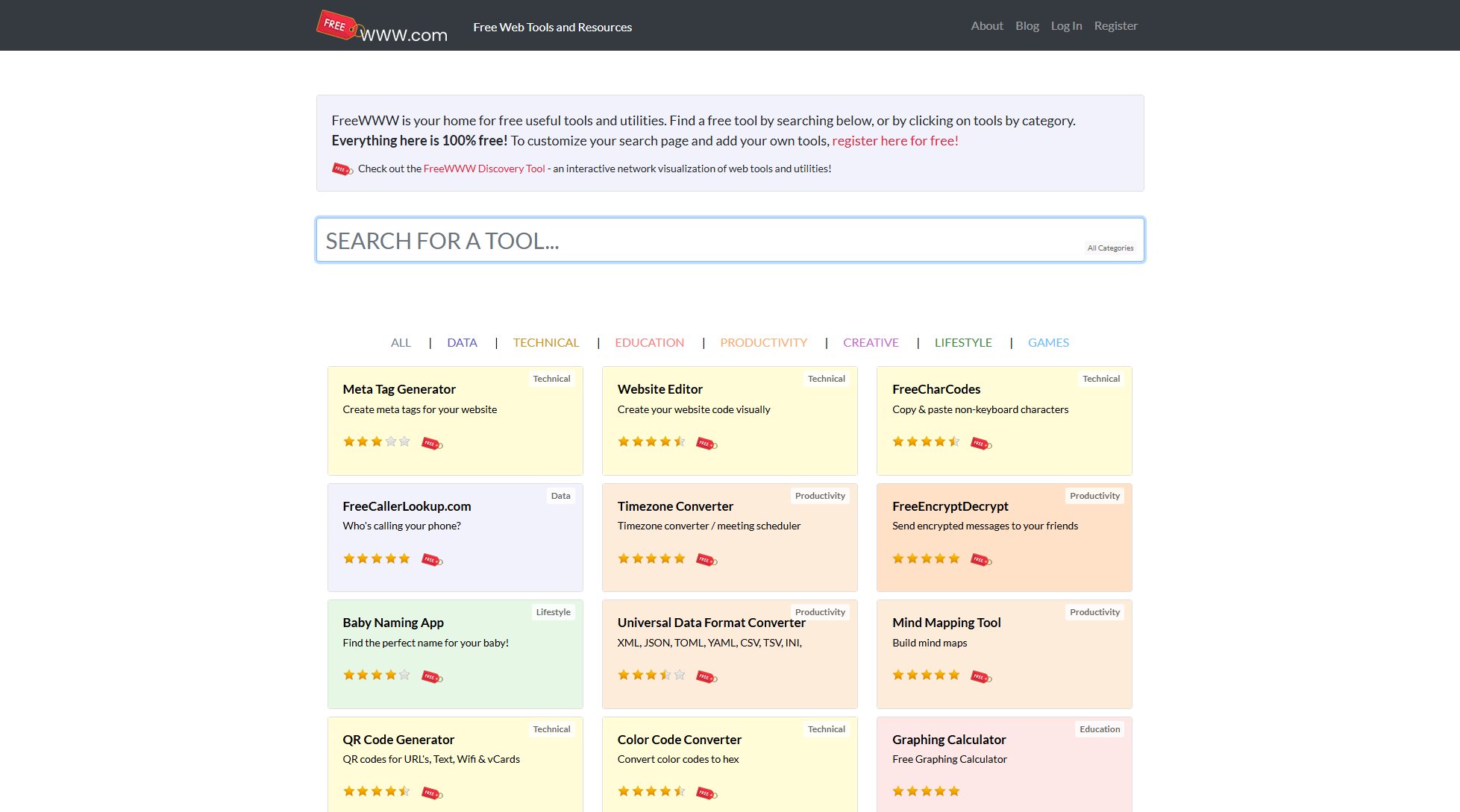

Ally

The most accessible AI assistant for daily independence

What is Ally? Complete Overview

Ally is an AI assistant designed with accessibility at its core, helping users achieve daily independence through intuitive, voice-activated interactions. It serves as a helpful companion for people who are blind or have low vision, older adults, those with cognitive needs, and anyone seeking a more accessible way to stay connected. Ally understands natural language queries, offers personalized responses, and integrates with various tools like weather APIs, OCR, and visual language models to provide contextual assistance. Available across multiple platforms including iOS, Android, web, and smart glasses, Ally ensures seamless accessibility wherever users go.

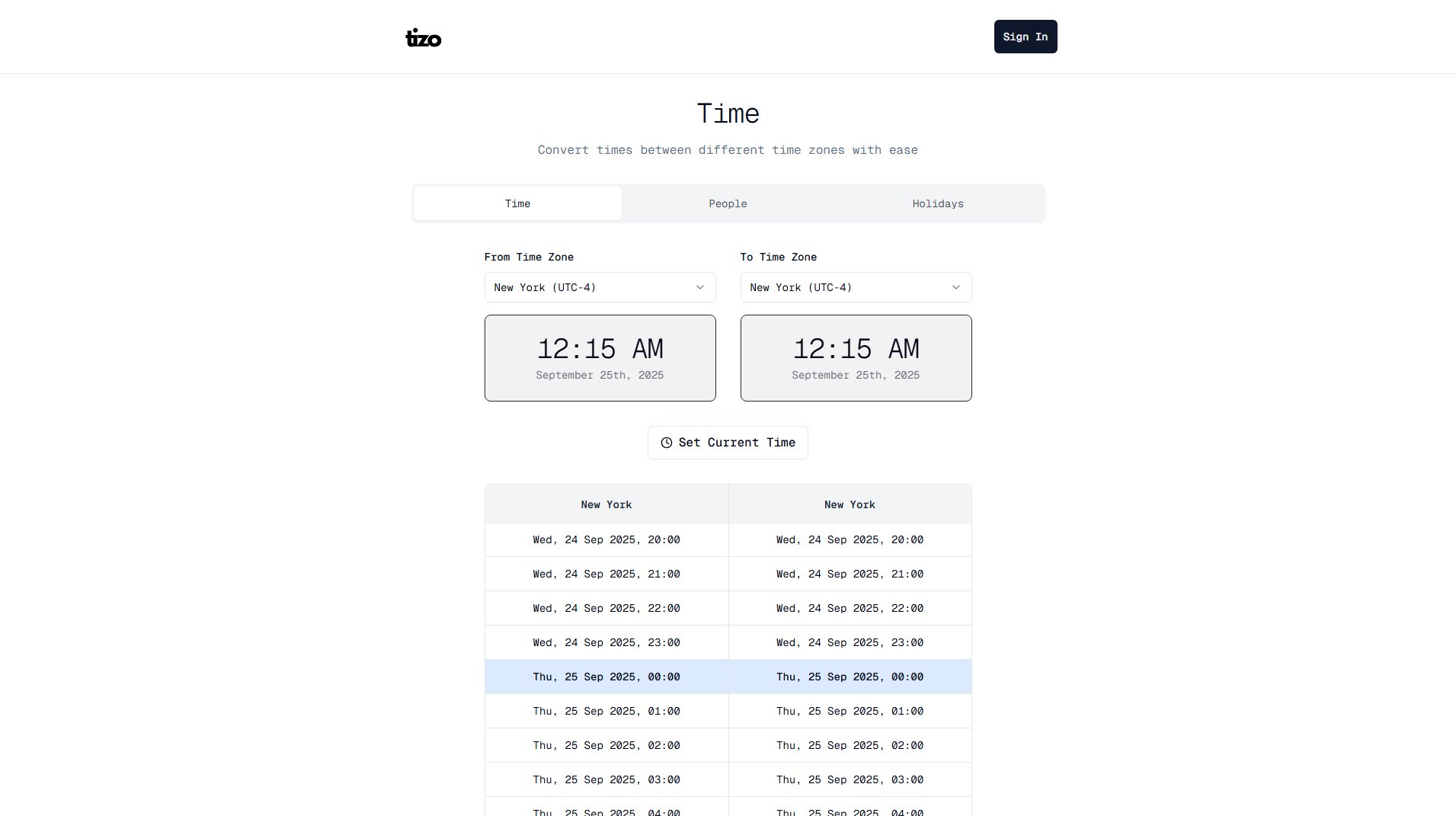

Ally Interface & Screenshots

Official screenshot of the Ally interface

What Can Ally Do? Key Features

Intent Recognition

Ally uses a custom reasoning model to understand user intent, analyzing the structure and context of questions to determine if the user seeks information, visual analysis, or an action. This ensures accurate responses tailored to the user's needs.
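The three intent categories above can be illustrated with a toy classifier. This is only a rule-based stand-in for illustration, not Ally's reasoning model, whose internals are not described here; the `Intent` enum, the cue phrases, and `classify_intent` are all hypothetical names.

```python
from enum import Enum


class Intent(Enum):
    INFORMATION = "information"
    VISUAL_ANALYSIS = "visual_analysis"
    ACTION = "action"


# Hypothetical cue phrases; a real system would use a trained model instead.
VISUAL_CUES = ("what's this", "what is this", "describe", "read this", "look at")
ACTION_CUES = ("remind me", "schedule", "set a")


def classify_intent(query: str) -> Intent:
    """Map a free-form query to one of the three intent categories."""
    # Normalize case and curly apostrophes so "What’s" matches "what's".
    text = query.lower().replace("’", "'")
    if any(cue in text for cue in VISUAL_CUES):
        return Intent.VISUAL_ANALYSIS
    if any(cue in text for cue in ACTION_CUES):
        return Intent.ACTION
    # Anything without a visual or action cue is treated as a question.
    return Intent.INFORMATION


if __name__ == "__main__":
    for q in ("Do I need an umbrella today?", "What’s this object?"):
        print(q, "->", classify_intent(q).value)
```

Substring matching like this is brittle; it is shown only to make the information / visual analysis / action split concrete.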

Multi-Tool Integration

Ally leverages a suite of tools including language models, camera-based visual recognition, OCR for text extraction, weather APIs, and calendar integration. This allows it to handle diverse queries, from identifying objects to reading documents or checking the weather.
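One way to picture this kind of multi-tool setup is a small dispatcher that routes each query to a handler. The sketch below is an assumption about the general pattern, not Ally's architecture: the handler functions are stubs, and the names `TOOLS`, `pick_tool`, and `handle` are hypothetical.

```python
import re
from typing import Callable, Dict


# Stub handlers: real ones would call a weather API, an OCR engine, and so on.
def check_weather(query: str) -> str:
    return "weather: (stub) no forecast available"


def read_text(query: str) -> str:
    return "ocr: (stub) no camera frame available"


def describe_scene(query: str) -> str:
    return "vision: (stub) no camera frame available"


def ask_language_model(query: str) -> str:
    return "language_model: (stub) no model connected"


TOOLS: Dict[str, Callable[[str], str]] = {
    "weather": check_weather,
    "ocr": read_text,
    "vision": describe_scene,
    "language_model": ask_language_model,
}


def pick_tool(query: str) -> str:
    """Choose a tool name from whole-word keywords in the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    if words & {"weather", "umbrella", "forecast"}:
        return "weather"
    if words & {"read"}:
        return "ocr"
    if words & {"describe", "identify"}:
        return "vision"
    return "language_model"  # default when no keyword matches


def handle(query: str) -> str:
    """Route the query to the selected tool and return its reply."""
    return TOOLS[pick_tool(query)](query)
```

Keeping the registry as a plain dictionary means a new tool, such as calendar integration, can be added without touching `handle`.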

Personalization

Users can customize Ally's voice, personality, and response style (e.g., professional, humorous). Ally also learns preferences like dietary restrictions or professional details to provide tailored recommendations, such as menu suggestions or study plans.

Cross-Platform Accessibility

Ally works on smartphones, web browsers, and smart glasses (like Solos and Envision Glasses), offering a consistent experience. It's optimized for screen readers and voice input, ensuring inclusivity for all users.

Real-World Assistance

From guiding users through cooking times to describing surroundings or helping with skincare routines, Ally excels in practical scenarios. Examples include reading menus, identifying objects, or breaking down tasks like exam studying into manageable steps.

Best Ally Use Cases & Applications

Navigating Daily Tasks

A blind user asks Ally to identify ingredients in their kitchen or describe a new coffee machine’s controls, enabling independent meal preparation.

Education Support

A student requests help planning a genetics exam study schedule. Ally breaks the material into timed segments and provides concise explanations.
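Breaking material into timed segments can be sketched as a simple scheduling loop. The function name, the 25-minute study blocks, the 5-minute breaks, and the topic names are all illustrative assumptions, not values taken from Ally.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Segment = Tuple[str, datetime, datetime]


def build_study_plan(
    topics: List[str],
    start: datetime,
    minutes_per_topic: int = 25,
    break_minutes: int = 5,
) -> List[Segment]:
    """Return (topic, start, end) segments with a break after each one."""
    plan: List[Segment] = []
    current = start
    for topic in topics:
        end = current + timedelta(minutes=minutes_per_topic)
        plan.append((topic, current, end))
        # The next segment begins once the break is over.
        current = end + timedelta(minutes=break_minutes)
    return plan


if __name__ == "__main__":
    topics = ["Mendelian inheritance", "DNA replication", "Gene expression"]
    for topic, begin, end in build_study_plan(topics, datetime(2025, 1, 6, 9, 0)):
        print(f"{begin:%H:%M}-{end:%H:%M}  {topic}")
```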

Social Inclusion

At a restaurant, a low-vision user points their phone at the menu. Ally reads options aloud and recommends dishes based on their dietary preferences.
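Once the menu text has been read, recommending dishes against stored dietary preferences amounts to a filter. The sketch below assumes each dish already carries descriptive tags; the `Dish` class, the tag names, and `recommend` are hypothetical and say nothing about how Ally actually stores preferences.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Set


@dataclass
class Dish:
    name: str
    tags: Set[str] = field(default_factory=set)  # e.g. {"vegetarian"}


def recommend(
    menu: List[Dish],
    required: FrozenSet[str] = frozenset(),
    avoided: FrozenSet[str] = frozenset(),
) -> List[str]:
    """Keep dishes that carry every required tag and none of the avoided ones."""
    return [
        dish.name
        for dish in menu
        if required <= dish.tags and not (avoided & dish.tags)
    ]


if __name__ == "__main__":
    menu = [
        Dish("Mushroom risotto", {"vegetarian"}),
        Dish("Pad thai", {"vegetarian", "contains_nuts"}),
        Dish("Grilled salmon"),
    ]
    picks = recommend(
        menu,
        required=frozenset({"vegetarian"}),
        avoided=frozenset({"contains_nuts"}),
    )
    print(picks)
```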

How to Use Ally: Step-by-Step Guide

Download the Ally app on your iOS or Android device, access the web version, or use compatible smart glasses. No complex setup is needed; just launch the app.

Personalize Ally by setting your name, preferences (e.g., dietary, language), and choosing a voice/personality (e.g., 'English butler' or 'funny sidekick').

Start a conversation by voice or text. Ask questions naturally, like 'Do I need an umbrella today?' or 'What’s this object?' Ally will analyze the intent and respond.

For visual queries, point your device’s camera at objects, text, or scenes. Ally uses OCR or visual models to describe or read aloud what it sees.

Use Ally’s proactive features, like calendar reminders or weather alerts, or explore advanced tools like multilingual translation and trivia games.

Is Ally Worth It? FAQ & Reviews

Ally is designed with and for the blind and low-vision community. It works with screen readers and voice commands.

Ally complies with GDPR and other privacy laws. Data is encrypted and never shared without consent. See the Privacy Policy for details.

Ally for Enterprise offers tailored solutions for organizations. Contact [email protected] for inquiries.