VibeVoice AI

Open-source multi-speaker TTS for podcasts & long-form content

What is VibeVoice AI? Complete Overview

VibeVoice AI is Microsoft's open-source text-to-speech framework for long-form, multi-speaker audio generation. It can produce up to 90 minutes of natural-sounding dialogue among up to 4 speakers in English or Chinese. The tool is particularly valuable for content creators, educators, and researchers who need to prototype podcast scripts, audiobook narrations, or educational dialogues without recording sessions. Its key innovation is a next-token diffusion approach that operates on highly compressed speech tokens (7.5 Hz), which keeps generation efficient while maintaining quality. VibeVoice is positioned as stronger in conversational flow and speaker consistency than many commercial TTS services, though it requires substantial GPU resources.

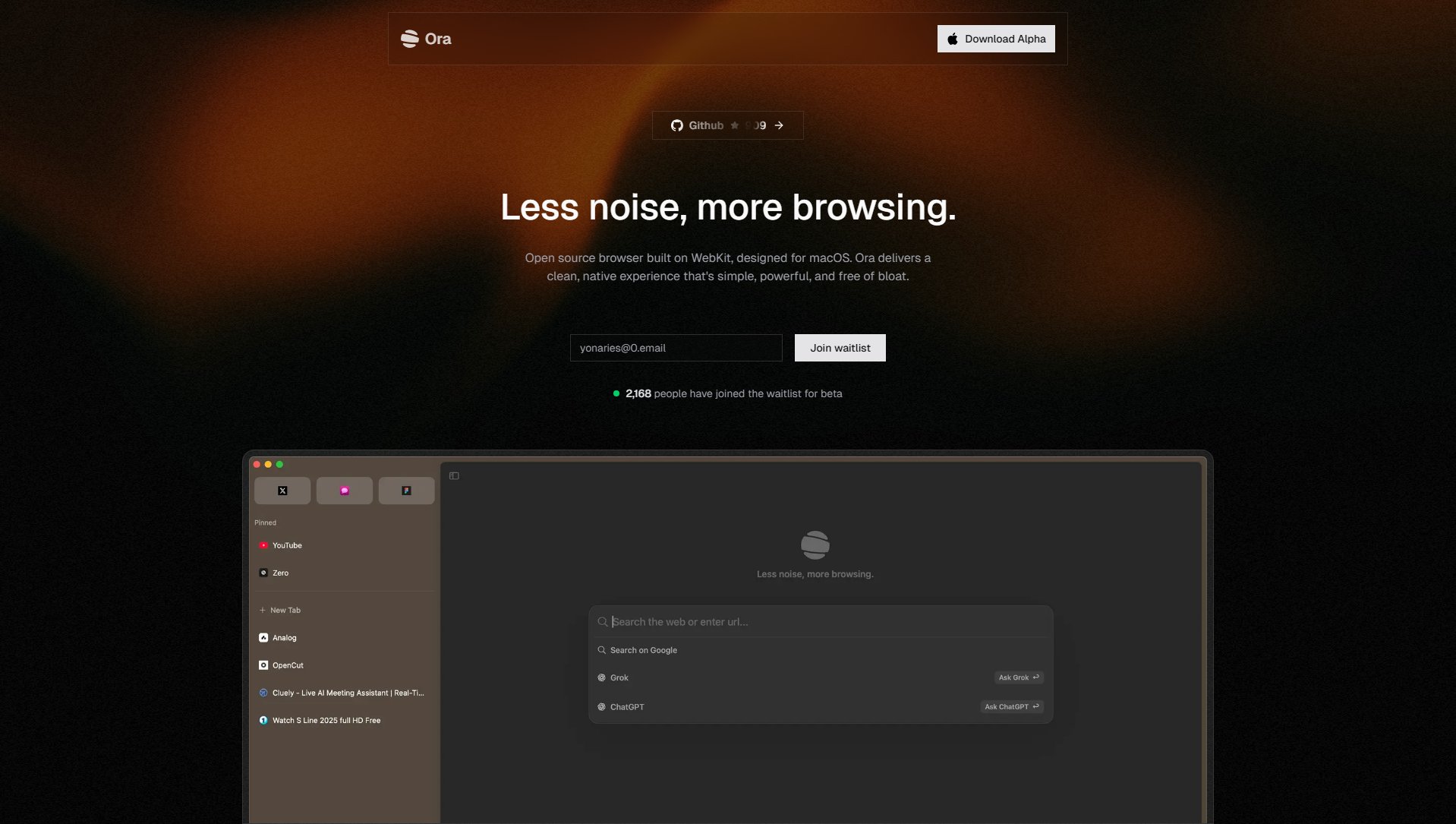

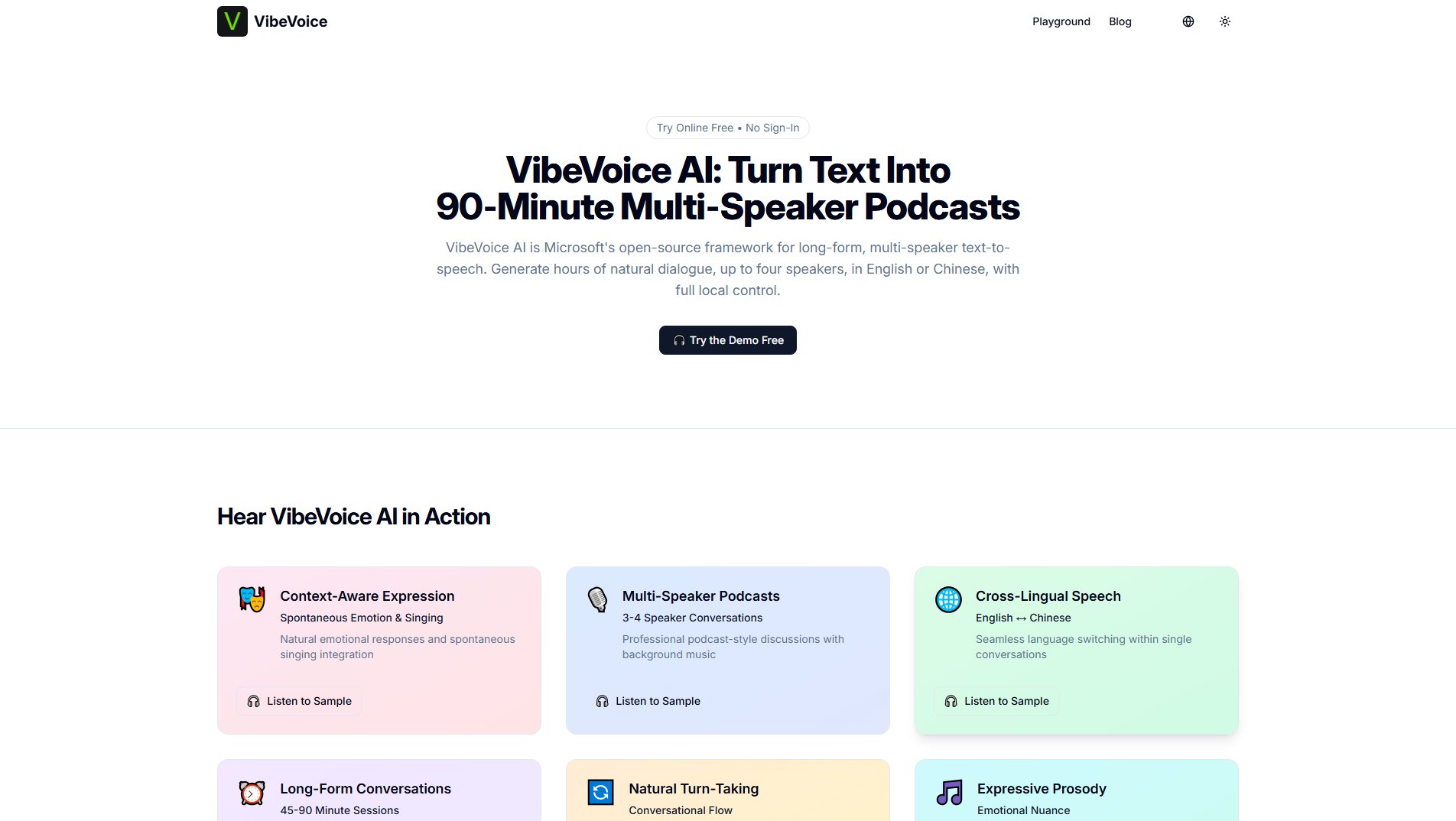

VibeVoice AI Interface & Screenshots

Official screenshot of the VibeVoice AI interface

What Can VibeVoice AI Do? Key Features

Long-Form Conversational Synthesis

Generates up to 90 minutes of continuous audio within a 64K token context window. Maintains coherent dialogue flow and natural turn-taking across extended conversations, making it ideal for podcasts, audiobooks, and educational content.
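As a rough sanity check on these figures, the sketch below counts the speech frames needed for 90 minutes of audio at the 7.5 Hz token rate cited elsewhere in this overview and compares that with a 64K window. It assumes that "64K" means 65,536 tokens and that each speech frame occupies one token position. The real budget also has to hold the script text and speaker prompts, so the headroom is only approximate.

```python
FRAME_RATE_HZ = 7.5          # speech frames per second (figure quoted in this overview)
CONTEXT_TOKENS = 64 * 1024   # assumption: "64K" means 65,536 tokens


def speech_frames(minutes: float, frame_rate_hz: float = FRAME_RATE_HZ) -> int:
    """Number of speech frames needed for `minutes` of audio."""
    return int(minutes * 60 * frame_rate_hz)


frames_90_min = speech_frames(90)
headroom = CONTEXT_TOKENS - frames_90_min
print(f"{frames_90_min} speech frames, {headroom} tokens left for text and prompts")
```

Under these assumptions, 90 minutes of speech uses 40,500 positions, leaving roughly 25,000 for everything else in the context.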

Multi-Speaker Dialogue

Supports up to 4 distinct speakers in one conversation with consistent voice characteristics. Each speaker maintains their unique timbre and vocal identity throughout lengthy dialogues, enabling realistic podcast-style discussions.

Next-Token Diffusion Framework

An LLM backbone predicts a hidden state at each step, and a diffusion head conditioned on that state refines it into acoustic features. This unified approach improves speech realism and stability for long-form generation compared to traditional TTS pipelines.

Ultra-Low Frame Rate Tokenizer

A 7.5 Hz speech tokenizer compresses audio by up to 3200×, sharply reducing sequence length and computational cost while preserving audio quality. This is what makes lengthy audio segments practical to process.
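The two numbers above are linked by simple arithmetic: a 3200× reduction that lands at 7.5 frames per second implies an input of 24,000 samples per second. The sketch below works this out. The 24 kHz input rate is derived from the quoted figures rather than stated in this overview, so treat it as an inference.

```python
FRAME_RATE_HZ = 7.5        # frames per second after tokenization (quoted above)
COMPRESSION_RATIO = 3200   # audio samples represented by each frame (quoted above)

# Implied input sample rate: frames per second * samples per frame
implied_sample_rate_hz = FRAME_RATE_HZ * COMPRESSION_RATIO


def frames_for(seconds: float) -> float:
    """Frames the tokenizer would emit for `seconds` of audio at 7.5 Hz."""
    return seconds * FRAME_RATE_HZ


print(implied_sample_rate_hz)  # 24000.0
print(frames_for(60))          # 450.0 frames for one minute of audio
```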

Bilingual Support

Native capability for both English and Chinese with seamless language switching within conversations. Maintains speaker identity and prosody when transitioning between languages.

Hybrid Audio Representations

Uses parallel acoustic and semantic tokenizers to balance timbre preservation with linguistic meaning. Combines σ-VAE for prosody with ASR objectives for content accuracy.

Open Source & Local Control

MIT licensed with pretrained weights available on GitHub and Hugging Face. Offers full local deployment without reliance on cloud services or APIs.

Best VibeVoice AI Use Cases & Applications

Podcast Prototyping

Content creators can rapidly transform written scripts into 90-minute podcast drafts with multiple hosts/guests. Enables testing dialogue flow and episode formats before committing to studio recordings.

Audiobook Narration

Authors generate multi-character audiobook readings where each character maintains a distinct voice throughout chapters. This can be more engaging than a single-narrator production.

Language Learning Content

Educators create bilingual dialogues between teachers and students for immersive language practice. Particularly effective for English-Chinese learning materials.

Game Dialogue Prototyping

Game developers test character interactions and narrative pacing during early design phases without requiring voice actor recordings.

Accessible Content Conversion

Convert lengthy articles or reports into natural multi-voice audio presentations for visually impaired users or auditory learners.

How to Use VibeVoice AI: Step-by-Step Guide

Prepare your script with clear speaker identifiers (e.g., 'Speaker A:', 'Speaker B:') and proper punctuation. For best results, format dialogues with natural turn-taking patterns.
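A small pre-flight check can catch formatting problems before a long generation run. The sketch below assumes the 'Speaker A:' label style from the example above and the four-speaker limit mentioned in this overview. `parse_script` and its regular expression are illustrative helpers, not part of VibeVoice; the exact label format the demo expects should be checked against the example scripts in the repository.

```python
import re

MAX_SPEAKERS = 4  # limit quoted in this overview
# Assumed label style: "Speaker A: text" (or "Speaker 1: text")
LINE_RE = re.compile(r"^(Speaker\s+\w+):\s*(.+)$")


def parse_script(text: str) -> list[tuple[str, str]]:
    """Split a script into (speaker, utterance) turns and validate it."""
    turns = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line:
            continue  # blank lines between turns are allowed
        match = LINE_RE.match(line)
        if match is None:
            raise ValueError(f"line {number}: missing speaker label: {line!r}")
        turns.append((match.group(1), match.group(2)))
    speakers = {speaker for speaker, _ in turns}
    if len(speakers) > MAX_SPEAKERS:
        raise ValueError(f"{len(speakers)} speakers found, limit is {MAX_SPEAKERS}")
    return turns


script = """\
Speaker A: Welcome back to the show.
Speaker B: Thanks, it's great to be here.
"""
turns = parse_script(script)
print(turns)
```

Failing fast on an unlabeled line or a fifth speaker is cheaper than discovering the problem partway through a long generation.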

Set up the environment by installing Docker and cloning the VibeVoice repository from GitHub. Install dependencies using the provided pip commands.

Choose your model variant based on hardware constraints: VibeVoice-1.5B (~7-10GB VRAM) for longer outputs or VibeVoice-7B (~18-24GB VRAM) for higher quality.
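The choice can be expressed as a simple rule of thumb. The sketch below uses the upper ends of the VRAM ranges quoted above as conservative thresholds. `pick_variant` is a hypothetical helper, not something shipped with VibeVoice, and actual memory use depends on output length and settings.

```python
from typing import Optional

# Upper ends of the VRAM ranges quoted above, used as conservative thresholds
VRAM_NEEDED_GB = {
    "VibeVoice-7B": 24,
    "VibeVoice-1.5B": 10,
}


def pick_variant(vram_gb: float, prefer_long_output: bool = False) -> Optional[str]:
    """Return a model variant that fits in `vram_gb`, or None if neither fits."""
    if vram_gb >= VRAM_NEEDED_GB["VibeVoice-7B"] and not prefer_long_output:
        return "VibeVoice-7B"
    if vram_gb >= VRAM_NEEDED_GB["VibeVoice-1.5B"]:
        return "VibeVoice-1.5B"
    return None


print(pick_variant(24))                           # VibeVoice-7B
print(pick_variant(24, prefer_long_output=True))  # VibeVoice-1.5B
print(pick_variant(12))                           # VibeVoice-1.5B
print(pick_variant(6))                            # None
```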

Run the Gradio demo interface with your selected model. Input your formatted script and configure optional parameters like speaker prompts.

Initiate generation and monitor progress. Expect longer processing times for extended dialogues (several minutes per minute of audio on consumer GPUs).

Review and export the generated audio. The system outputs WAV files containing your multi-speaker conversation ready for review or further editing.
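Before listening to a long file in full, it can help to confirm its basic properties. The sketch below uses Python's standard `wave` module to report duration, sample rate, and channel count. The file name and the 24 kHz rate are placeholders, and the snippet writes one second of silence first so that it runs on its own.

```python
import wave


def wav_info(path: str) -> dict:
    """Return duration (seconds), sample rate, and channel count of a WAV file."""
    with wave.open(path, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "duration_s": frames / rate,
            "sample_rate": rate,
            "channels": wav.getnchannels(),
        }


# Placeholder file: one second of 16-bit mono silence, standing in for a
# generated conversation. The 24 kHz rate is an assumption for illustration.
path = "example_output.wav"
with wave.open(path, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(2)        # 2 bytes = 16-bit samples
    wav.setframerate(24000)
    wav.writeframes(b"\x00\x00" * 24000)

info = wav_info(path)
print(info)
```

The `wave` module reads only uncompressed PCM files. If the exported audio uses another encoding, such as floating-point samples, a third-party reader would be needed instead.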

VibeVoice AI Pros and Cons: Honest Review

Pros

Considerations

Is VibeVoice AI Worth It? FAQ & Reviews

The 1.5B model requires ~7-10GB VRAM (RTX 3060/3070), while the 7B model needs ~18-24GB VRAM (RTX 3090/4090). Consumer GPUs can run it but expect slower generation speeds.

While technically MIT licensed, Microsoft recommends research use only due to potential misuse risks. Commercial deployment requires ethical safeguards and AI content disclosure.

VibeVoice specializes in long-form multi-speaker content and offers local deployment, while ElevenLabs provides more polished single-speaker voices and broader language support.

Background music that sometimes appears in generated audio is an uncontrolled artifact of the training data, not an intentional feature. The model occasionally reproduces musical patterns heard in its training samples.

Overlapping speech is not supported. The current architecture only handles turn-based conversations, without simultaneous speakers or interruptions.