Tensorchat.io

Run dozens of AI prompts simultaneously with unified API access

What is Tensorchat.io? Complete Overview

Tensorchat.io is an AI platform that lets users run multiple AI prompts concurrently across 400+ AI models with a single API call. It builds on OpenRouter's model catalog, which includes current models from major providers such as OpenAI, Anthropic, Google, and Meta. The platform is aimed at developers, data scientists, and enterprises who want to streamline their AI workflows by comparing outputs, exploring alternatives, and finding the best option in real time. Because all requests go through one platform, users do not need to manage a separate API key for each provider, and the service is positioned to deliver consistent performance for professional and enterprise use cases.

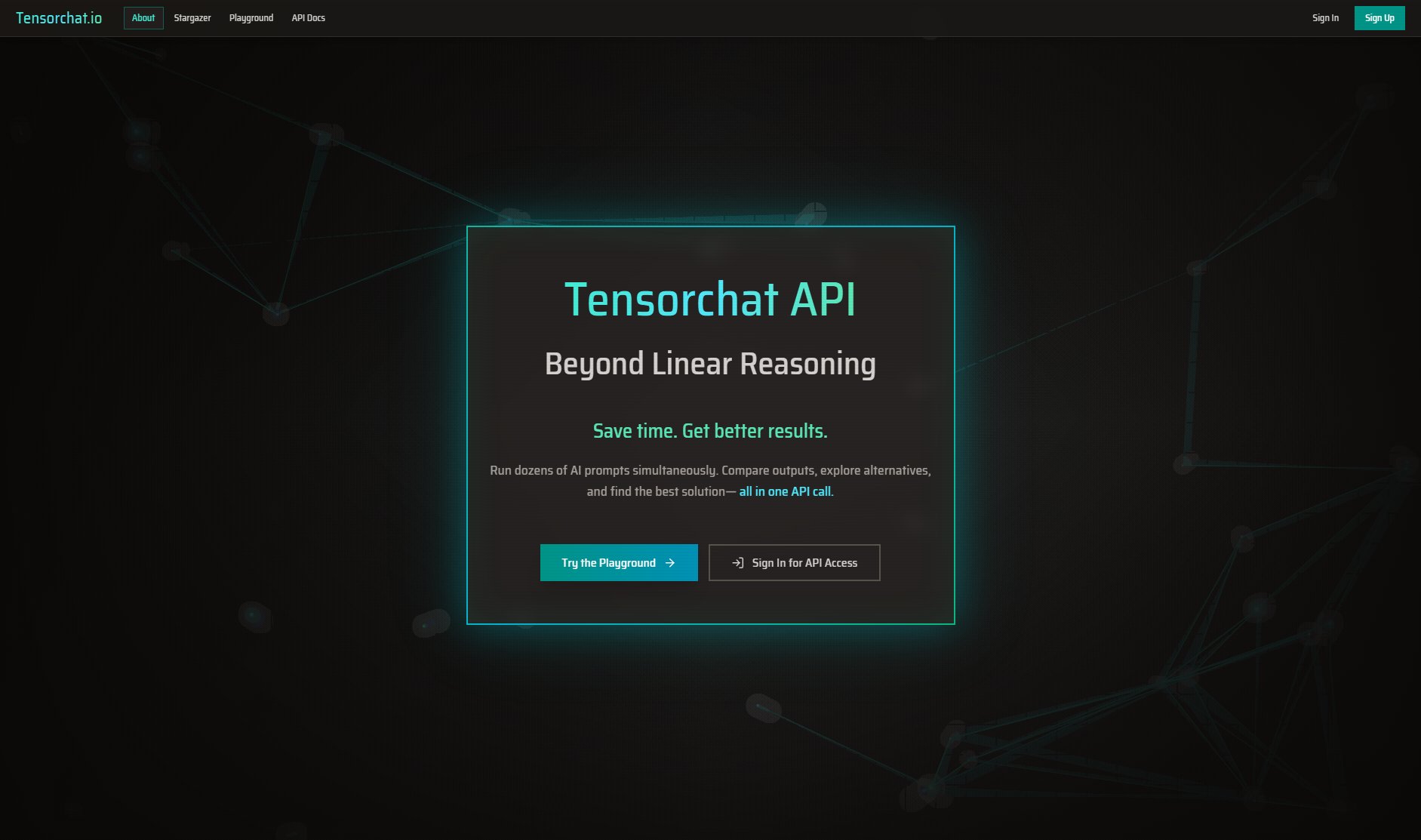

Tensorchat.io Interface & Screenshots

Tensorchat.io Official screenshot of the tool interface

What Can Tensorchat.io Do? Key Features

Concurrent AI Processing

Run the same prompt across multiple AI models at once and compare outputs from Claude, GPT-4, and other leading models side by side in real time. This helps identify the best result for a specific task without making a separate API call to each provider.
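This overview does not document Tensorchat.io's own API, so the following is only a minimal client-side sketch of the fan-out idea under stated assumptions: one prompt is sent to several models concurrently with `asyncio.gather`. The `fake_model` coroutine and the model names are hypothetical stand-ins for real API calls.

```python
import asyncio

# Hypothetical stand-in for a real model call; it only simulates latency.
async def fake_model(model: str, prompt: str) -> str:
    await asyncio.sleep(0.1)
    return f"[{model}] answer to: {prompt}"

async def fan_out(prompt: str, models: list[str]) -> dict[str, str]:
    """Send one prompt to every model concurrently and collect the answers."""
    answers = await asyncio.gather(*(fake_model(m, prompt) for m in models))
    # gather returns results in input order, so zip pairs each model with its answer.
    return dict(zip(models, answers))

if __name__ == "__main__":
    results = asyncio.run(fan_out("Explain recursion in one sentence.",
                                  ["model-a", "model-b", "model-c"]))
    for model, answer in results.items():
        print(model, "->", answer)
```

Because the calls overlap, total wall time is roughly that of the slowest model rather than the sum of all of them.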

Web Search Integration

Enable AI-powered web search to retrieve real-time data. When enabled, prompts automatically search the web for current information, news, and contextual data, which can make AI responses more accurate and relevant.

Model Flexibility & Mixing

Mix and match different AI models within the same request. For example, use GPT-4 for reasoning, Claude for writing, and Gemini for analysis, all in a single tensor operation. This lets each step of a complex workflow use the model best suited to it.
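As a rough illustration of per-task model mixing, the sketch below assumes a "plan" that maps each task name to a (model, prompt) pair and runs all pairs concurrently. `fake_model`, `run_mixed`, and `PLAN` are hypothetical names, and the model labels are illustrative, not real model identifiers or Tensorchat.io's request format.

```python
import asyncio

# Hypothetical stand-in for a real model call; it only simulates latency.
async def fake_model(model: str, prompt: str) -> str:
    await asyncio.sleep(0.05)
    return f"{model}: {prompt[:30]}"

async def run_mixed(plan: dict[str, tuple[str, str]]) -> dict[str, str]:
    """Run every (model, prompt) pair in `plan` concurrently, keyed by task name."""
    names = list(plan)
    outputs = await asyncio.gather(*(fake_model(*plan[name]) for name in names))
    return dict(zip(names, outputs))

# Illustrative labels only, not real model identifiers.
PLAN = {
    "reasoning": ("gpt-4", "Outline the trade-offs of caching."),
    "writing": ("claude", "Draft an intro paragraph on caching."),
    "analysis": ("gemini", "Summarize the cache hit-rate data."),
}

if __name__ == "__main__":
    for task, output in asyncio.run(run_mixed(PLAN)).items():
        print(f"{task:>9}: {output}")
```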

Extensive Model Catalog

Access over 400 AI models through the OpenRouter integration, including recent models such as GPT-5 and Claude 4 as well as specialized models for coding, mathematics, and creative tasks. The breadth of the catalog makes it easier to find a suitable model for a given application.

Multi-Model Comparison

Compare how different AI models interpret the same prompt. This helps users discover which models excel at specific tasks, so they can make informed decisions about which models to use and how to optimize their usage.
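Once outputs from several models are in hand, comparing them usually comes down to some scoring step. The sketch below is a hypothetical client-side helper, not a Tensorchat.io feature: it ranks outputs with a caller-supplied score function, and `keyword_score` is a deliberately toy heuristic standing in for human review or a task-specific metric.

```python
from typing import Callable

def rank_outputs(outputs: dict[str, str],
                 score: Callable[[str], float]) -> list[tuple[str, float]]:
    """Score each model's output and return (model, score) pairs, best first."""
    scored = [(model, score(text)) for model, text in outputs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

def keyword_score(text: str, keywords=("cache", "latency")) -> float:
    # Toy heuristic: fraction of the required keywords the text mentions.
    lowered = text.lower()
    return sum(k in lowered for k in keywords) / len(keywords)

# Hypothetical outputs for the prompt "Why use a cache?"
outputs = {
    "model-a": "A cache cuts latency for repeated reads.",
    "model-b": "It makes things faster.",
    "model-c": "A cache stores results.",
}

if __name__ == "__main__":
    for model, s in rank_outputs(outputs, keyword_score):
        print(f"{model}: {s:.2f}")
```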

Production-Ready Performance

Built for serious AI work, Tensorchat.io aims to provide reliable performance, consistent results, and scalability. It is designed for professional and enterprise use cases and for smooth integration into existing workflows.

Best Tensorchat.io Use Cases & Applications

LLM-Dependent Dashboards

Build dashboards that automatically re-analyze data and regenerate insights when the underlying data changes. Applying the same analysis prompts to evolving datasets keeps the updates consistent and current.
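One way to make a dashboard regenerate insights only when its data actually changes is to fingerprint the data and skip the model call when the fingerprint is unchanged. This is a minimal sketch under that assumption; `InsightCache` and `fake_analyze` are hypothetical, and `fake_analyze` stands in for sending a fixed analysis prompt plus the data to a model.

```python
import hashlib
import json

def fingerprint(data) -> str:
    # Stable SHA-256 of JSON-serializable data; sort_keys makes key order irrelevant.
    blob = json.dumps(data, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()

class InsightCache:
    """Regenerates an insight only when the underlying data has changed."""

    def __init__(self, analyze):
        self.analyze = analyze      # callable(data) -> str, e.g. a model call
        self._last_hash = None
        self._last_insight = None
        self.calls = 0              # how many times analyze() actually ran

    def get(self, data) -> str:
        h = fingerprint(data)
        if h != self._last_hash:    # data changed (or first call): re-analyze
            self.calls += 1
            self._last_insight = self.analyze(data)
            self._last_hash = h
        return self._last_insight

def fake_analyze(data) -> str:
    # Hypothetical stand-in for an LLM analysis call.
    return f"Total sales: {sum(data['sales'])}"
```

Hashing keeps the check cheap, so the dashboard can poll frequently while paying for a model call only on real changes.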

Alternative Code Generation

Generate multiple variations of code simultaneously (different color schemes, UI layouts, algorithm approaches, or architectural patterns) to explore alternative solutions efficiently.
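A batch of code variations is typically built from one template and the list of options to vary. The sketch below assumes a hypothetical `TEMPLATE` and uses `itertools.product` to produce one prompt per combination of layout and color scheme; sending the batch to models is left out.

```python
from itertools import product

# Hypothetical prompt template; each placeholder is one dimension to vary.
TEMPLATE = "Build a login page with a {layout} layout and a {scheme} color scheme."

def variation_prompts(layouts: list[str], schemes: list[str]) -> list[str]:
    # product() yields every (layout, scheme) pair, so each combination
    # becomes one prompt in the batch.
    return [TEMPLATE.format(layout=layout, scheme=scheme)
            for layout, scheme in product(layouts, schemes)]

if __name__ == "__main__":
    for p in variation_prompts(["single-column", "two-column"], ["light", "dark"]):
        print(p)
```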

Content Adaptation

Transform the same base content for different audiences, platforms, or formats. Generate blog posts, social media content, technical documentation, and marketing copy in one go.
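Content adaptation can be sketched as one base text paired with per-format instructions, plus a check on what comes back. The character budgets in `FORMATS` are illustrative assumptions, and `adaptation_prompts` and `over_limit` are hypothetical helpers, not part of any Tensorchat.io API.

```python
# Illustrative character budgets per output format (assumed, not prescribed).
FORMATS = {
    "blog post": 800,
    "social media post": 280,
    "technical documentation": 1500,
    "marketing copy": 150,
}

def adaptation_prompts(base: str, formats: dict[str, int]) -> dict[str, str]:
    # One prompt per format, all sharing the same base content.
    return {name: f"Rewrite the following as a {name} of at most {limit} characters:\n\n{base}"
            for name, limit in formats.items()}

def over_limit(outputs: dict[str, str], formats: dict[str, int]) -> list[str]:
    # Flag any generated piece that exceeds its format's character budget.
    return [name for name, text in outputs.items() if len(text) > formats[name]]
```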

Conversational AI Testing

Test chatbot responses across different scenarios, user personas, and edge cases simultaneously. Evaluate conversation flows, tone consistency, and error handling with ease.

Data Analysis & Insights

Apply the same analytical frameworks to different datasets, time periods, or market segments. Generate consistent insights, trend analysis, and comparative reports across multiple data sources.
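Keeping insights comparable across datasets mostly means using one fixed analytical frame. In this minimal sketch, a hypothetical `FRAME` is filled with summary statistics computed locally for each segment, so every model receives an identically structured prompt; the segment names and numbers are made up for illustration.

```python
from statistics import mean

# Hypothetical analysis frame shared by every dataset.
FRAME = ("Segment: {name}\nMean monthly revenue: {avg:.1f}\nMonths: {n}\n"
         "Identify the main trend and one risk.")

def analysis_prompts(datasets: dict[str, list[float]]) -> dict[str, str]:
    # Same frame for every dataset, so the resulting insights are comparable.
    return {name: FRAME.format(name=name, avg=mean(values), n=len(values))
            for name, values in datasets.items()}

if __name__ == "__main__":
    for name, prompt in analysis_prompts({"EU": [10, 20, 30], "US": [5.0, 5.0]}).items():
        print(prompt, end="\n\n")
```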

Hypothetical Testing

Explore 'what-if' scenarios by applying the same prompts to different hypothetical conditions. Test business strategies, research hypotheses, or decision outcomes across multiple variables.
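What-if testing can be modeled as a baseline set of assumptions plus scenarios that override only some of them. The values, scenario names, and question template below are hypothetical; the sketch just builds one prompt per scenario, with unspecified variables falling back to the baseline.

```python
# Hypothetical baseline assumptions and scenario overrides.
BASELINE = {"price": 20, "churn": "5%", "market": "stable"}

SCENARIOS = {
    "price increase": {"price": 25},
    "recession": {"market": "shrinking", "churn": "8%"},
}

QUESTION = ("Assume price ${price}, monthly churn {churn}, and a {market} market. "
            "What happens to annual revenue, and what should we do?")

def what_if_prompts(baseline: dict, scenarios: dict) -> dict[str, str]:
    prompts = {"baseline": QUESTION.format(**baseline)}
    for name, overrides in scenarios.items():
        # Later keys win in the merge, so only the named variables change.
        prompts[name] = QUESTION.format(**{**baseline, **overrides})
    return prompts
```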

How to Use Tensorchat.io: Step-by-Step Guide

Sign up or log in to Tensorchat.io to access the platform. New users can explore the Playground to test features without API access.

Navigate to the API Docs to understand the endpoints and parameters available for tensor prompting. Familiarize yourself with the syntax for running concurrent prompts.

Use the Playground to experiment with running prompts across multiple models. Input your prompt, select the models you want to compare, and execute the request.

Review the outputs from different models. Compare the results to identify which model performs best for your specific use case.

Integrate the Tensorchat API into your workflow. Use the provided API key to make requests programmatically, leveraging the platform's concurrent processing capabilities.
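For the integration step, the exact endpoint, authentication scheme, and payload shape should come from the API Docs; none of them are stated here. The sketch below therefore uses a placeholder URL, a hypothetical `TENSORCHAT_API_KEY` environment variable, an assumed Bearer header, and a made-up `{"tensor": [...]}` body. It only builds the request with the standard library and does not send it.

```python
import json
import os
import urllib.request
from typing import Optional

# Placeholder: replace with the real endpoint from the Tensorchat.io API Docs.
ENDPOINT = "https://api.example.com/tensor"  # hypothetical

def build_request(prompts: list[dict], api_key: Optional[str] = None) -> urllib.request.Request:
    # Hypothetical environment variable name for the API key.
    key = api_key or os.environ["TENSORCHAT_API_KEY"]
    body = json.dumps({"tensor": prompts}).encode("utf-8")  # assumed payload shape
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={"Authorization": f"Bearer {key}",  # assumed auth scheme
                 "Content-Type": "application/json"},
        method="POST",
    )
```

Once the URL and payload match the documentation, `urllib.request.urlopen(req)` would send the request.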


Is Tensorchat.io Worth It? FAQ & Reviews

What is Tensorchat.io?

Tensorchat.io is a platform that lets users run multiple AI prompts simultaneously across various AI models with a single API call, streamlining AI workflows.

How many AI models does Tensorchat.io support?

Tensorchat.io provides access to over 400 AI models through its OpenRouter integration, including models from OpenAI, Anthropic, Google, and Meta.

Does Tensorchat.io offer a free plan?

Yes. Tensorchat.io offers a free plan with limited API calls and access to basic models. Paid plans add features and raise usage limits.

Can I try Tensorchat.io without an API key?

Yes. The Playground lets you experiment with running prompts across multiple models without an API key.

What does the web search feature do?

Web search lets your prompts automatically retrieve real-time data from the web, enriching responses with current information and context.

What are the main benefits of Tensorchat.io?

Tensorchat.io removes the need to manage multiple API keys and provides a unified interface for comparing outputs from different models, which saves time and helps you get better results.