sam3d

Transform single images into 3D assets with Meta's AI model

What is sam3d? Complete Overview

sam3d is Meta's single-image 3D reconstruction model. It combines open-vocabulary segmentation with geometry, texture, and layout prediction to convert ordinary photos into ready-to-use 3D assets, which makes it a fit for creative professionals, AR/VR developers, e-commerce platforms, and robotics engineers. Building on SAM 3's segmentation capabilities, sam3d lets users isolate objects with text or visual prompts before reconstruction. The model is backed by open-source checkpoints, inference code, and curated datasets such as Artist Objects, which supports reproducible results.

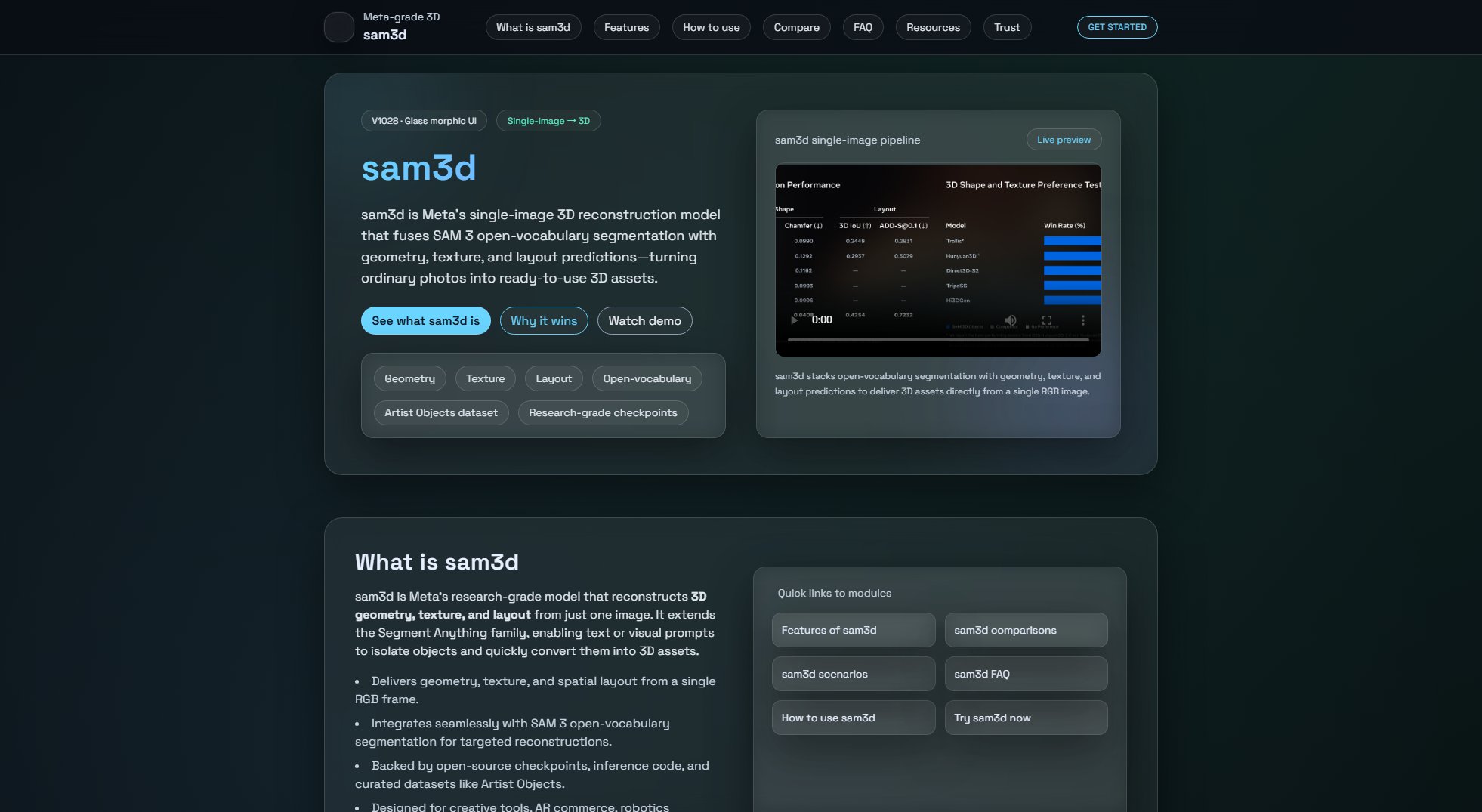

sam3d Interface & Screenshots

Official screenshot of the sam3d tool interface.

What Can sam3d Do? Key Features

Single-image → 3D

sam3d infers full 3D shape, texture, and layout from a single RGB photo, with no multi-view capture or LiDAR setup required. This makes it well suited to generating 3D assets quickly from existing images.

Open-vocabulary segmentation

Leveraging SAM 3, sam3d allows users to isolate objects using text, points, or box prompts. This open-vocabulary capability enables targeted 3D reconstructions from natural language or visual cues.
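As a rough illustration of what "isolating an object" means before reconstruction, the sketch below applies a binary mask to an RGB image and crops to the mask's bounding box. It does not call SAM 3 or sam3d: the mask is a synthetic rectangle standing in for a segmentation result, and `isolate_object` and its `pad` parameter are hypothetical names chosen for this example.

```python
import numpy as np


def isolate_object(image: np.ndarray, mask: np.ndarray, pad: int = 4):
    """Zero out background pixels and crop to the mask's bounding box.

    image: (H, W, 3) uint8 RGB array
    mask:  (H, W) boolean array, True where the target object is
    pad:   extra pixels kept around the bounding box (assumed value)
    """
    if image.shape[:2] != mask.shape:
        raise ValueError("image and mask must share height and width")
    if not mask.any():
        raise ValueError("mask is empty; nothing to isolate")

    # Rows and columns that contain at least one object pixel.
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    top = max(int(rows[0]) - pad, 0)
    bottom = min(int(rows[-1]) + pad + 1, mask.shape[0])
    left = max(int(cols[0]) - pad, 0)
    right = min(int(cols[-1]) + pad + 1, mask.shape[1])

    # Keep object pixels, set everything else to black.
    masked = np.where(mask[..., None], image, 0).astype(image.dtype)
    return masked[top:bottom, left:right], (top, left, bottom, right)


# Synthetic stand-in for a segmentation mask: a rectangle in a 64x64 image.
image = np.full((64, 64, 3), 200, dtype=np.uint8)
mask = np.zeros((64, 64), dtype=bool)
mask[20:40, 10:30] = True

crop, box = isolate_object(image, mask)
print(crop.shape, box)
```

In a real workflow the mask would come from a text, point, or box prompt; the cropping and padding policy here is only one reasonable choice.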

Open ecosystem

sam3d ships with checkpoints, inference code, and the Artist Objects benchmark, alongside the related SAM 3D Body release. This open ecosystem supports reproducible research and smooth integration into production pipelines.

XR ready

Designed for AR/VR workflows, sam3d allows users to import single-image scans into virtual rooms, mixed reality scenes, and immersive storytelling environments, enhancing creative and commercial applications.

Efficient inputs

sam3d works with legacy photos, user-generated content, and single product shots, reducing the complexity and cost associated with traditional 3D capture methods.

Benchmarks

sam3d includes evaluation suites for measuring performance, identifying domain gaps, and guiding fine-tuning for specific use cases.
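To make "measuring performance" concrete, here is a minimal sketch of the symmetric Chamfer distance, a metric commonly used to compare a reconstructed point cloud against a reference. Which metrics the sam3d evaluation suites actually report is not stated here, so treat this as a generic illustration; the point clouds are random synthetic data.

```python
import numpy as np


def chamfer_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Symmetric Chamfer distance between point sets a (N, 3) and b (M, 3).

    Sums the mean squared nearest-neighbour distance in both directions.
    Brute force with O(N * M) memory, which is fine for a few thousand points.
    """
    diff = a[:, None, :] - b[None, :, :]   # pairwise differences, (N, M, 3)
    d2 = np.sum(diff * diff, axis=-1)      # squared distances, (N, M)
    return float(d2.min(axis=1).mean() + d2.min(axis=0).mean())


rng = np.random.default_rng(0)
reference = rng.uniform(-1.0, 1.0, size=(500, 3))
# Simulate a slightly misplaced reconstruction by shifting along x.
shifted = reference + np.array([0.05, 0.0, 0.0])

print(chamfer_distance(reference, reference))  # identical sets give 0.0
print(chamfer_distance(reference, shifted))    # small positive value
```

Lower is better. For meshes, points are usually sampled from the surfaces first, and larger clouds call for a spatial index rather than the brute-force matrix used here.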

Best sam3d Use Cases & Applications

Creative production

Accelerate game development, CGI, and social content creation by converting single photos of products or props into 3D assets for use in Blender or game engines.

E-commerce & AR shopping

Enable 'view in room' features by reconstructing 3D models from single product shots, enhancing customer experience in AR viewers.

Robotics & autonomy

Provide 3D priors for robotics perception when depth data is missing, complementing LiDAR stacks with inferred shape and free space information.

Medical & scientific visualization

Transform 2D scans or microscopy images into 3D forms for detailed inspection and analysis in medical and biological research.

How to Use sam3d: Step-by-Step Guide

Capture & prompt: Use a single, well-lit RGB image. Optionally apply SAM 3 with a text or box prompt to isolate the target object for reconstruction.

Reconstruct with sam3d: Run inference using the provided checkpoints and code to predict geometry, texture, and layout from the input image.

Export & deploy: Export the generated mesh and texture files for use in AR viewers, 3D engines, robotics simulators, or other applications.

Optimize results: Ensure sharp images, balanced lighting, and minimal occlusion for best performance. Use SAM 3 prompts to refine object isolation.
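Two of the steps above can be sketched without touching the model itself: checking that an input image is sharp and reasonably exposed, and writing a mesh to disk-ready text. The example below is a sketch under stated assumptions. The sharpness and brightness thresholds are illustrative, not values recommended by sam3d; Wavefront OBJ is assumed as the export format; and the single triangle stands in for real model output. All function names are hypothetical.

```python
import numpy as np


def image_quality(gray: np.ndarray) -> dict:
    """Return simple sharpness and exposure statistics.

    gray: (H, W) float array with values in [0, 1]
    """
    # 4-neighbour Laplacian on interior pixels; its variance grows with edge content.
    lap = (
        gray[:-2, 1:-1] + gray[2:, 1:-1]
        + gray[1:-1, :-2] + gray[1:-1, 2:]
        - 4.0 * gray[1:-1, 1:-1]
    )
    return {"sharpness": float(lap.var()), "brightness": float(gray.mean())}


def looks_usable(stats: dict, min_sharpness: float = 1e-3,
                 brightness_range: tuple = (0.2, 0.8)) -> bool:
    """Apply assumed thresholds; tune them on your own images."""
    low, high = brightness_range
    return stats["sharpness"] >= min_sharpness and low <= stats["brightness"] <= high


def mesh_to_obj(vertices, faces) -> str:
    """Serialize a triangle mesh as Wavefront OBJ text.

    vertices: iterable of (x, y, z); faces: iterable of 0-based index triples.
    OBJ face indices are 1-based, hence the +1.
    """
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


# A checkerboard has strong edges; a flat gray image has none.
yy, xx = np.indices((64, 64))
checker = np.where(((yy // 8) + (xx // 8)) % 2 == 0, 0.25, 0.75)
flat = np.full((64, 64), 0.5)

print(looks_usable(image_quality(checker)))  # True
print(looks_usable(image_quality(flat)))     # False

# Placeholder geometry standing in for a reconstructed mesh.
triangle_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
triangle_faces = [(0, 1, 2)]
obj_text = mesh_to_obj(triangle_vertices, triangle_faces)
print(obj_text)
```

The returned string can be written to a `.obj` file and opened in common 3D tools. Texture coordinates and materials are left out to keep the sketch short.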

sam3d Pros and Cons: Honest Review

Pros

Considerations

Is sam3d Worth It? FAQ & Reviews

sam3d reconstructs 3D assets from a single image with SAM 3 prompts, while photogrammetry requires multiple calibrated views and controlled capture conditions.

sam3d does not require depth sensors. It predicts geometry, texture, and layout from RGB images alone, reducing the need for LiDAR or depth cameras.

To reconstruct a specific object, use SAM 3 open-vocabulary prompts (text, points, boxes) to mask it, then pass the masked object to sam3d for a clean reconstruction.

sam3d may struggle with low-res, noisy, or heavily occluded images, rare categories, deformable objects, and scenes with motion blur.

The release is open: Meta provides checkpoints, inference code, and benchmarks, enabling reproducible research and production pilots.