LongCat Flash AI

Meituan's ultra-fast AI chat with <100ms responses

What is LongCat Flash AI? Complete Overview

LongCat Flash AI is Meituan's AI chat model designed for fast conversational experiences. It combines sub-100ms response times with high accuracy and long-context understanding, which suits real-time applications that involve complex, extended conversations. Its architecture features optimized inference engines and distributed computing, targeting enterprises, developers, and general users who need speed and reliability in their AI interactions. LongCat Flash supports 50+ languages and offers developer tools for customer support, creative writing, coding assistance, and multilingual communication.

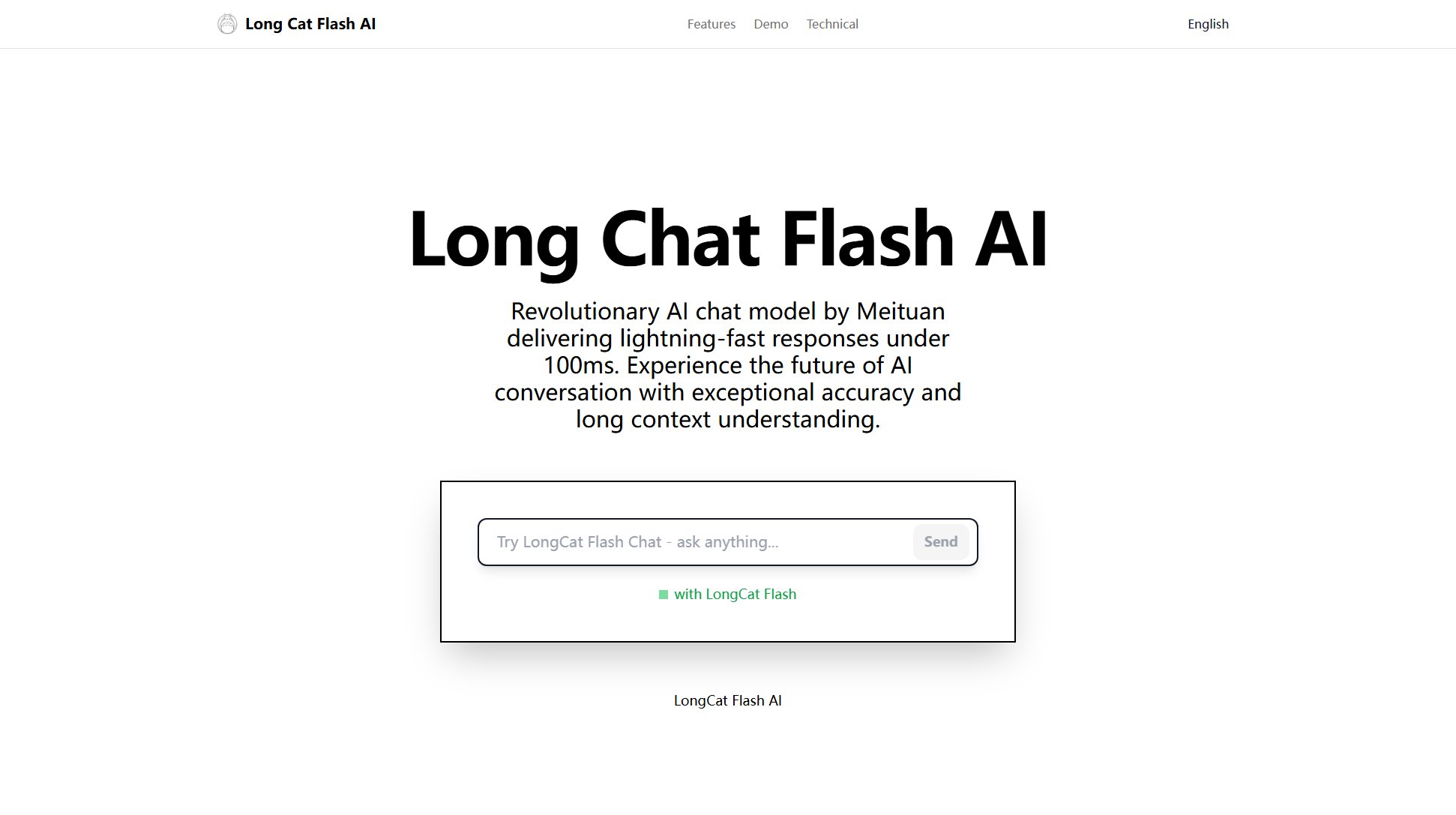

LongCat Flash AI Interface & Screenshots

Official screenshot of the LongCat Flash AI tool interface

What Can LongCat Flash AI Do? Key Features

Lightning-Fast Responses

Delivers AI responses in under 100ms, enabling real-time conversations without noticeable lag. The custom-built Flash Inference Engine is stated to reduce latency by 90% compared with standard implementations, which suits time-sensitive applications such as customer support and live chat systems.
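A latency claim like this is easiest to check by timing calls yourself. The following is a minimal sketch, not part of any LongCat SDK: `measure_latency_ms` and `summarize` are hypothetical helper names, and the 100ms budget is taken from the figure above.

```python
import statistics
import time
from typing import Callable, List


def measure_latency_ms(call: Callable[[], object], runs: int = 20) -> List[float]:
    """Time `call` repeatedly and return each duration in milliseconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


def summarize(durations_ms: List[float], budget_ms: float = 100.0) -> dict:
    """Report the median, the worst case, and the share of calls under the budget."""
    within = sum(1 for d in durations_ms if d < budget_ms)
    return {
        "median_ms": statistics.median(durations_ms),
        "max_ms": max(durations_ms),
        "within_budget": within / len(durations_ms),
    }
```

Pass a function that performs one real request as `call`, then feed the result to `summarize` to see how often responses stay under the budget from your own network location.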

Long Context Understanding

Retains context across complex conversations of up to 128K tokens. Maintains 97.8% context accuracy in extended dialogues, outperforming traditional models that lose context in long conversations.
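Even with a 128K-token window, a client usually has to keep its conversation history inside the limit. The sketch below shows one simple approach, dropping the oldest messages first. It is an illustration under stated assumptions: the whitespace word count is only a rough stand-in for the model's real tokenizer, and `trim_history` is a hypothetical helper, not an SDK function.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Rough estimate: whitespace word count (an assumption, not the real tokenizer)."""
    return len(text.split())


def trim_history(messages: List[Message], max_tokens: int = 128_000) -> List[Message]:
    """Keep the most recent messages whose estimated total fits in max_tokens."""
    kept: List[Message] = []
    used = 0
    # Walk from newest to oldest so recent turns are kept first.
    for message in reversed(messages):
        cost = estimate_tokens(message["content"])
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```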

Multilingual Capabilities

Supports 50+ languages with native-level understanding and generation. Achieves 94.5% accuracy across diverse languages, enabling global applications without compromising quality.

Enterprise-Grade Architecture

Built on Meituan's distributed computing infrastructure with 99.9% uptime SLA. Features auto-scaling capabilities handling 10,000+ requests/second, with end-to-end encryption and privacy protection for business-critical applications.
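To put these figures in concrete terms, the short calculation below converts an uptime percentage into allowed downtime and a sustained request rate into daily volume. The 30-day month is an assumption, and both function names are illustrative.

```python
def allowed_downtime_minutes(uptime_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted over `days` at the given uptime percentage."""
    total_minutes = days * 24 * 60
    return total_minutes * (1.0 - uptime_percent / 100.0)


def requests_per_day(requests_per_second: int) -> int:
    """Total requests in 24 hours at a sustained per-second rate."""
    return requests_per_second * 24 * 60 * 60


# 99.9% uptime over a 30-day month allows roughly 43.2 minutes of downtime.
downtime = allowed_downtime_minutes(99.9)
# A sustained 10,000 requests/second corresponds to 864,000,000 requests per day.
daily_volume = requests_per_day(10_000)
```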

Developer-Friendly Integration

Offers a simple REST API with SDKs for Python, JavaScript, Go, and Java, plus detailed documentation. Supports real-time streaming and WebSocket connections for dynamic applications.

Advanced Coding Assistance

Reports a 96.1% success rate for producing functional code in programming help. Offers instant code reviews, debugging assistance, and architecture advice with context-aware suggestions.

Best LongCat Flash AI Use Cases & Applications

Instant Customer Support

Deploy LongCat Flash for 24/7 customer service with human-like responses under 100ms. Reduces wait times by 90% while handling complex queries about products, services, and troubleshooting.

Creative Writing Collaboration

Authors use the AI for real-time brainstorming, receiving instant feedback on plot development, character arcs, and stylistic suggestions while maintaining long narrative context.

Developer Pair Programming

Software engineers accelerate coding with immediate assistance. The AI suggests optimizations, helps debug code (with a reported 96.1% success rate for functional code output), and explains complex concepts during development sessions.

Multilingual Business Communication

Global teams conduct meetings with real-time translation across 50+ languages, with the AI maintaining conversation context and cultural nuances in prolonged discussions.

How to Use LongCat Flash AI: Step-by-Step Guide

Obtain API access by signing up on the LongCat Flash website. Choose between free trial or enterprise plans based on your usage requirements.

Install your preferred SDK (Python, JavaScript, etc.) with its package manager. For Python, run 'pip install longcat-flash'. Refer to the documentation for language-specific setup.

Initialize the client with your API key. Configure parameters like model version ('longcat-flash-v1'), temperature (0.7 recommended), and max tokens (150 default).
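Since the SDK's actual client class is not shown in this guide, the sketch below only models the settings listed in this step using the standard library. `ChatConfig` and its field names are hypothetical; the default values are the ones quoted above.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ChatConfig:
    """Hypothetical settings holder; field names are assumptions, defaults come from the guide."""

    api_key: str
    model: str = "longcat-flash-v1"
    temperature: float = 0.7
    max_tokens: int = 150

    def __post_init__(self) -> None:
        # Basic sanity checks before any request is attempted.
        if not self.api_key:
            raise ValueError("api_key must not be empty")
        if self.temperature < 0:
            raise ValueError("temperature must be non-negative")
        if self.max_tokens <= 0:
            raise ValueError("max_tokens must be positive")

    def request_options(self) -> dict:
        """Return the tunable parameters without the secret key."""
        options = asdict(self)
        options.pop("api_key")
        return options
```

Keeping the key out of `request_options()` makes it harder to log or serialize the secret by accident.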

Send your first chat completion request. Structure each message with a role (for example 'user') and content. The API typically returns a response in under 100ms, with conversation context maintained.
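As a rough illustration of such a request over plain HTTP, the sketch below builds (but does not send) a chat completion call with the standard library. The endpoint URL, the bearer-token header, and the JSON field names are assumptions modeled on common chat APIs, not confirmed details of the LongCat Flash API; check the official documentation for the real values.

```python
import json
import urllib.request
from typing import Dict, List

# Placeholder endpoint: the real URL is not given in this guide.
API_URL = "https://api.example.com/v1/chat/completions"


def build_chat_request(api_key: str, messages: List[Dict[str, str]]) -> urllib.request.Request:
    """Build a POST request carrying the messages and the parameters from the guide."""
    payload = {
        "model": "longcat-flash-v1",
        "messages": messages,
        "temperature": 0.7,
        "max_tokens": 150,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("YOUR_API_KEY", [{"role": "user", "content": "Hello!"}])
# Sending is left commented out because the endpoint above is a placeholder:
# with urllib.request.urlopen(request, timeout=10) as response:
#     print(json.load(response))
```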

Implement streaming for real-time applications. Use WebSocket connections for continuous conversations, taking advantage of the model's sub-100ms latency.
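On the client side, streaming mostly means assembling partial text as frames arrive. The sketch below shows that step in isolation, independent of the transport. The frame format (JSON objects with a `delta` text field and a final `done` flag) is purely an assumption for illustration; the actual wire format is not described in this guide.

```python
import json
from typing import Iterable, Iterator


def iter_deltas(frames: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from JSON frames until one is marked done."""
    for frame in frames:
        message = json.loads(frame)
        if message.get("done"):
            break
        yield message.get("delta", "")


def collect_reply(frames: Iterable[str]) -> str:
    """Join all fragments into the full reply text."""
    return "".join(iter_deltas(frames))


# Simulated frames standing in for messages received over a WebSocket.
sample_frames = ['{"delta": "Hel"}', '{"delta": "lo"}', '{"done": true}']
print(collect_reply(sample_frames))  # prints "Hello"
```

In a real application, `frames` would be the messages received from the connection, and each fragment from `iter_deltas` could be rendered as soon as it arrives instead of being joined at the end.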

LongCat Flash AI Pros and Cons: Honest Review

Pros

Considerations

Is LongCat Flash AI Worth It? FAQ & Reviews

LongCat Flash achieves its low latency through Meituan's custom Flash Inference Engine, which optimizes neural network computations, together with advanced caching of common patterns and a globally distributed computing infrastructure.

Commercial use is permitted on the Professional and Enterprise plans, which include commercial usage rights. The Free Trial is for development and testing only.

Official SDKs exist for Python, JavaScript, Go, and Java. The REST API can be used with any language that supports HTTP requests.

While both support 128K tokens, LongCat Flash maintains 97.8% context accuracy versus 95% in benchmarks, with faster recall of earlier conversation points.

Enterprise plans offer private cloud and on-premise deployments with custom security requirements and performance tuning.