Inferable

Open-source platform for creating versioned, durable AI workflows

What is Inferable? Complete Overview

Inferable is an open-source platform for building reliable AI workflows with humans in the loop. Developers use it to create versioned, durable workflows that can pause for human input for minutes or days and then resume where they left off. It integrates with your existing APIs and databases using familiar programming primitives, which suits teams that need production-ready LLM primitives (structured outputs, workflow versioning, human-in-the-loop steps, and external notifications) without building the underlying infrastructure themselves. Workflows run on your own infrastructure over outbound-only connections, so no inbound ports need to be opened, and the platform is fully open-source and self-hostable.

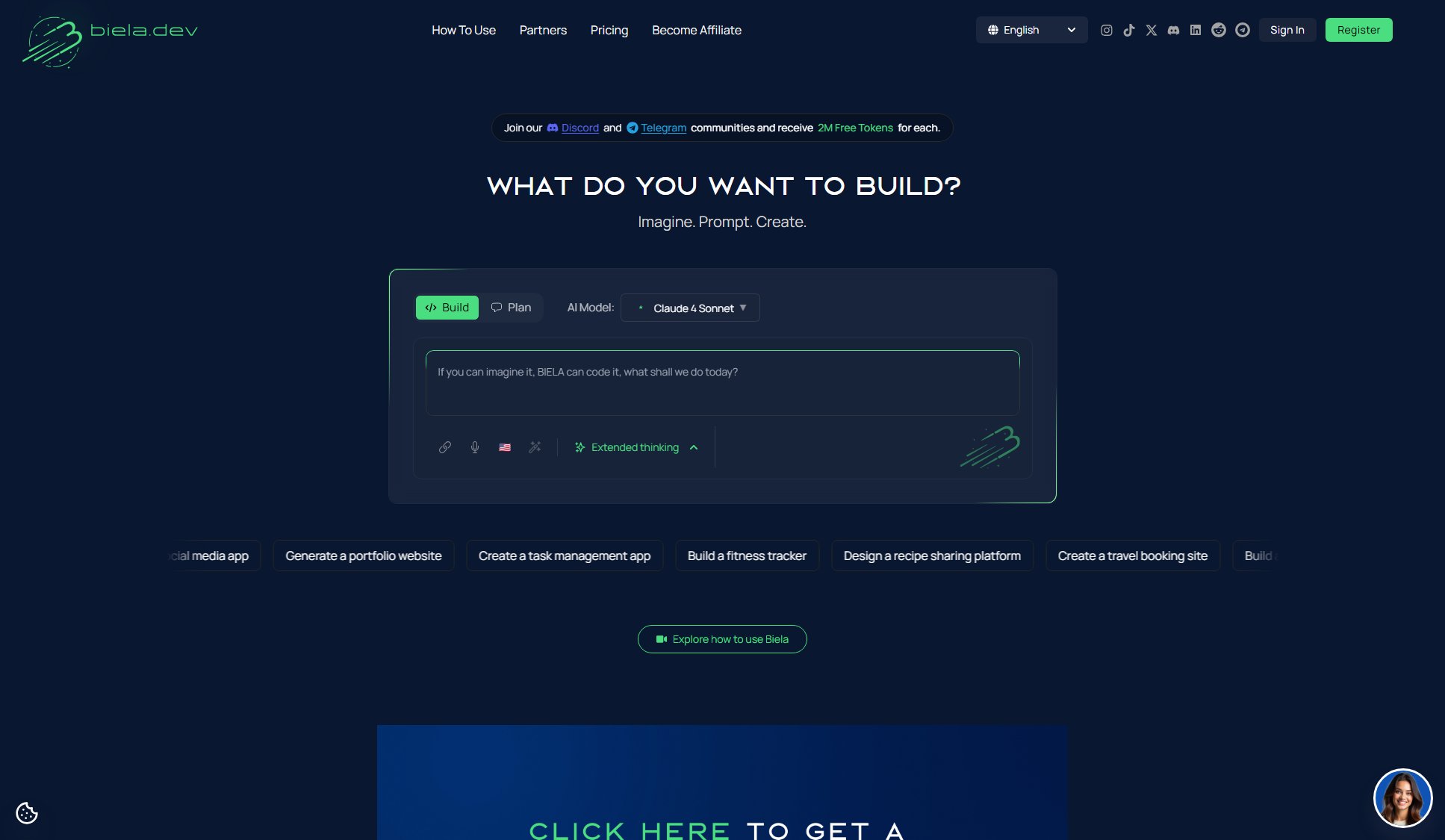

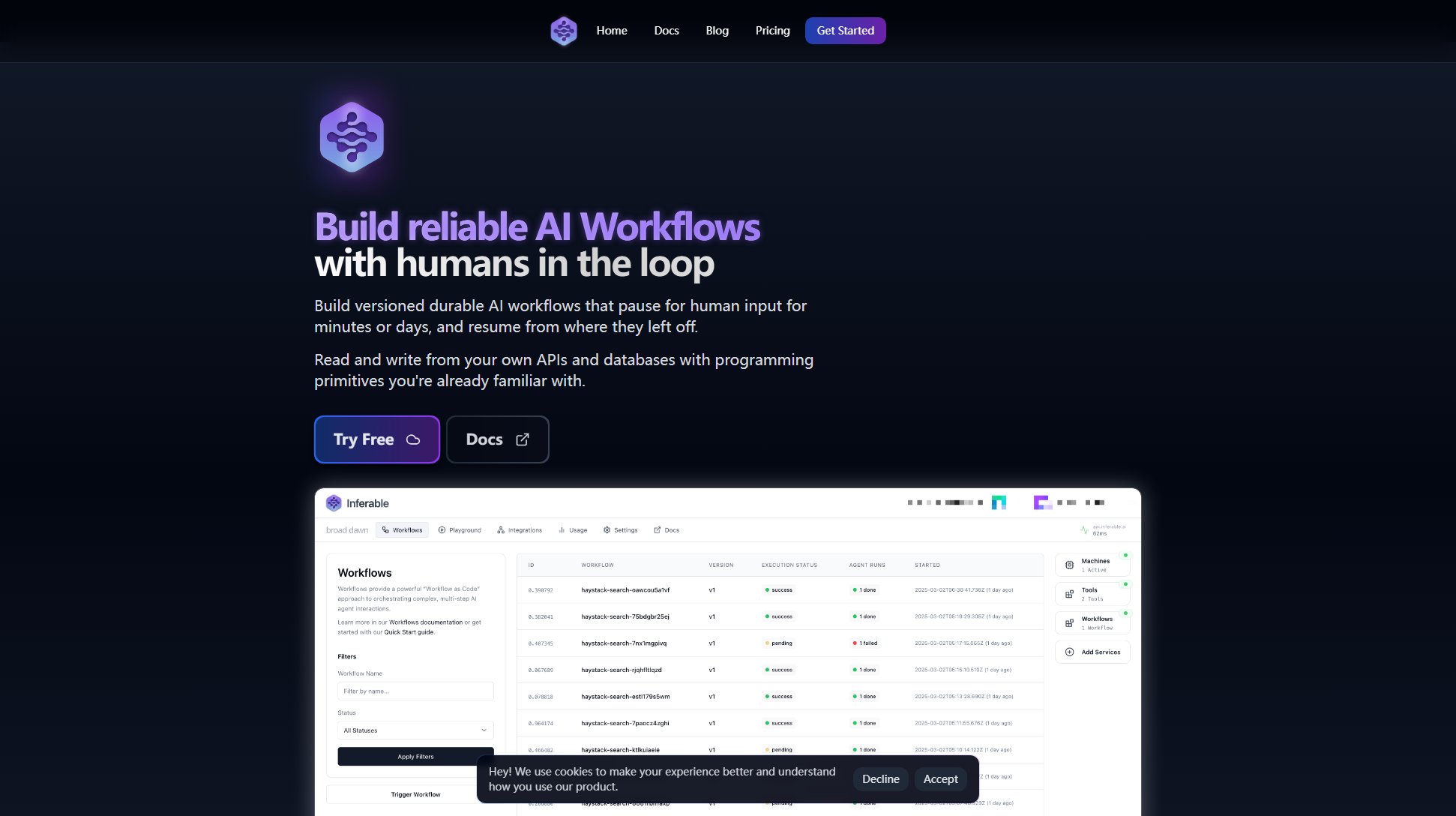

Inferable Interface & Screenshots

Official screenshot of the Inferable interface.

What Can Inferable Do? Key Features

Workflow Versioning

Evolve your long-running workflows over time in a backwards-compatible way. Define multiple versions of the same workflow as requirements change. Workflow executions maintain version affinity: if a new version is published while an execution is in progress, that execution keeps using the version it started with until it completes. This lets you add features, improve logic, or fix bugs without disrupting existing executions.
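Version affinity can be illustrated with a minimal in-memory sketch. The `Workflow`, `Execution`, and `start` names below are hypothetical and are not taken from the Inferable SDK; the point is only that an execution records its version when it starts and keeps using it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Workflow:
    """Hypothetical workflow holding several versions of its handler."""
    name: str
    versions: Dict[int, Callable[[dict], dict]] = field(default_factory=dict)

    def register(self, version: int, handler: Callable[[dict], dict]) -> None:
        self.versions[version] = handler

    def latest(self) -> int:
        return max(self.versions)


@dataclass
class Execution:
    """An execution remembers the version that was latest when it started."""
    workflow: Workflow
    payload: dict
    pinned_version: int

    def run(self) -> dict:
        # Always use the pinned version, even if newer ones were added later.
        return self.workflow.versions[self.pinned_version](self.payload)


def start(workflow: Workflow, payload: dict) -> Execution:
    return Execution(workflow, payload, pinned_version=workflow.latest())


greet = Workflow("greet")
greet.register(1, lambda p: {"message": f"Hello, {p['name']}"})

in_flight = start(greet, {"name": "Ada"})  # pinned to version 1

# Version 2 is published while the first execution is still in progress.
greet.register(2, lambda p: {"message": f"Hello, {p['name']}!", "lang": "en"})
fresh = start(greet, {"name": "Ada"})      # pinned to version 2

print(in_flight.run())
print(fresh.run())
```

The in-flight execution still produces the version 1 result, while the execution started after the update uses version 2.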

Structured Outputs

Inferable provides built-in capabilities for structured outputs from LLMs, eliminating the need to build parsing logic for unstructured responses. Define schemas for your outputs including enums, arrays, and nested objects. This feature ensures consistent data formats across your workflows and simplifies integration with other systems.
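To make the idea of a schema with enums, arrays, and nested objects concrete, here is a small standard-library sketch. The `TICKET_SCHEMA` layout and the `validate` helper are assumptions for illustration (a JSON-Schema-like subset), not the format Inferable itself uses.

```python
import json

# Hypothetical schema: an enum, an array of strings, and a nested object.
TICKET_SCHEMA = {
    "type": "object",
    "properties": {
        "priority": {"type": "string", "enum": ["low", "medium", "high"]},
        "tags": {"type": "array", "items": {"type": "string"}},
        "customer": {
            "type": "object",
            "properties": {"id": {"type": "integer"}, "name": {"type": "string"}},
        },
    },
}

PY_TYPES = {"string": str, "integer": int, "array": list, "object": dict}


def validate(value, schema, path="$"):
    """Return a list of error strings; an empty list means the value conforms."""
    expected = PY_TYPES[schema["type"]]
    # bool is a subclass of int in Python, so reject it explicitly for integers.
    if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
        return [f"{path}: expected {schema['type']}"]
    errors = []
    if "enum" in schema and value not in schema["enum"]:
        errors.append(f"{path}: {value!r} not in {schema['enum']}")
    if schema["type"] == "array":
        for i, item in enumerate(value):
            errors += validate(item, schema["items"], f"{path}[{i}]")
    if schema["type"] == "object":
        # This sketch treats every declared property as required.
        for key, sub in schema["properties"].items():
            if key not in value:
                errors.append(f"{path}.{key}: missing")
            else:
                errors += validate(value[key], sub, f"{path}.{key}")
    return errors


good = json.loads('{"priority": "high", "tags": ["billing"], "customer": {"id": 7, "name": "Ada"}}')
bad = json.loads('{"priority": "urgent", "tags": ["billing", 3], "customer": {"id": "7"}}')

print(validate(good, TICKET_SCHEMA))
for error in validate(bad, TICKET_SCHEMA):
    print(error)
```

A model response that parses as JSON but violates the schema (an unknown enum value, a non-string tag, a wrong type, a missing field) is reported with the path of each problem, which is the kind of check a structured-output feature saves you from writing by hand.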

Human-in-the-loop

Build workflows that can pause for human input for minutes or days, then seamlessly resume where they left off. This is particularly valuable for approval workflows, quality control checks, or any process requiring human judgment before proceeding. The durable execution model means you don't need to worry about timeouts or maintaining state during pauses.
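The pause-and-resume behavior rests on persisting execution state outside the running process. The sketch below shows that idea with a JSON file; the `start` and `resume` functions and the refund scenario are hypothetical, and a real platform would store this state for you.

```python
import json
import tempfile
from pathlib import Path


def start(state_file: Path, refund_amount: int) -> dict:
    """Run until human input is needed, then persist state and stop."""
    state = {"refund_amount": refund_amount, "status": "awaiting_approval"}
    state_file.write_text(json.dumps(state))
    return state


def resume(state_file: Path, decision: str) -> dict:
    """Reload persisted state and continue from the approval step."""
    state = json.loads(state_file.read_text())
    if state["status"] != "awaiting_approval":
        raise RuntimeError("execution is not waiting for input")
    state["status"] = "refunded" if decision == "approve" else "rejected"
    state_file.write_text(json.dumps(state))
    return state


state_file = Path(tempfile.mkdtemp()) / "execution-1.json"

paused = start(state_file, refund_amount=120)
# The process could exit here; a reviewer may respond minutes or days later.
finished = resume(state_file, decision="approve")

print(paused["status"], "->", finished["status"])
```

Because nothing is held in memory between the two calls, the length of the pause does not matter to the workflow logic.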

On-premise Execution

Your workflows run on your own infrastructure with no deployment step required. Inferable uses outbound-only connections, eliminating the need to open inbound ports or expose internal services. This architecture provides enhanced security while maintaining all the benefits of a managed workflow platform.
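An outbound-only design usually means the worker inside your network initiates every exchange: it pulls work and pushes results, and nothing connects in. The sketch below simulates that pattern with an in-memory `ControlPlane` stand-in; all names are hypothetical, and in a real deployment `poll` and `report` would be outbound HTTPS requests.

```python
from collections import deque


class ControlPlane:
    """In-memory stand-in for a remote service that queues jobs and stores results."""

    def __init__(self):
        self.jobs = deque()
        self.results = {}

    def poll(self):
        # In a real setup this would be an outbound request made by the worker.
        return self.jobs.popleft() if self.jobs else None

    def report(self, job_id, result):
        self.results[job_id] = result


def lookup_customer(customer_id):
    """Pretend internal service that is never exposed to inbound traffic."""
    return {"id": customer_id, "tier": "gold"}


def worker_loop(control_plane, max_polls):
    """The worker starts every exchange: it pulls jobs and pushes results."""
    for _ in range(max_polls):
        job = control_plane.poll()
        if job is None:
            continue
        control_plane.report(job["id"], lookup_customer(job["customer_id"]))


cp = ControlPlane()
cp.jobs.append({"id": "job-1", "customer_id": 42})
worker_loop(cp, max_polls=3)
print(cp.results)
```

The internal `lookup_customer` function is only ever called by the local worker, so the service behind it needs no public endpoint.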

Managed State

Inferable manages all the state that durable workflows need, so you do not have to provision or manage databases. Everything is API-driven, which keeps your infrastructure requirements small while still providing reliable workflow execution.

Best Inferable Use Cases & Applications

Customer Risk Assessment

Financial institutions can use Inferable to build durable workflows that pull customer data from multiple sources, perform risk analysis using LLMs with structured outputs, then pause for human review of high-risk cases before finalizing assessments. Workflow versioning allows continuous improvement of the risk models without disrupting in-process evaluations.

Document Processing Pipeline

Create a versioned document processing workflow that extracts information from uploaded documents using LLMs, validates the extracted data against business rules, and routes exceptions for human correction. The workflow can maintain state across multiple iterations of document review and correction.
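The validate-and-route step of such a pipeline can be sketched as follows. The rule names, field names, and the `route` function are assumptions made for illustration; the extraction step (the LLM call) is represented by already-extracted dictionaries.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical business rules: rule name -> predicate over the extracted fields.
RULES: Dict[str, Callable[[dict], bool]] = {
    "total_is_positive": lambda d: d.get("total", 0) > 0,
    "currency_is_supported": lambda d: d.get("currency") in {"USD", "EUR"},
    "invoice_number_present": lambda d: bool(d.get("invoice_number")),
}


def failed_rules(doc: dict) -> List[str]:
    """Names of the rules this document violates, in declaration order."""
    return [name for name, check in RULES.items() if not check(doc)]


def route(docs: List[dict]) -> Tuple[List[dict], List[dict]]:
    """Split documents into accepted ones and ones needing human correction."""
    accepted, review_queue = [], []
    for doc in docs:
        failures = failed_rules(doc)
        if failures:
            review_queue.append({"doc": doc, "failures": failures})
        else:
            accepted.append(doc)
    return accepted, review_queue


extracted = [
    {"invoice_number": "INV-1", "total": 250.0, "currency": "USD"},
    {"invoice_number": "", "total": -5.0, "currency": "GBP"},
]
accepted, review_queue = route(extracted)
print(len(accepted), "accepted;", "needs review:", review_queue[0]["failures"])
```

Attaching the list of failed rules to each queued document gives the human reviewer a starting point, and a corrected document can be passed through `route` again.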

AI-Powered Customer Support

Build workflows that automatically respond to customer inquiries using LLMs, but escalate complex cases to human agents. The workflow maintains context throughout the interaction, allowing seamless transitions between automated and human-assisted support.
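One way to picture the escalation logic is a conversation object that carries its history across the handoff. In this sketch `draft_reply` is a keyword-based stand-in for an LLM call, and the confidence threshold, names, and fields are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Conversation:
    """Shared context that travels with the ticket across bot and human handling."""
    messages: List[str] = field(default_factory=list)
    handler: str = "bot"


def draft_reply(message: str) -> dict:
    """Stand-in for an LLM call that returns a structured result."""
    text = message.lower()
    is_complex = any(word in text for word in ("refund", "legal", "complaint"))
    return {
        "reply": "Thanks, here is how to do that.",
        "confidence": 0.3 if is_complex else 0.9,
    }


def handle(conv: Conversation, message: str, threshold: float = 0.5) -> Conversation:
    conv.messages.append(f"customer: {message}")
    result = draft_reply(message)
    if result["confidence"] < threshold:
        # Escalate, keeping the full history so the agent has the context.
        conv.handler = "human"
    else:
        conv.messages.append(f"bot: {result['reply']}")
    return conv


conv = Conversation()
handle(conv, "How do I reset my password?")
handle(conv, "I want a refund for a double charge.")
print(conv.handler, len(conv.messages))
```

The human agent who picks up the conversation sees the earlier automated exchange as well as the message that triggered the escalation.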

How to Use Inferable: Step-by-Step Guide

Install the Inferable SDK using your preferred package manager. Import the library into your project and initialize it with your API credentials. The SDK provides the core functionality for creating and managing workflows.

Define your workflow by specifying a name and input schema. This establishes the contract for what data your workflow expects to receive when triggered. The schema helps with validation and documentation of your workflow's interface.

Create workflow versions to implement your processing logic. Each version can build upon previous ones while maintaining compatibility. Within your version definition, you can call external APIs, use LLM primitives, and implement business logic using standard programming constructs.

Trigger your workflow by calling the execution API with the required input data. The workflow will process through its steps, potentially pausing for human input if configured. You can monitor execution status through the developer console or API.

Handle workflow results through callbacks or by querying execution status. For human-in-the-loop workflows, provide interfaces where users can review and approve or reject intermediate results. Completed workflow outputs can be routed to downstream systems or stored as needed.
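The steps above can be sketched end to end with a tiny in-memory engine. This is not the Inferable SDK: `Workflow`, `Engine`, `trigger`, and `status` are hypothetical names chosen to mirror the steps (define a name and input schema, register a version, trigger an execution, query the result).

```python
import itertools
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Workflow:
    name: str
    input_fields: Dict[str, type]  # the input contract: field name -> type
    versions: Dict[int, Callable[[dict], dict]] = field(default_factory=dict)

    def version(self, number: int):
        """Decorator that registers a handler as one version of the workflow."""
        def register(handler):
            self.versions[number] = handler
            return handler
        return register


class Engine:
    """Hypothetical in-memory engine that runs executions synchronously."""

    def __init__(self):
        self.executions: Dict[str, dict] = {}
        self._ids = itertools.count(1)

    def trigger(self, workflow: Workflow, payload: dict) -> str:
        # Check the payload against the declared input contract first.
        for name, expected in workflow.input_fields.items():
            if not isinstance(payload.get(name), expected):
                raise ValueError(f"input field {name!r} must be {expected.__name__}")
        execution_id = f"exec-{next(self._ids)}"
        handler = workflow.versions[max(workflow.versions)]
        self.executions[execution_id] = {"status": "done", "result": handler(payload)}
        return execution_id

    def status(self, execution_id: str) -> dict:
        return self.executions[execution_id]


# Define the workflow: a name plus an input schema.
summarize = Workflow("summarize-order", {"order_id": str, "items": list})


# Create a version that holds the processing logic.
@summarize.version(1)
def summarize_v1(payload: dict) -> dict:
    return {"order_id": payload["order_id"], "item_count": len(payload["items"])}


# Trigger an execution, then query its status and result.
engine = Engine()
execution_id = engine.trigger(summarize, {"order_id": "A-17", "items": ["pen", "ink"]})
print(engine.status(execution_id))
```

A payload that does not match the input schema is rejected before any version logic runs, which is the role the schema plays as the workflow's contract.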


Is Inferable Worth It? FAQ & Reviews

What is a workflow execution?

A workflow execution is a single triggering of your workflow. Each time your workflow is triggered, whether manually or automatically, it counts as one execution. We don't bill for cached executions; you only pay for actual workflow runs.

What does BYO Models mean?

BYO Models stands for "Bring Your Own Models". Inferable lets you configure and use your preferred AI models and providers through our SDKs, so you keep that flexibility while using our orchestration platform for workflow management.

Can Inferable be self-hosted?

Yes, Inferable is completely open-source and can be self-hosted on your own infrastructure. This gives you complete control over your data and compute resources. We provide documentation for self-hosting on various platforms.

How does workflow versioning work?

Workflow versioning allows you to evolve your workflows over time while maintaining compatibility. When you publish a new workflow version, existing executions continue with the version they started on until completion. New executions use the latest version unless specifically configured otherwise.