HyperMink AI

Democratizing AI with privacy-first open-source solutions

What is HyperMink AI? Complete Overview

HyperMink AI is an open-source platform designed to make AI accessible and understandable for everyday users while prioritizing privacy. The core offering is Inferenceable, a lightweight yet powerful AI inference server built with Node.js that leverages llama.cpp and llamafile technologies. This solution targets both individual developers and organizations looking for a simple, production-ready way to deploy AI models without compromising on transparency or data sovereignty. By focusing on open-source foundations, HyperMink removes the typical barriers of complex AI infrastructure, enabling users to run models locally with full control over their data processing.

What Can HyperMink AI Do? Key Features

Open-Source Architecture

Inferenceable's completely open-source nature allows full transparency and customization, with its Node.js foundation making it accessible to a wide range of developers. The integration with proven technologies like llama.cpp ensures reliable performance while maintaining flexibility.

Production-Ready Inference

Despite its simplicity, the server is designed for real-world deployment. Its lightweight architecture keeps resource usage low, even on modest hardware.

Pluggable Design

The modular architecture allows developers to extend functionality or integrate with existing systems, so a deployment can be adapted as AI needs evolve.

Privacy-First Approach

Because inference runs locally, sensitive data can stay within user-controlled environments. This addresses growing concerns about cloud-based AI services and data privacy regulations.

Simplified AI Accessibility

The project specifically aims to lower technical barriers, making advanced AI capabilities available to developers without requiring deep machine learning expertise or expensive infrastructure.

Best HyperMink AI Use Cases & Applications

Local AI Development Environment

Developers can create isolated AI testing environments on their workstations without relying on cloud services, enabling faster iteration while protecting proprietary data during development cycles.

Privacy-Sensitive Applications

Healthcare or financial institutions can deploy Inferenceable to process sensitive documents internally. Keeping all data processing on-premises can support compliance with regulations like HIPAA or GDPR, although on-premises deployment alone does not guarantee it.

Edge AI Implementations

Manufacturing or IoT deployments can leverage the lightweight server to bring AI capabilities directly to edge devices in factories or field locations with limited connectivity.

Educational AI Platforms

Academic institutions can use Inferenceable to teach AI concepts with hands-on experience, allowing students to experiment with models without complex infrastructure requirements.

How to Use HyperMink AI: Step-by-Step Guide

Clone the Inferenceable repository from GitHub to access the complete source code and documentation.

Install required dependencies including Node.js runtime and any system libraries needed for llama.cpp integration as specified in the documentation.

Configure the server by modifying the provided configuration files to specify model paths, inference parameters, and any custom plugins required for your use case.

Place your preferred llama.cpp-compatible models in the directory that your configuration designates. See the documentation for the model formats supported out of the box.

Start the inference server using the provided startup scripts. The server exposes standard API endpoints that can be integrated with your applications.

Monitor and scale your deployment as needed, taking advantage of the production-ready features like logging, metrics collection, and health checks.
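As a rough illustration of the last two steps, the sketch below waits for a running server to report healthy and then builds an inference request. It is a minimal sketch under stated assumptions, not Inferenceable's documented API: the base URL, the `/health` and `/api/infer` paths, and the `prompt` field are hypothetical placeholders, so check the project documentation for the real endpoints and payload shape.

```python
import json
import time
import urllib.error
import urllib.request
from typing import Callable

# Assumptions, NOT taken from the Inferenceable documentation:
BASE_URL = "http://localhost:3000"  # hypothetical host and port
HEALTH_PATH = "/health"             # hypothetical health-check path
INFER_PATH = "/api/infer"           # hypothetical inference path


def build_infer_request(prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request carrying a text prompt."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")  # hypothetical payload shape
    return urllib.request.Request(
        base_url + INFER_PATH,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def http_status(url: str, timeout: float = 2.0) -> int:
    """Return the HTTP status code of a GET request, or 0 if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 503.
        return err.code
    except (urllib.error.URLError, OSError):
        return 0


def wait_until_healthy(
    probe: Callable[[], int],
    attempts: int = 5,
    delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call probe() up to `attempts` times; return True as soon as it yields 200."""
    for attempt in range(attempts):
        if probe() == 200:
            return True
        if attempt < attempts - 1:
            sleep(delay_s)  # pause between attempts, but not after the last one
    return False
```

With a server running, `wait_until_healthy(lambda: http_status(BASE_URL + HEALTH_PATH))` can gate startup, after which `urllib.request.urlopen(build_infer_request("Hello"))` would send the prompt. Passing the probe and sleep functions as parameters keeps the retry logic testable without a live server.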

Is HyperMink AI Worth It? FAQ & Reviews

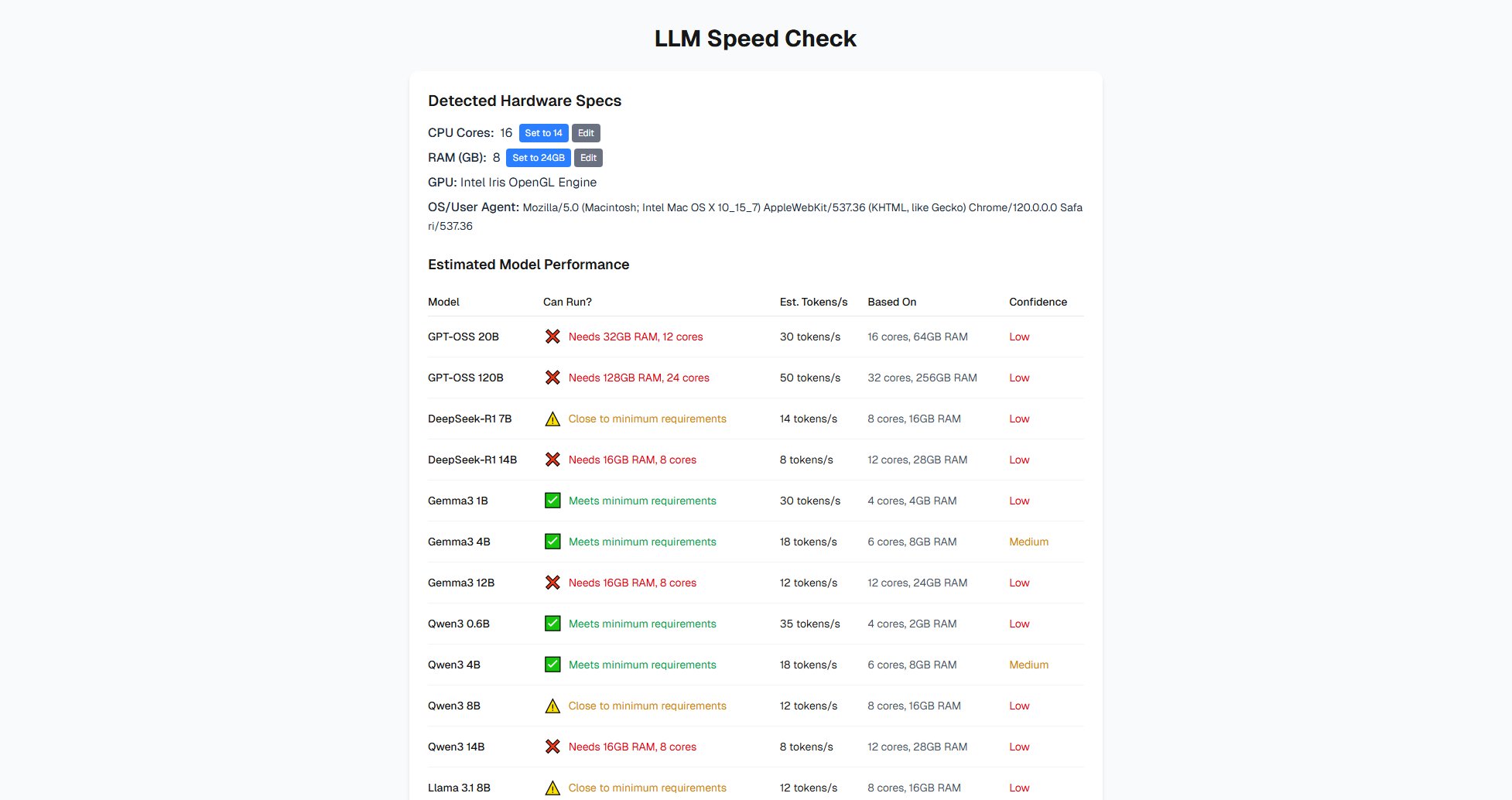

Hardware requirements vary with model size, but Inferenceable can run on most modern computers. Smaller models work well on consumer laptops, while larger models benefit from GPUs or high-RAM systems.
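To make the link between model size and hardware concrete, a back-of-the-envelope estimate multiplies the parameter count by the bits stored per weight. The sketch below is illustrative only: the function name and the example model sizes are assumptions, and the result covers the weights alone, so actual memory use is higher once the context cache and runtime overhead are included.

```python
def estimate_weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for model weights alone, in gigabytes (10^9 bytes)."""
    if n_params <= 0 or bits_per_weight <= 0:
        raise ValueError("n_params and bits_per_weight must be positive")
    total_bytes = n_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 1e9


# Illustrative model sizes and nominal precisions (assumed, not from the source).
examples = [
    ("7B parameters, 16-bit weights", 7e9, 16),
    ("7B parameters, 4-bit quantized", 7e9, 4),
    ("70B parameters, 4-bit quantized", 70e9, 4),
]

for label, n_params, bits in examples:
    print(f"{label}: about {estimate_weight_memory_gb(n_params, bits):.1f} GB")
```

By this estimate, a 7-billion-parameter model quantized to a nominal 4 bits per weight needs roughly 3.5 GB for its weights, which is within reach of a consumer laptop, while a 70-billion-parameter model at the same precision needs roughly 35 GB.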

Commercial use is permitted by the open-source license. You remain responsible for complying with the license of each AI model you deploy with the server.

Compared with commercial AI services, which offer convenience, Inferenceable provides full control and privacy. Performance depends on your hardware; in exchange, you keep complete data sovereignty.

Inferenceable supports models compatible with llama.cpp, which includes many popular open-weight LLM architectures. Check the documentation for specific format requirements.
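One quick sanity check before loading a model is to confirm that the file looks like a GGUF file, the model format that current llama.cpp releases load. The sketch below assumes your models are GGUF files in the usual little-endian layout. The helper name is hypothetical and is not part of Inferenceable. It inspects only the first eight bytes, so it catches wrong or truncated downloads but does not validate the model itself.

```python
import struct
from pathlib import Path
from typing import Optional, Union

GGUF_MAGIC = b"GGUF"  # the first four bytes of every GGUF file


def read_gguf_version(path: Union[str, Path]) -> Optional[int]:
    """Return the GGUF format version, or None if the file lacks the GGUF magic."""
    with Path(path).open("rb") as f:
        header = f.read(8)  # 4 magic bytes followed by a 32-bit version number
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # Assumes the common little-endian encoding of the version field.
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

A return value of `None` suggests the file is in another format (for example, an older GGML file or raw framework weights) and would need converting before llama.cpp can load it.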

As an open-source project, Inferenceable is supported through community channels. Organizations can set up their own support structures or contract developers familiar with the technology.