ScraperAPI

Scale web data collection with a powerful scraping API

What is ScraperAPI? Complete Overview

ScraperAPI is a web scraping service that helps businesses and developers collect data from millions of web sources. It handles common obstacles such as IP bans, CAPTCHAs, and JavaScript rendering, so large-scale collection does not require managing proxies or browsers yourself. The tool is aimed at eCommerce businesses, market research firms, SEO agencies, travel agencies, VCs, hedge funds, and AI/ML teams that need reliable, structured data to drive their strategies. Its features include async scraping, automated data pipelines, and specialized scrapers for specific domains.

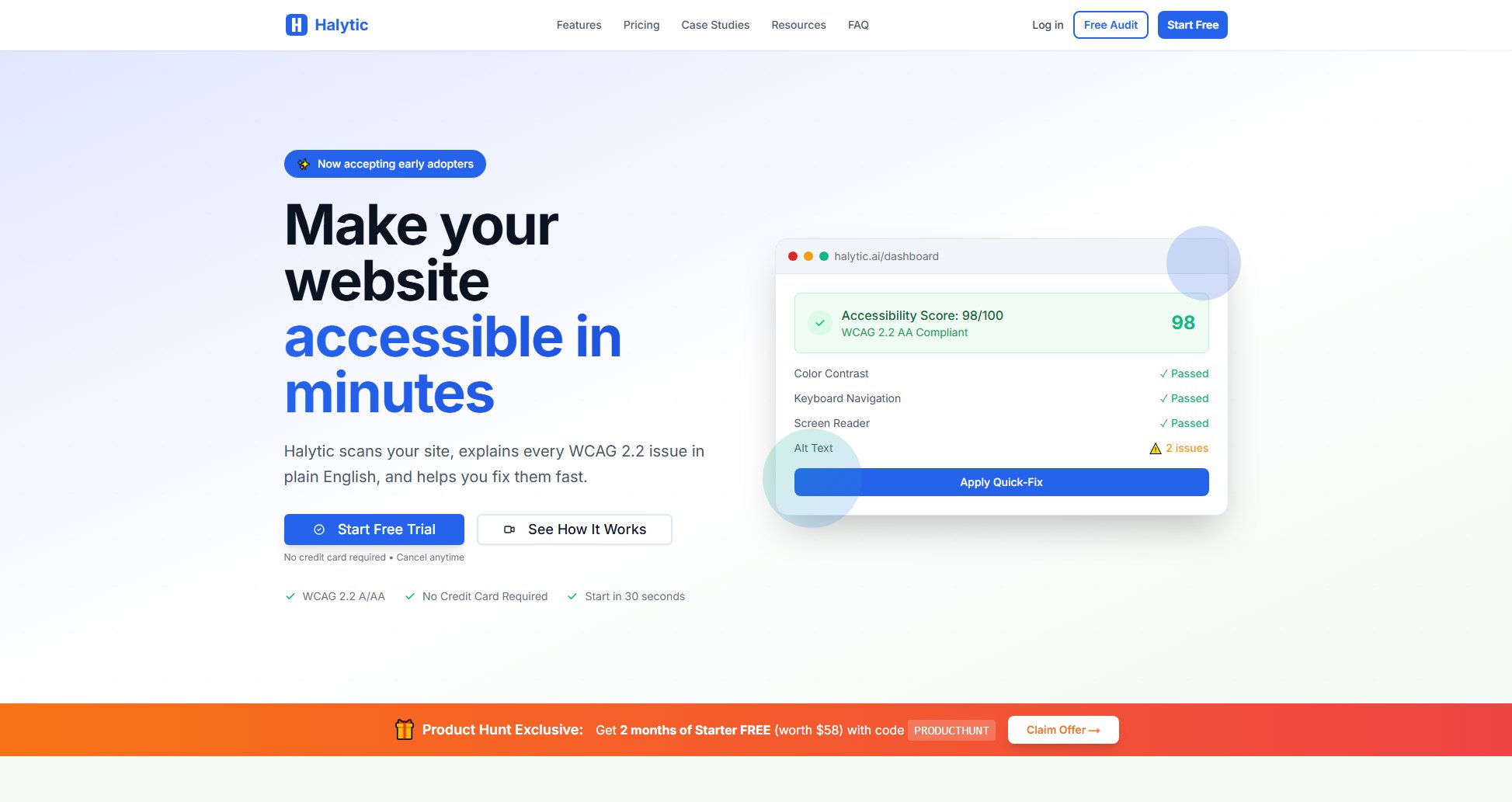

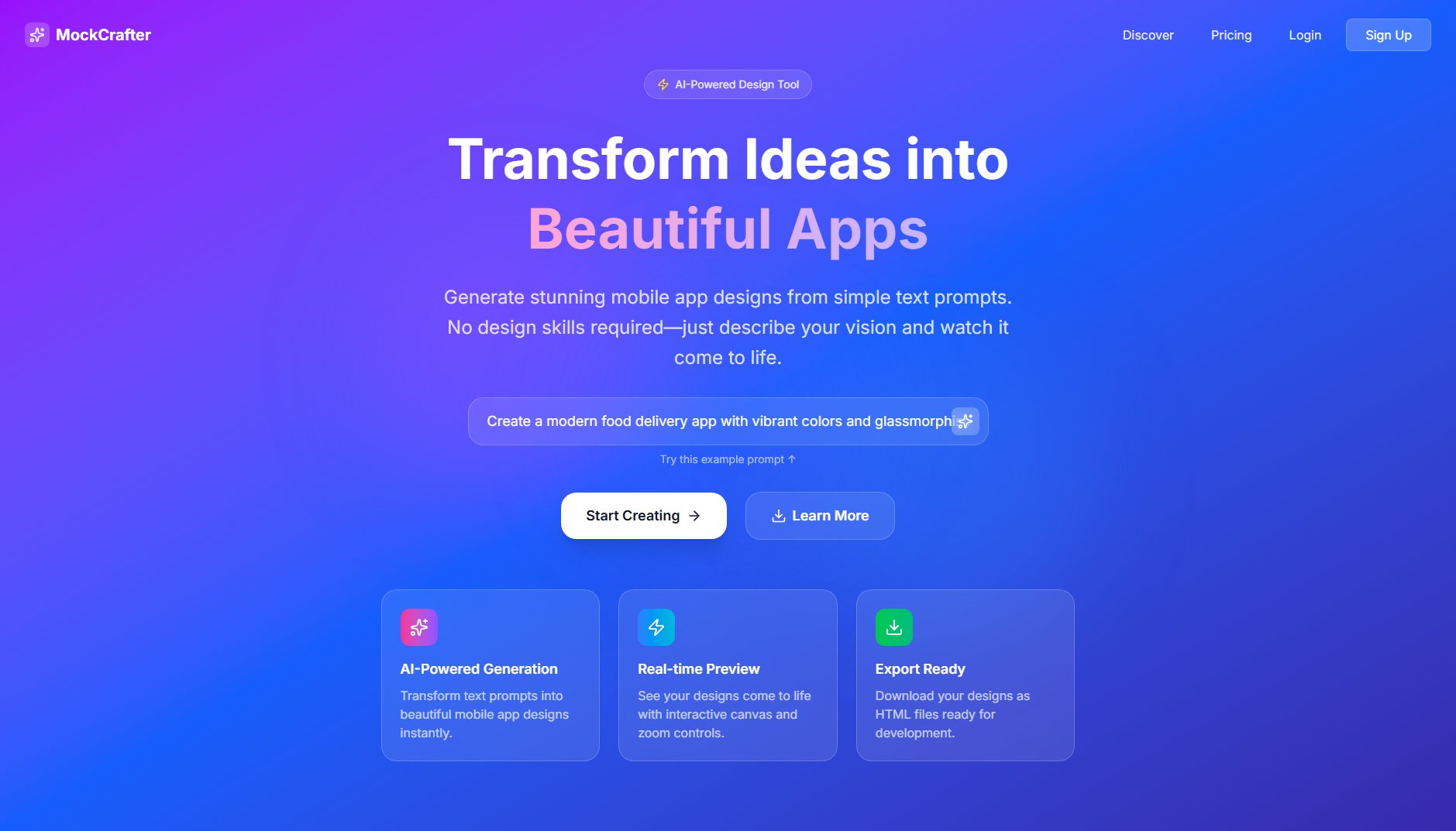

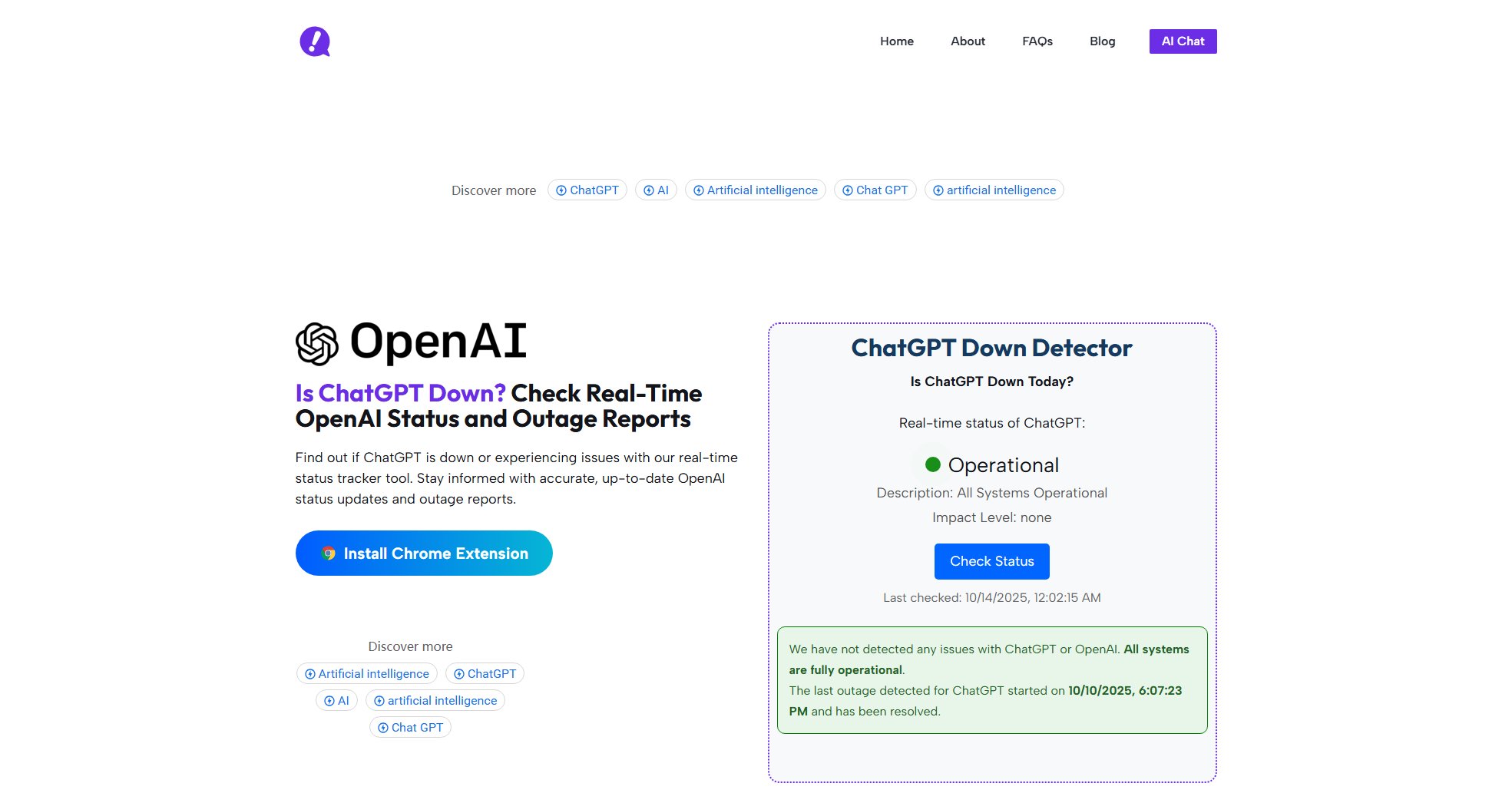

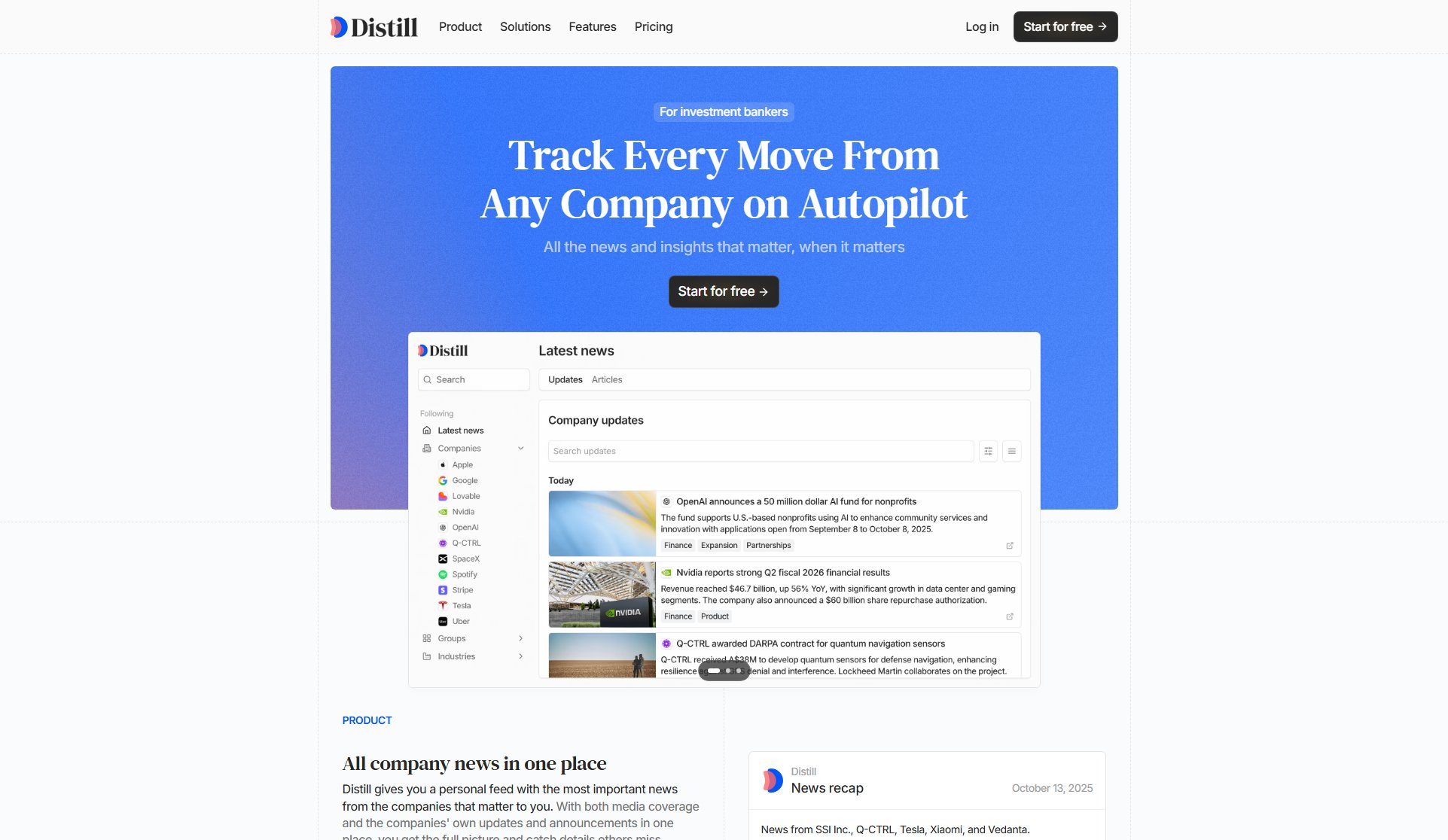

What Can ScraperAPI Do? Key Features

Async Scraper Service

Send millions of requests asynchronously without worrying about rate limits or IP bans. This feature allows you to scale your data collection effortlessly, handling large volumes of requests while maintaining high efficiency.
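As a rough illustration of the submit-then-poll pattern behind an asynchronous scraping job, here is a minimal Python sketch. The endpoint `https://async.scraperapi.com/jobs`, the `apiKey` and `url` payload fields, and the `status` and `statusUrl` response fields are assumptions that should be checked against the official ScraperAPI documentation; `run_async_job` and `http_json` are hypothetical helper names.

```python
import json
import time
import urllib.request
from typing import Callable, Optional

# Assumed job-submission endpoint; verify against the official documentation.
ASYNC_ENDPOINT = "https://async.scraperapi.com/jobs"


def http_json(url: str, payload: Optional[dict] = None) -> dict:
    """POST payload as JSON (or GET when payload is None) and decode the JSON reply."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def run_async_job(
    api_key: str,
    target_url: str,
    send: Callable[..., dict] = http_json,
    poll_seconds: float = 2.0,
    max_polls: int = 30,
) -> dict:
    """Submit one job, then poll its status URL until it reports 'finished'."""
    # Field names below ("apiKey", "url", "status", "statusUrl") are assumptions.
    job = send(ASYNC_ENDPOINT, {"apiKey": api_key, "url": target_url})
    for _ in range(max_polls):
        if job.get("status") == "finished":
            return job
        time.sleep(poll_seconds)
        job = send(job["statusUrl"])
    if job.get("status") == "finished":
        return job
    raise TimeoutError(f"job for {target_url} did not finish after {max_polls} polls")
```

The `send` parameter is injectable so the polling logic can be exercised without network access. For large batches, a webhook callback (if the service offers one) would avoid polling altogether.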

Structured Data

Get structured JSON data from in-demand domains with ease. ScraperAPI automatically parses and formats the scraped data, saving you time and effort in post-processing.

DataPipeline

Automate your data collection without writing a single line of code. DataPipeline is perfect for non-technical users who need regular, reliable data updates without manual intervention.

Scraping API

Collect data from millions of web sources with a simple API call. The Scraping API handles proxies, browsers, and CAPTCHAs, so you can focus on analyzing the data.
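To make the "simple API call" concrete, the sketch below builds the request URL for a single page. It assumes the endpoint `https://api.scraperapi.com/` with `api_key` and `url` query parameters, and treats any further options (such as `render`) as assumed names; confirm all of them in the official documentation. `build_request_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed endpoint; confirm against the official ScraperAPI documentation.
API_ENDPOINT = "https://api.scraperapi.com/"


def build_request_url(api_key: str, target_url: str, **options: str) -> str:
    """Return the GET URL that asks the service to fetch target_url."""
    params = {"api_key": api_key, "url": target_url}
    params.update(options)  # e.g. render="true" (assumed option name)
    # urlencode percent-encodes the target URL so its own query string survives.
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_request_url("YOUR_API_KEY", "https://example.com/products?page=2")
print(request_url)
```

Encoding the target URL matters: without it, the `?page=2` part of the target would be read as a parameter of the API call itself.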

Large-Scale Data Acquisition

Handle millions of requests without sacrificing efficiency. ScraperAPI's infrastructure is built to support high-volume scraping, ensuring you get the data you need when you need it.

SERP Data Collection

Collect search engine results page (SERP) data for any query in seconds. This feature is invaluable for SEO agencies and marketers looking to track rankings and optimize their strategies.
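Rank tracking on top of SERP data can be sketched as follows. The result shape used here (a list of dictionaries with a `link` field, in ranked order) is a hypothetical stand-in for whatever the SERP response actually contains, and the sample entries are illustrative, not real search results.

```python
from typing import Optional
from urllib.parse import urlparse


def find_rank(organic_results: list, domain: str) -> Optional[int]:
    """Return the 1-based position of the first result hosted on domain, or None."""
    for position, result in enumerate(organic_results, start=1):
        host = urlparse(result["link"]).hostname or ""
        # Match the domain itself or any of its subdomains.
        if host == domain or host.endswith("." + domain):
            return position
    return None


# Illustrative data only; field names are assumptions.
sample_results = [
    {"title": "Example A", "link": "https://www.example.org/guide"},
    {"title": "Example B", "link": "https://shop.example.com/widgets"},
    {"title": "Example C", "link": "https://example.net/"},
]

print(find_rank(sample_results, "example.com"))  # 2
print(find_rank(sample_results, "example.edu"))  # None
```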

Ecommerce Data Collection

Grow your eCommerce business with data you collect yourself. Scrape product listings, prices, and reviews to stay competitive and make informed decisions.

Real Estate Data Collection

Make smart investments by collecting property listing data on autopilot, 24/7. This feature provides real-time insights into market trends and opportunities.

Online Reputation Management

Monitor the web to identify minimum advertised price (MAP) violations and brand misrepresentation. Keep track of how your brand is being represented online and take action when necessary.
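Once listings have been scraped, a MAP check reduces to comparing each advertised price against the agreed minimum. The sketch below assumes a hypothetical listing shape with `seller` and `price` fields; the sample data is illustrative only.

```python
from decimal import Decimal


def find_map_violations(listings: list, map_price: Decimal) -> list:
    """Return the listings advertised strictly below the minimum advertised price."""
    return [item for item in listings if Decimal(item["price"]) < map_price]


# Illustrative listings; field names are assumptions, not a documented schema.
sample_listings = [
    {"seller": "Store A", "price": "49.99"},
    {"seller": "Store B", "price": "44.50"},
    {"seller": "Store C", "price": "52.00"},
]

violations = find_map_violations(sample_listings, Decimal("49.99"))
for item in violations:
    print(item["seller"], item["price"])  # Store B 44.50
```

`Decimal` is used instead of `float` so that a price exactly equal to the minimum is never flagged because of rounding.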

Best ScraperAPI Use Cases & Applications

Ecommerce Competitive Analysis

Ecommerce businesses can use ScraperAPI to monitor competitors' product listings, prices, and reviews. This data helps them adjust their strategies to stay competitive and improve profit margins.

Market Research

Market research firms can scrape data from various sources to understand consumer behavior, track trends, and predict shifts in demand. This enables them to provide actionable insights to their clients.

SEO Strategy

SEO agencies can collect SERP data to track keyword rankings, analyze competitors, and inform their optimization strategies. Collecting the results directly lets them verify the data behind each report.

Investment Research

VCs and hedge funds can scrape financial news, company data, and market trends to identify investment opportunities and optimize their portfolios.

AI Training Data

AI and ML teams can scale their training data collection by scraping relevant datasets from the web. Larger, more relevant datasets can shorten model development and improve accuracy.

How to Use ScraperAPI: Step-by-Step Guide

1. Sign up for a ScraperAPI account and obtain your API key. This key authenticates your requests and tracks your usage.

2. Choose the type of scraping you need: the standard Scraping API, async scraping, structured data, or DataPipeline. Each option suits different use cases and levels of technical skill.

3. Configure your scraping parameters. Specify the URLs, data fields, and any other requirements for your data collection.

4. Send your request via the API, or use DataPipeline for automated scraping. ScraperAPI handles the rest, including proxies and CAPTCHAs.

5. Receive your data in structured JSON format. You can then analyze, store, or integrate it into your workflows as needed.
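The steps above can be sketched end to end in Python using only the standard library. This is a minimal sketch under assumptions, not the official client: the endpoint, the `api_key` and `url` parameters, and the `autoparse` option are assumed names to verify in the ScraperAPI documentation, and `scrape_json` and `default_fetch` are hypothetical helpers.

```python
import json
import time
import urllib.error
import urllib.request
from typing import Callable
from urllib.parse import urlencode

API_ENDPOINT = "https://api.scraperapi.com/"  # assumed endpoint


def default_fetch(url: str) -> str:
    """Perform a plain GET and return the response body as text."""
    with urllib.request.urlopen(url, timeout=70) as response:
        return response.read().decode("utf-8")


def scrape_json(
    target_url: str,
    api_key: str,
    fetch: Callable[[str], str] = default_fetch,
    retries: int = 3,
    backoff_seconds: float = 1.0,
) -> dict:
    """Request target_url through the API and decode the JSON body, retrying on failure."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    # "autoparse" is an assumed option name for requesting structured output.
    query = urlencode({"api_key": api_key, "url": target_url, "autoparse": "true"})
    request_url = f"{API_ENDPOINT}?{query}"
    for attempt in range(retries):
        try:
            return json.loads(fetch(request_url))
        except (urllib.error.URLError, json.JSONDecodeError):
            if attempt == retries - 1:
                raise
            # Exponential backoff: 1x, 2x, 4x ... the base delay.
            time.sleep(backoff_seconds * 2 ** attempt)
    raise RuntimeError("unreachable")
```

A call such as `scrape_json("https://example.com/item/1", api_key)` would then return the decoded JSON. Reading the key from an environment variable rather than hard-coding it keeps it out of source control.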

ScraperAPI Pros and Cons: Honest Review

Pros

Considerations

Is ScraperAPI Worth It? FAQ & Reviews

An API credit is a unit of measurement for your usage of ScraperAPI. Each API call consumes a certain number of credits based on the complexity of the request.
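Because credit consumption depends on request complexity, a usage estimate is a weighted sum: requests of each type multiplied by that type's credit cost. The per-request costs below are hypothetical placeholders, not ScraperAPI's actual pricing; substitute the figures from your plan.

```python
# Hypothetical credit costs per request type (placeholders, not real pricing).
CREDIT_COST = {"standard": 1, "javascript_rendered": 10}


def estimate_credits(planned_requests: dict) -> int:
    """Sum request counts weighted by the credit cost of each request type."""
    return sum(CREDIT_COST[kind] * count for kind, count in planned_requests.items())


monthly_credits = estimate_credits({"standard": 50_000, "javascript_rendered": 2_000})
print(monthly_credits)  # 50_000 * 1 + 2_000 * 10 = 70000
```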

ScraperAPI can be used for commercial purposes. However, you should ensure compliance with the terms of service of the websites you scrape.

ScraperAPI handles CAPTCHAs automatically, allowing you to scrape websites without manual intervention.

The number of requests you can make depends on your plan. Free plans have lower limits, while paid plans offer higher or unlimited requests.

Data is delivered in structured JSON format, making it easy to integrate into your applications or workflows.