Release.ai

Deploy high-performance AI models with enterprise-grade security

What is Release.ai? Complete Overview

Release.ai is a platform for deploying and managing AI models in development workflows. It advertises inference latency under 100 ms, automatic scaling, and enterprise-grade security features. The platform is aimed at developers, data scientists, and enterprises that want to integrate AI models into their applications without managing infrastructure themselves. Its infrastructure is optimized for several model types, including LLMs and computer vision models. The platform also provides SDKs and APIs for integration, real-time monitoring, and support from ML professionals.

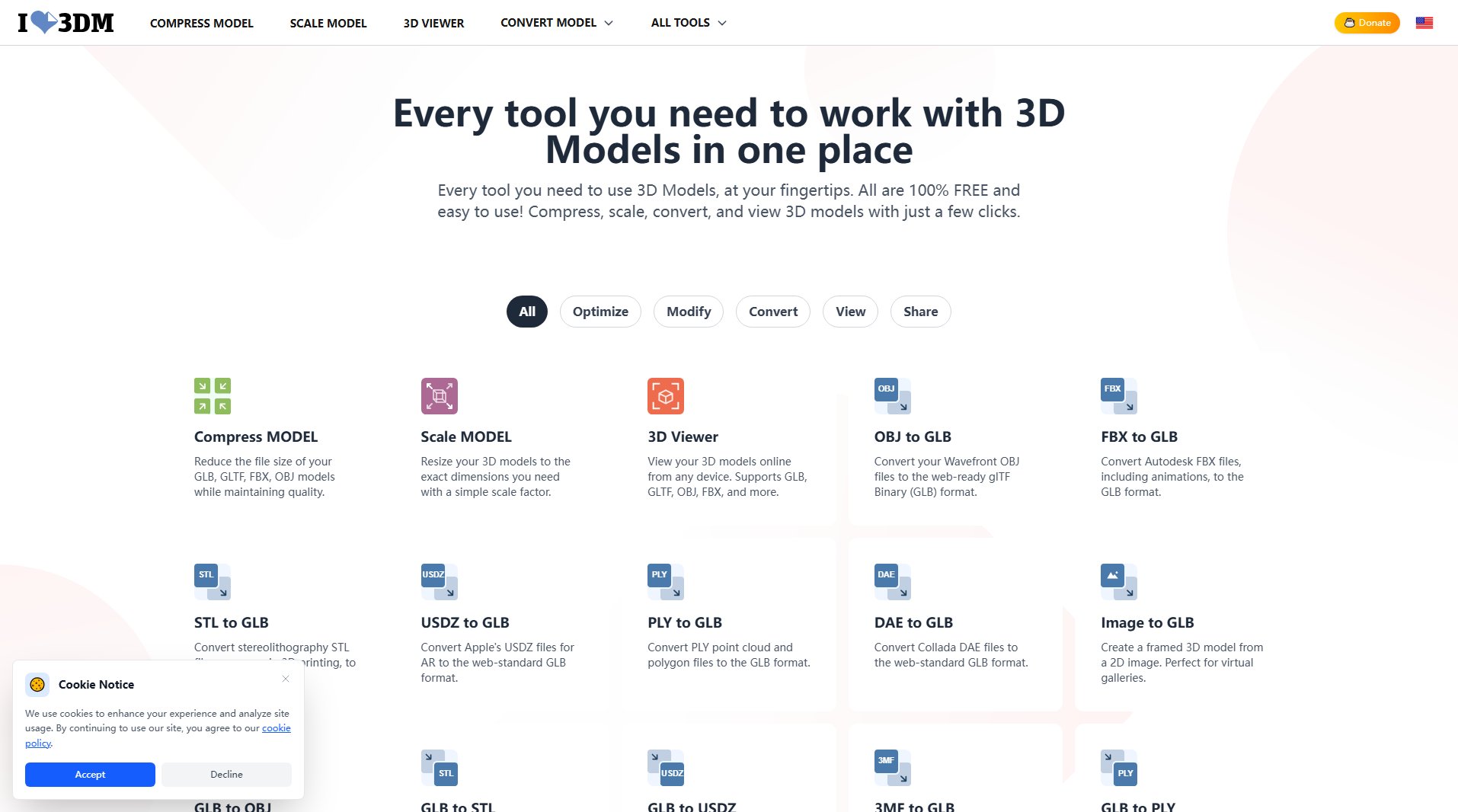

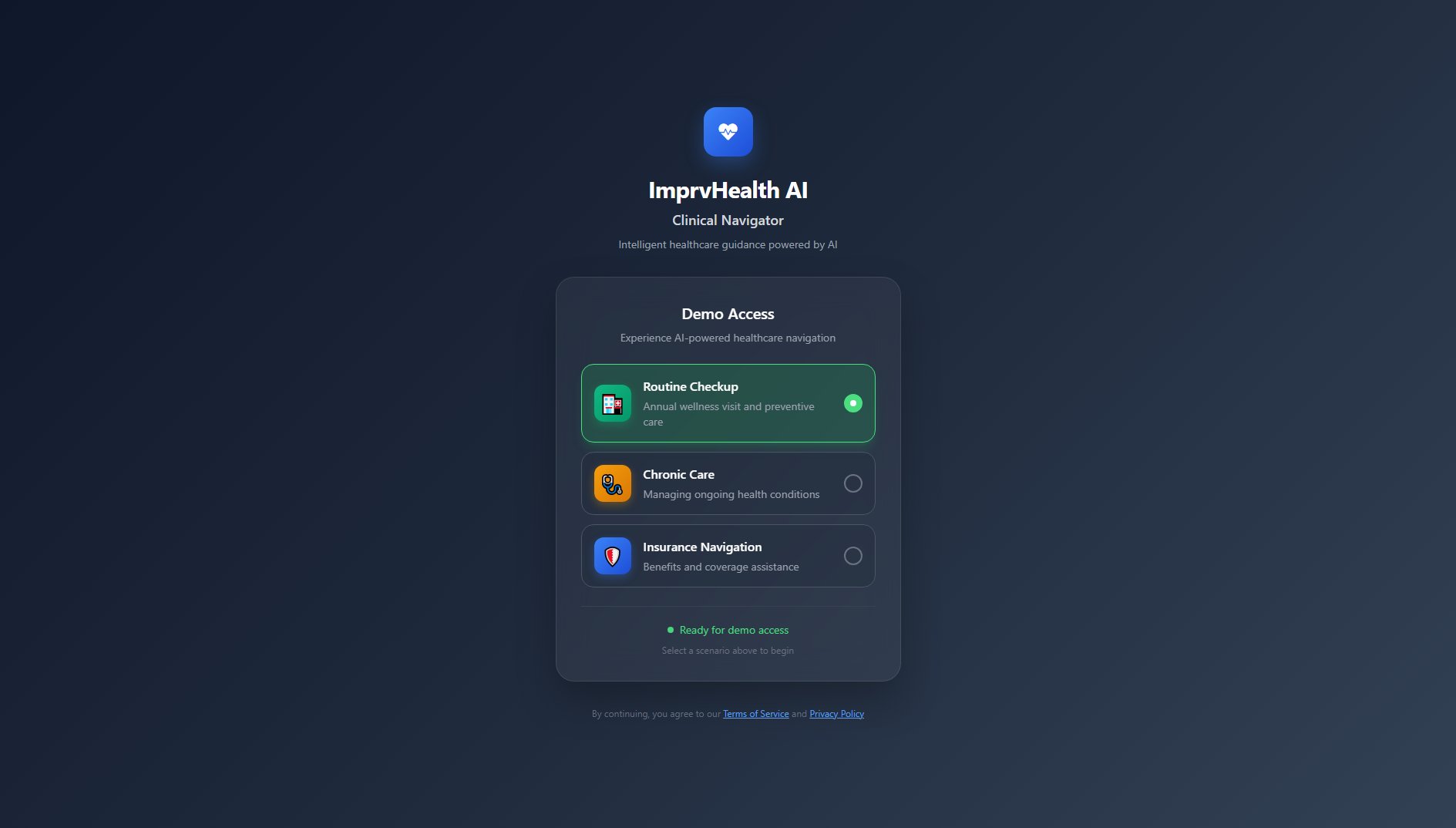

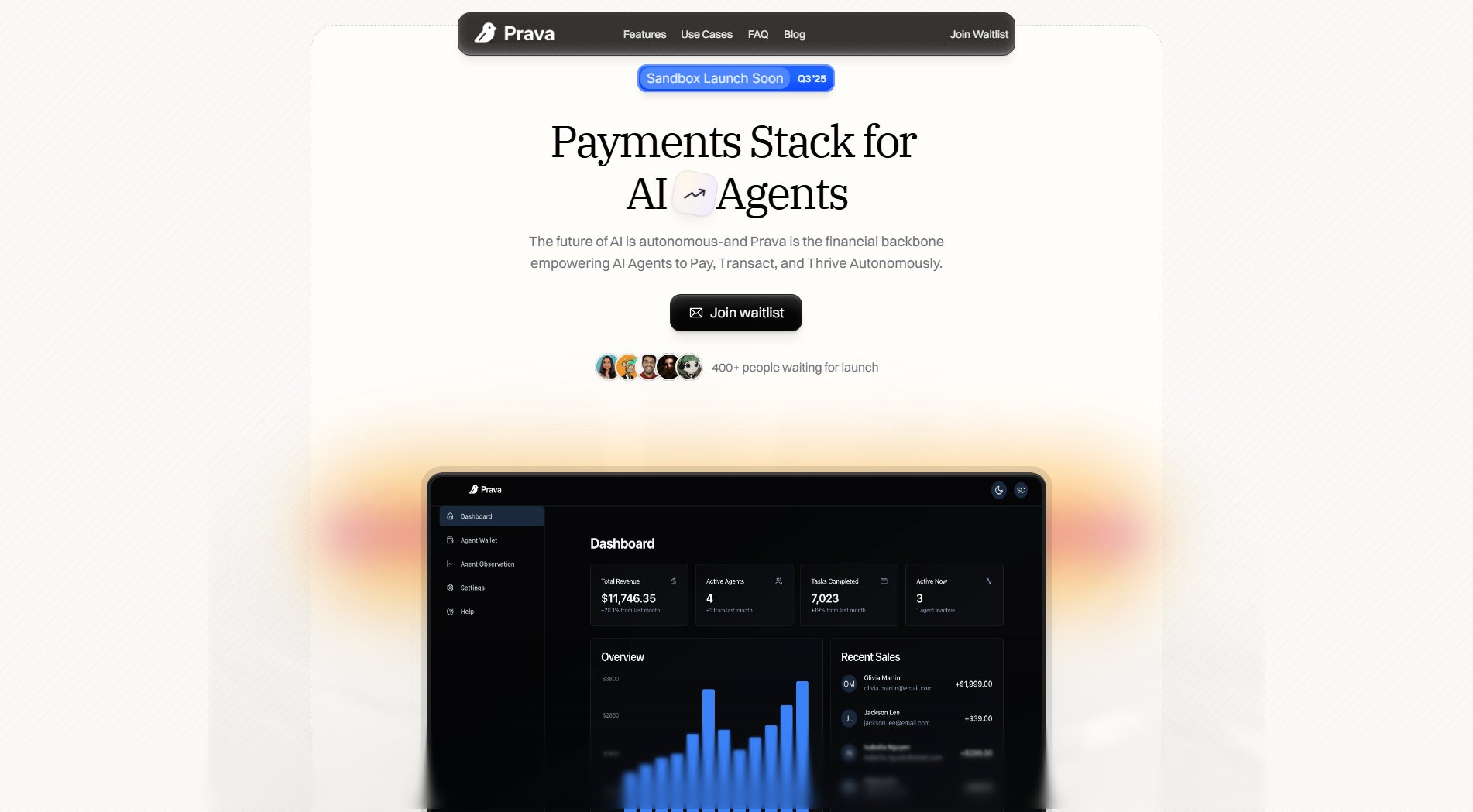

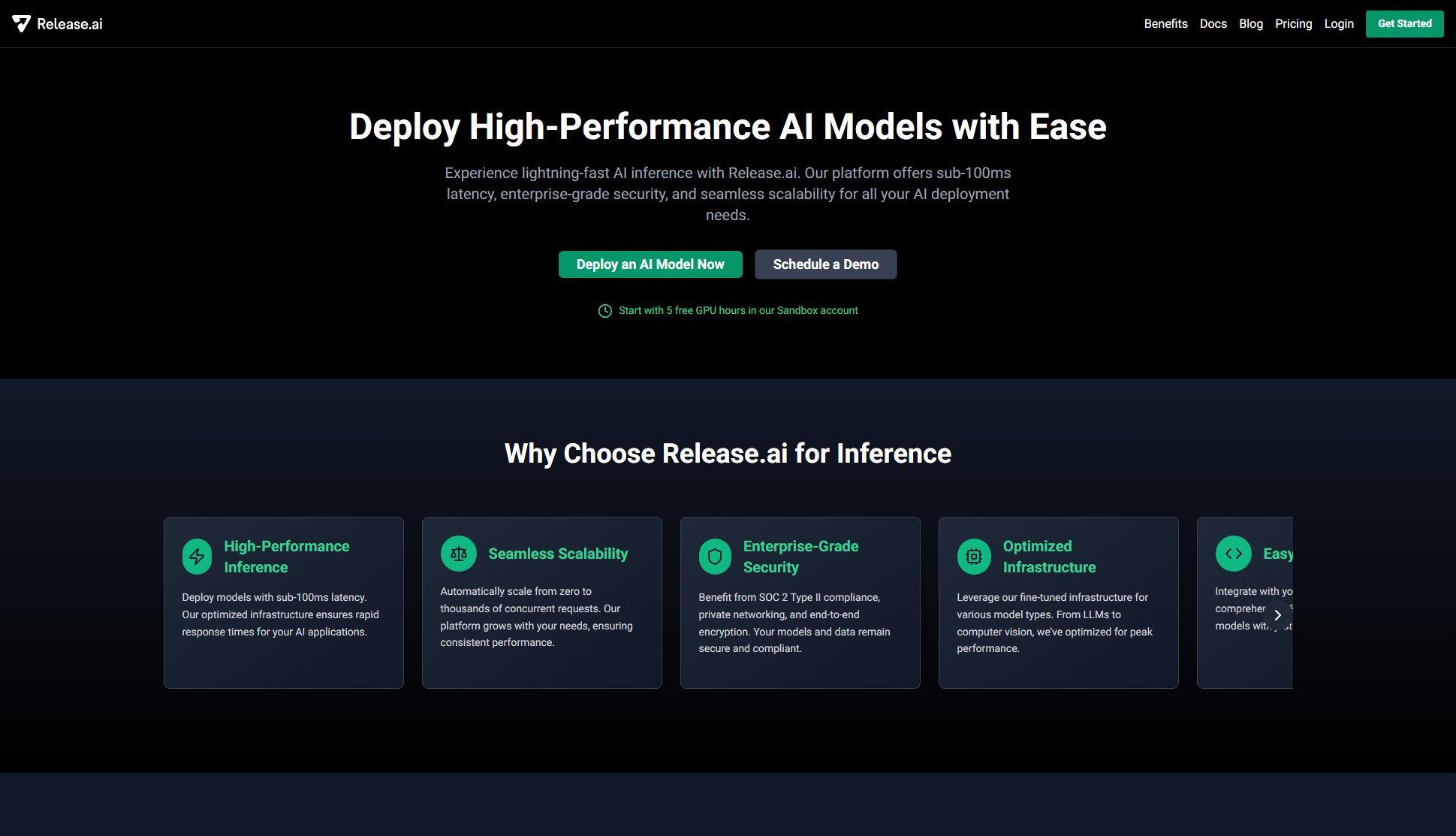

Release.ai Interface & Screenshots

Official screenshots of the Release.ai tool interface.

What Can Release.ai Do? Key Features

High-Performance Inference

Release.ai advertises sub-100 ms latency for AI model inference, which keeps response times short for interactive applications. The platform's infrastructure is tuned for several model types, from large language models (LLMs) to computer vision models, to keep performance consistent across them.

Seamless Scalability

The platform automatically scales from zero to thousands of concurrent requests, adapting to your needs without manual intervention. This ensures consistent performance even during peak usage, making it ideal for both small projects and large-scale enterprise applications.
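The scale-from-zero behavior described above can be pictured with a small sizing rule. This is only an illustrative sketch, not Release.ai's actual autoscaling logic: the function name `replicas_needed`, the per-replica capacity, and the replica cap are all hypothetical values chosen for the example.

```python
import math


def replicas_needed(concurrent_requests: int,
                    per_replica_capacity: int,
                    max_replicas: int) -> int:
    """Return how many model replicas to run for the current load.

    All parameters are hypothetical tuning knobs, not platform settings.
    """
    if per_replica_capacity <= 0:
        raise ValueError("per_replica_capacity must be positive")
    if concurrent_requests <= 0:
        return 0  # no traffic: scale to zero
    # Round up so a partially loaded replica still gets provisioned.
    needed = math.ceil(concurrent_requests / per_replica_capacity)
    # Never exceed the configured ceiling.
    return min(needed, max_replicas)


if __name__ == "__main__":
    for load in (0, 1, 250, 5000):
        print(load, replicas_needed(load, per_replica_capacity=50, max_replicas=64))
```

With these assumed numbers, an idle deployment runs no replicas, and a burst of 5,000 concurrent requests is capped at the 64-replica ceiling rather than growing without bound.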

Enterprise-Grade Security

Release.ai is SOC 2 Type II compliant, offering private networking and end-to-end encryption to keep your models and data secure. These features make it suitable for industries with strict compliance requirements, such as healthcare and finance.

Optimized Infrastructure

The platform is fine-tuned for various AI model types, ensuring optimal performance for LLMs, computer vision, and other specialized models. This reduces the need for manual optimization and allows developers to focus on building applications.

Easy Integration

Release.ai provides comprehensive SDKs and APIs, allowing developers to deploy models with just a few lines of code. This simplifies the integration process and reduces the time needed to get models up and running in production environments.
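To give a sense of what "a few lines of code" can look like, here is a minimal sketch that builds an HTTP request for a deployed model using only the Python standard library. It does not use the Release.ai SDK, and it is not taken from the platform's documentation: the endpoint URL, the bearer-token header, and the `model` and `prompt` payload fields are assumptions made for illustration. Check the official docs for the real endpoint and request schema.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute the endpoint and key of your own deployment.
ENDPOINT = "https://example.invalid/v1/generate"
API_KEY = "YOUR_API_KEY"


def build_request(prompt: str, model: str = "llama3.3") -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a hosted model."""
    # The payload shape is an assumption, not a documented schema.
    payload = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=payload,
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("Summarize this support ticket in one sentence.")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req, timeout=10)
```

Building the request separately from sending it makes the integration easy to unit test without network access.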

Reliable Monitoring

The platform offers real-time monitoring and detailed analytics, helping you track your model's performance and quickly identify and resolve issues. This ensures high availability and reliability for your AI applications.
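If you also log request latencies on the client side, a short script can check them against the sub-100 ms figure quoted above. The sketch below is independent of Release.ai's dashboard; the function name `latency_summary` and the sample values are made up for the example.

```python
import statistics


def latency_summary(samples_ms, target_ms=100.0):
    """Summarize latency samples (in milliseconds) against a target."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two latency samples")
    # quantiles(n=100) returns 99 cut points; index k-1 is the k-th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    under = sum(1 for s in samples_ms if s < target_ms)
    return {
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
        "share_under_target": under / len(samples_ms),
    }


if __name__ == "__main__":
    # Made-up measurements for illustration only.
    samples = [42.0, 55.5, 61.2, 70.8, 88.1, 93.4, 97.0, 104.6, 130.9, 240.3]
    for name, value in latency_summary(samples).items():
        print(f"{name}: {value:.2f}")
```

Tail percentiles such as p95 and p99 are usually more informative than the average, because a few slow requests can hide behind a healthy mean.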

Cost-Effective Pricing

Release.ai offers a pay-as-you-go pricing model, ensuring you only pay for the resources you use. This scalable approach provides excellent value for both small projects and large-scale deployments.
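A quick back-of-the-envelope calculation shows how usage-based billing adds up. The hourly rate below is a hypothetical placeholder, since this page does not list prices, and treating the 5 free Sandbox GPU hours mentioned on this page as a one-time credit is an assumption about how billing works.

```python
def estimate_cost(gpu_hours_used: float,
                  hourly_rate: float,
                  free_hours: float = 5.0) -> float:
    """Estimate a pay-as-you-go bill.

    hourly_rate is a hypothetical figure; free_hours assumes the Sandbox
    allowance is applied as a one-time credit.
    """
    if gpu_hours_used < 0 or hourly_rate < 0 or free_hours < 0:
        raise ValueError("inputs must be non-negative")
    # Only usage beyond the free allowance is billed.
    billable_hours = max(0.0, gpu_hours_used - free_hours)
    return round(billable_hours * hourly_rate, 2)


if __name__ == "__main__":
    # Hypothetical rate of 2.00 per GPU hour.
    print(estimate_cost(3.0, hourly_rate=2.0))
    print(estimate_cost(12.5, hourly_rate=2.0))
```

Under these assumptions, 3 GPU hours stay inside the free allowance, while 12.5 GPU hours leave 7.5 billable hours.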

Expert Support

The platform includes access to a team of ML experts who can help optimize your models and troubleshoot any issues. This support ensures you get the most out of your AI deployments.

Best Release.ai Use Cases & Applications

Natural Language Processing (NLP)

Deploy LLMs like deepseek-r1 or llama3.3 for tasks such as text generation, translation, and sentiment analysis. The platform's low latency and scalability make it ideal for real-time NLP applications.

Computer Vision

Use vision models like llama3.2-vision for image recognition, object detection, and other computer vision tasks. The platform's optimized infrastructure ensures high performance for these resource-intensive applications.

Embeddings and Search

Leverage embedding models like granite-embedding or snowflake-arctic-embed2 for semantic search and recommendation systems. These models are fine-tuned for high accuracy and efficiency.
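Semantic search over embeddings usually comes down to ranking documents by vector similarity. The sketch below shows that ranking step with cosine similarity. The three-dimensional vectors and document IDs are toy values invented for the example; in practice the vectors would come from an embedding model and have hundreds of dimensions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, index, top_k=2):
    """Return the IDs of the top_k documents most similar to the query."""
    scored = [(cosine(query_vec, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:top_k]]


if __name__ == "__main__":
    # Toy embeddings for illustration only.
    index = {
        "refund-policy": [0.9, 0.1, 0.0],
        "shipping-times": [0.1, 0.9, 0.1],
        "gpu-pricing": [0.0, 0.2, 0.9],
    }
    print(search([0.8, 0.2, 0.1], index))  # ['refund-policy', 'shipping-times']
```

A linear scan like this is fine for small collections; larger indexes typically use an approximate nearest-neighbor library instead.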

Code Generation and Assistance

Deploy models like opencoder or athene-v2 for code completion, bug fixing, and other developer tools. The platform's easy integration makes it simple to add AI-powered features to your IDE or development workflow.

Multilingual Applications

Use models like aya-expanse or sailor2 for applications requiring support for multiple languages. These models are trained to perform well across diverse linguistic contexts.

How to Use Release.ai: Step-by-Step Guide

1. Sign up for a Release.ai account and log in to the platform. New users can start with 5 free GPU hours in the Sandbox account to explore the platform's capabilities.

2. Browse the model library and select the AI model that fits your needs. The library ranges from LLMs like deepseek-r1 and llama3.3 to specialized models for vision and embeddings.

3. Deploy the selected model with a few clicks. Pre-configured environments keep deployment time short, typically under 5 minutes.

4. Integrate the deployed model into your application using the provided SDKs and APIs. The platform's documentation and support resources cover this process.

5. Monitor the model's performance in real time using the platform's analytics dashboard, and adjust settings as needed to optimize performance.

6. Scale your deployment as your needs grow. The platform handles scaling automatically, keeping performance consistent during high traffic.

Release.ai Pros and Cons: Honest Review

Pros

Considerations

Is Release.ai Worth It? FAQ & Reviews

Release.ai offers a wide range of models, including LLMs (e.g., deepseek-r1, llama3.3), vision models (e.g., llama3.2-vision), embeddings (e.g., granite-embedding), and specialized models for coding and multilingual applications. The platform regularly updates its model library with state-of-the-art options.

Models can typically be deployed in under 5 minutes thanks to the platform's pre-configured environments and automated infrastructure management. This rapid deployment time allows you to focus on building applications rather than managing infrastructure.

Release.ai is SOC 2 Type II compliant and offers enterprise-grade security features, including private networking and end-to-end encryption. These features make it suitable for industries with strict compliance requirements.

The platform scales automatically from zero to thousands of concurrent requests, keeping performance consistent during peak usage. This eliminates the need for manual scaling configuration.

Release.ai offers various support levels, from community resources for free users to 24/7 expert support for enterprise customers. The platform also provides comprehensive documentation and SDKs to help developers integrate models easily.

Release.ai uses a pay-as-you-go pricing model, ensuring you only pay for the resources you use. The platform offers a free Sandbox tier with 5 GPU hours, as well as customizable plans for larger deployments.

Release.ai supports the deployment of custom models. The platform's optimized infrastructure and expert support can help you achieve high performance with your proprietary models.

Release.ai provides SDKs and APIs that are compatible with popular programming languages, including Python and JavaScript, among others. The platform's documentation includes examples and guides for various languages.