Qalidad

AI-powered no-code web testing & QA automation

What is Qalidad? Complete Overview

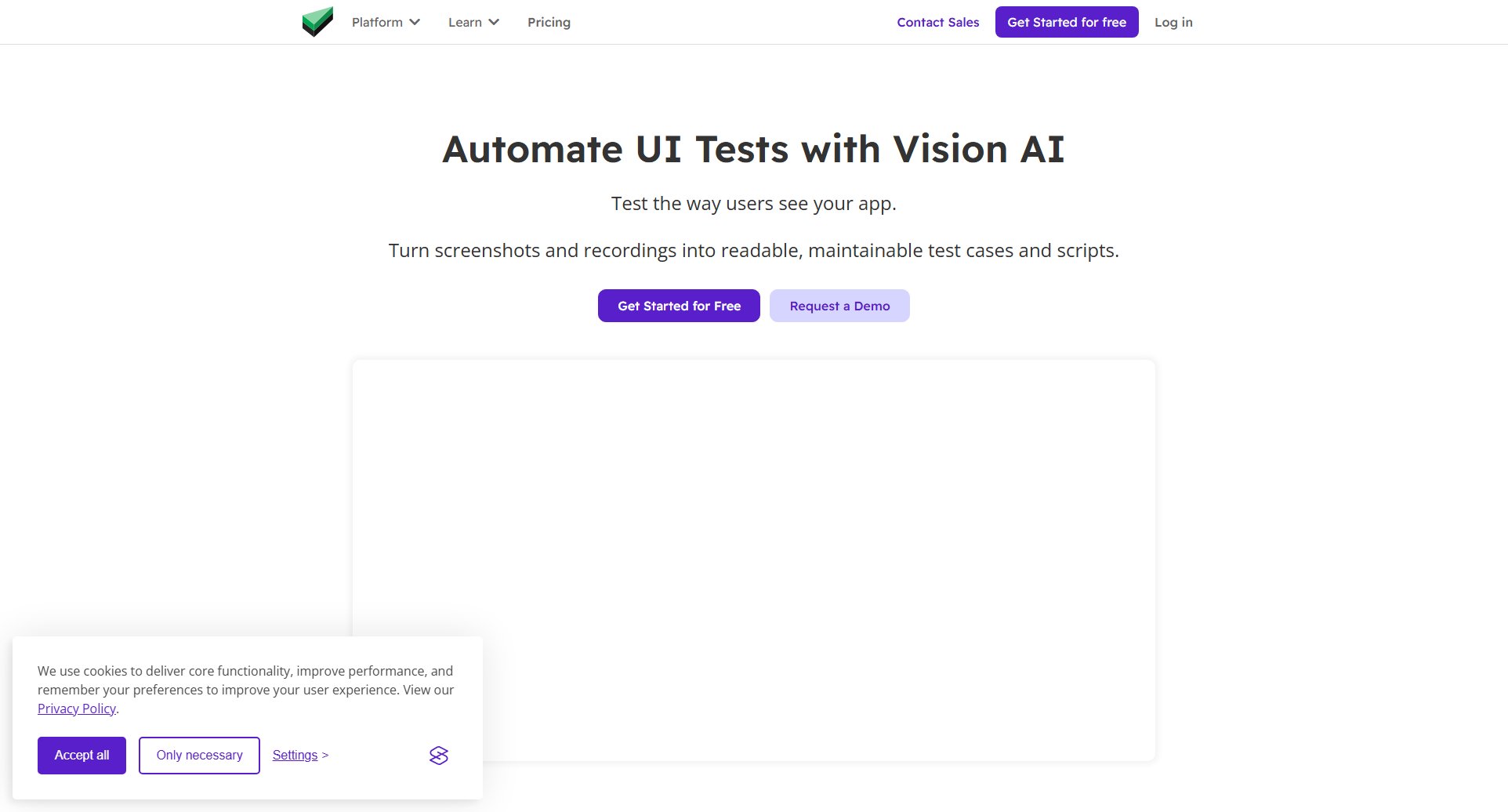

Qalidad is an AI-powered web testing and QA automation platform. Users describe what they want to test in plain English, and the platform uses natural language processing to generate test cases from that description. Instead of hand-written test scripts, AI agents navigate the website as a real user would, clicking buttons and filling in forms. Qalidad is aimed at development teams of any size that want continuous testing without the complexity of traditional automation tools. Features such as visual test reporting, scenario management, and scheduled test runs are available without coding expertise or dedicated QA resources.

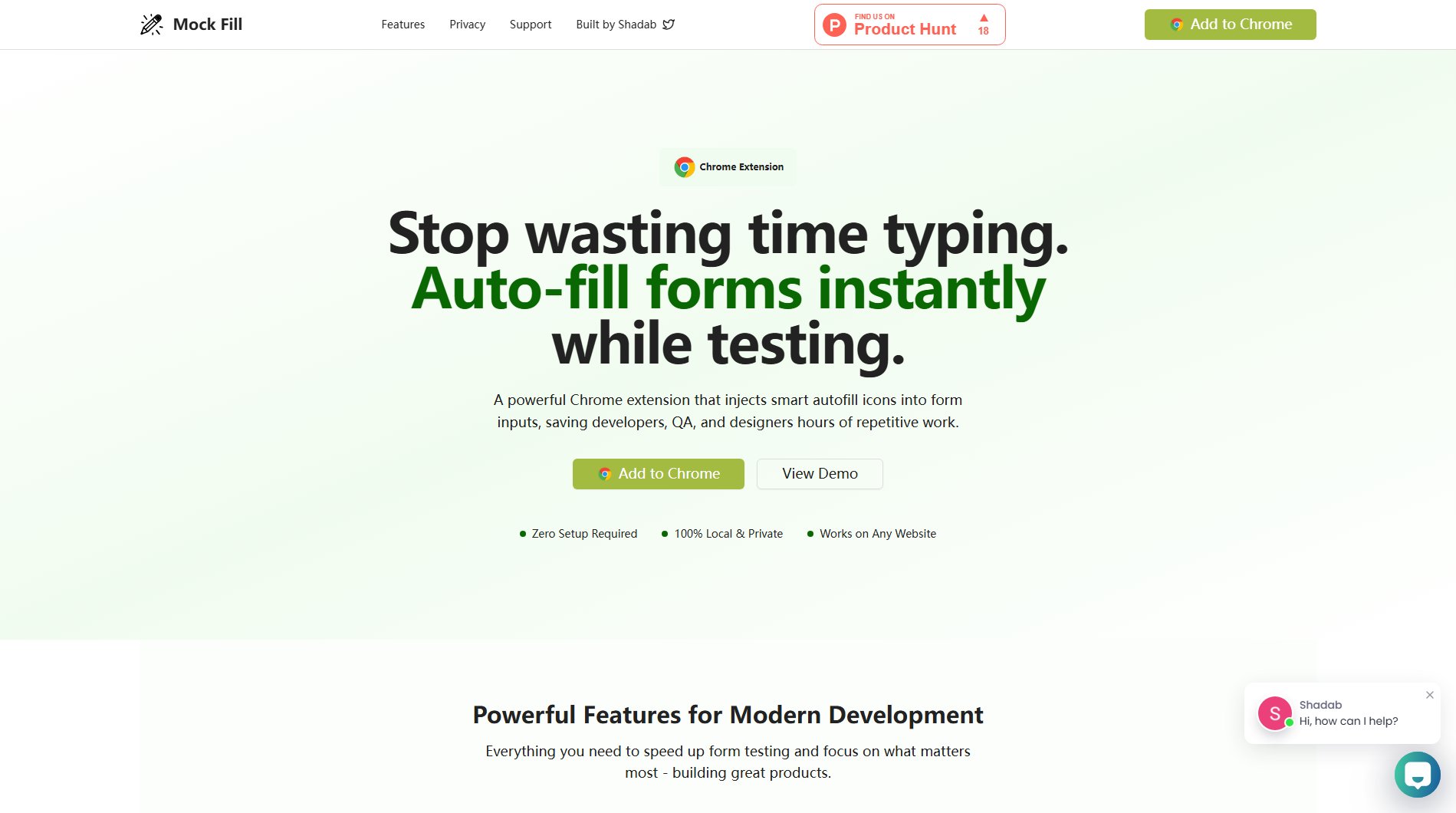

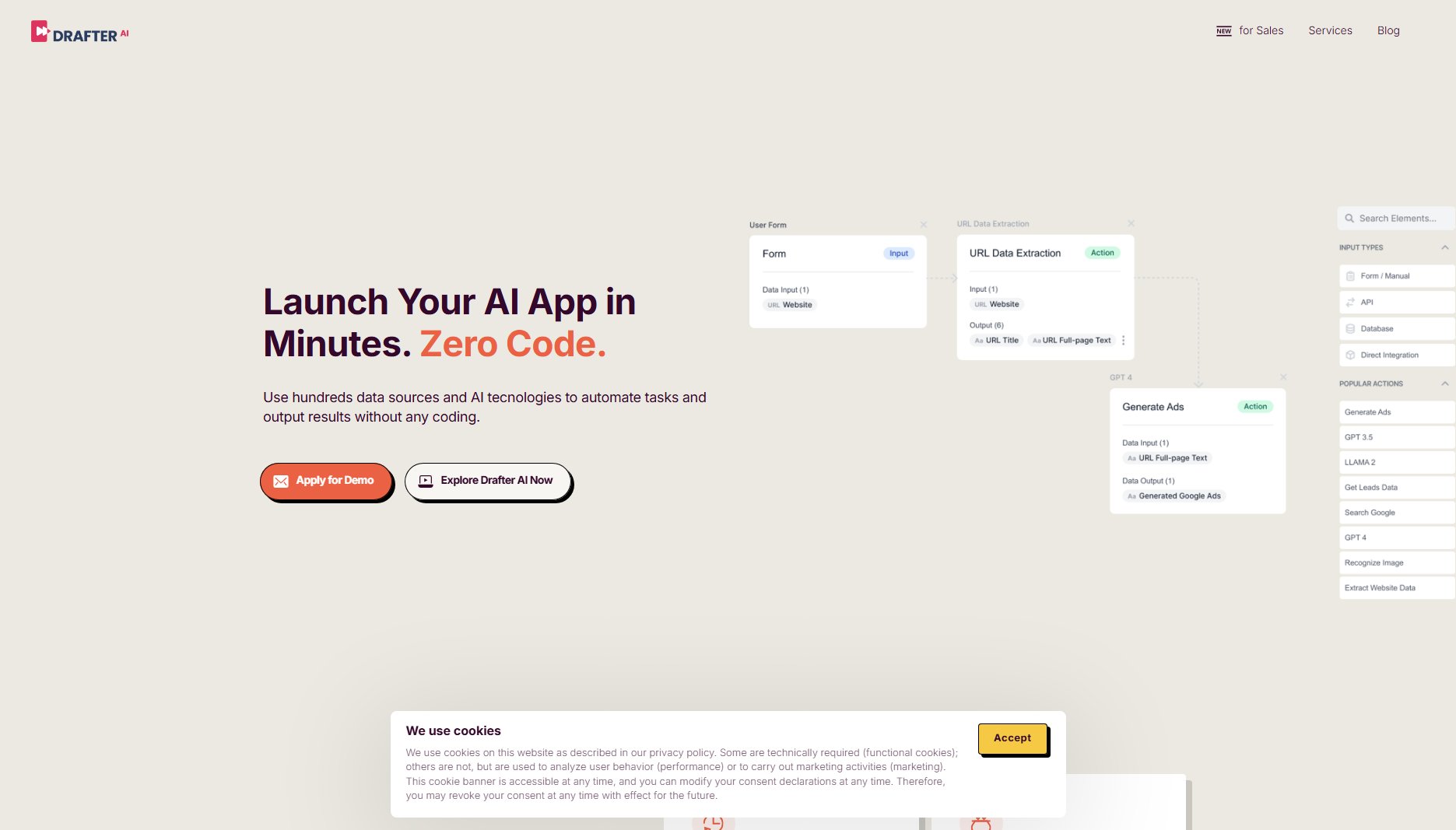

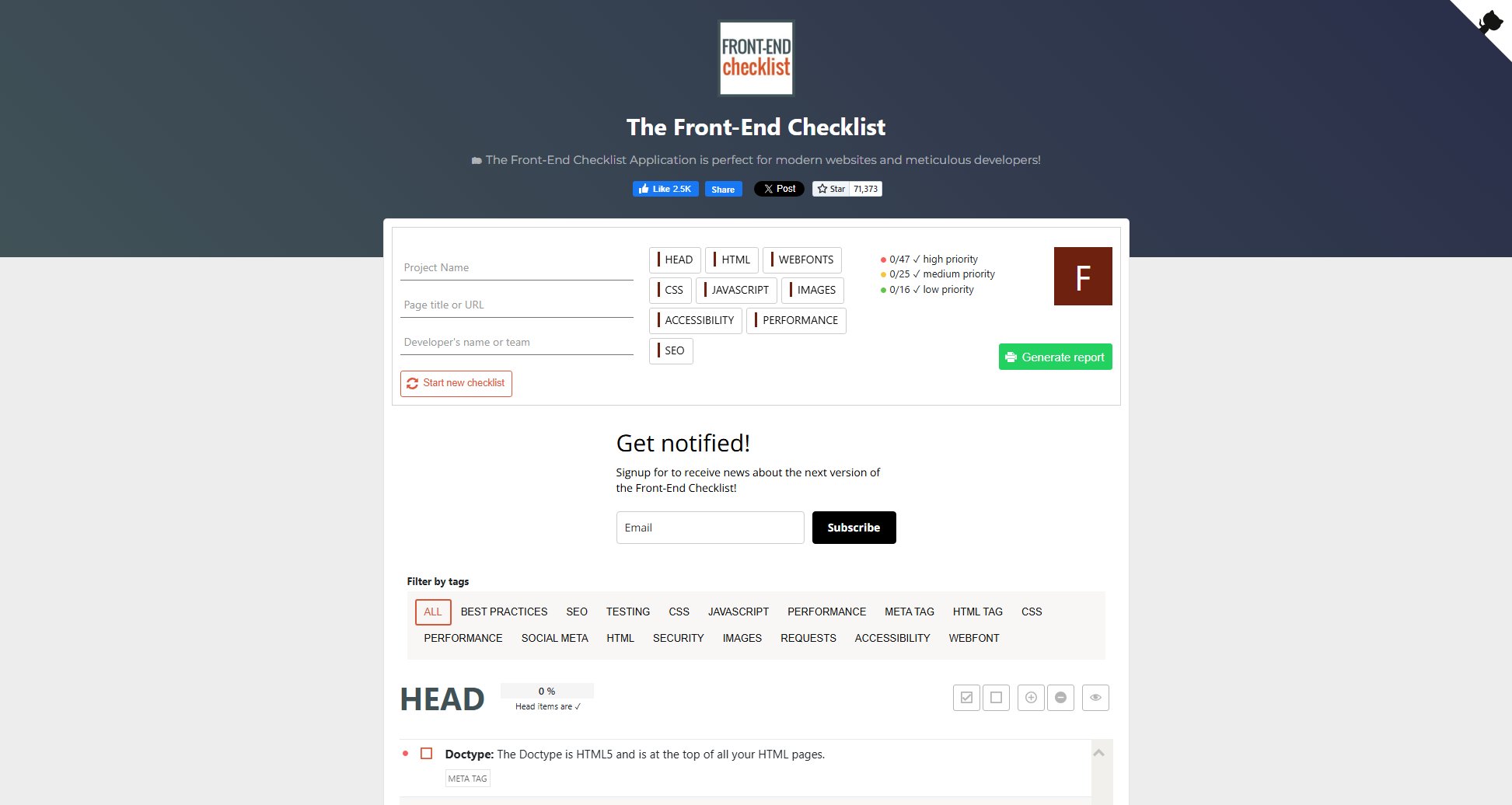

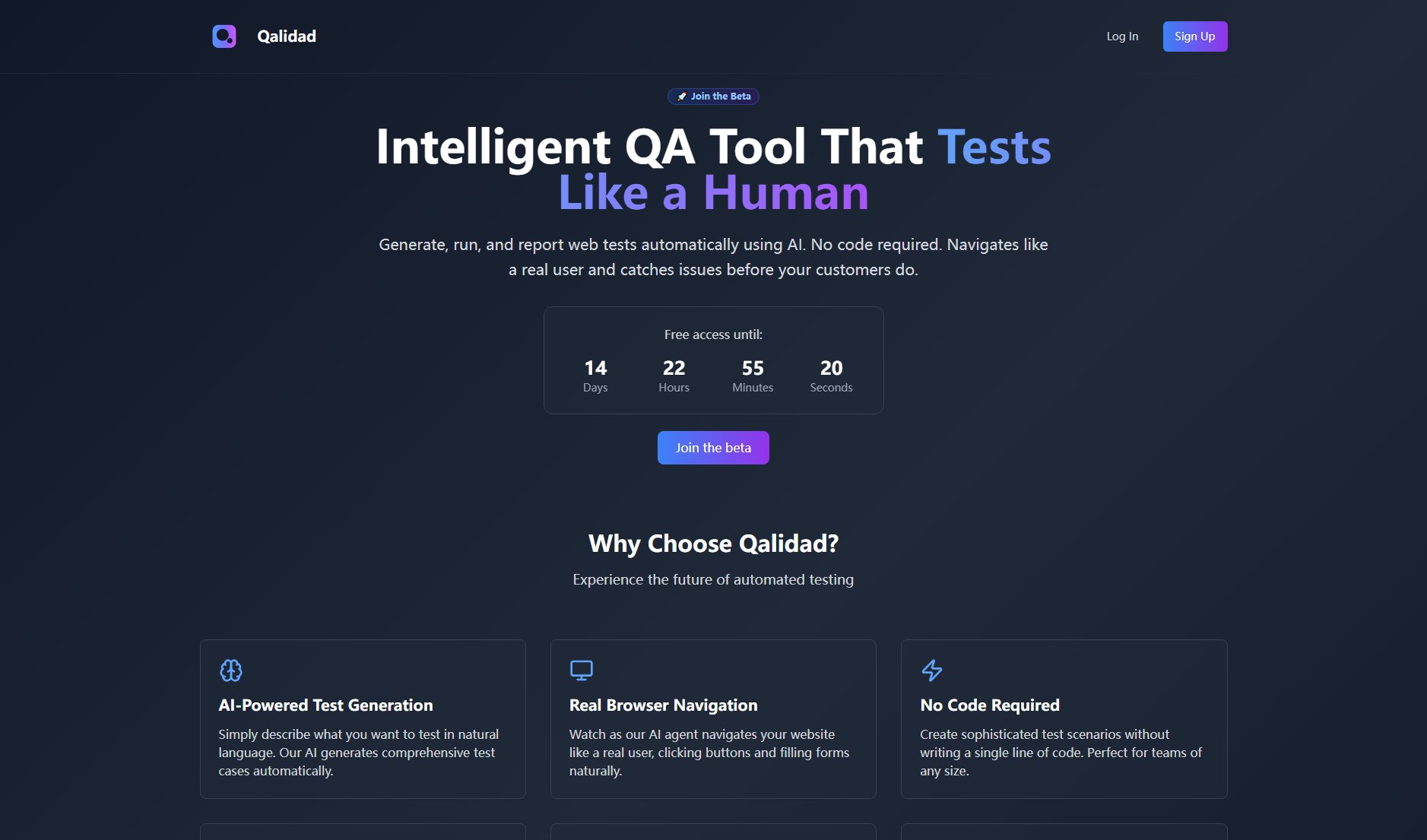

Qalidad Interface & Screenshots

What Can Qalidad Do? Key Features

AI-Powered Test Generation

Qalidad's core feature is an AI that turns natural language descriptions into complete test cases. Describe a scenario such as 'Test checkout flow with invalid credit card', and the AI generates the test steps, assertions, and edge cases for it. This is intended to cut test creation time from hours to minutes while maintaining test coverage.

Real Browser Navigation

The platform's AI agents interact with your website the way human users do, including dynamic content and complex UI elements. Traditional testing tools often rely on brittle selectors, whereas Qalidad's agents interpret page structure in context, which makes tests more reliable and easier to maintain when the UI changes.

No Code Required

Qalidad completely eliminates the need for test scripting knowledge. Create sophisticated test scenarios through simple descriptions without writing any code. This makes comprehensive testing accessible to product managers, designers, and other non-technical team members while still providing the depth technical QA engineers need.

Visual Test Reporting

Get detailed, visual reports showing exactly what happened during test execution. Each report includes screenshots at every step, console logs, network activity, and clear indicators of passed/failed assertions. This makes debugging test failures intuitive and significantly reduces investigation time.

Scenario Management

Organize related tests into logical scenarios that can be run together. Schedule test runs at specific times or intervals, and track historical results to identify trends. The platform maintains complete execution history with the ability to compare results across different runs and environments.
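Comparing results across runs can be pictured with a short Python sketch. The data layout (a mapping from test name to "passed" or "failed") and the function name `find_regressions` are assumptions made for illustration; they are not Qalidad's export format or API.

```python
from typing import Dict, List

# Hypothetical layout: test name -> "passed" or "failed" for a single run.
RunResults = Dict[str, str]


def find_regressions(previous: RunResults, current: RunResults) -> List[str]:
    """Return tests that passed in the previous run but fail in the current one."""
    return sorted(
        name
        for name, status in current.items()
        if status == "failed" and previous.get(name) == "passed"
    )


# Illustrative data only, not real Qalidad output.
previous_run = {
    "checkout flow": "passed",
    "user registration": "passed",
    "promo code": "failed",
}
current_run = {
    "checkout flow": "failed",
    "user registration": "passed",
    "promo code": "failed",
}

regressions = find_regressions(previous_run, current_run)
print(regressions)
```

Tests that were already failing in the earlier run are deliberately left out, so the list highlights only newly broken flows.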

Best Qalidad Use Cases & Applications

Continuous Regression Testing

Development teams use Qalidad to automatically regression test critical user flows after every deployment. By describing core application paths once, teams get automatic regression coverage that scales with their application without maintaining fragile test scripts.

Cross-Browser/Device Validation

QA teams leverage Qalidad to quickly verify application behavior across multiple browser and device combinations. The same natural language test can be executed across different environments without modification, significantly reducing cross-browser testing effort.

Accessibility Testing

Product teams use Qalidad to validate accessibility requirements by describing user interactions that should work for all users. The AI captures accessibility issues during test execution, helping teams maintain inclusive design standards.

How to Use Qalidad: Step-by-Step Guide

Describe your test scenario in natural language in the Qalidad interface. For example: 'Test user registration with existing email' or 'Verify checkout flow with promo code'. The AI will analyze your description and suggest test parameters.

Review the automatically generated test steps. You can modify any step by editing its description, and the AI will update the test logic accordingly. Add assertions for any specific validations you want to include.

Configure test settings like browser type, viewport size, and execution environment. You can also set up test data parameters that will be used across multiple test runs.
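One way to think about these settings is as a small record that gets expanded into a browser and viewport matrix. The Python sketch below is illustrative only: the `RunSettings` class, its field names, and the sample values are assumptions, since Qalidad configures these options through its interface rather than through code like this.

```python
from dataclasses import dataclass, field
from itertools import product
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class RunSettings:
    """Hypothetical container for the settings named in the step above."""
    browser: str
    viewport: Tuple[int, int]  # (width, height) in pixels
    environment: str
    test_data: Dict[str, str] = field(default_factory=dict)


def expand_matrix(
    browsers: List[str],
    viewports: List[Tuple[int, int]],
    environment: str,
    test_data: Dict[str, str],
) -> List[RunSettings]:
    """Build one RunSettings per browser/viewport pair, sharing the same test data."""
    return [
        RunSettings(browser, viewport, environment, dict(test_data))
        for browser, viewport in product(browsers, viewports)
    ]


# Sample values are placeholders chosen for the example.
matrix = expand_matrix(
    browsers=["chrome", "firefox"],
    viewports=[(1280, 720), (390, 844)],
    environment="staging",
    test_data={"promo_code": "WELCOME10"},
)

for settings in matrix:
    print(settings.browser, settings.viewport, settings.environment)
```

Keeping the test data separate from the browser and viewport choices mirrors the idea that the same parameters are reused across multiple runs.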

Run the test immediately or schedule it for later. Watch as the AI agent executes the test in real-time, navigating your application just like a human user would.

Review the visual test report showing screenshots of each step, console output, and detailed pass/fail indicators. Share reports with your team or integrate results with your existing workflow tools.

Qalidad Pros and Cons: Honest Review

Pros

Considerations

Is Qalidad Worth It? FAQ & Reviews

Do I need coding skills to use Qalidad?

No coding skills are required. Qalidad is designed to enable comprehensive testing without writing any code. The AI generates all test logic from your natural language descriptions.

How does Qalidad handle dynamic content and UI changes?

Qalidad's AI interprets web pages in context rather than relying on fixed selectors. It adapts to UI changes and dynamic content by analyzing page structure and the relationships between elements, which makes tests more resilient to change.

Can Qalidad integrate with CI/CD pipelines?

Yes, Qalidad provides API access and webhook support for integration with popular CI/CD tools. Test results can be fed automatically into your existing development workflow.
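As a rough illustration of feeding results into a pipeline, the Python sketch below turns a test-run payload into a CI exit code. The payload shape (a `results` list whose items have `name` and `status` fields) is an assumption made for this example; Qalidad's actual webhook format is not described here and may differ.

```python
import json


def exit_code_from_payload(raw_body: str) -> int:
    """Map a (hypothetical) test-run payload to a CI exit code.

    Returns 0 when every result passed, 1 otherwise.
    """
    payload = json.loads(raw_body)
    results = payload.get("results", [])
    failed = [r.get("name", "<unnamed>") for r in results if r.get("status") != "passed"]
    for name in failed:
        print(f"FAILED: {name}")
    # An empty result list counts as a failure so that a misconfigured
    # run does not pass silently.
    return 1 if failed or not results else 0


# Sample body with assumed field names, for illustration only.
sample_body = json.dumps(
    {
        "results": [
            {"name": "user registration with existing email", "status": "passed"},
            {"name": "checkout flow with promo code", "status": "failed"},
        ]
    }
)

code = exit_code_from_payload(sample_body)
print("exit code:", code)
```

In a pipeline step, the returned value could be passed to `sys.exit` so that a failed run blocks the deployment.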

Which browsers does Qalidad support?

Qalidad supports the major modern browsers (Chrome, Firefox, Safari, Edge) and can test across different viewport sizes for responsive design validation. Mobile browser testing is also supported.

Is there a limit to how complex a test can be?

Qalidad does not impose a fixed limit on test complexity. You can describe multi-step user journeys with conditional logic, and the AI will generate appropriate test cases, although complex scenarios may need more precise descriptions to produce good results.