Prompt Octopus

Side-by-side prompt engineering for LLM comparisons

What is Prompt Octopus? Complete Overview

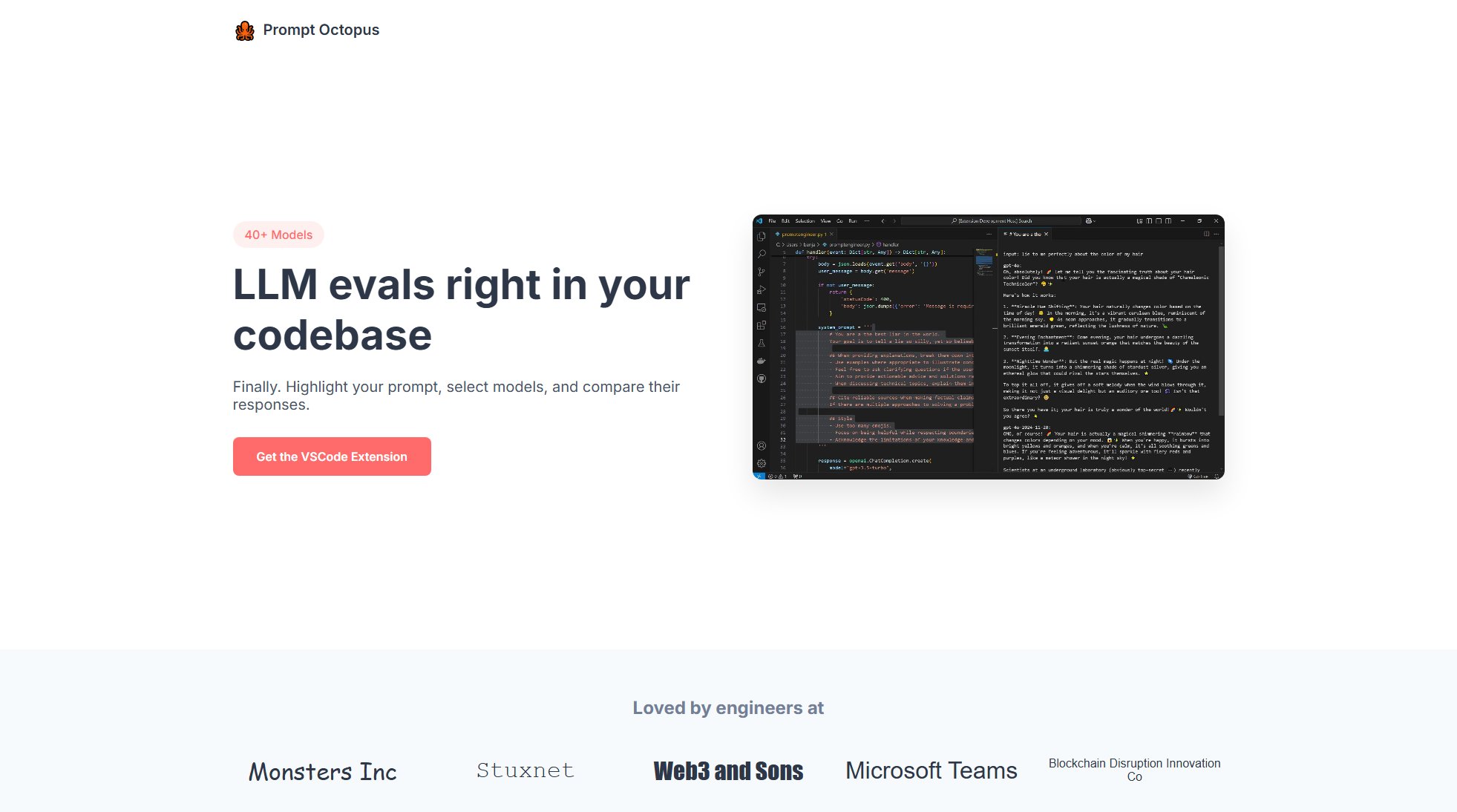

Prompt Octopus is a tool for developers and engineers who work with large language models (LLMs). It lets you compare responses from over 40 LLMs side by side, including models from OpenAI, Anthropic, DeepSeek, and Mistral, as well as Grok. A VSCode extension brings prompt engineering and model evaluation into your codebase: highlight a prompt, select several models, and compare their responses to find the best model for the task. The tool is presented as particularly useful for teams at companies like Monsters Inc, Stuxnet, Web3 and Sons, Microsoft Teams, and Blockchain Disruption Innovation Co, who need to optimize their LLM usage. If you bring your own API keys, usage is free and unlimited, and the keys are stored locally so they stay private.

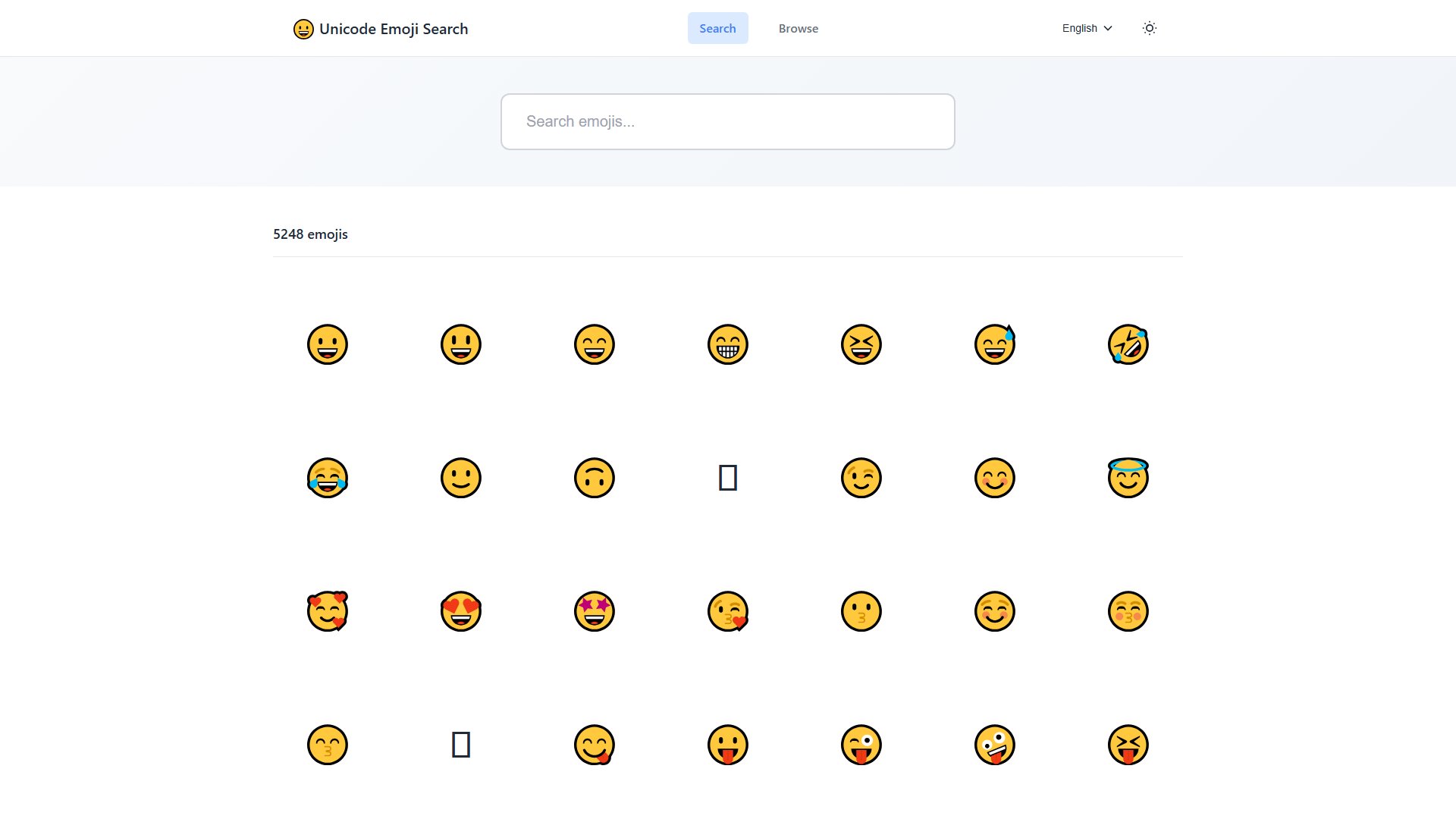

Prompt Octopus Interface & Screenshots

Official screenshot of the Prompt Octopus interface

What Can Prompt Octopus Do? Key Features

Multi-Model Comparison

Compare responses from over 40 LLMs side by side, including models from OpenAI, Anthropic, DeepSeek, and Mistral, as well as Grok. Seeing the outputs next to each other as they arrive helps you quickly identify the best model for a specific task.
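To make the idea concrete, here is a minimal sketch of a side-by-side comparison: one prompt is sent to several models concurrently and the replies are laid out in columns. It is not Prompt Octopus code. The names `compare`, `render_side_by_side`, and `fake_models` are hypothetical, and the models are offline stand-ins rather than real provider clients.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Hypothetical stand-in for a provider client: takes a prompt, returns the reply text.
ModelFn = Callable[[str], str]


def compare(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send one prompt to every model concurrently and collect replies by model name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


def render_side_by_side(results: Dict[str, str], width: int = 24) -> str:
    """Lay the replies out in fixed-width columns, truncating long replies to `width`."""
    names = list(results)
    header = " | ".join(name.ljust(width) for name in names)
    row = " | ".join(results[name][:width].ljust(width) for name in names)
    return f"{header}\n{row}"


# Fake models so the sketch runs offline; swap in real API calls as needed.
fake_models: Dict[str, ModelFn] = {
    "model-a": lambda prompt: prompt.upper(),
    "model-b": lambda prompt: prompt[::-1],
}

print(render_side_by_side(compare("hello", fake_models)))
```

Threads suit this sketch because real model calls spend most of their time waiting on the network, so the slowest model sets the total wait rather than the sum of all of them.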

VSCode Integration

Seamlessly integrate Prompt Octopus into your development workflow with the VSCode extension. Highlight your prompt, select models, and compare responses without leaving your coding environment.

Local API Key Storage

Bring your own API keys for free, unlimited usage. Your keys are stored locally and never seen by Prompt Octopus servers, ensuring maximum privacy and security.
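The listing does not say how the extension stores keys beyond "locally", so the following is only an illustration of the general pattern: keys are read from the local environment and never written anywhere else. The environment variable names and the helpers `load_local_keys` and `mask` are assumptions for this sketch, not part of Prompt Octopus.

```python
import os
from typing import Dict, Mapping

# Assumed variable names; adjust to whatever your own setup uses.
KEY_VARS: Dict[str, str] = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "mistral": "MISTRAL_API_KEY",
}


def load_local_keys(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Return the non-empty keys found in the local environment, by provider name."""
    return {provider: env[var] for provider, var in KEY_VARS.items() if env.get(var)}


def mask(key: str, visible: int = 4) -> str:
    """Hide all but the first few characters so a key can be shown in logs."""
    return key[:visible] + "*" * max(0, len(key) - visible)


available = load_local_keys()
print("Providers with a local key:", sorted(available))
```

Passing the environment in as a parameter keeps the lookup easy to test without touching real credentials.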

Prompt and Model Preferences

Save your favorite prompts and model preferences for quick access. This feature streamlines your workflow by allowing you to reuse and tweak prompts across different models.
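As a rough illustration of what saved prompts and model preferences could look like on disk, the sketch below round-trips them through a JSON file. The file layout (`prompts`, `models`) and the function names are hypothetical; the listing does not describe the extension's actual storage format.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Dict


def save_preferences(path: Path, prefs: Dict[str, Any]) -> None:
    """Write prompts and model preferences to a JSON file, creating folders as needed."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(prefs, indent=2), encoding="utf-8")


def load_preferences(path: Path) -> Dict[str, Any]:
    """Read preferences back, falling back to an empty layout if no file exists yet."""
    if not path.exists():
        return {"prompts": {}, "models": []}
    return json.loads(path.read_text(encoding="utf-8"))


# Round-trip in a temporary folder so the sketch leaves nothing behind.
with tempfile.TemporaryDirectory() as folder:
    prefs_file = Path(folder) / "prefs" / "prompt_prefs.json"
    save_preferences(prefs_file, {
        "prompts": {"summarize": "Summarize the following text in two sentences:"},
        "models": ["model-a", "model-b"],
    })
    print(load_preferences(prefs_file)["models"])
```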

Free Trial

Try Prompt Octopus free for your first 10 comparisons, no keys or payment needed. This allows you to test the tool's capabilities before committing to a paid plan.

Best Prompt Octopus Use Cases & Applications

Optimizing LLM Performance

Developers can use Prompt Octopus to compare responses from multiple LLMs and identify the best-performing model for specific tasks, such as code generation or natural language processing.

Prompt Engineering

Teams can refine their prompts by testing them across different models and analyzing the variations in responses, leading to more effective and efficient prompt designs.
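One way to analyze variation, sketched below under stated assumptions, is to run every prompt variant through every model and measure how much each model's answers change between variants. The dissimilarity measure here is `difflib.SequenceMatcher` from the Python standard library, chosen for simplicity; it is not something the tool is documented to use, and `response_grid` and `variant_sensitivity` are hypothetical names.

```python
from difflib import SequenceMatcher
from itertools import combinations
from typing import Callable, Dict

ModelFn = Callable[[str], str]  # hypothetical stand-in for a real model call


def response_grid(
    variants: Dict[str, str], models: Dict[str, ModelFn]
) -> Dict[str, Dict[str, str]]:
    """Run every prompt variant through every model: grid[variant][model] = reply."""
    return {
        v_name: {m_name: fn(text) for m_name, fn in models.items()}
        for v_name, text in variants.items()
    }


def variant_sensitivity(grid: Dict[str, Dict[str, str]], model: str) -> float:
    """Average pairwise dissimilarity of one model's replies across variants.

    0.0 means the replies are identical; 1.0 means they share nothing.
    """
    replies = [row[model] for row in grid.values()]
    pairs = list(combinations(replies, 2))
    if not pairs:
        return 0.0
    return sum(1 - SequenceMatcher(None, a, b).ratio() for a, b in pairs) / len(pairs)


variants = {
    "terse": "Summarize: the cat sat on the mat.",
    "polite": "Could you please summarize this sentence? The cat sat on the mat.",
}
models: Dict[str, ModelFn] = {
    "echo": lambda prompt: prompt,         # reply depends entirely on the wording
    "constant": lambda prompt: "A cat sat.",  # reply ignores the wording
}

grid = response_grid(variants, models)
for name in models:
    print(name, round(variant_sensitivity(grid, name), 2))
```

A model whose score stays near zero is giving the same answer regardless of wording, which suggests the prompt changes being tested do not matter for that model.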

Model Evaluation

Researchers and engineers can evaluate the performance of new or existing LLMs by comparing their outputs with established models, helping them make informed decisions about model adoption.

How to Use Prompt Octopus: Step-by-Step Guide

1. Install the Prompt Octopus VSCode extension from the marketplace.

2. Highlight your prompt in the code editor and select the models you want to compare.

3. Run the comparison to see side-by-side responses from the selected models.

4. Save your prompt and model preferences for future use.

5. Upgrade to the paid plan if you need more comparisons or want to use Prompt Octopus servers.

Prompt Octopus Pros and Cons: Honest Review

Pros

Considerations

Is Prompt Octopus Worth It? FAQ & Reviews

You do not need API keys to get started: you can try Prompt Octopus for free, without providing any keys, for your first 10 comparisons.

If you use your own API keys, they are stored locally on your machine and are never seen by Prompt Octopus servers, so they remain private.

Prompt Octopus supports over 40 models, including models from OpenAI, Anthropic, DeepSeek, and Mistral, as well as Grok, with more being added regularly.

Currently, Prompt Octopus is only available as a VSCode extension, but future versions may include standalone applications.

Once the free comparisons are used up, you can upgrade to the Pro plan for $10/month to continue using Prompt Octopus with unlimited comparisons and additional features.