LLM Speed Check

Estimate local AI model performance on your hardware

What is LLM Speed Check? Complete Overview

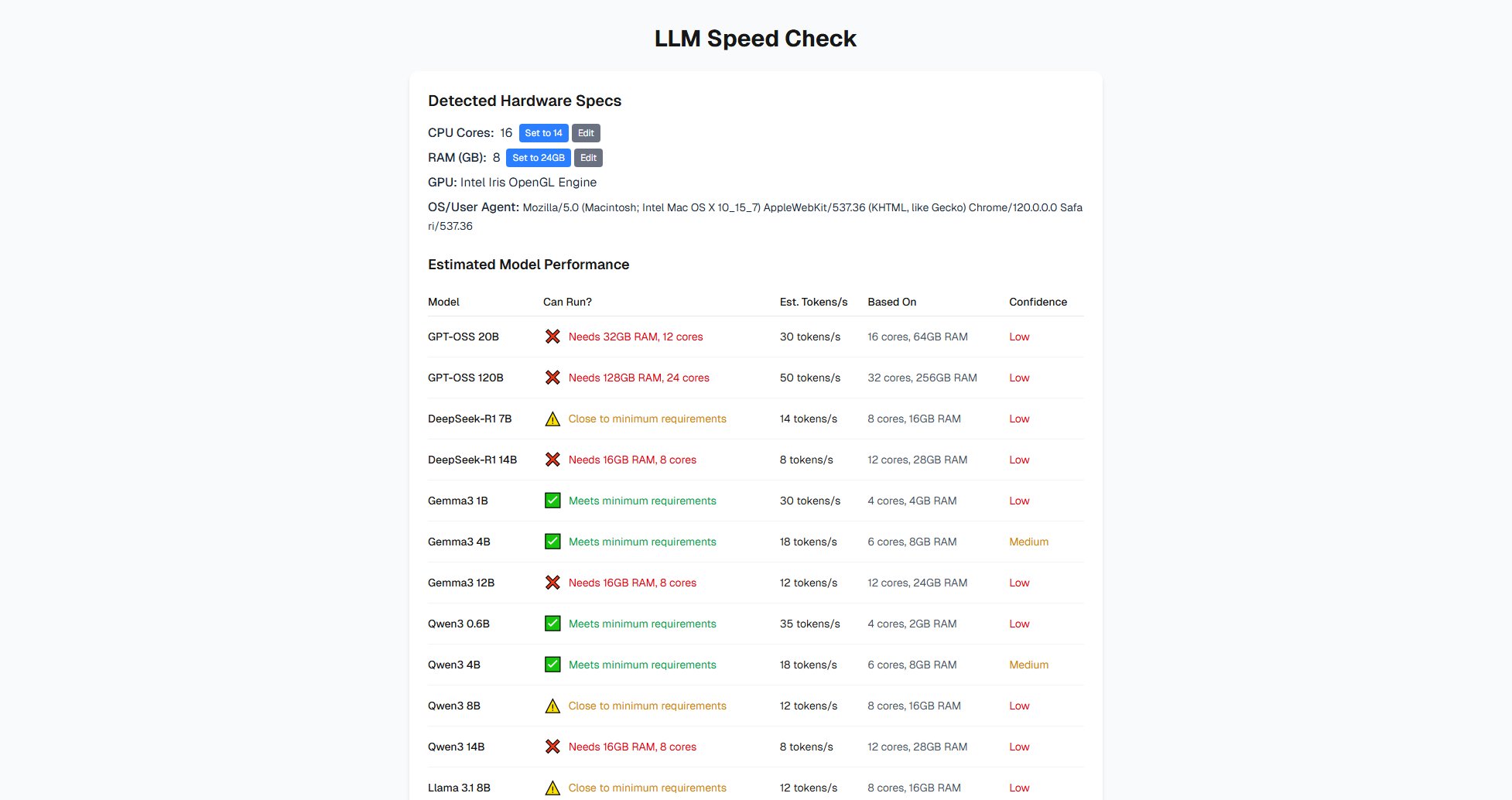

LLM Speed Check is a tool that shows which open-source AI language models your local hardware can support and estimates how fast they will run. Designed for developers, researchers, and AI enthusiasts, it addresses the problem of matching model requirements to available computing resources. The tool automatically detects your system's specifications (CPU cores, RAM, GPU) and compares them against a database of benchmark results to estimate tokens-per-second performance for popular models. This lets users make informed decisions before downloading and running large language models locally through platforms like LM Studio or Ollama. Having these estimates upfront saves the time and frustration of testing incompatible models.

LLM Speed Check Interface & Screenshots

Official screenshot of the LLM Speed Check interface.

What Can LLM Speed Check Do? Key Features

Hardware Detection

The tool automatically scans and identifies your system's CPU cores, RAM capacity, and GPU specifications. While browser limitations may affect detection accuracy, users can manually override these values for more precise estimates. This comprehensive hardware analysis forms the foundation for all performance predictions.
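The override behaviour described above can be sketched in a few lines. This is only an illustration, not the tool's actual implementation: the `HardwareSpecs` type and the `detect_specs` and `apply_overrides` helpers are hypothetical names, and the detection step uses only what Python's standard library exposes (the logical CPU count), leaving RAM and GPU unknown until the user supplies them.

```python
import os
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class HardwareSpecs:
    """Hypothetical container for the three values the tool reports."""
    cpu_cores: Optional[int]   # None means "could not be detected"
    ram_gb: Optional[float]
    gpu_name: Optional[str]


def detect_specs() -> HardwareSpecs:
    """Detect what the standard library can see; leave the rest unknown."""
    return HardwareSpecs(cpu_cores=os.cpu_count(), ram_gb=None, gpu_name=None)


def apply_overrides(detected: HardwareSpecs, **overrides) -> HardwareSpecs:
    """Return specs in which user-supplied values replace detected ones."""
    unknown = set(overrides) - set(HardwareSpecs.__dataclass_fields__)
    if unknown:
        raise ValueError(f"unknown spec fields: {sorted(unknown)}")
    # An override of None is treated as "not supplied", so the detected value is kept.
    supplied = {key: value for key, value in overrides.items() if value is not None}
    return replace(detected, **supplied)
```

A user whose RAM was under-reported would call something like `apply_overrides(detect_specs(), ram_gb=32.0)` and feed the result into the rest of the estimate.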

Model Compatibility Check

LLM Speed Check evaluates whether your system meets the minimum requirements for running various AI models, clearly indicating which models are compatible (showing 'Meets requirements'), which might work with limitations ('Close to minimum requirements'), and which won't run ('Needs more resources'). This helps prevent frustrating installation attempts with incompatible models.
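A minimal sketch of how such a three-way check could work, assuming RAM is the deciding resource. The three labels come from the tool itself, but the `classify` function and its 15% `tolerance` for the "close" band are assumptions made for illustration; the tool's real thresholds are not documented here.

```python
from enum import Enum


class Compatibility(Enum):
    MEETS = "Meets requirements"
    CLOSE = "Close to minimum requirements"
    NEEDS_MORE = "Needs more resources"


def classify(available_ram_gb: float, required_ram_gb: float,
             tolerance: float = 0.15) -> Compatibility:
    """Compare available RAM with a model's minimum requirement.

    `tolerance` is an assumed value: a system within 15% of the
    requirement is reported as "close" rather than rejected outright.
    """
    if required_ram_gb <= 0:
        raise ValueError("required_ram_gb must be positive")
    if available_ram_gb >= required_ram_gb:
        return Compatibility.MEETS
    if available_ram_gb >= required_ram_gb * (1 - tolerance):
        return Compatibility.CLOSE
    return Compatibility.NEEDS_MORE
```

With these assumed thresholds, a model that needs 8 GB is reported as compatible on a 16 GB machine, as close to the minimum on a 7 GB machine, and as needing more resources on a 4 GB machine.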

Performance Estimation

For each compatible model, the tool provides an estimated tokens-per-second output based on similar hardware configurations. These estimates help users understand the relative speed differences between models on their specific hardware, allowing for informed model selection based on performance needs.
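One plausible way to estimate speed "based on similar hardware configurations" is a nearest-neighbour lookup over benchmark records. The sketch below is an assumption about the approach, not a description of the tool's internals: the `Benchmark` record, the log-scale distance, and the choice of averaging the `k` closest results are all hypothetical.

```python
from dataclasses import dataclass
from math import log2
from typing import Optional, Sequence


@dataclass(frozen=True)
class Benchmark:
    """Hypothetical record: one measured run of a model on known hardware."""
    model: str
    cpu_cores: int
    ram_gb: float
    tokens_per_second: float


def hardware_distance(cpu_cores: int, ram_gb: float, bench: Benchmark) -> float:
    """Distance on a log scale, so 8 vs 16 cores counts the same as 16 vs 32."""
    return abs(log2(cpu_cores / bench.cpu_cores)) + abs(log2(ram_gb / bench.ram_gb))


def estimate_tokens_per_second(model: str, cpu_cores: int, ram_gb: float,
                               benchmarks: Sequence[Benchmark],
                               k: int = 3) -> Optional[float]:
    """Average the k benchmark results whose hardware is closest to the user's."""
    candidates = [b for b in benchmarks if b.model == model]
    if not candidates:
        return None  # no data for this model, so no estimate
    nearest = sorted(
        candidates, key=lambda b: hardware_distance(cpu_cores, ram_gb, b)
    )[:k]
    return sum(b.tokens_per_second for b in nearest) / len(nearest)
```

Because the result is an average over neighbouring configurations, it is best read as a relative indicator for comparing models on the same machine rather than as a guaranteed throughput figure.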

Comprehensive Model Database

The tool includes performance data for a wide range of popular open-source models including GPT-OSS, DeepSeek-R1, Gemma3, Qwen3, Llama 3 variants, Mistral, CodeLlama, Phi-4, and TinyLlama. Models are organized by popularity based on Ollama's library for easy navigation.

Cross-Platform Compatibility

The performance estimates apply to both LM Studio and Ollama, two of the most popular platforms for running local LLMs. LM Studio offers more optimization options and Ollama is easier to set up, but the tool's predictions remain relevant for both environments.

Best LLM Speed Check Use Cases & Applications

Developer Workstation Setup

Software engineers preparing their development machines can use LLM Speed Check to identify which coding assistant models (like CodeLlama variants) will run effectively on their hardware before committing to large downloads and setup processes.

Research Experiment Planning

AI researchers evaluating different model architectures for local experimentation can quickly compare which models are viable options on their available hardware, saving days of trial-and-error setup time.

Personal AI Assistant Selection

Individuals who want to run a personal AI assistant offline can determine the most capable model their hardware supports, balancing model sophistication against response speed.

Educational Demonstrations

Educators teaching about large language models can use the tool to show students how hardware constraints impact model selection and performance in real-world scenarios.

How to Use LLM Speed Check: Step-by-Step Guide

Visit the LLM Speed Check website. The tool will automatically detect your system's hardware specifications including CPU cores, RAM, and GPU information (note that browser limitations may affect detection accuracy).

Review the automatically detected specifications. If they don't match your actual hardware (common for RAM detection), manually adjust the values using the 'Edit' buttons to reflect your true system resources for more accurate results.

Browse through the comprehensive list of AI models. The tool will automatically calculate and display which models your hardware can support, along with estimated performance metrics for each compatible model.

Analyze the results: Green checkmarks indicate fully compatible models, warning signs show models that might work with limitations, and red indicators mean your hardware doesn't meet requirements. The estimated tokens-per-second helps compare performance between models.

Select the most suitable model(s) based on your needs (performance vs. capability) and download them through your preferred local LLM platform (LM Studio or Ollama) for actual testing and use.
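For the final testing step, the estimate can be compared with a measured figure. The sketch below assumes Ollama is running locally on its default port and uses the `eval_count` and `eval_duration` fields (the latter in nanoseconds) that Ollama returns in the final response of its `/api/generate` endpoint. The `measure` helper and whatever model name is passed to it are illustrative, and the model must already be pulled.

```python
import json
import urllib.request


def tokens_per_second(response: dict) -> float:
    """Generation speed from the final Ollama /api/generate response."""
    eval_count = response["eval_count"]           # number of tokens generated
    eval_duration_ns = response["eval_duration"]  # generation time in nanoseconds
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    return eval_count * 1e9 / eval_duration_ns


def measure(model: str, prompt: str, host: str = "http://localhost:11434") -> float:
    """Run one non-streaming generation against a local Ollama server."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        f"{host}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as reply:
        return tokens_per_second(json.load(reply))
```

A single run is noisy, since system load and thermal throttling affect the result, so averaging a few calls to `measure` gives a fairer comparison against the tool's estimate.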

LLM Speed Check Pros and Cons: Honest Review

Pros

Considerations

Is LLM Speed Check Worth It? FAQ & Reviews

The estimates are based on aggregated benchmark data from similar hardware configurations. While they provide a good indication of expected performance, actual results may vary due to system load, thermal throttling, background processes, and specific software optimizations in tools like LM Studio or Ollama.

Browser limitations often restrict detailed GPU detection capabilities. For most local LLM operations, CPU and RAM are the primary limiting factors, which the tool focuses on. GPU information, when available, is used for additional context but isn't critical for the current estimations.

The tool is specifically designed for local hardware evaluation. Cloud instances typically have different performance characteristics due to virtualization overhead and different hardware configurations. For cloud deployments, refer to provider-specific benchmarks.

Low confidence appears when we have limited benchmark data for your specific hardware configuration. This often occurs with either very high-end or very low-end systems that haven't been extensively tested with certain models.

We regularly update our database with new models and additional benchmark data. Major updates typically occur monthly, with minor adjustments as new information becomes available from the open-source community.