GraphBit

Enterprise-Grade LLM Framework for Speed, Security & Scale

What is GraphBit? Complete Overview

GraphBit is an enterprise-grade LLM framework designed to simplify the complexity of building AI agents. Built with Rust and wrapped in Python, it is optimized for speed, reliability, and scalability, making it ideal for production workloads. GraphBit addresses the pain points of high CPU usage, memory inefficiency, and slow throughput commonly found in other frameworks. It offers seamless integration with popular services, acting as an intelligent layer to connect and orchestrate data flow across tech stacks. Target users include enterprises, developers, and AI professionals looking for a robust, high-performance solution to deploy AI agents at scale.

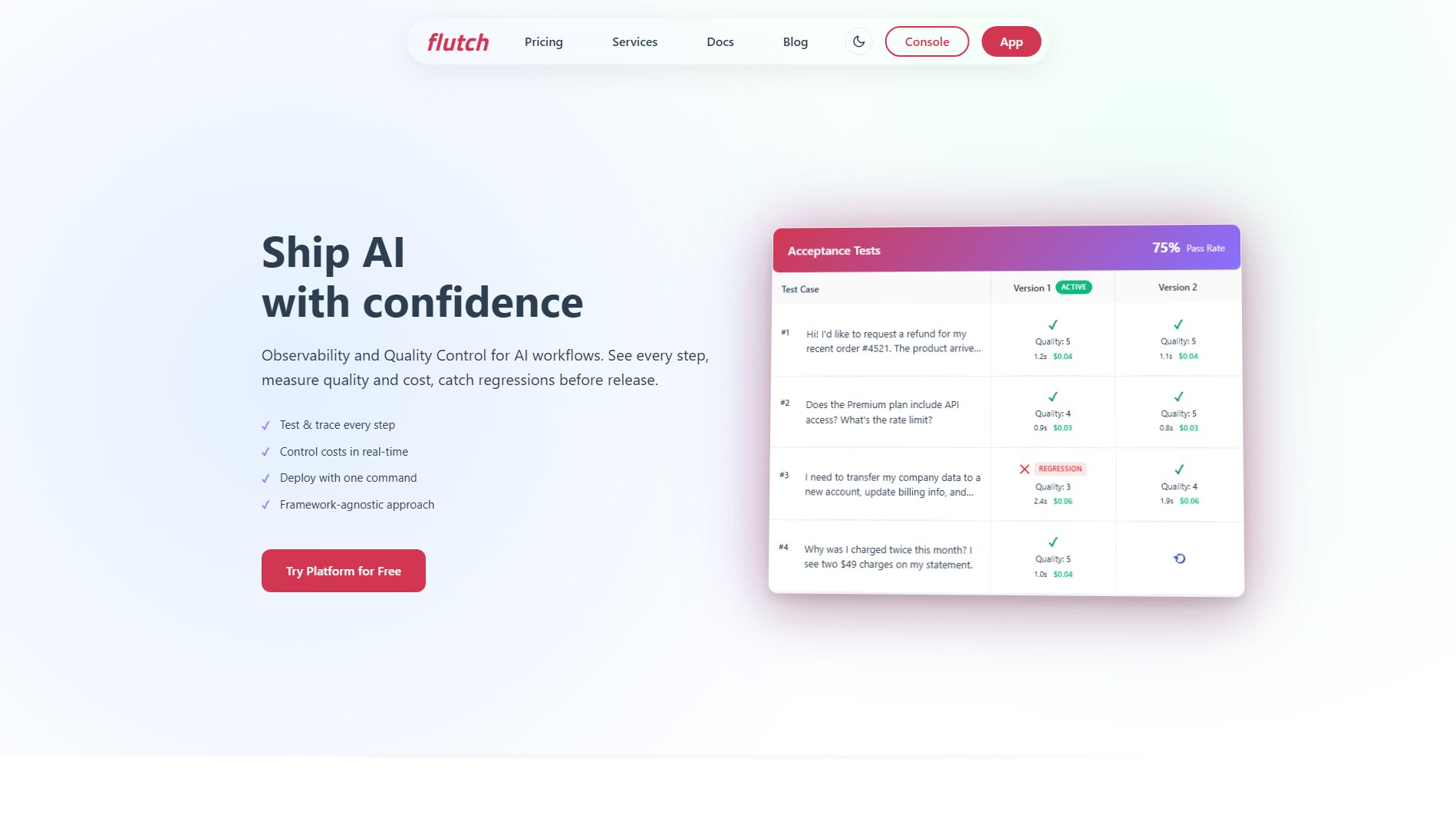

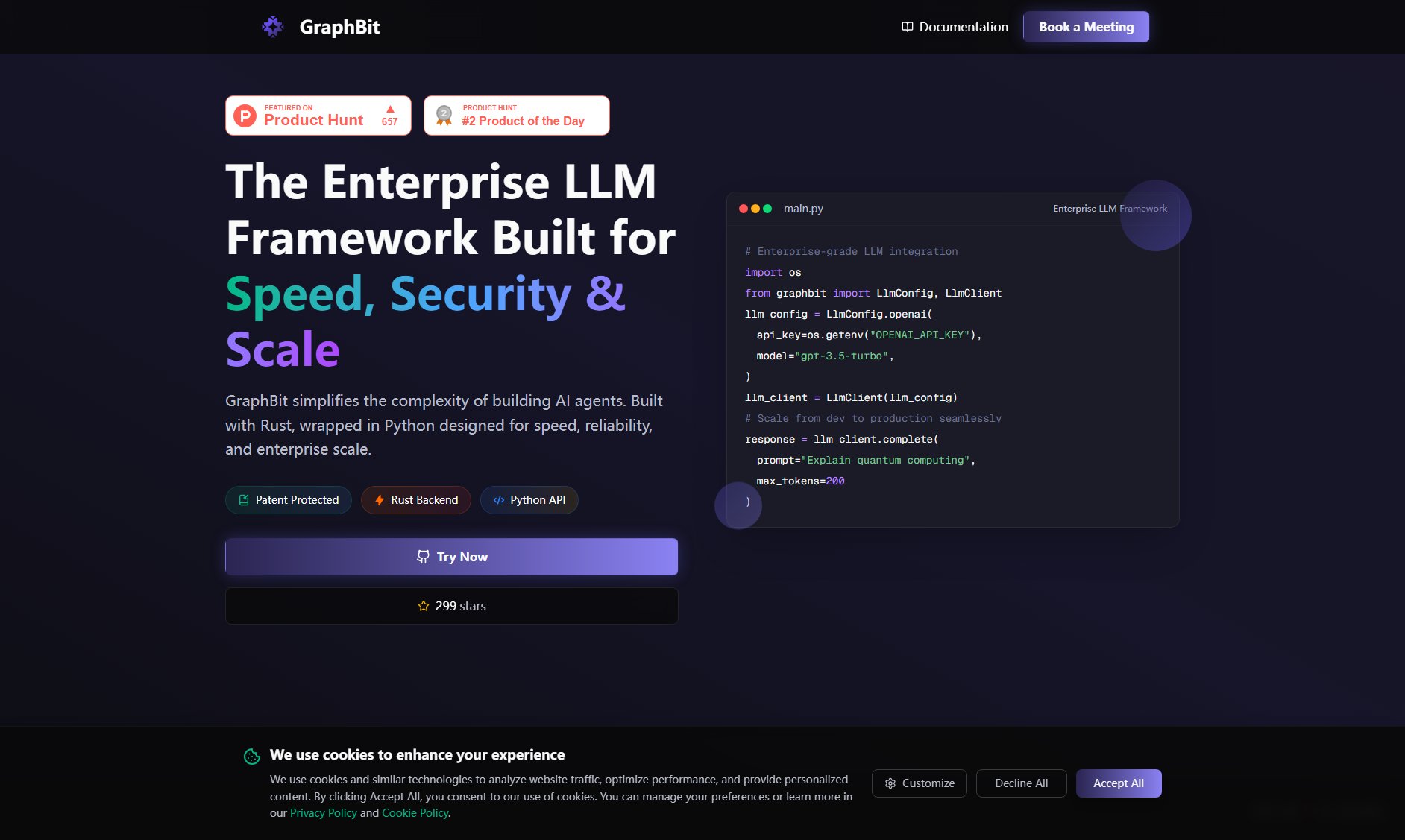

What Can GraphBit Do? Key Features

Rust Backend for Performance

GraphBit's backend is written in Rust for speed and efficiency. The cited benchmarks report roughly 0.1% CPU usage and 0.014 MB of RAM, up to 14x better than competitors such as LangChain and Pydantic AI.

Python API for Ease of Use

Despite its Rust core, GraphBit provides a Python API, making it accessible to developers familiar with Python. This allows for quick integration and reduces the learning curve.

Enterprise-Grade Scalability

GraphBit is designed to scale from development to production, handling high-throughput workloads with minimal resource usage. The cited benchmarks report a throughput of 25 requests per minute with a 0.176% error rate.
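To put those two figures in operational terms, the short calculation below converts them into hourly numbers. It is only arithmetic on the quoted values and assumes the rate is sustained and that failures are spread evenly, which the benchmark may not guarantee.

```python
# Figures quoted above; everything derived from them is plain arithmetic.
REQUESTS_PER_MINUTE = 25
ERROR_RATE = 0.176 / 100  # 0.176% expressed as a fraction


def expected_failures(minutes: float) -> float:
    """Expected number of failed requests over a sustained run (assumed steady rate)."""
    total_requests = REQUESTS_PER_MINUTE * minutes
    return total_requests * ERROR_RATE


requests_per_hour = REQUESTS_PER_MINUTE * 60
failures_per_hour = expected_failures(60)
print(f"{requests_per_hour} requests/hour, ~{failures_per_hour:.2f} expected failures/hour")
```

Under these assumptions, that works out to 1,500 requests and fewer than three expected failures per hour.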

Universal Connections

GraphBit can integrate with various services, acting as an intelligent layer to connect and process data across your entire tech stack. This makes it versatile for different enterprise workflows.

Tool Calling for AI Agents

GraphBit supports building AI agents with tool calling capabilities, enabling complex workflows and user input analysis. This feature is essential for developing sophisticated AI solutions.
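For readers new to the idea, tool calling generally means the model emits a structured request naming a function and its arguments, and the surrounding program runs that function. The sketch below illustrates the pattern in plain Python. It is not GraphBit's API: the `tool` decorator, `TOOLS` registry, `dispatch` helper, and `get_order_status` function are all hypothetical names invented for this example.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool registry for illustration; not part of GraphBit's API.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a plain function so an agent loop can look it up by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_order_status(order_id: str) -> str:
    # Stand-in for a real lookup against an order system.
    return f"Order {order_id} has shipped"


def dispatch(tool_call_json: str) -> Any:
    """Run the tool named in a call shaped like {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Simulated model output requesting a tool call.
result = dispatch('{"name": "get_order_status", "arguments": {"order_id": "A17"}}')
print(result)
```

In a real agent, the JSON string would come from the LLM's response rather than a literal, and the tool's return value would be fed back to the model for the next turn.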

Best GraphBit Use Cases & Applications

Enterprise AI Integration

Large enterprises can use GraphBit to integrate LLMs into their existing systems, ensuring high performance and low resource usage. This is ideal for customer support chatbots, data analysis tools, and more.

Developer Tooling

Developers can leverage GraphBit's Python API to quickly build and deploy AI agents. The framework's efficiency reduces development time and operational costs.

Research and Development

Researchers can use GraphBit to prototype and test new AI models, benefiting from its speed and reliability. The framework's benchmarking capabilities also aid in performance evaluation.

How to Use GraphBit: Step-by-Step Guide

Install GraphBit using pip: `pip install graphbit`. Ensure you have Python 3.8+ installed.

Configure the LLM client by importing `LlmConfig` and `LlmClient` from GraphBit. Set up your API key and model preferences.

Use the `complete` method to send prompts to the LLM. Customize parameters like `max_tokens` and `temperature` to control the output.

Deploy your AI agent in production. GraphBit's efficient resource usage ensures smooth scaling without performance degradation.
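The configuration and completion steps above can be sketched as follows. Only the names `LlmConfig`, `LlmClient`, `complete`, `max_tokens`, and `temperature` come from the guide; the exact constructor calls shown in the comments are assumptions and should be checked against the GraphBit documentation. So that the example runs without an API key, it uses a hypothetical `EchoClient` stand-in with the same `complete` shape.

```python
from typing import Protocol


class CompletionClient(Protocol):
    """Assumed shape of the client described in the steps above."""

    def complete(self, prompt: str, max_tokens: int, temperature: float) -> str: ...


def ask(client: CompletionClient, prompt: str,
        max_tokens: int = 200, temperature: float = 0.2) -> str:
    """Send one prompt with explicit output controls."""
    return client.complete(prompt, max_tokens=max_tokens, temperature=temperature)


class EchoClient:
    """Hypothetical offline stand-in so the sketch runs without an API key."""

    def complete(self, prompt: str, max_tokens: int, temperature: float) -> str:
        return f"[max_tokens={max_tokens}, temperature={temperature}] {prompt}"


# With GraphBit installed, the real client might be built roughly like this
# (constructor name and arguments are assumptions, not confirmed by the guide):
#   import os
#   from graphbit import LlmConfig, LlmClient
#   config = LlmConfig.openai(os.environ["OPENAI_API_KEY"], "<model-name>")
#   client = LlmClient(config)
reply = ask(EchoClient(), "Summarize our refund policy.", max_tokens=64)
print(reply)
```

Keeping the call behind a small `ask` helper means the stand-in can be swapped for the real client in one place, and the API key stays in an environment variable rather than in source code.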

GraphBit Pros and Cons: Honest Review

Pros

Considerations

Is GraphBit Worth It? FAQ & Reviews

GraphBit is built with Rust but provides a Python API, making it accessible to Python developers.

According to the cited benchmarks, GraphBit outperforms LangChain in CPU efficiency (14x lower usage), memory usage (13x lower), and throughput (4% higher).

GraphBit's free tier is suitable for small projects, but its main strength lies in enterprise-scale applications.

Enterprise users receive priority support, while free users can access community support via Discord and GitHub.

GraphBit is designed to work with OpenAI models; future updates may add support for other LLMs.