Gemma 3

Google's most advanced open AI model for developers

What is Gemma 3? Complete Overview

Gemma 3 is Google's most capable open model, built from the same research and technology as Gemini 2.0. It is designed to deliver strong performance on a single GPU or TPU, so developers can use it without a large cluster. With multimodal input, broad language support, and four model sizes, Gemma 3 handles tasks ranging from document analysis to multilingual applications. Its compact architecture allows deployment on hardware from mobile devices to enterprise servers. A 128K-token context window (32K for the 1B model) and support for function calling make it suitable for long-context reasoning and for integration with existing systems.

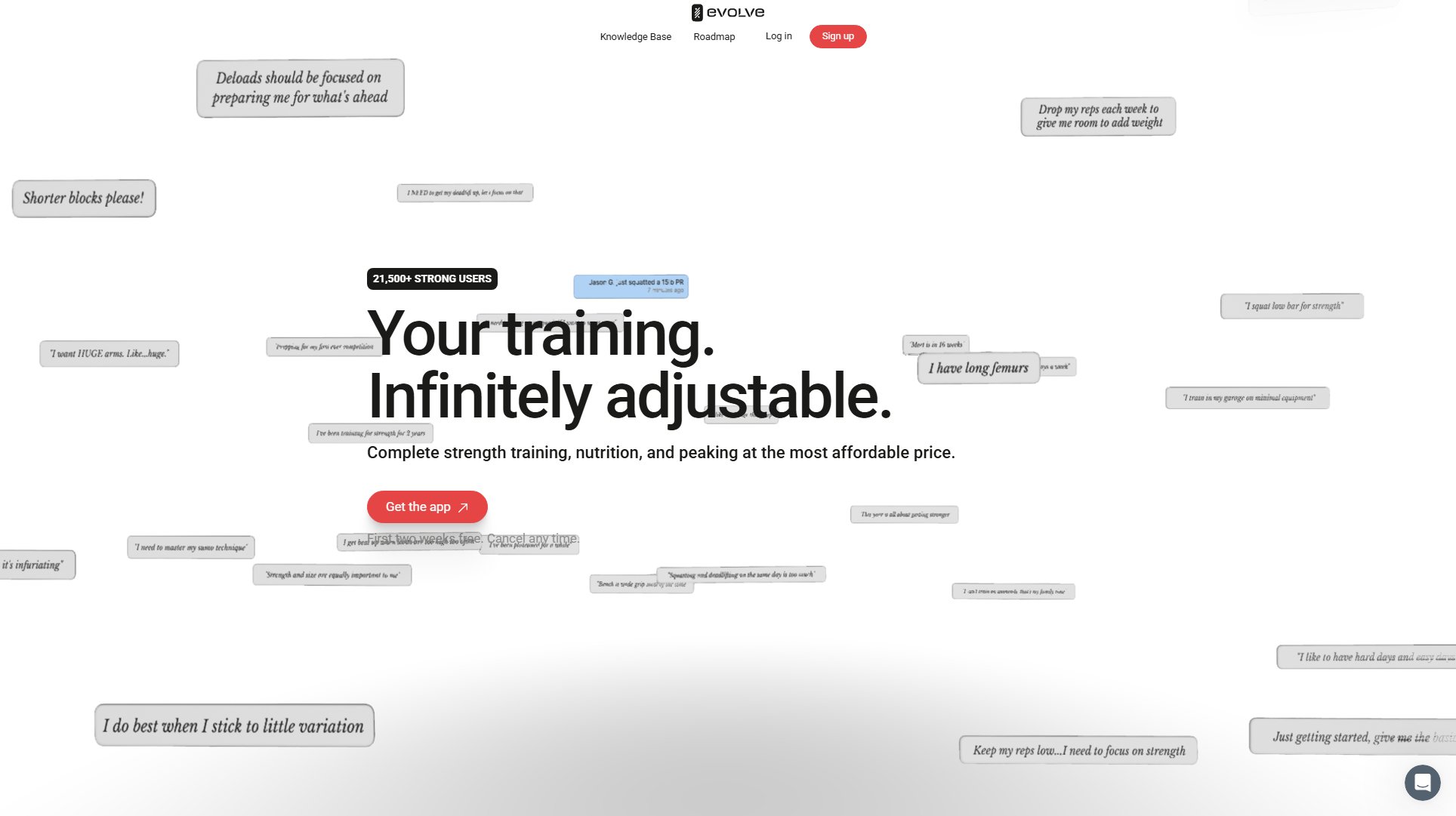

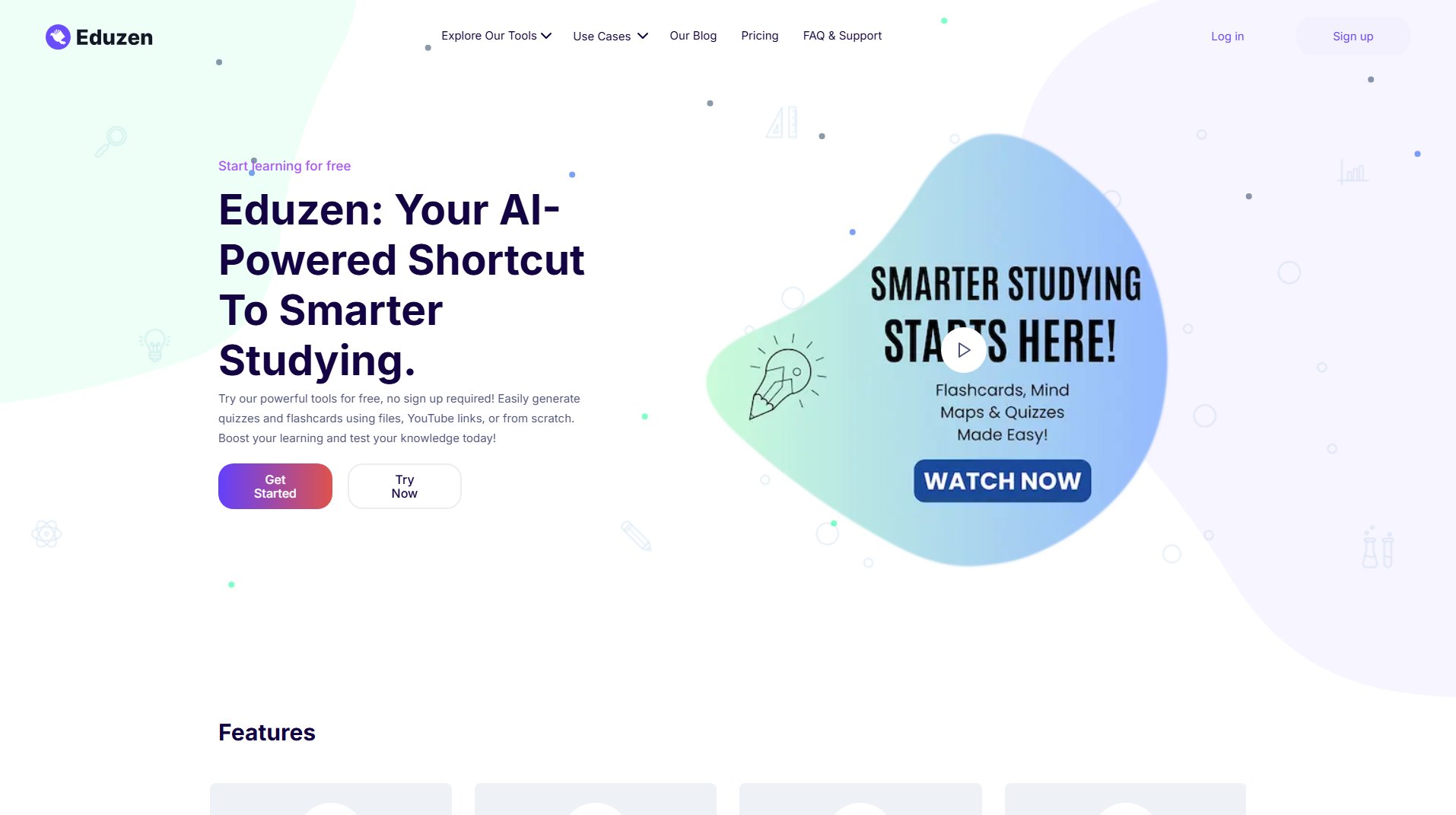

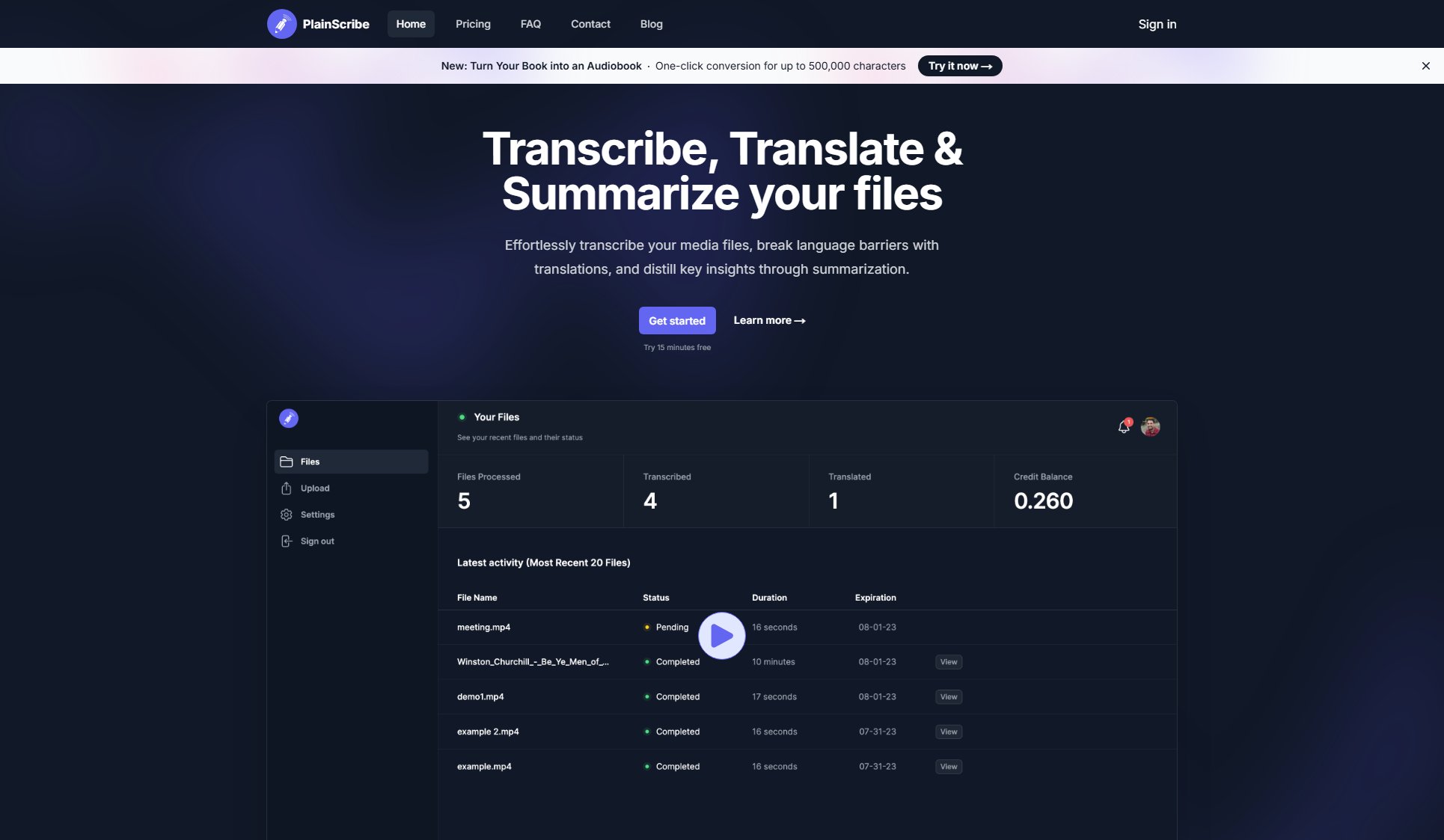

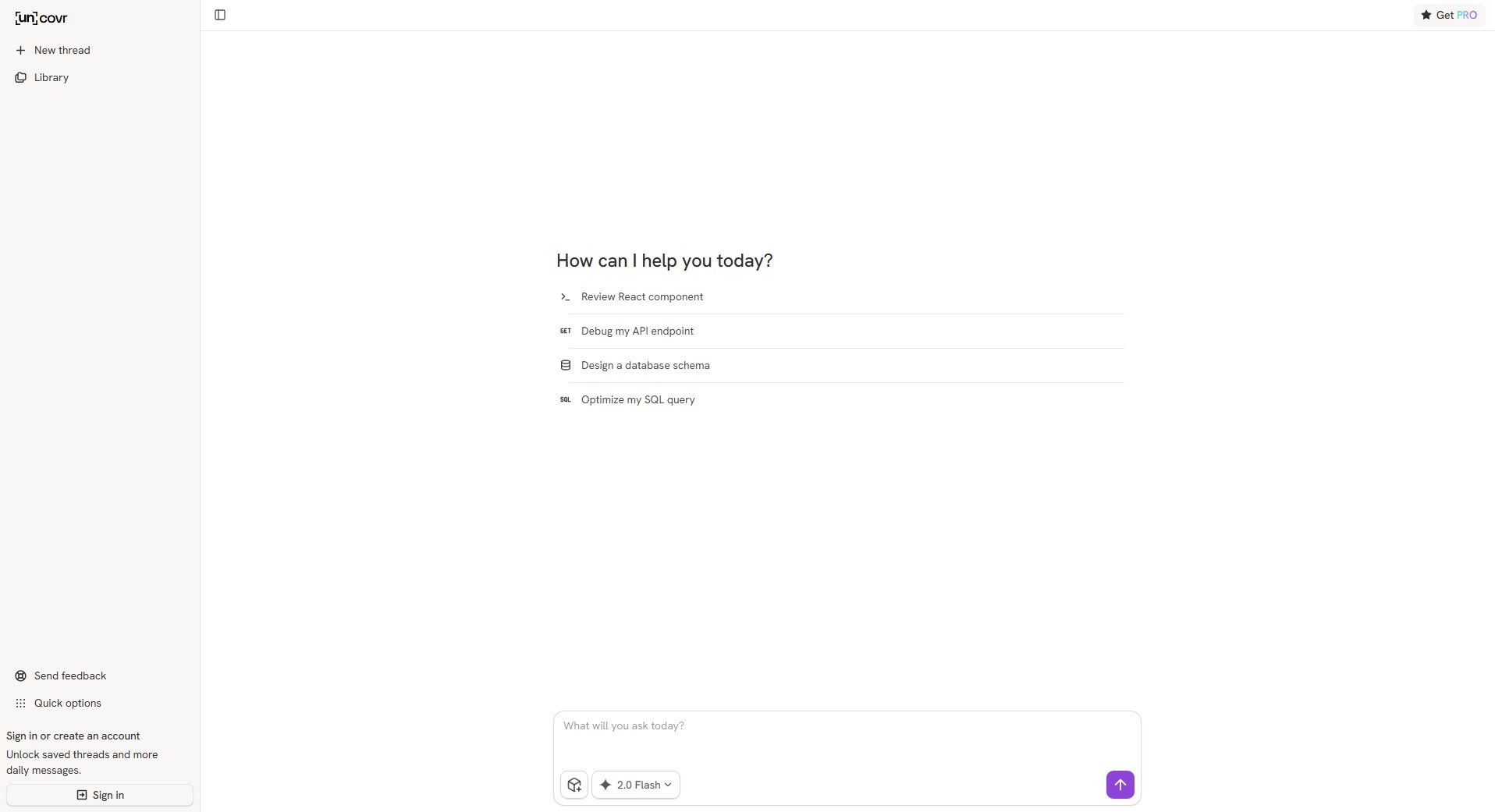

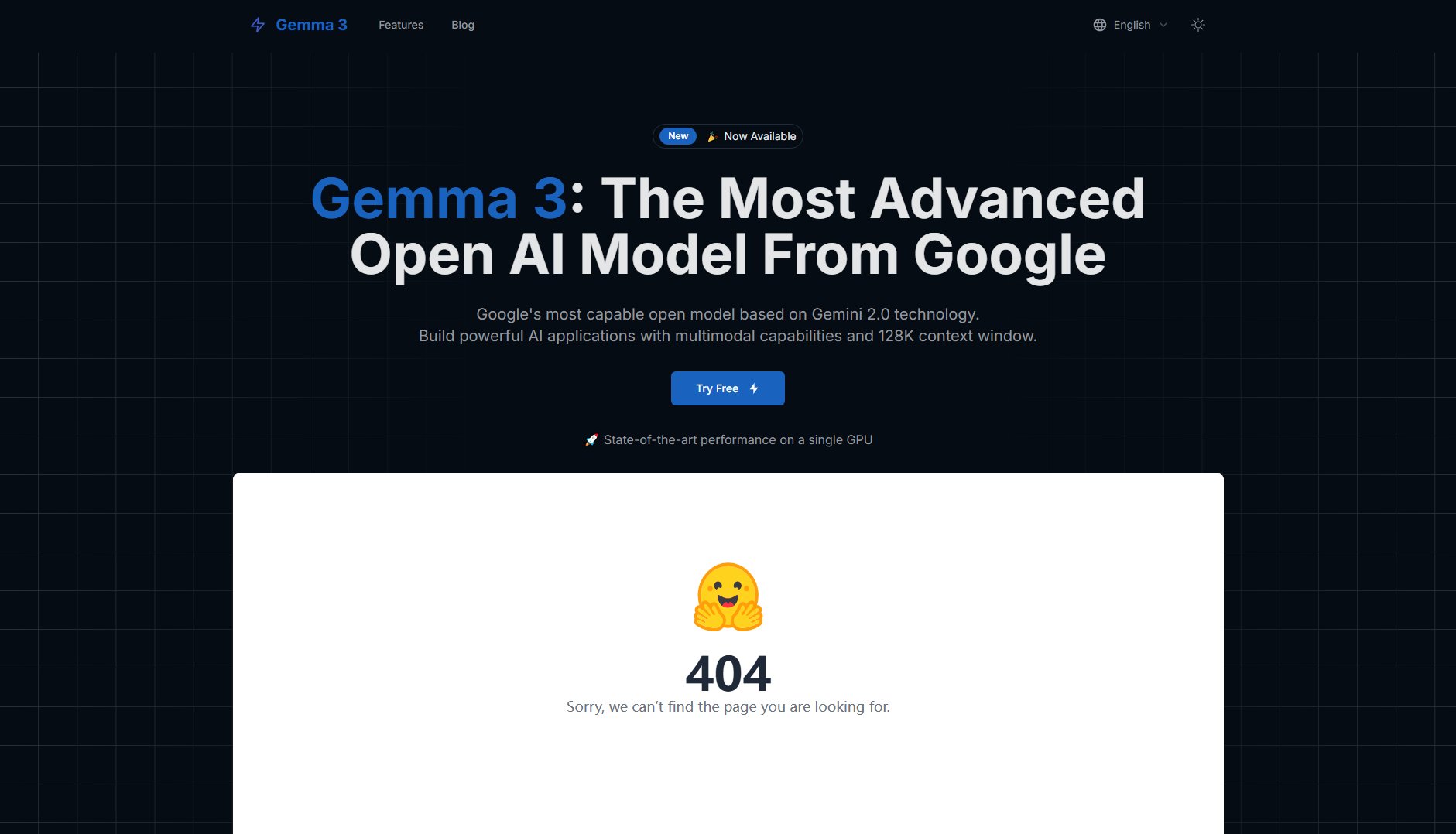

Gemma 3 Interface & Screenshots

Gemma 3 Official screenshot of the tool interface

What Can Gemma 3 Do? Key Features

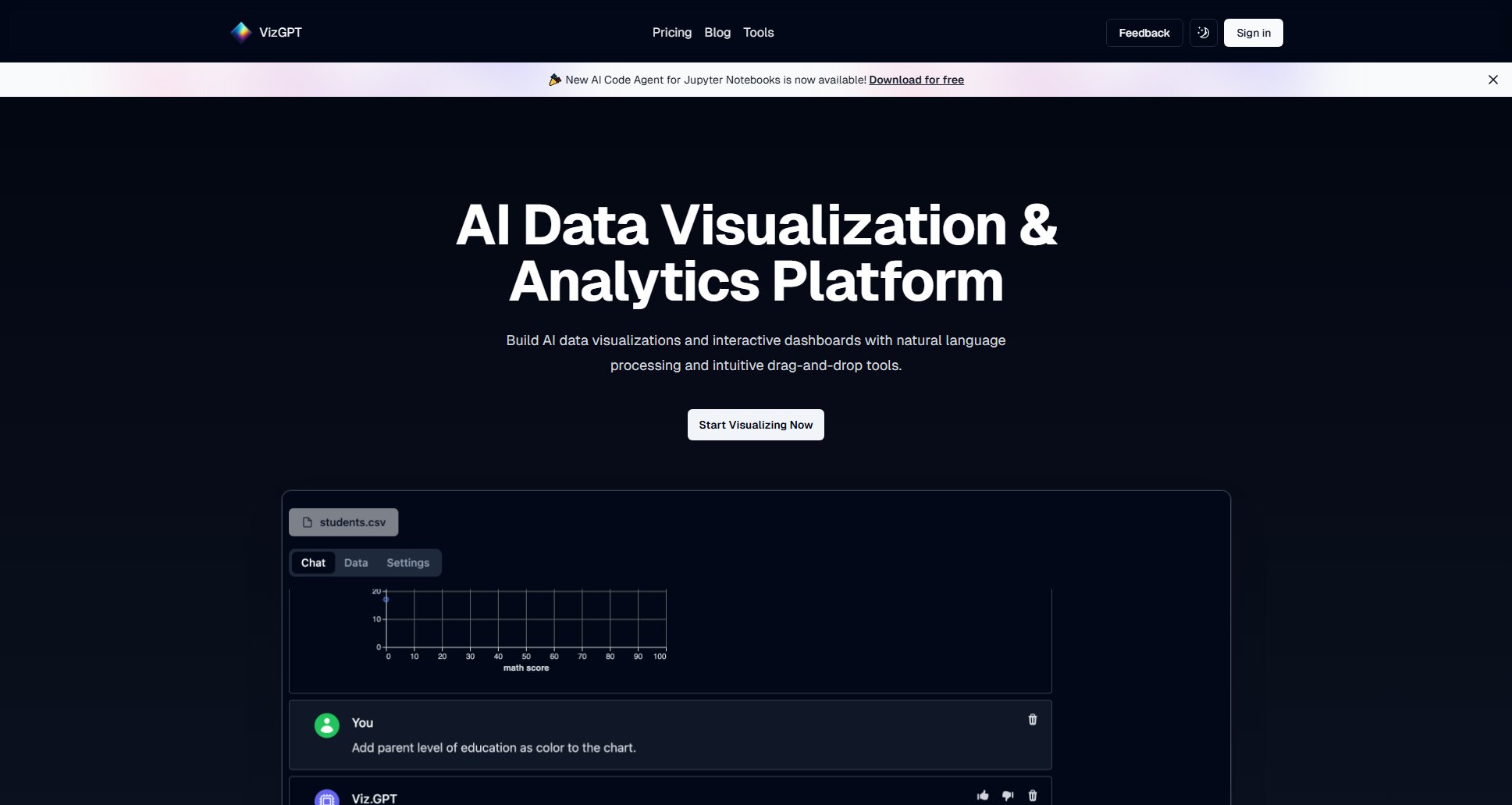

Vision-Language Understanding

Gemma 3 processes images and text together and can reason about visual content, enabling multimodal applications that interpret images alongside text. Image input is supported by the 4B, 12B, and 27B models; the 1B model is text-only.

128K Token Context Window

With a 128K-token context window (32K for the 1B model), Gemma 3 can take in long inputs, such as entire reports or several images, in a single prompt. This supports document analysis and reasoning tasks that must keep track of context across long sequences.
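Whether an input fits is simple arithmetic: text tokens plus image tokens plus room for the answer must stay within the window. The sketch below uses limits from Google's Gemma 3 model card (128K tokens for 4B, 12B, and 27B; 32K for 1B; each image encoded to 256 tokens). Treating 128K as 131,072 and 32K as 32,768 tokens is an assumption, the function name and default output reserve are hypothetical, and real token counts must come from the model's tokenizer.

```python
# Context-window limits per model size (assumed: 128K = 131,072, 32K = 32,768 tokens).
CONTEXT_LIMITS = {"1b": 32_768, "4b": 131_072, "12b": 131_072, "27b": 131_072}
TOKENS_PER_IMAGE = 256  # each image is encoded to a fixed number of tokens


def fits_in_context(model_size: str, text_tokens: int, num_images: int = 0,
                    reserve_for_output: int = 1_024) -> bool:
    """Return True if the prompt plus an output reserve fits in the window."""
    if model_size == "1b" and num_images > 0:
        raise ValueError("the 1B model is text-only")
    used = text_tokens + num_images * TOKENS_PER_IMAGE + reserve_for_output
    return used <= CONTEXT_LIMITS[model_size]


print(fits_in_context("27b", text_tokens=100_000, num_images=4))  # True
print(fits_in_context("1b", text_tokens=40_000))                   # False
```

Reserving output tokens up front avoids prompts that fit on their own but leave no room for the model to answer.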

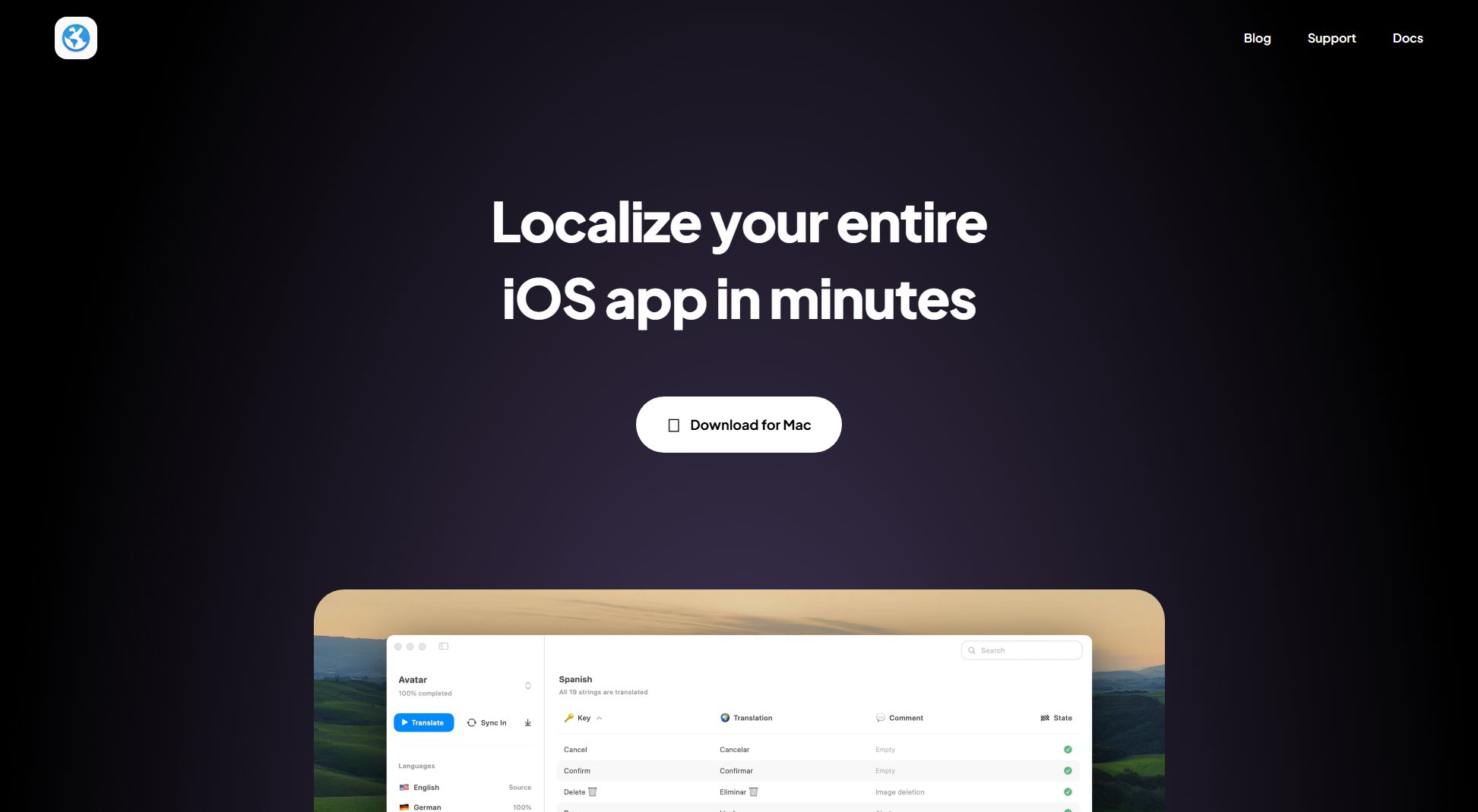

140+ Languages Support

Gemma 3 offers out-of-the-box support for over 35 languages and pretrained support for over 140, making it a strong starting point for global applications that need broad multilingual coverage; less common languages may still benefit from fine-tuning.

Multiple Model Sizes

Available in 1B, 4B, 12B, and 27B parameter versions, Gemma 3 offers flexibility to match your hardware and performance needs, from mobile devices to high-end GPUs.
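A rough way to match a model size to your hardware is to multiply the parameter count by the bytes stored per parameter. The sketch below uses the nominal sizes (1B, 4B, 12B, 27B) rather than exact parameter counts and counts weights only; the KV cache for long contexts, activations, and runtime overhead add to the total, so treat the results as lower bounds. The function name is hypothetical.

```python
# Bytes per parameter for common storage formats.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}
# Nominal parameter counts; the real checkpoints differ slightly.
NOMINAL_PARAMS = {"1b": 1e9, "4b": 4e9, "12b": 12e9, "27b": 27e9}


def weight_memory_gb(model_size: str, fmt: str) -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return NOMINAL_PARAMS[model_size] * BYTES_PER_PARAM[fmt] / 1e9


for size in NOMINAL_PARAMS:
    row = ", ".join(f"{fmt}: {weight_memory_gb(size, fmt):.1f} GB"
                    for fmt in BYTES_PER_PARAM)
    print(f"{size}: {row}")
```

For example, the 27B model's weights alone need about 54 GB in bfloat16 but about 13.5 GB at 4 bits per parameter, which is why quantized versions matter for single-GPU deployment.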

Function Calling

Gemma 3 supports function calling and structured output: the model can return a call, such as a function name with arguments, that your application parses and executes. This lets developers build AI-driven workflows that connect to existing systems.
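Function calling generally works as a loop: you describe the available functions to the model, it replies with a structured call, and your code parses and executes that call. The sketch below shows only the application side, assuming the model was prompted to reply with a JSON object of the form `{"name": ..., "arguments": {...}}`. That format, the `get_weather` function, and the `dispatch_call` helper are hypothetical choices for illustration, not a Gemma 3 API.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather service."""
    return f"Sunny in {city}"


# Registry of the only functions the model is allowed to trigger.
TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch_call(model_output: str) -> Any:
    """Parse a JSON function call from the model and run the matching tool."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("expected a JSON object")
    name = call.get("name")
    if name not in TOOLS:
        raise ValueError(f"unknown function: {name!r}")
    args = call.get("arguments", {})
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    # Arguments are passed as keyword arguments; validate them further in real code.
    return TOOLS[name](**args)


# Example reply that a model prompted with the JSON format above might produce.
reply = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
print(dispatch_call(reply))  # Sunny in Paris
```

The tool's result would then be sent back to the model in a follow-up turn so it can compose the final answer. Keeping an explicit registry means the model can only trigger functions you have chosen to expose.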

Quantized Models

Official quantized versions of Gemma 3 reduce memory and compute requirements while largely preserving accuracy, enabling deployment in resource-constrained environments.
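To illustrate the general idea behind quantization, the sketch below applies symmetric per-tensor int8 quantization to a small list of weights: each value is divided by a scale, rounded to an integer in [-127, 127], and multiplied back by the scale when used. This is a generic textbook scheme chosen for clarity; it is not necessarily how Google produced the official Gemma 3 checkpoints, and the function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximately q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)                       # [42, -127, 0, 90, -50]
print(max_error <= scale / 2)  # True
```

Storing one byte per weight instead of two (bfloat16) halves weight memory, at the cost of a rounding error of at most half the scale per value.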

Best Gemma 3 Use Cases & Applications

Scientific Research Paper Analysis

Researchers can leverage the 128K token context window to analyze entire scientific papers, maintaining coherence across long documents for comprehensive understanding and summarization.

Multilingual Customer Support

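Businesses can use Gemma 3 to answer customer queries in many languages. It offers out-of-the-box support for over 35 languages and pretrained support for over 140, so teams should check response quality in each target language before deploying without fine-tuning.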

Visual Assistant Development

Developers can build visual assistants that interpret images alongside text. Because Gemma 3 is sized to run on a single GPU, such assistants can be deployed without multi-GPU infrastructure.

AI Workflow Automation

Teams can use function calling and structured output to have Gemma 3 trigger actions in existing systems, such as querying a database or calling an internal API, as part of automated workflows.

How to Use Gemma 3: Step-by-Step Guide

Visit the Gemma 3 website and click the 'Try Free' button to access the model without any setup requirements.

Choose the model size that best fits your hardware capabilities and application needs (1B, 4B, 12B, or 27B parameters).

Input your text or upload images for multimodal processing, depending on your application requirements.

Adjust parameters such as temperature, top-p, and max tokens to customize the model's output for your specific use case.

Run your query and review the output; if the results are not what you need, adjust the parameters and run it again.

Advanced users can integrate the model into their workflows using function calling and structured output generation.

Is Gemma 3 Worth It? FAQ & Reviews

What is Gemma 3?

Gemma 3 is Google's most advanced open model, built on Gemini 2.0 technology. It adds multimodal input, a 128K-token context window, and support for 140+ languages, and it comes in four sizes optimized for different hardware.

What hardware does Gemma 3 need?

The 1B model runs on CPUs and mobile devices, the 4B model runs on consumer GPUs, and the 27B model can run on a single NVIDIA GPU. Gemma 3 is optimized for NVIDIA GPUs, Google Cloud TPUs, and AMD GPUs via the ROCm stack.

Is Gemma 3 free to use?

You can try Gemma 3 for free directly on the website, with no setup required. The site also provides examples and use cases to help you get started quickly.

Can I customize the model's output?

Yes. You can adjust generation parameters, including max new tokens, temperature, top-p, top-k, and repetition penalty, to tune the model's behavior for your use case.

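How does Gemma 3 compare with other models?

Google positions Gemma 3 as state of the art for its size: in preliminary human-preference evaluations on the LMArena leaderboard, it outperformed much larger models such as Llama 3 405B and DeepSeek-V3 while requiring only a single GPU, which makes it more accessible and cost-effective to run.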