ProLLM Benchmarks

Real-world LLM benchmarks for business decisions

What is ProLLM Benchmarks? Complete Overview

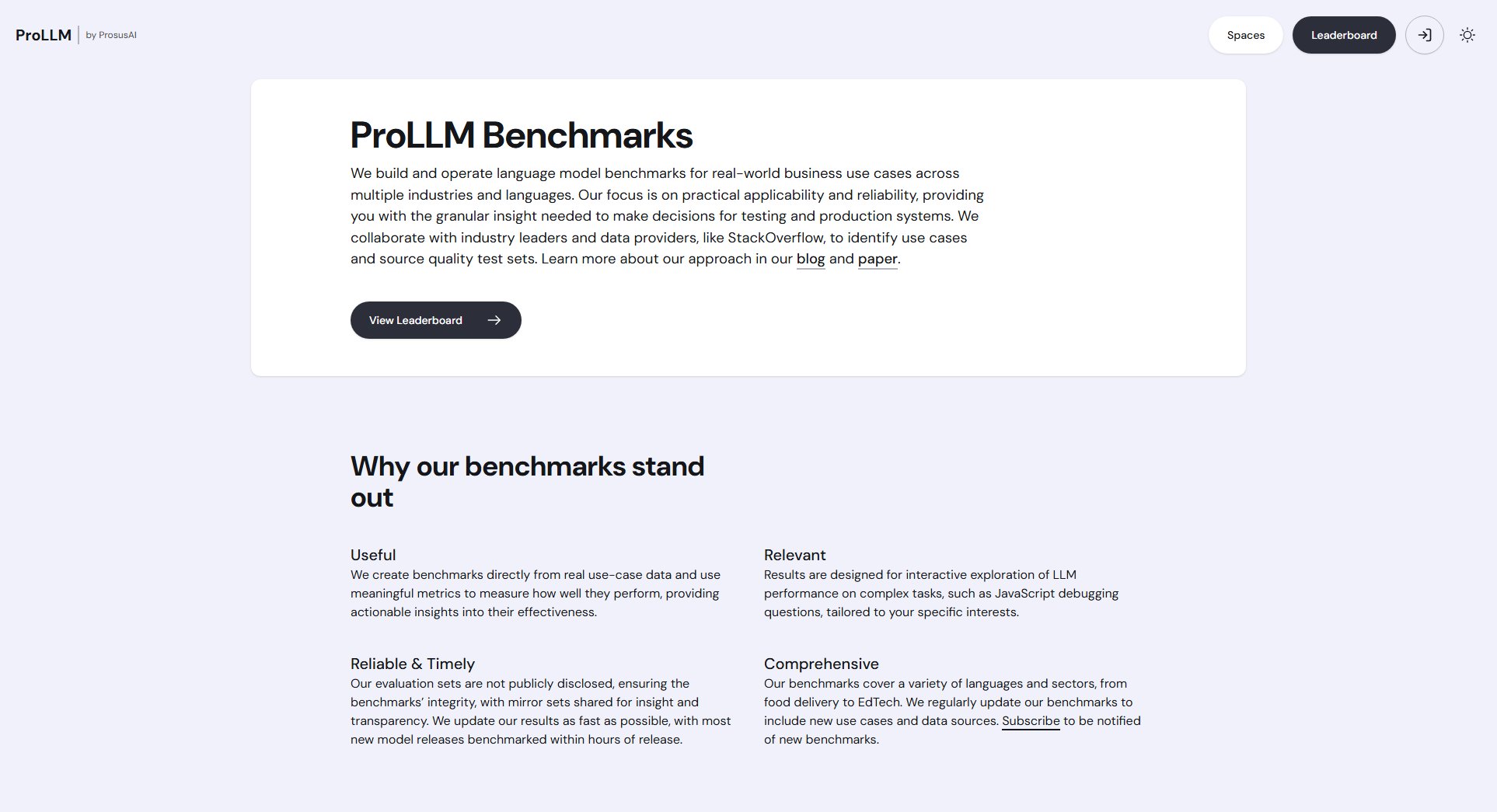

ProLLM Benchmarks builds and operates language model benchmarks for real-world business applications across industries and languages. The platform emphasizes practical applicability and reliability, providing granular, actionable results that inform decisions about testing and production systems. ProLLM works with industry leaders and data providers such as Stack Overflow to identify relevant use cases and source high-quality test sets. Its target audience is enterprises and professionals who need accurate, up-to-date benchmarks to assess language models for their specific needs.

What Can ProLLM Benchmarks Do? Key Features

Useful Benchmarks

ProLLM benchmarks are derived directly from real use-case data and scored with meaningful metrics. As a result, the numbers reflect how language models perform in practical scenarios, which gives businesses results they can act on.

Relevant Results

The platform lets users interactively explore LLM performance on complex tasks, such as JavaScript debugging questions. Because results can be narrowed to the tasks a team cares about, they are directly relevant to businesses evaluating models for a particular application.

Reliable & Timely Updates

ProLLM protects the integrity of its benchmarks by keeping evaluation sets private, while sharing mirror sets for transparency. Results are updated quickly: new model releases are often benchmarked within hours of becoming available.

Comprehensive Coverage

ProLLM Benchmarks span multiple languages and sectors, including food delivery and EdTech. The platform continuously updates its benchmarks to incorporate new use cases and data sources, ensuring comprehensive coverage for diverse business needs.

Best ProLLM Benchmarks Use Cases & Applications

JavaScript Debugging

Businesses can use ProLLM Benchmarks to evaluate how well different language models perform in debugging JavaScript code, ensuring they select the most effective model for their development needs.

EdTech Applications

Educational technology companies can leverage ProLLM Benchmarks to assess language models' performance in generating educational content or answering student queries, enhancing the learning experience.

Food Delivery Services

ProLLM Benchmarks help food delivery platforms evaluate language models for customer support interactions, ensuring accurate and efficient responses to user inquiries.

How to Use ProLLM Benchmarks: Step-by-Step Guide

1. Visit the ProLLM Benchmarks website and explore the available benchmarks to identify those relevant to your industry or use case.

2. Review the leaderboard to compare the performance of different language models on the tasks that matter to your business.

3. Subscribe to receive notifications about new benchmarks and updates, so you stay informed about the latest evaluations.

4. Use the insights from ProLLM Benchmarks to decide which language models to deploy in your testing or production systems.
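When several benchmarks matter to the same decision, one way to compare models is to combine their leaderboard scores with weights that reflect business priorities. The sketch below is a minimal illustration of that idea, not part of ProLLM itself: it assumes scores have been copied by hand from the leaderboard, and the names `LeaderboardRow` and `rank_models`, the task labels, the model names, and every number are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeaderboardRow:
    model: str    # placeholder model name, not a real leaderboard entry
    task: str     # hypothetical task label, e.g. "javascript-debugging"
    score: float  # score as read off the leaderboard (higher is better)


def rank_models(rows, task_weights):
    """Return (model, weighted_score) pairs, best first.

    Only models with a score for every weighted task are ranked, so a
    missing result cannot silently inflate or deflate a total.
    """
    total_weight = sum(task_weights.values())
    if total_weight <= 0:
        raise ValueError("task_weights must sum to a positive number")

    # Collect each model's scores, keeping only the tasks we care about.
    scores = {}
    for row in rows:
        if row.task in task_weights:
            scores.setdefault(row.model, {})[row.task] = row.score

    ranked = []
    for model, by_task in scores.items():
        if set(by_task) != set(task_weights):
            continue  # skip models missing at least one weighted task
        weighted = sum(by_task[t] * w for t, w in task_weights.items())
        ranked.append((model, weighted / total_weight))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Placeholder data: invented values for illustration only.
rows = [
    LeaderboardRow("model-a", "javascript-debugging", 0.80),
    LeaderboardRow("model-a", "customer-support", 0.60),
    LeaderboardRow("model-b", "javascript-debugging", 0.70),
    LeaderboardRow("model-b", "customer-support", 0.90),
    LeaderboardRow("model-c", "javascript-debugging", 0.95),
]
# A support-heavy business might weight customer support three times higher.
weights = {"javascript-debugging": 1, "customer-support": 3}

ranking = rank_models(rows, weights)
for model, score in ranking:
    print(f"{model}: {score:.2f}")
```

With these placeholder values, `model-b` ranks ahead of `model-a`, and `model-c` is left out because it has no customer-support score. Excluding incomplete models is a deliberate choice in this sketch; treating a missing score as zero would be an alternative, but it would penalize models that simply have not been benchmarked on a task yet.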

Is ProLLM Benchmarks Worth It? FAQ & Reviews

ProLLM Benchmarks are updated as quickly as possible, with most new model releases benchmarked within hours of their availability.

The evaluation sets are not publicly disclosed, which maintains benchmark integrity, but mirror sets are shared for transparency and insight.

ProLLM Benchmarks cover a variety of industries, including EdTech, food delivery, and more, with regular updates to include new use cases.