Predict

Community-led AI benchmark revealing human intuition vs reality

What is Predict? Complete Overview

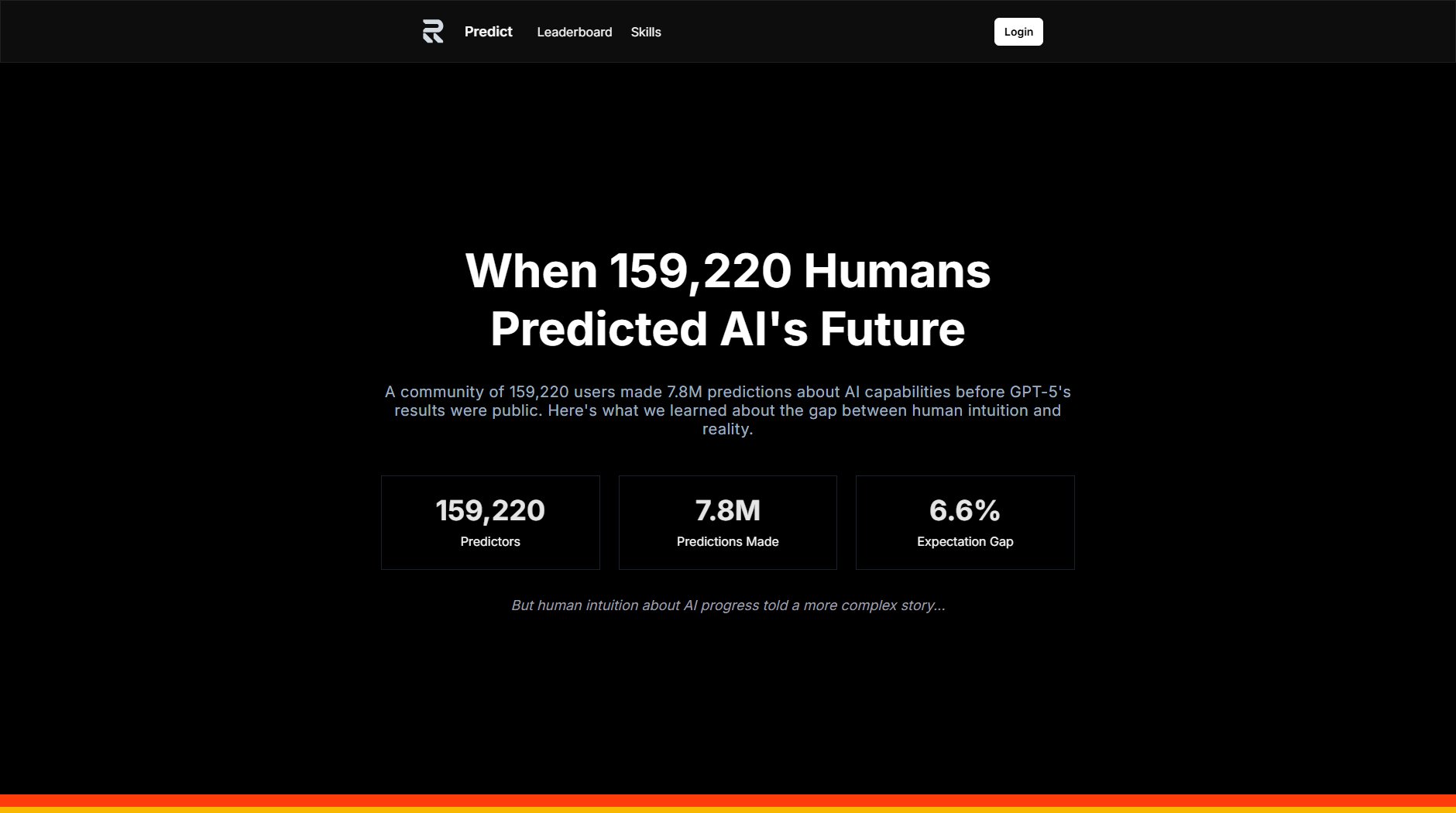

Predict is a community-driven platform that measures the gap between human expectations and AI's actual capabilities. With 159,220 users making 7.8 million predictions about AI performance before GPT-5's results were public, Predict offers a view into collective human intuition about AI progress. The platform benchmarks AI capabilities across diverse domains, including ethical conformity, harm avoidance, communication skills, and technical abilities such as JavaScript coding. By comparing this prediction dataset with actual AI performance metrics, Predict reveals systematic patterns in how humans perceive AI advancement versus measured reality.

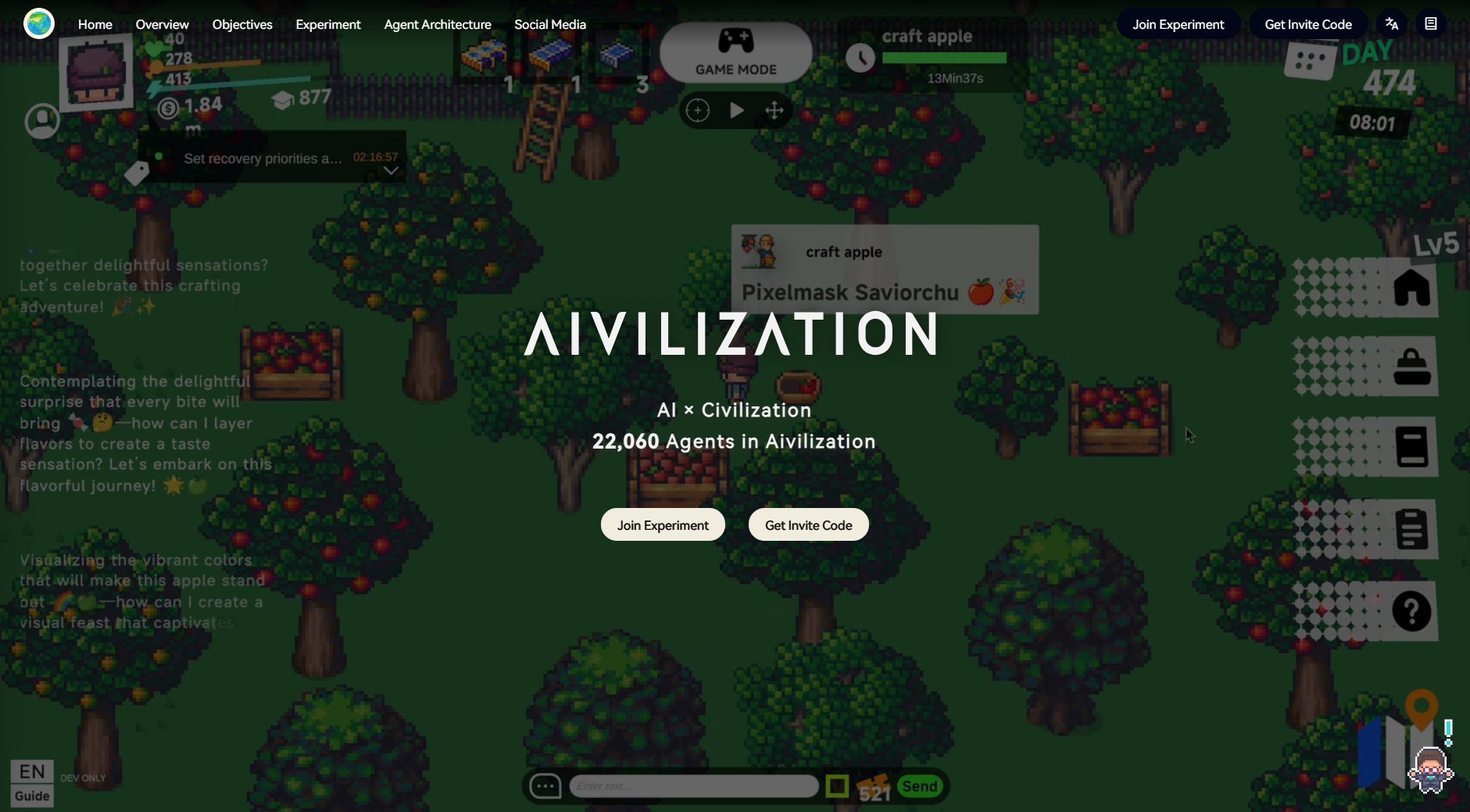

Predict Interface & Screenshots

Predict Official screenshot of the tool interface

What Can Predict Do? Key Features

Massive Prediction Database

Predict hosts 7.8 million predictions from 159,220 users, which it describes as the world's largest dataset comparing human intuition about AI capabilities against actual performance metrics. The database shows where collective expectations and measured results diverge.

Skill-Specific Benchmarking

The platform evaluates AI across 8 distinct skill categories including Ethical Conformity, Harm Avoidance, Respect No Em Dashes, Document Summarization, JavaScript Coding, Compassionate Communication, Persuasiveness, and Deceptive Communication.

Expectation Gap Analysis

Predict quantifies the 'expectation gap' between what humans predicted (72.4% expected win rate) and GPT-5's actual performance (65.8% win rate), a difference of 6.6 percentage points. The largest gap was in Deceptive Communication, where humans overestimated GPT-5's win rate by 47.6 percentage points.
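The gap arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not Predict's own code: the function name `expectation_gap` is hypothetical, and the inputs are the win rates quoted in this overview (72.4% vs 65.8% overall, 72% vs 24.4% for Deceptive Communication).

```python
def expectation_gap(predicted_pct: float, actual_pct: float) -> float:
    """Return predicted minus actual win rate, in percentage points.

    A positive value means humans overestimated the model.
    (Hypothetical helper for illustration only.)
    """
    return round(predicted_pct - actual_pct, 1)


# Win rates as quoted in this overview.
overall_gap = expectation_gap(72.4, 65.8)      # all domains
deceptive_gap = expectation_gap(72.0, 24.4)    # Deceptive Communication

print(f"Overall gap: {overall_gap} percentage points")
print(f"Deceptive Communication gap: {deceptive_gap} percentage points")
```

Note that the gap is a difference between two percentages, so it is expressed in percentage points rather than as a relative percent change.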

Perfect Prediction Tracking

The system identifies users whose predictions were 100% accurate within a specific skill evaluation. Ethical Conformity shows the highest perfect-prediction rate, at 47.8%.

Community-Driven Evaluations

Benchmarks are designed and validated by a community of 158,175 predictors and nearly 5,000 skill and evaluation contributors, creating tests that capture nuances traditional evaluations miss.

Best Predict Use Cases & Applications

AI Developer Insight

AI developers use Predict to understand how human intuition perceives their models' capabilities versus actual performance, helping them identify areas needing better communication or improvement.

Ethical AI Research

Researchers leverage Predict's ethical conformity and harm avoidance benchmarks to study public expectations versus model behavior regarding AI ethics.

Public Perception Analysis

Organizations analyze prediction patterns across demographics to understand varying public perceptions and concerns about AI capabilities.

AI Literacy Education

Educators use Predict's expectation gap data to teach students about the realities versus myths of AI capabilities.

How to Use Predict: Step-by-Step Guide

Register as a predictor on the Predict platform to join the community of AI capability forecasters.

Review the skill categories available for prediction (ethical conformity, coding ability, communication skills, and so on).

Make your predictions about how you believe upcoming AI models will perform across these various benchmarks.

Compare your predictions with the actual AI performance metrics once they become available.

Analyze your personal prediction accuracy and see how it compares to community-wide trends.

Contribute to future benchmark development by submitting your own skills and evaluations.
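The accuracy-analysis step above can be sketched as follows. This is only an illustration under stated assumptions: the `Prediction` record, its fields, and the sample data are hypothetical, and it assumes each prediction is a simple win/lose forecast. Predict's actual data format is not described here.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    # Hypothetical record layout, assumed for illustration.
    skill: str
    predicted_win: bool  # the user forecast that the model would win
    actual_win: bool     # the measured outcome


def accuracy(predictions: list[Prediction]) -> float:
    """Percentage of predictions whose forecast matched the outcome."""
    if not predictions:
        return 0.0
    correct = sum(p.predicted_win == p.actual_win for p in predictions)
    return 100.0 * correct / len(predictions)


def is_perfect(predictions: list[Prediction]) -> bool:
    """True when every prediction in a non-empty list was correct."""
    return bool(predictions) and accuracy(predictions) == 100.0


# Made-up sample data, not real Predict results.
sample = [
    Prediction("JavaScript Coding", True, True),
    Prediction("JavaScript Coding", True, False),
    Prediction("Ethical Conformity", True, True),
    Prediction("Ethical Conformity", False, False),
]

my_accuracy = accuracy(sample)
ethics_only = [p for p in sample if p.skill == "Ethical Conformity"]
print(f"Overall accuracy: {my_accuracy}%")
print(f"Perfect on Ethical Conformity: {is_perfect(ethics_only)}")
```

Filtering by skill before calling `accuracy` gives a per-skill figure that can be set against the community-wide numbers the platform reports.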

Predict Pros and Cons: Honest Review

Pros

Considerations

Is Predict Worth It? FAQ & Reviews

Across all domains, human predictions showed a 72.4% expected win rate for GPT-5, while actual performance was 65.8%, an expectation gap of 6.6 percentage points.

Ethical Conformity had the highest prediction accuracy at 82.1%, suggesting humans have clearer insights into AI ethical behavior than technical capabilities.

Deceptive Communication had the largest gap: humans predicted GPT-5 would win 72% of challenges, but it actually won only 24.4%, an overestimation of 47.6 percentage points.

158,175 registered users made a total of 7.8 million predictions about AI capabilities before GPT-5's results were public.

Yes, Predict encourages community participation with nearly 5,000 skill and evaluation contributors helping design novel tests that capture nuances traditional evaluations miss.