LLM HUB

AI Pipeline Orchestration for seamless multi-model workflows

What is LLM HUB? Complete Overview

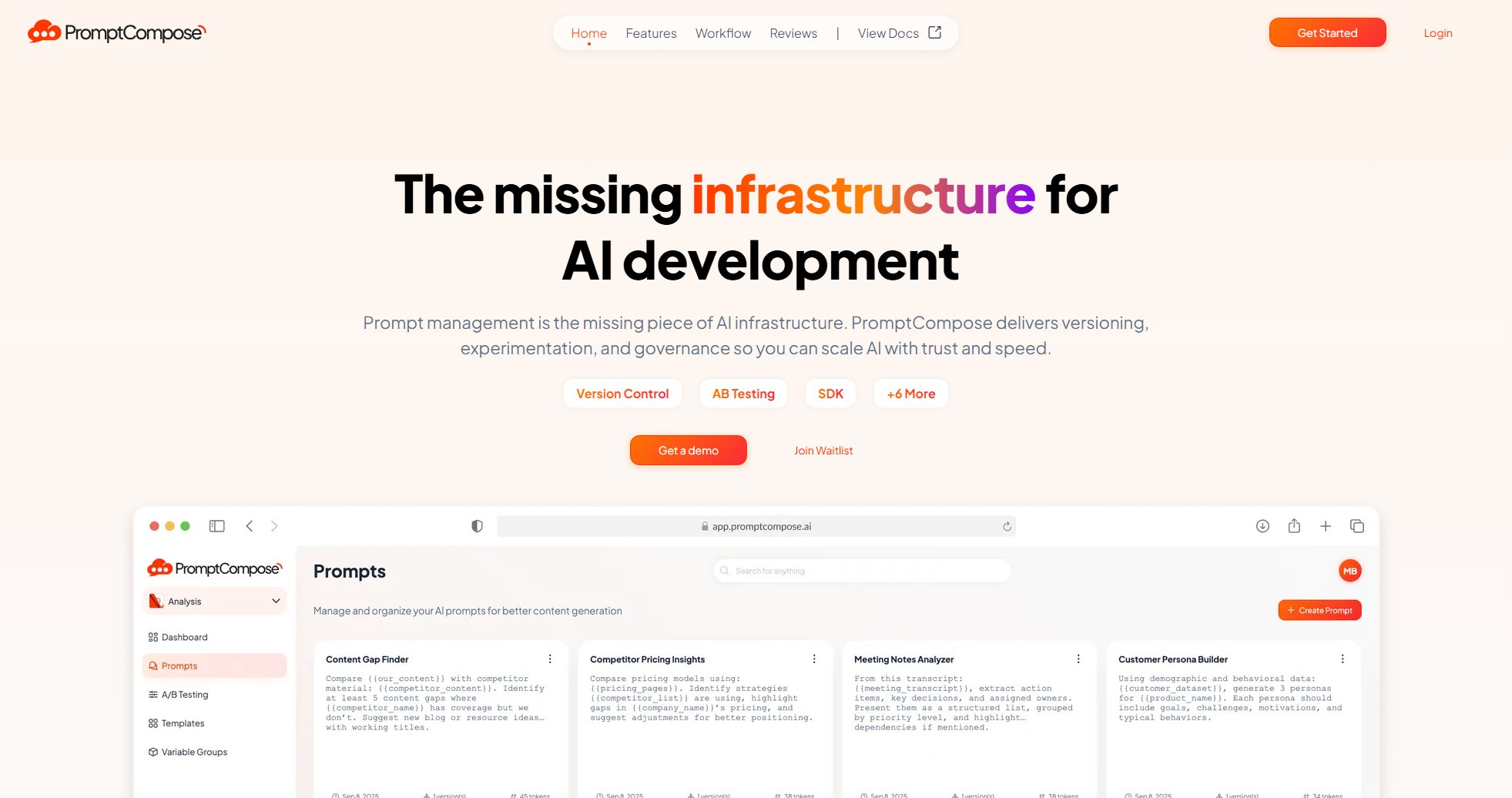

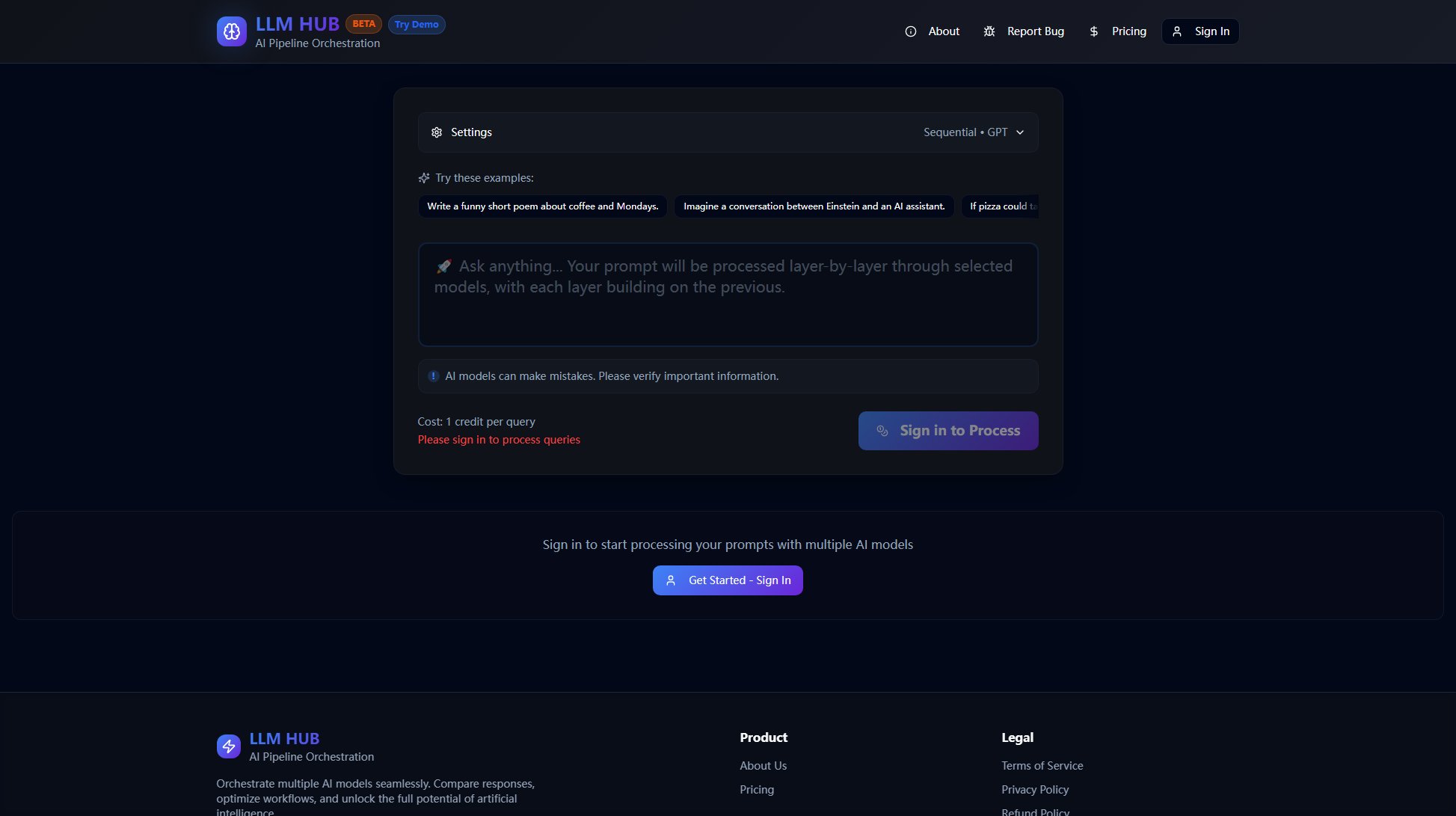

LLM HUB is an AI pipeline orchestration platform for integrating and comparing multiple AI models (such as GPT, Claude, and Gemini) within a single workflow. It is aimed at developers, data scientists, and AI enthusiasts who need to compare models, refine responses, and automate workflows. Users can run queries sequentially or in parallel across different models, which gives flexibility when testing and deploying AI solutions. Pricing is credit-based: users pay only for the queries they run, which suits both small-scale experimentation and large-scale production use.

LLM HUB Interface & Screenshots

[Screenshot: official LLM HUB tool interface]

What Can LLM HUB Do? Key Features

Multi-Model Orchestration

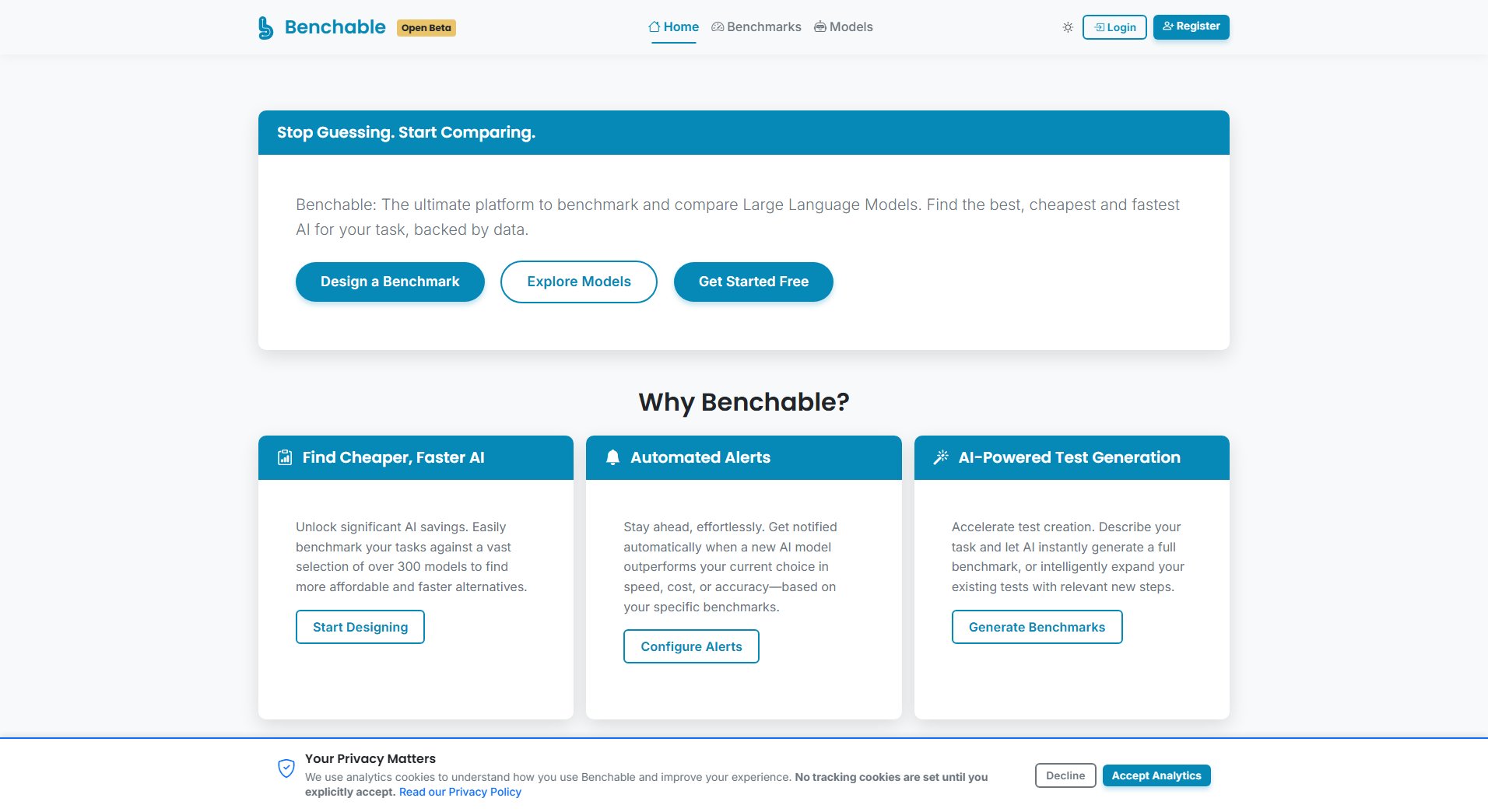

Run prompts across multiple AI models (GPT, Claude, Gemini) simultaneously or sequentially, allowing for comprehensive response comparison and model benchmarking.

Workflow Optimization

Create optimized AI workflows by chaining models or running them in parallel, saving time and improving output quality through model collaboration.
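
The difference between the two modes can be illustrated with a minimal Python sketch. It does not use LLM HUB's own API, which this overview does not describe: the stub functions below are hypothetical stand-ins for real model calls, and `run_parallel` and `run_sequential` are illustrative names only. Parallel mode sends the same prompt to every model at once; sequential mode passes each model's output to the next one.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# A model is represented as any function that maps a prompt to a response.
ModelFn = Callable[[str], str]


def make_stub(name: str) -> ModelFn:
    """Build a hypothetical stand-in for a real model call (assumption, not a real API)."""
    def call(prompt: str) -> str:
        return f"[{name}] {prompt}"
    return call


def run_parallel(models: Dict[str, ModelFn], prompt: str) -> Dict[str, str]:
    """Send the same prompt to every model concurrently; return answers keyed by model name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


def run_sequential(chain: List[ModelFn], prompt: str) -> str:
    """Feed each model's output into the next model in the chain."""
    text = prompt
    for fn in chain:
        text = fn(text)
    return text


models = {name: make_stub(name) for name in ("gpt", "claude", "gemini")}

# Parallel: three independent answers to the same prompt, ready for comparison.
parallel_results = run_parallel(models, "Summarize this report")

# Sequential: gpt answers first, claude refines that answer, gemini refines again.
chained_result = run_sequential(list(models.values()), "Summarize this report")
```

Parallel runs suit comparison and benchmarking because every model sees the identical prompt, while a sequential chain suits refinement, where a later model builds on an earlier model's output.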

Credit-Based System

A pay-as-you-go system in which 1 credit buys 1 query. Credits never expire, so users control their AI spending without committing to a subscription.

Prompt Examples Library

Access a curated collection of example prompts to kickstart your AI projects, ranging from creative writing to technical explanations and data analysis.

Response Comparison

Easily compare outputs from different AI models side-by-side to evaluate quality, tone, and accuracy for your specific use case.

Best LLM HUB Use Cases & Applications

Content Creation Comparison

Marketing teams can generate multiple versions of content using different AI models, then select the most compelling outputs for their campaigns.

Technical Documentation

Developers can explain complex code snippets through different AI models to find the clearest explanations for documentation or team training.

Research Assistance

Academics can compare how different AI models handle specialized queries, identifying which provides the most accurate and comprehensive responses.

Product Ideation

Product teams can generate diverse creative concepts by running parallel prompts across multiple models, sparking innovative ideas.

How to Use LLM HUB: Step-by-Step Guide

1. Sign in to your LLM HUB account, or create a new one, to access the platform's features.
2. Purchase credits through one of the available packages (100, 250, or 500 credits), or request a custom amount for larger needs.
3. Choose between sequential (one model after another) and parallel (multiple models simultaneously) processing modes.
4. Enter your prompt, or select one of the example prompts to get started quickly with common use cases.
5. Review and compare the responses from the different AI models, then integrate the best outputs into your workflow.
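
When deciding how many credits to buy, a rough estimate can be sketched in Python. The package sizes (100, 250, 500) and the 1 credit = 1 query rate come from this page; the function names are hypothetical, and the sketch assumes that each model's response to a prompt counts as one query, which this page does not confirm.

```python
from typing import Optional

# Package sizes listed in the purchase step of the guide.
PACKAGES = (100, 250, 500)


def credits_needed(prompts: int, models_per_prompt: int) -> int:
    """Estimate credits at 1 credit per query.

    Assumption: running one prompt on N models counts as N queries.
    """
    if prompts < 0 or models_per_prompt < 0:
        raise ValueError("counts must be non-negative")
    return prompts * models_per_prompt


def smallest_package(credits: int) -> Optional[int]:
    """Return the smallest listed package that covers the estimate.

    Returns None when the estimate exceeds the largest package,
    meaning a custom amount would have to be requested.
    """
    for size in PACKAGES:
        if credits <= size:
            return size
    return None


# 40 prompts compared across 3 models is an estimated 120 credits.
estimate = credits_needed(prompts=40, models_per_prompt=3)
package = smallest_package(estimate)
```

Under these assumptions, 40 prompts across 3 models need 120 credits, so the 250-credit package is the smallest one that fits. Because credits never expire, the unused remainder carries over to later work.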

LLM HUB Pros and Cons: Honest Review

Pros

Considerations

Is LLM HUB Worth It? FAQ & Reviews

How much does a query cost?

Each query costs 1 credit, regardless of which AI model you use or how long the response is, which keeps cost estimation simple.

Do credits expire?

No, purchased credits never expire. You can use them whenever you need them.

Can I get a refund for unused credits?

According to the refund policy, refunds are not typically offered for unused credits, but you can contact support about special circumstances.

Which AI models are supported?

GPT, Claude, and Gemini models are currently available, with plans to add more based on user demand and emerging technologies.

Is there a free tier?

There is no permanent free tier, but new users can contact support about potential trial credits for testing the platform.