Denvr Dataworks

High-performance AI cloud for training, inference, and data science

What is Denvr Dataworks? Complete Overview

Denvr Dataworks provides vertically integrated AI cloud services built on high-performance compute infrastructure for AI training, inference, and data science workloads. Its platform offers NVIDIA H100 and A100 GPUs and Intel Gaudi HPUs in virtualized and bare-metal environments, hosted in data centers in Canada and the USA. The service targets AI startups, developers, researchers, and enterprises that want optimized AI infrastructure with self-service provisioning, petabyte-scale storage, and InfiniBand or RoCE v2 networking at up to 3.2 Tbps. Denvr also runs the AI Ascend startup program, which offers qualifying early-stage companies up to $500,000 in compute credits.

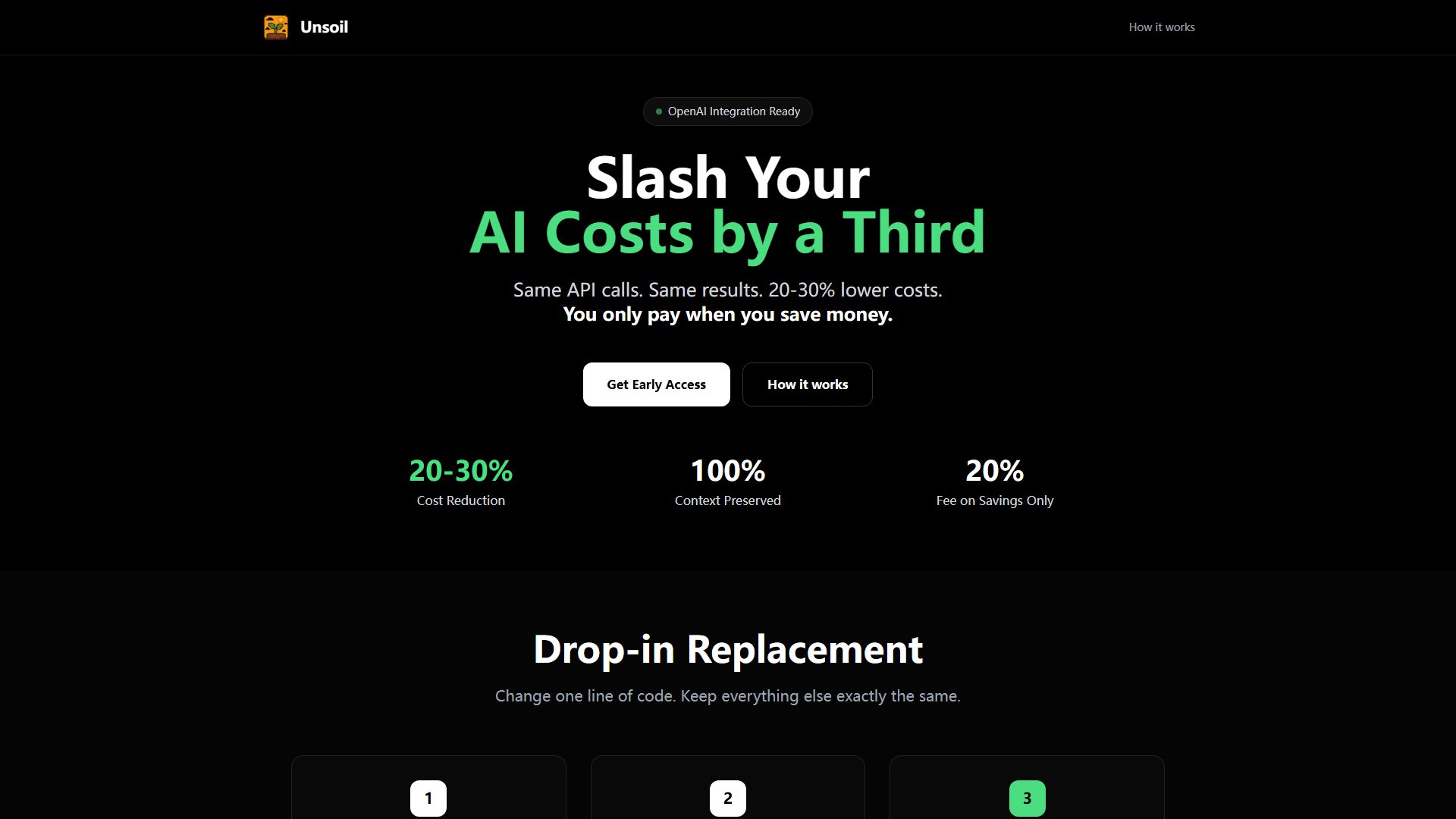

Denvr Dataworks Interface & Screenshots

Official screenshot of the Denvr Dataworks interface

What Can Denvr Dataworks Do? Key Features

High-Performance GPU Clusters

Deploy a single node or scale to clusters of 1,024 GPUs built on NVIDIA HGX H100, A100, and Intel Gaudi AI processors, interconnected by non-blocking InfiniBand or RoCE v2 networking at up to 3.2 Tbps. Hardware configurations are tuned for demanding AI workloads.
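As a rough sizing aid, the cluster and bandwidth figures above can be converted into node counts and byte rates. The sketch below assumes 8 GPUs per node (a common HGX layout) and treats 3.2 Tbps as an aggregate network figure; neither assumption comes from the source, and the function names are illustrative only.

```python
import math

GPUS_PER_NODE = 8  # assumption: 8-GPU HGX-style nodes, not stated in the source


def nodes_needed(total_gpus: int, gpus_per_node: int = GPUS_PER_NODE) -> int:
    """Return the number of nodes required to host total_gpus, rounded up."""
    return math.ceil(total_gpus / gpus_per_node)


def tbps_to_gigabytes_per_s(tbps: float) -> float:
    """Convert terabits per second to gigabytes per second (decimal units)."""
    return tbps * 1000 / 8


print(nodes_needed(1024))            # 128 nodes under the 8-GPU assumption
print(tbps_to_gigabytes_per_s(3.2))  # roughly 400 GB/s
```

Under these assumptions, a 1,024-GPU cluster spans 128 nodes, and 3.2 Tbps corresponds to about 400 GB/s of raw network bandwidth before protocol overhead.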

AI Inference Services

Run foundation or custom models with high-speed, low-cost inference through fully managed API endpoints. The platform offers serverless and dedicated OpenAI-compatible endpoints that simplify deployment and operations for production AI applications.
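Because the endpoints are described as OpenAI-compatible, a client can in principle target them with the standard chat-completions request shape. The following is a minimal sketch using only the Python standard library; the base URL, API key, and model name are placeholders rather than real Denvr values, so check the platform documentation for the actual ones.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values (hypothetical): replace with your endpoint, key, and model.
req = build_chat_request(
    "https://inference.example.com/v1", "YOUR_API_KEY", "example-model", "Hello"
)
print(req.full_url)
# To send the request, call urllib.request.urlopen(req) and parse the JSON body;
# the reply text is normally found at ["choices"][0]["message"]["content"].
```

Building the request separately from sending it keeps the example runnable without network access and makes the payload easy to inspect.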

Developer Platform

The developer platform includes Jupyter Notebooks, preloaded ML machine images with GPU drivers and Docker, Python and Terraform bindings, and detailed documentation, so developers can start building AI applications quickly.

Orchestration Layer

Intel Xeon CPU nodes power job scheduling and system operations with full compatibility for Kubernetes, SLURM, or custom orchestration stacks. The platform supports containerized workloads and provides flexible deployment options for different workflow requirements.
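For teams that bring their own SLURM workflow, a multi-node GPU job is typically described in a batch script. The sketch below renders such a script from Python using standard `sbatch` directives; the job name, node count, and training command are hypothetical, and partition or GRES names depend on how a given cluster is configured.

```python
def render_sbatch(job_name: str, nodes: int, gpus_per_node: int, command: str) -> str:
    """Render a minimal SLURM batch script that launches one task per GPU."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={gpus_per_node}",
        f"#SBATCH --gres=gpu:{gpus_per_node}",
        "",
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical job: 2 nodes with 8 GPUs each, running an illustrative train.py.
script = render_sbatch("train-demo", nodes=2, gpus_per_node=8, command="python train.py")
print(script)
```

The rendered text can be written to a file and submitted with `sbatch`; an equivalent Kubernetes setup would instead request GPUs through pod resource limits.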

Fast, Scalable Filesystems

Petabyte-scale network filesystems deliver over 10 GB/s of bandwidth on NVMe SSD storage. The storage is optimized for AI workloads and uses distributed data redundancy for high availability.
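To put the 10 GB/s figure in context, the sketch below estimates how long one full pass over a dataset would take at that rate. It assumes sustained sequential throughput with decimal units (1 TB = 1,000 GB); the 50 TB dataset size is purely illustrative, and real throughput depends on access pattern and client count.

```python
def transfer_seconds(size_tb: float, bandwidth_gb_per_s: float = 10.0) -> float:
    """Estimate seconds needed to read size_tb terabytes at a sustained rate."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_tb * 1000 / bandwidth_gb_per_s


seconds = transfer_seconds(50)  # illustrative 50 TB dataset
print(f"{seconds:.0f} s (~{seconds / 60:.0f} min)")
```

At a sustained 10 GB/s, a 50 TB dataset takes about 5,000 seconds, or a little under an hour and a half, to stream once.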

Best Denvr Dataworks Use Cases & Applications

AI Startup Model Development

Early-stage AI companies can leverage the startup program credits to access high-performance GPUs for developing and training foundation models without significant upfront infrastructure costs.

Large-scale Model Training

Research teams and enterprises can scale to clusters of 1,024 GPUs to train large language models or other complex AI systems.

Production AI Inference

Businesses can deploy managed API endpoints for serving AI models in production with low-latency, high-throughput inference capabilities.

How to Use Denvr Dataworks: Step-by-Step Guide

1. Sign up for an account on the Denvr Dataworks platform, or apply for the AI Ascend startup program if you qualify for compute credits.

2. Select your compute resources from the available GPU instances (NVIDIA H100, A100, Intel Gaudi) or CPU instances, based on your workload requirements.

3. Configure your environment with the developer tools, including Jupyter Notebooks and preloaded ML images, or bring your own containerized workflows.

4. Deploy your AI models for training or inference on the high-performance compute clusters and networked storage systems.

5. Monitor and manage your workloads through the platform's orchestration tools, and contact technical support as needed.
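After an NVIDIA instance is up, a quick sanity check is to confirm that the GPUs are visible to the driver. The sketch below shells out to `nvidia-smi` and parses its CSV output; it assumes the preloaded image puts `nvidia-smi` on the PATH, it does not apply to Intel Gaudi instances, and the sample text is illustrative rather than captured from a Denvr machine.

```python
import subprocess


def parse_gpu_list(csv_text: str) -> list[tuple[str, str]]:
    """Parse 'name, memory' CSV lines into (name, memory) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, memory = [field.strip() for field in line.split(",", 1)]
        gpus.append((name, memory))
    return gpus


def list_gpus() -> list[tuple[str, str]]:
    """Query the local NVIDIA driver for GPU names and total memory."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_list(result.stdout)


# Illustrative sample text; on a real instance call list_gpus() instead.
sample = "NVIDIA H100 80GB HBM3, 81559 MiB\nNVIDIA H100 80GB HBM3, 81559 MiB\n"
print(parse_gpu_list(sample))
```

Keeping the parser separate from the subprocess call means the logic can be exercised on machines without a GPU.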

Denvr Dataworks Pros and Cons: Honest Review

Pros

Considerations

Is Denvr Dataworks Worth It? FAQ & Reviews

The AI Ascend program provides early-stage AI companies with up to $500,000 in compute credits for Denvr's AI services, including access to advanced GPUs. Eligible teams receive an initial $1,000 credit and can qualify for additional production credits based on their needs and potential.
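To gauge what these credit amounts mean in practice, they can be converted into GPU-hours. The sketch below uses a placeholder hourly rate that is not a Denvr price; substitute the published rate for the instance type you plan to use.

```python
HYPOTHETICAL_USD_PER_GPU_HOUR = 2.50  # placeholder rate, NOT an actual Denvr price


def gpu_hours(credit_usd: float, usd_per_gpu_hour: float) -> float:
    """Return how many GPU-hours a credit balance buys at a given hourly rate."""
    if usd_per_gpu_hour <= 0:
        raise ValueError("hourly rate must be positive")
    return credit_usd / usd_per_gpu_hour


initial_hours = gpu_hours(1_000, HYPOTHETICAL_USD_PER_GPU_HOUR)
maximum_hours = gpu_hours(500_000, HYPOTHETICAL_USD_PER_GPU_HOUR)
print(initial_hours, maximum_hours)
```

At the placeholder rate, the initial $1,000 credit covers 400 GPU-hours and the $500,000 maximum covers 200,000 GPU-hours; actual figures scale inversely with the real price.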

Denvr offers both InfiniBand and RoCE networking options with speeds up to 3.2 Tbps, depending on the GPU configuration. The high-speed interconnects are designed to minimize latency and maximize throughput for distributed training workloads.

The platform is fully compatible with Kubernetes, SLURM, and other common orchestration stacks. You can integrate your existing workflows or use Denvr's pre-configured solutions.

Denvr provides global help desk support via web ticketing and email, with Slack/Teams integration for enterprise customers. Their team includes ML experts who can assist with platform integration, best practices, and troubleshooting.