CrawlerCheck

Free Googlebot & AI Crawlability Test Tool

What is CrawlerCheck? Complete Overview

CrawlerCheck helps webmasters and SEO professionals verify whether major search engine bots and AI crawlers can access their websites. It checks a site's robots.txt file, meta robots tags, and X-Robots-Tag HTTP headers, then reports which user-agents are allowed or disallowed. This helps you avoid costly SEO mistakes, keep your content visible to search engines, and control access for AI bots such as ChatGPT and Claude. Supported crawlers include Googlebot, Bingbot, and a range of AI and social media bots.

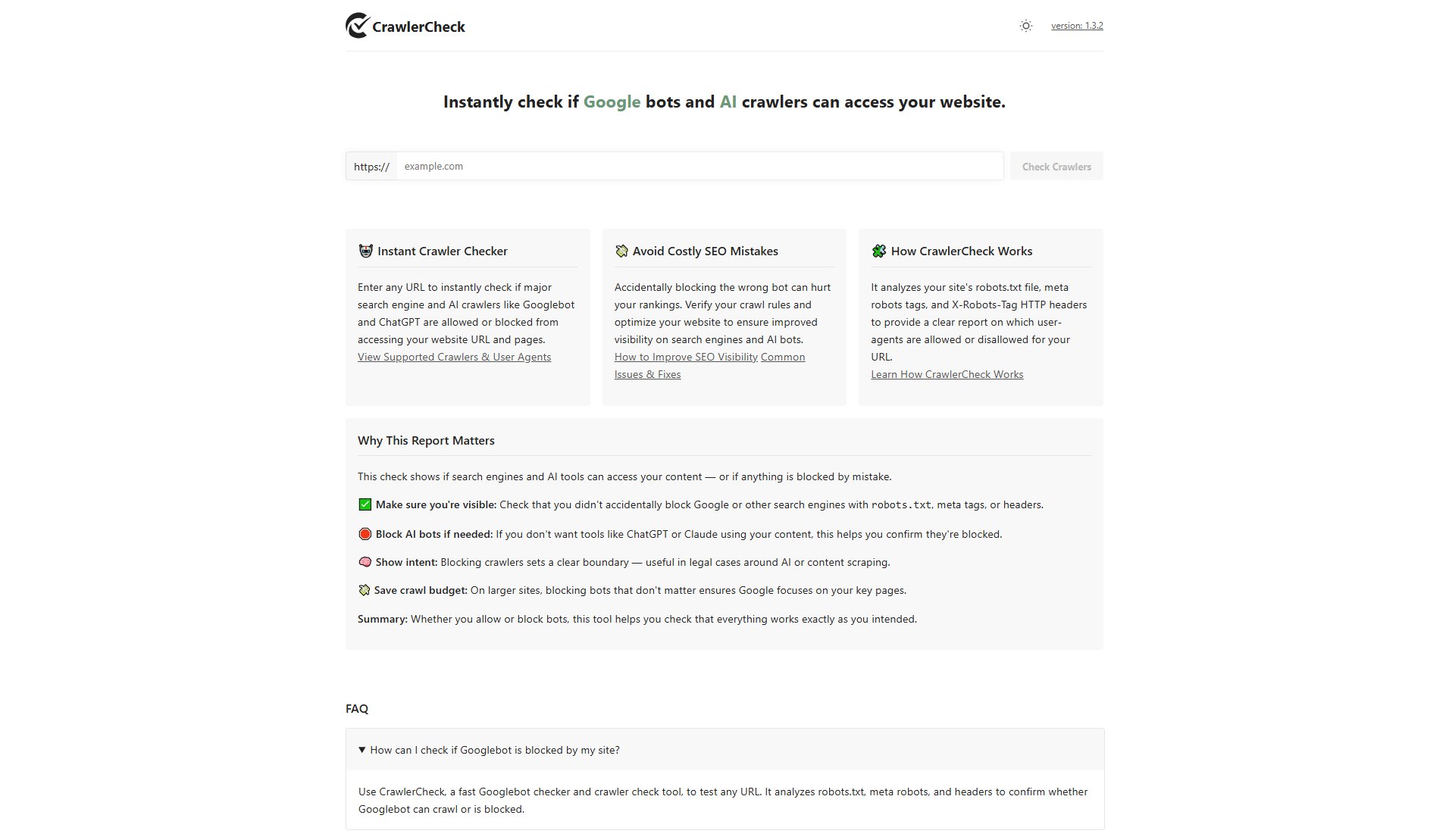

CrawlerCheck Interface & Screenshots

Official screenshot of the CrawlerCheck tool interface

What Can CrawlerCheck Do? Key Features

Instant Crawler Checker

Enter any URL to check whether major search engine and AI crawlers such as Googlebot and ChatGPT are allowed to access that page or are blocked from it. The result gives immediate feedback on the page's crawlability.

Comprehensive Crawler Support

CrawlerCheck supports a wide range of crawlers, including major search engine bots (Googlebot, Bingbot), AI and large language model crawlers (ChatGPT-User, GPTBot), SEO audit tools (AhrefsBot, SemrushBot), and social media bots (Twitterbot, FacebookBot).

Detailed Crawlability Report

The tool analyzes your site's robots.txt file, meta robots tags, and X-Robots-Tag HTTP headers to provide a clear report on which user-agents are allowed or disallowed. This helps you understand and optimize your crawl rules.
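To make the page-level signals concrete, here is a minimal Python sketch of how meta robots tags and X-Robots-Tag header values could be read for one user-agent. It is not CrawlerCheck's implementation; the function names (`meta_directives`, `header_directives`, `blocks_indexing`) are hypothetical, and the handling of agent-scoped header values is a simplifying assumption.

```python
from html.parser import HTMLParser

# Directives that contain their own colon ("max-snippet: 10"), so a colon
# in front of them does not mean "scoped to a user-agent".
VALUE_DIRECTIVES = {"max-snippet", "max-image-preview",
                    "max-video-preview", "unavailable_after"}


def split_directives(value):
    """Turn 'noindex, nofollow' into {'noindex', 'nofollow'}."""
    return {token.strip().lower() for token in value.split(",") if token.strip()}


class MetaRobotsParser(HTMLParser):
    """Collect the content of every named <meta> tag, keyed by lowercased name."""

    def __init__(self):
        super().__init__()
        self.by_name = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        name = attr.get("name", "").strip().lower()
        if name:
            tokens = split_directives(attr.get("content", ""))
            self.by_name.setdefault(name, set()).update(tokens)


def meta_directives(html, agent):
    """Directives from <meta name="robots"> plus any tag named after the agent."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.by_name.get("robots", set()) | parser.by_name.get(agent.lower(), set())


def header_directives(header_values, agent):
    """Directives from X-Robots-Tag values that apply to the given agent."""
    found = set()
    for value in header_values:
        scope, sep, rest = value.partition(":")
        scope = scope.strip().lower()
        if sep and "," not in scope and scope not in VALUE_DIRECTIVES:
            # Assumed form "googlebot: noindex": skip other agents' rules.
            if scope != agent.lower():
                continue
            value = rest
        found.update(split_directives(value))
    return found


def blocks_indexing(directives):
    """'noindex' and 'none' both ask search engines not to index the page."""
    return "noindex" in directives or "none" in directives
```

Note that these two signals govern indexing and link following rather than fetching: a crawler has to be able to fetch the page in the first place to see them, which is why robots.txt is evaluated separately.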

SEO Optimization Insights

CrawlerCheck helps you optimize your crawl budget, ensure access to critical resources, and refine crawl rules to improve your website's search engine visibility and rankings.

Common Issues & Fixes

The tool identifies common crawlability issues such as unintentionally blocked URLs, server errors, excessive URL parameters, and JavaScript-rendered content, providing actionable fixes to improve your SEO health.

Best CrawlerCheck Use Cases & Applications

SEO Optimization

SEO professionals use CrawlerCheck to ensure that search engine bots can access and index their websites effectively. By identifying and fixing crawlability issues, they improve their site's visibility and rankings on search engines.

AI Bot Management

Website owners use CrawlerCheck to verify if AI bots like ChatGPT and Claude can access their content. This helps them decide whether to allow or block these bots based on their content usage policies.
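As an illustration of this kind of check, the sketch below uses Python's standard `urllib.robotparser` to evaluate a sample robots.txt against several user-agents. The rules, the example.com URLs, and the `crawl_report` helper are hypothetical, and the AI user-agent tokens shown should be confirmed against each vendor's documentation before you rely on them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block two AI crawlers site-wide, and keep every
# other bot out of /private/ only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def crawl_report(robots_txt, url, agents):
    """Map each user-agent to True (allowed) or False (disallowed) for url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    agents = ["Googlebot", "Bingbot", "GPTBot", "ClaudeBot"]
    for agent, allowed in crawl_report(
        ROBOTS_TXT, "https://example.com/blog/post", agents
    ).items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

The standard-library parser uses simple prefix matching and does not implement wildcard patterns such as `*` or `$` inside paths, so results for rules that rely on those may differ from how a given crawler interprets them.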

Technical SEO Audits

Webmasters perform technical SEO audits using CrawlerCheck to identify and resolve issues like unintentionally blocked URLs, server errors, and duplicate content that can negatively impact their site's performance.

Crawl Budget Optimization

Large websites use CrawlerCheck to optimize their crawl budget by blocking low-value or duplicate pages, ensuring that search engines focus on crawling and indexing their most important content.
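One way to find candidates for such blocking is to look for paths that are reachable through many different query strings. The following sketch assumes you already have a list of crawled URLs (for example from server logs); the `parameter_variants` helper and its `min_variants` threshold are hypothetical and not part of CrawlerCheck.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def parameter_variants(urls, min_variants=2):
    """Return paths that appear with at least min_variants distinct query strings."""
    groups = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        if parts.query:
            # Group by everything except the query string.
            key = f"{parts.scheme}://{parts.netloc}{parts.path}"
            groups[key].add(parts.query)
    return {
        key: sorted(queries)
        for key, queries in groups.items()
        if len(queries) >= min_variants
    }
```

Paths that show up here are not automatically low-value; they are a starting list to review before adding any Disallow rule.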

How to Use CrawlerCheck: Step-by-Step Guide

Enter the URL of the webpage you want to check in the input field provided on the CrawlerCheck homepage. Ensure the URL includes the https:// protocol.

Click the 'Check Crawlers' button to initiate the crawlability test. The tool will analyze the robots.txt file, meta robots tags, and X-Robots-Tag HTTP headers for the specified URL.

Review the detailed report generated by CrawlerCheck, which shows which user-agents are allowed or disallowed from accessing your URL.

Use the insights provided to optimize your crawl rules, ensuring that important bots like Googlebot and Bingbot can access your content while blocking unwanted crawlers if necessary.

Regularly recheck your URLs to ensure ongoing crawlability and address any new issues that may arise due to changes in your website's configuration or content.
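The first step asks for a full URL with its protocol because the robots.txt location is derived from the scheme and host. A minimal sketch of that derivation, using a hypothetical `robots_txt_url` helper, might look like this:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL that governs page_url.

    Raises ValueError when the scheme or host is missing, for example
    when the input is "example.com/page" without "https://".
    """
    parts = urlsplit(page_url)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"URL must start with http:// or https://: {page_url!r}")
    # robots.txt always sits at the root of the host, whatever the page path.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))
```

For instance, `robots_txt_url("https://example.com/blog/post?x=1")` yields `https://example.com/robots.txt`, while a bare `example.com/blog` is rejected.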

CrawlerCheck Pros and Cons: Honest Review

Pros

Considerations

Is CrawlerCheck Worth It? FAQ & Reviews

Use CrawlerCheck to test any URL. It analyzes robots.txt, meta robots, and headers to confirm whether Googlebot can crawl or is blocked.

CrawlerCheck also works as an AI crawler access checker, letting you verify whether AI bots such as ChatGPT, Claude, or Perplexity can read your site. It shows whether robots.txt, meta tags, or headers allow or block AI crawlers.

Indexing issues often come from restrictions in robots.txt or meta tags. Run a crawler test with CrawlerCheck to confirm whether your pages are blocked. It's a quick way to diagnose crawlability problems before waiting on Search Console.

Robots.txt is a plain-text file, based on the Robots Exclusion Protocol, that tells bots which parts of a site they may and may not crawl. Try it out with CrawlerCheck: paste a URL, and you'll see how crawlers interpret your site's rules in real time.