Cerebras

Industry-leading AI infrastructure with unmatched speed and scale

What is Cerebras? Complete Overview

Cerebras provides AI infrastructure built on the Cerebras Wafer-Scale Engine, which the company bills as the world's fastest AI processor. It is designed for high-speed inference and is positioned as faster than traditional GPU setups. Cerebras serves open models in seconds via a cloud API, scales custom models on dedicated capacity, and offers on-prem deployment for full control. It targets AI-native companies, startups, and Global 1000 enterprises that need fast inference, cost efficiency, and enterprise-grade reliability.

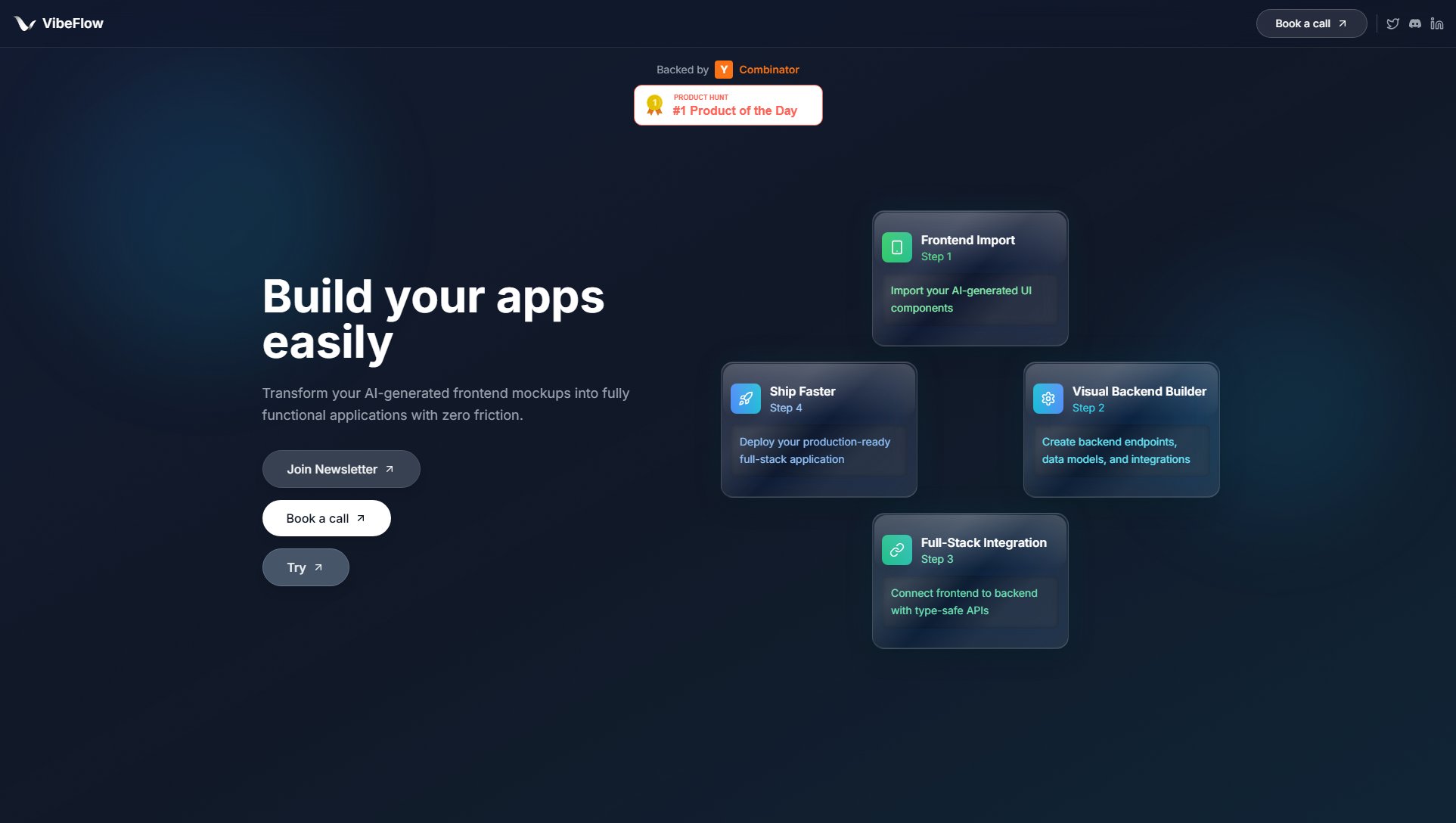

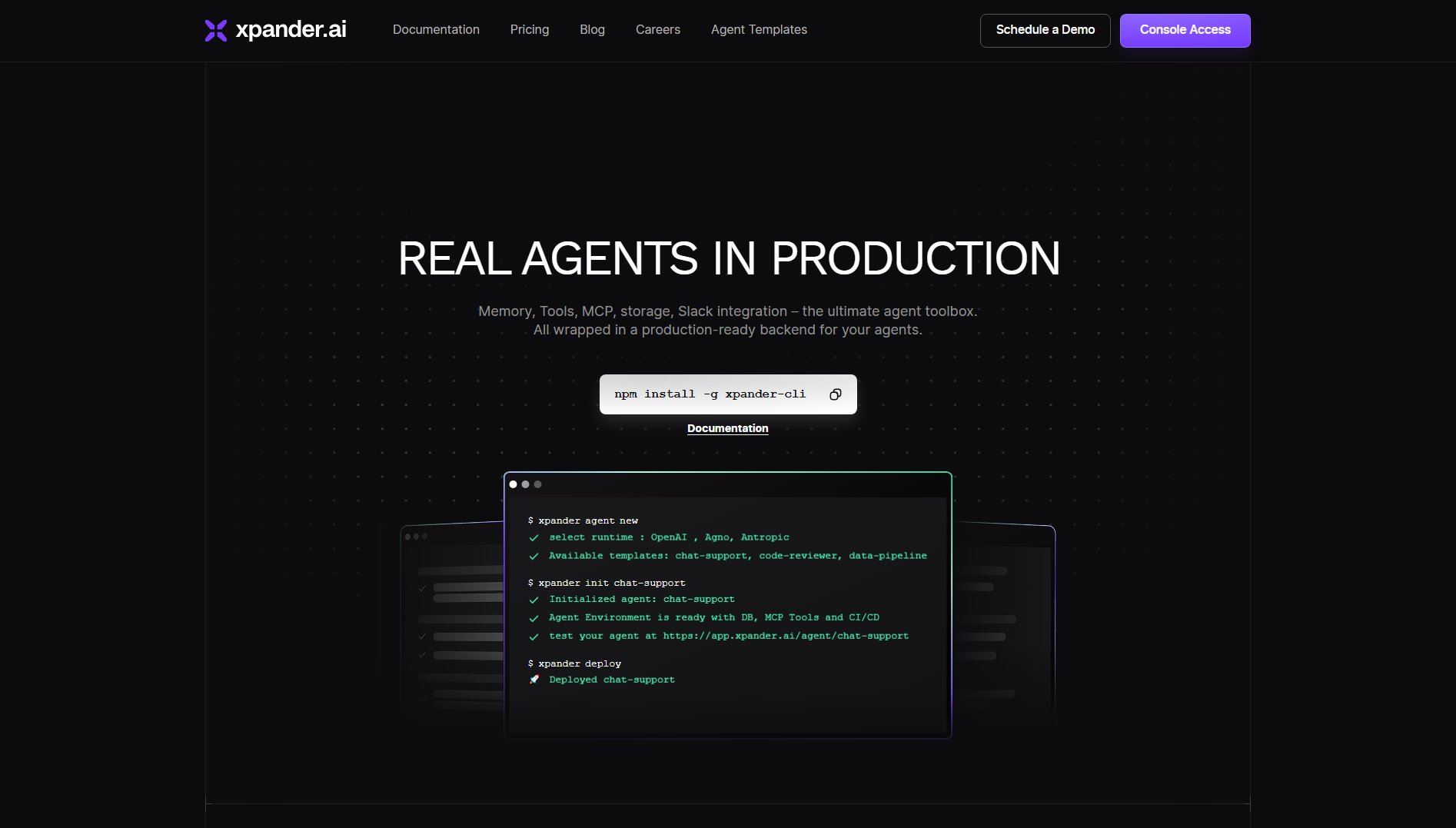

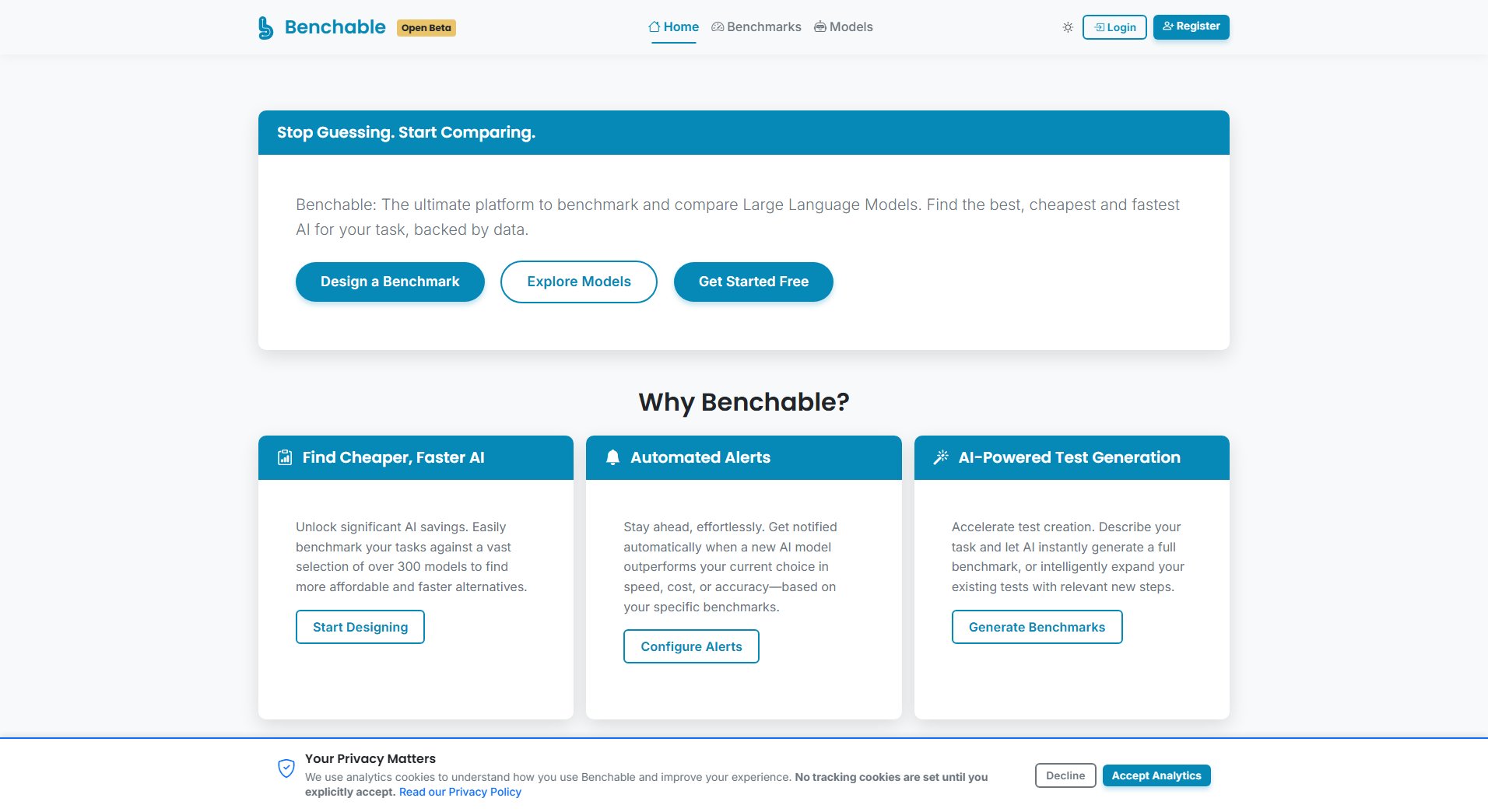

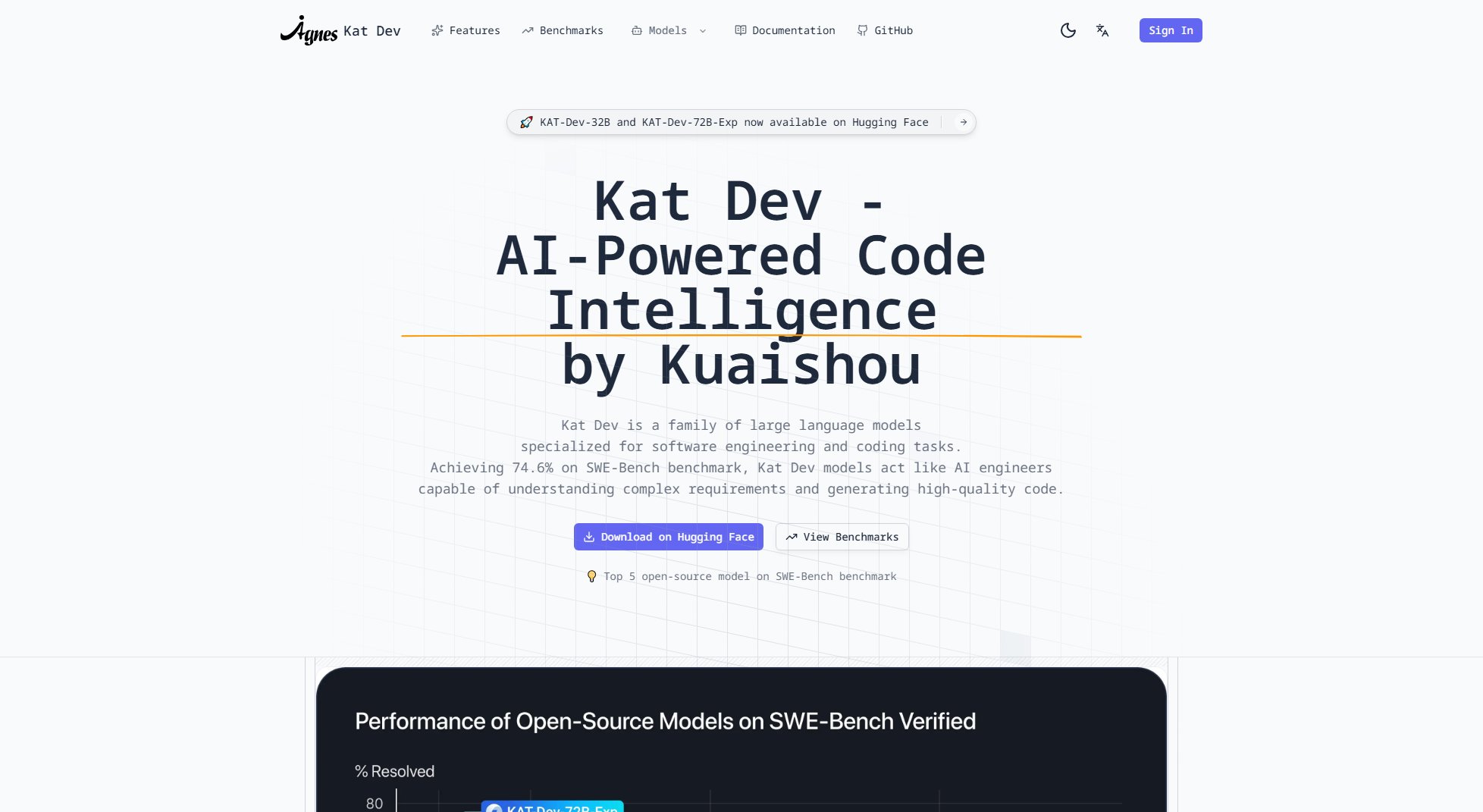

Cerebras Interface & Screenshots

Official screenshot of the Cerebras interface

What Can Cerebras Do? Key Features

Blazing AI Inference

Cerebras delivers world-record inference speeds, enabling complex reasoning in under a second. It supports frontier models like GPT-OSS 120B, Qwen3 Instruct, and Llama, making it perfect for deep search, copilots, and real-time analysis.

Unmatched Speed & Intelligence

Deploy full-parameter models faster than any other platform, with no compromises on model size or precision. Cerebras achieves up to 30x faster inference compared to GPU clouds, slashing infrastructure costs.

Enterprise-Grade, Developer-Friendly

Cerebras offers drop-in OpenAI API compatibility, SOC 2 and HIPAA compliance, and battle-tested scalability. It is trusted by leading cloud service providers and enterprises for mission-critical AI workloads.

Train, Fine-tune, Serve on One Platform

Start with lightning-fast inference, then fine-tune or pre-train models with your own data. Cerebras provides a unified platform for all stages of AI model development, from prototyping to production.

Agents that Never Stall

Execute multi-step workflows without delays or timeouts, so AI-driven processes run without interruption. Case studies such as NinjaTech's show how Cerebras supports uninterrupted, high-speed AI operations.

Best Cerebras Use Cases & Applications

Real-Time Code Generation

Developers can leverage Cerebras for instant code completions and debugging, maintaining workflow continuity and productivity at speeds up to 2,000 tokens per second.
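To put the throughput figure in context, here is a minimal arithmetic sketch of how tokens per second translates into generation time. The 500-token completion length is a hypothetical value chosen for illustration, and the estimate ignores network latency and time to first token.

```python
def generation_seconds(output_tokens, tokens_per_second):
    """Estimate the time to stream a completion at a steady throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


# Hypothetical completion length; 2,000 tokens/s is the figure quoted above.
completion_tokens = 500
estimate = generation_seconds(completion_tokens, 2000)
print(f"{estimate:.2f} s")
```

At the quoted rate, a completion of that size would stream in roughly a quarter of a second, which is why throughput matters for keeping an editor workflow uninterrupted.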

AI-Powered Research

Organizations like AlphaSense use Cerebras for deep search and analysis, delivering accurate insights in under a second, significantly enhancing decision-making processes.

Healthcare and Drug Discovery

GSK and Mayo Clinic use Cerebras to accelerate drug discovery and genomic data analysis, reducing research timelines from years to months.

How to Use Cerebras: Step-by-Step Guide

Get an API key: Sign up on the Cerebras website to obtain your API key for accessing their cloud services.

Choose a model: Select from popular models like GPT-OSS 120B, Qwen3 Instruct, or Llama, depending on your use case.

Integrate with your workflow: Use the drop-in OpenAI API compatibility to seamlessly integrate Cerebras into your existing applications.

Scale as needed: Upgrade to dedicated or on-prem solutions for higher performance and control, tailored to your growing needs.
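The steps above can be sketched in Python using only the standard library. This is a minimal sketch, not an official client: it assumes the endpoint follows the OpenAI chat completions format, that the base URL is `https://api.cerebras.ai/v1`, and that you pass a model identifier taken from the Cerebras model list. The function names are hypothetical helpers introduced for illustration.

```python
import json
import urllib.request

# Assumption: base URL of the OpenAI-compatible endpoint; confirm it in the Cerebras docs.
BASE_URL = "https://api.cerebras.ai/v1"


def build_chat_request(api_key, model, prompt, base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style response dict."""
    return response_body["choices"][0]["message"]["content"]


def chat(api_key, model, prompt):
    """Send the request and return the reply text (needs network access and a valid key)."""
    request = build_chat_request(api_key, model, prompt)
    with urllib.request.urlopen(request, timeout=30) as response:
        return extract_reply(json.load(response))
```

Calling `chat(your_api_key, your_model_id, "Hello")` would send a single user message and return the reply text. If you already use an OpenAI-compatible SDK, pointing its base URL and API key at Cerebras should achieve the same thing without this hand-rolled request.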

Cerebras Pros and Cons: Honest Review

Pros

Considerations

Is Cerebras Worth It? FAQ & Reviews

What models does Cerebras support?

Cerebras supports models like GPT-OSS 120B, Qwen3 Instruct, Llama, and more, with speeds up to 3,000 tokens per second.

How does Cerebras compare to GPU clouds?

Cerebras offers up to 30x faster inference speeds and lower costs compared to traditional GPU clouds, making it ideal for high-performance AI workloads.

How much does Cerebras cost?

Cerebras offers a pay-as-you-go Exploration plan for prototyping, with no minimum commitment. Paid plans start at $50/month.

Does Cerebras offer on-prem deployment?

Yes, Cerebras provides on-prem deployment options for full control over models, data, and infrastructure in your data center or private cloud.

What support options are available?

Community support is available via Discord for basic plans. Growth plans include prioritized Slack support, and Enterprise plans include a dedicated team.