CacheGPT

Smart caching for AI to reduce LLM API costs by 80%

What is CacheGPT? Complete Overview

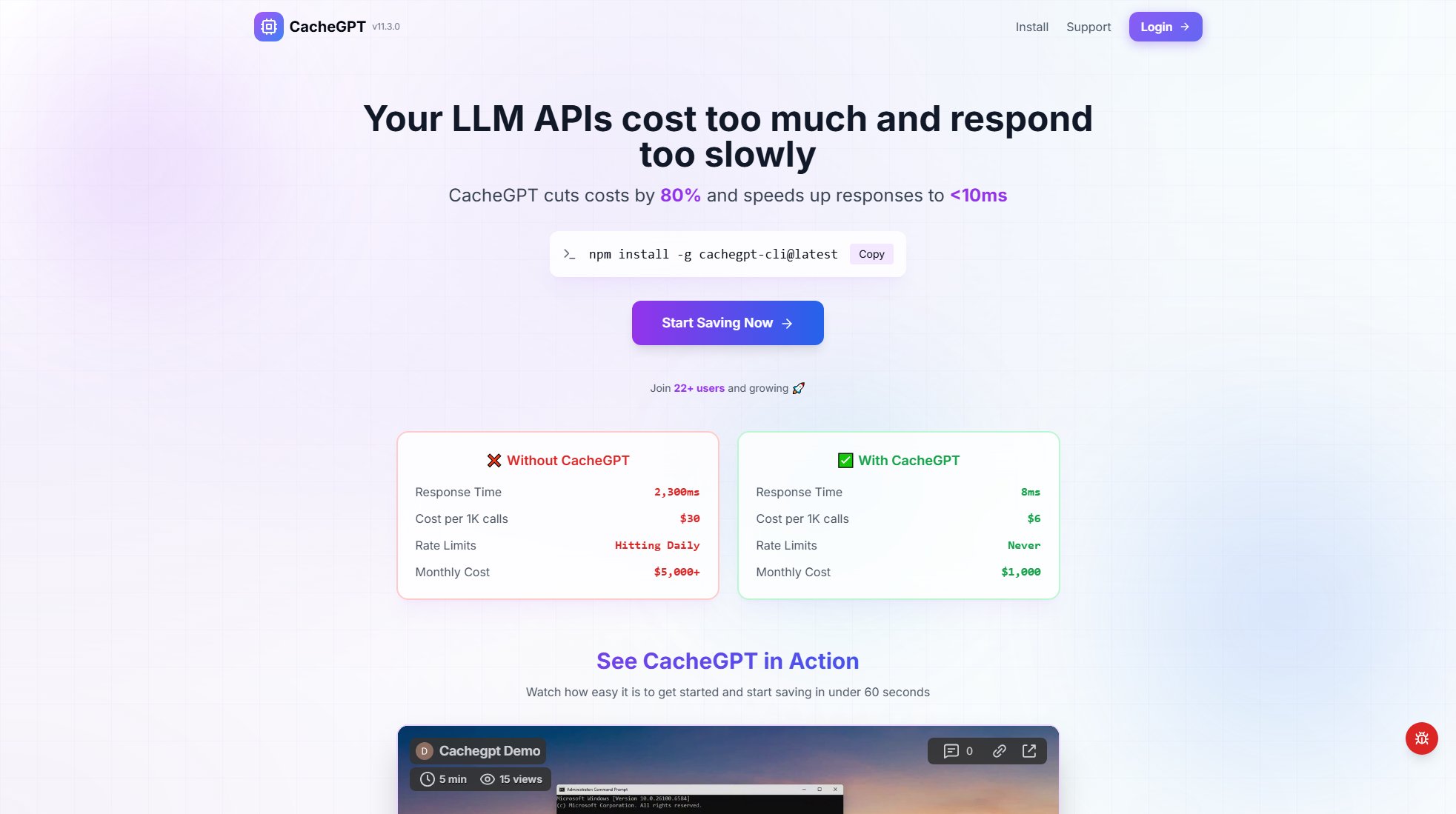

CacheGPT is a caching layer for LLM (Large Language Model) API calls that reduces both cost and latency. By returning cached responses for repeated or semantically similar queries, it can cut API costs by up to 80% and serve cache hits in under 10ms. It is aimed at developers, businesses, and enterprises that rely heavily on LLM APIs from providers such as OpenAI, Anthropic (Claude), Google (Gemini), Mistral, and Cohere. With its security and compliance features, it is positioned for both development and production environments.

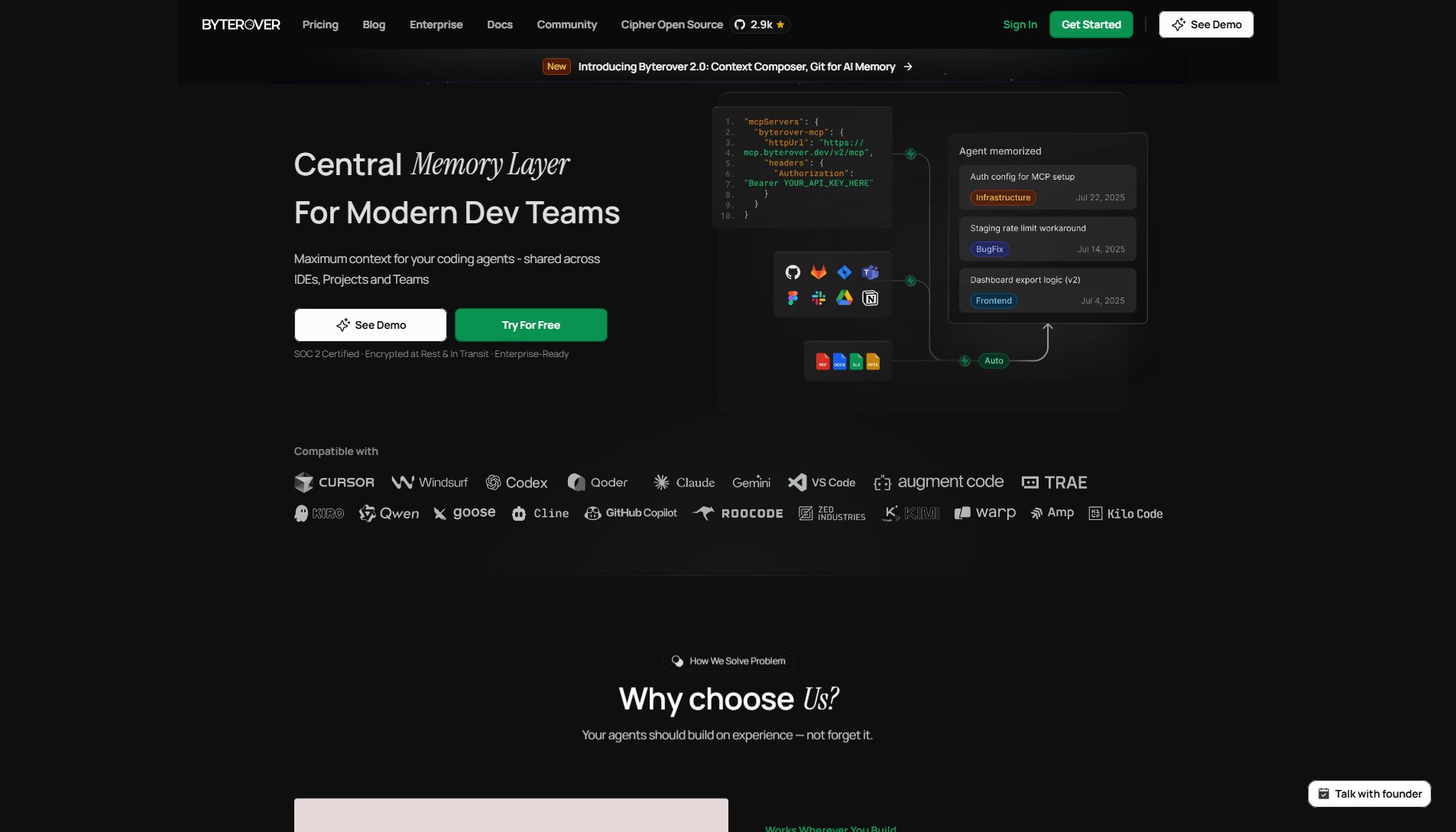

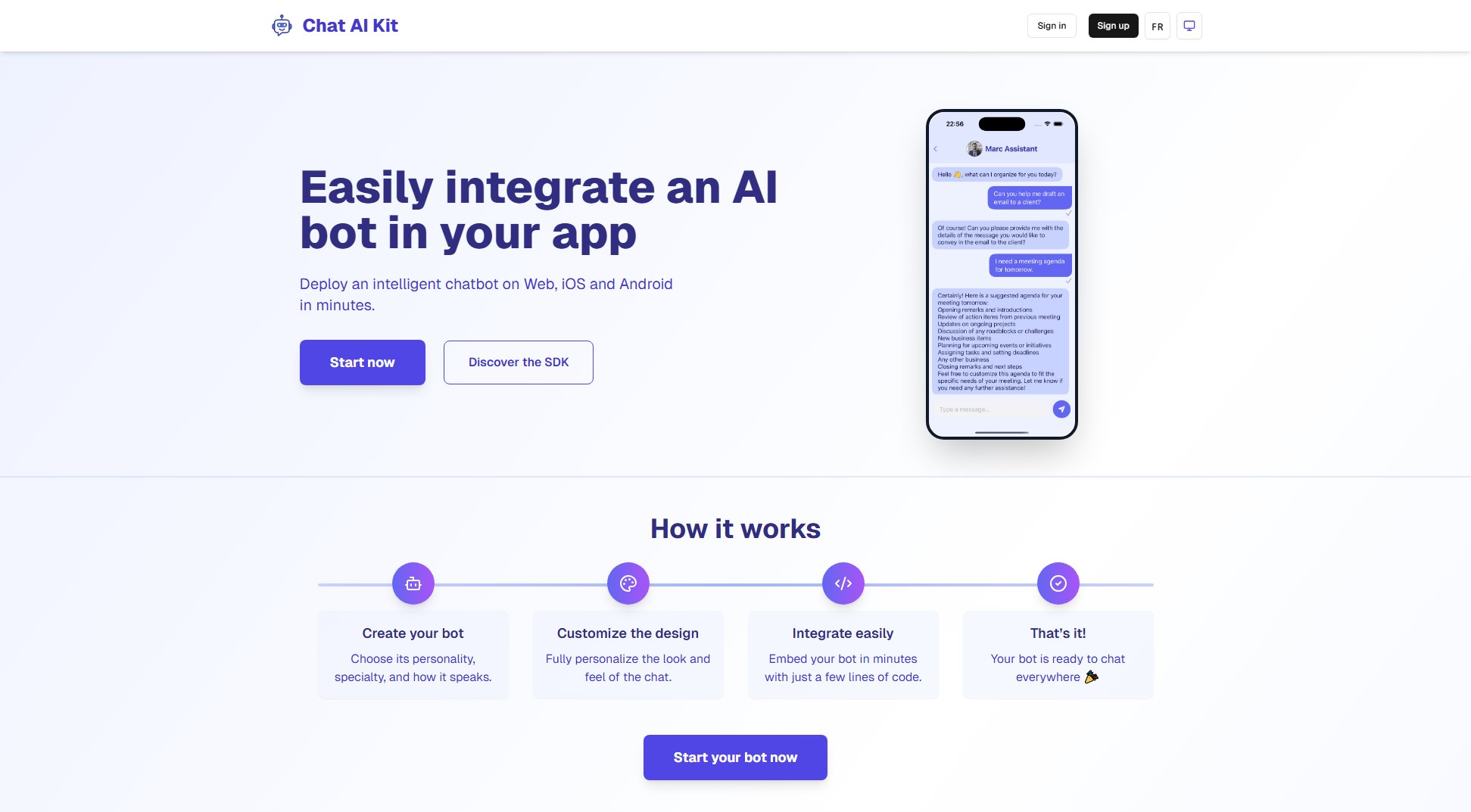


What Can CacheGPT Do? Key Features

Cost Reduction

CacheGPT reduces LLM API costs by up to 80%. By caching frequently used queries and their responses, it cuts the number of calls that reach the provider. For example, if 80% of calls are served from the cache, the cost per 1,000 calls drops from $30 to $6.
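The arithmetic behind that example can be sketched as follows. This is an illustration only: the per-call price of $0.03 is derived from the $30 per 1,000 calls figure above, the 80% hit rate is an assumption, and the function name `cost_with_cache` is hypothetical. Any cost of running the cache itself is ignored.

```python
def cost_with_cache(calls: int, cost_per_call: float, hit_rate: float) -> float:
    """Provider spend when a fraction `hit_rate` of calls is served from cache."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    # Only cache misses are forwarded to (and billed by) the provider.
    misses = calls * (1.0 - hit_rate)
    return misses * cost_per_call


baseline = cost_with_cache(1000, 0.03, hit_rate=0.0)  # no caching
cached = cost_with_cache(1000, 0.03, hit_rate=0.8)    # assumed 80% hit rate
print(f"baseline: ${baseline:.2f}, with cache: ${cached:.2f}")
# prints: baseline: $30.00, with cache: $6.00
```

The saving scales directly with the hit rate, so workloads with few repeated or similar queries will see less than the headline figure.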

Ultra-Fast Response Times

For cached queries, CacheGPT returns responses in under 10ms, compared with a typical 2,300ms for a direct API call. Queries that miss the cache still take the full provider round trip, so the overall improvement depends on how often the cache is hit.
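As a rough illustration of how the two latency figures combine, the sketch below computes a mean response time for a given cache hit rate. The 10ms and 2,300ms values come from the text above. The hit rate is an assumption, `mean_latency_ms` is a hypothetical helper, and the lookup overhead on a miss is ignored.

```python
def mean_latency_ms(hit_rate: float,
                    cached_ms: float = 10.0,
                    direct_ms: float = 2300.0) -> float:
    """Average latency when `hit_rate` of requests are answered from cache."""
    # Hits cost `cached_ms`; misses pay the full provider round trip.
    return hit_rate * cached_ms + (1.0 - hit_rate) * direct_ms


for rate in (0.0, 0.5, 0.8):
    print(f"hit rate {rate:.0%}: about {mean_latency_ms(rate):.0f} ms on average")
```

Even at a high hit rate the mean is dominated by the misses, which is why the sub-10ms figure applies to cached queries specifically.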

Semantic Caching

CacheGPT uses semantic similarity matching with vector embeddings to determine whether a similar query has been made before. If a match scores above the confidence threshold, the cached response is returned immediately, saving both time and money.
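A minimal sketch of threshold-based semantic matching is shown below. It is not CacheGPT's implementation: the `SemanticCache` class, the word-count "embedding", and the 0.8 threshold are all assumptions chosen to keep the example self-contained. A real system would use a learned embedding model and a vector index.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (word counts); stands in for a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Hypothetical cache that returns a stored response for similar queries."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold  # assumed confidence threshold
        self.entries = []           # list of (embedding, response) pairs

    def put(self, query: str, response: str) -> None:
        self.entries.append((embed(query), response))

    def get(self, query: str):
        """Return the best match at or above the threshold, else None."""
        q = embed(query)
        best_score, best_response = 0.0, None
        for vec, response in self.entries:
            score = cosine(q, vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None


cache = SemanticCache()
cache.put("What is the capital of France?", "Paris")
print(cache.get("what is the capital of france"))  # same words: cache hit
print(cache.get("How do I bake bread?"))           # no overlap: cache miss
```

The threshold is the main tuning knob in a design like this: set it too low and unrelated queries receive the wrong cached answer, set it too high and near-duplicates are forwarded to the provider anyway.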

Multi-Provider Support

CacheGPT supports all major LLM providers, including OpenAI (GPT-4, GPT-3.5), Anthropic (Claude), Google (Gemini), Mistral, Cohere, and any OpenAI-compatible API. Users can switch providers seamlessly from their settings.

Easy Setup

Setup takes two commands: run 'npm install -g cachegpt-cli@latest' to install the CLI, then 'cachegpt chat' to authenticate and start saving. The entire process takes under 30 seconds.

Enterprise-Grade Security

CacheGPT ensures data security with end-to-end encryption and encrypted API keys at rest. It is SOC2 compliant and does not store actual prompts or responses, only semantic hashes for cache matching.

High Availability

CacheGPT offers a 99.9% uptime SLA, making it reliable for production use. Many companies already rely on it to reduce their LLM costs by 80% in real-world applications.

Best CacheGPT Use Cases & Applications

Development Environment

Developers can use CacheGPT to reduce the cost of testing and iterating with LLM APIs. By caching common queries, they can save up to 80% on API costs during the development phase.

Production Applications

Businesses running production applications that rely on LLM APIs can use CacheGPT to cut costs and improve response times. This is especially valuable for high-traffic applications where API costs can quickly escalate.

Enterprise Solutions

Enterprises with large-scale LLM API usage can leverage CacheGPT to manage costs effectively. The tool's SOC2 compliance and high availability make it suitable for enterprise environments.

How to Use CacheGPT: Step-by-Step Guide

1. Install the CacheGPT CLI by running 'npm install -g cachegpt-cli@latest' in your terminal. This installs the latest version of CacheGPT globally on your system.

2. Authenticate by running 'cachegpt chat' in your terminal. This opens a browser window where you can sign in using OAuth (Google or GitHub).

3. Start using CacheGPT. Once authenticated, you can begin making queries. CacheGPT handles the caching automatically, returning cached responses when available.

4. Monitor your savings. CacheGPT provides insights into your cost reductions and performance improvements, helping you understand the value it brings to your workflow.

CacheGPT Pros and Cons: Honest Review

Pros

Considerations

Is CacheGPT Worth It? FAQ & Reviews

CacheGPT is designed to keep your data secure. It uses end-to-end encryption, and your API keys are encrypted at rest. It is SOC2 compliant and never stores your actual prompts or responses, only semantic hashes for cache matching. Your data never leaves your control.

CacheGPT uses semantic similarity matching with vector embeddings. When you make a request, it checks if a similar query was made before. If there's a match above the confidence threshold, it returns the cached response instantly. Otherwise, it forwards the request to your LLM provider and caches the result.

You do not need your own API keys. CacheGPT uses server-managed API keys by default, so you can sign up with OAuth (Google or GitHub) and start chatting immediately. Enterprise users can optionally provide their own keys for full control.

CacheGPT supports all major providers: OpenAI (GPT-4, GPT-3.5), Anthropic (Claude), Google (Gemini), Mistral, Cohere, and any OpenAI-compatible API. You can switch providers anytime from your settings.

CacheGPT is free to use with generous limits, and no credit card is required. Premium tiers with higher limits and additional features may be introduced in the future, but there will always be a free tier.

Simply run 'npm install -g cachegpt-cli@latest' and then 'cachegpt chat'. You'll authenticate via browser OAuth and can start chatting immediately. The whole process takes under 30 seconds.

When there's no matching cached response, CacheGPT forwards your request to the LLM provider you selected, returns the response to you, and caches it for future use. You pay the normal API cost for that request, but all similar future requests will be cached.
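The hit and miss paths described above follow a read-through caching pattern, sketched below under stated assumptions. `ReadThroughCache` and `fake_provider` are hypothetical names, the provider is a stub rather than a real LLM API, and exact matching on normalized text stands in for the semantic matching CacheGPT is described as using.

```python
from typing import Callable


class ReadThroughCache:
    """Hypothetical read-through cache in front of an LLM provider call."""

    def __init__(self, provider: Callable[[str], str]):
        self.provider = provider
        self.store = {}   # normalized prompt -> cached response
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Simplification: lowercase and collapse whitespace instead of
        # comparing embeddings.
        return " ".join(prompt.lower().split())

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self.store:
            self.hits += 1            # served from cache, no provider cost
            return self.store[key]
        self.misses += 1              # billed at the normal API rate
        response = self.provider(prompt)
        self.store[key] = response    # cached for similar future requests
        return response


def fake_provider(prompt: str) -> str:
    """Stub standing in for a real provider request."""
    return f"answer to: {prompt}"


cache = ReadThroughCache(fake_provider)
first = cache.ask("Explain semantic caching")      # miss: forwarded and cached
second = cache.ask("explain   semantic caching")   # hit: same normalized key
print(cache.hits, cache.misses)  # prints: 1 1
```

Counting hits and misses this way is also the simplest basis for the kind of savings reporting mentioned in the usage guide.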

CacheGPT is production-ready, with a 99.9% uptime SLA, enterprise-grade security, and sub-10ms cache response times. Many companies are already using it to reduce their LLM costs by up to 80% in production.