The LLM Workshop

Compare 50+ AI models to find the perfect fit for your data

What is The LLM Workshop? Complete Overview

The LLM Workshop is an AI model comparison platform designed to help users find the most suitable large language model (LLM) for their specific needs. Users upload their data and test it across 50+ AI models (including GPT, Claude, Gemini, and more), and the platform shows the model responses side by side. This supports data-driven decisions when selecting AI models for a given application. The platform is currently in beta and focuses on text generation, with plans to add audio, video, and image support in the future. Under its BYOK (Bring Your Own Keys) pricing model, users pay only for actual API usage through their own provider accounts, with no additional platform fees.

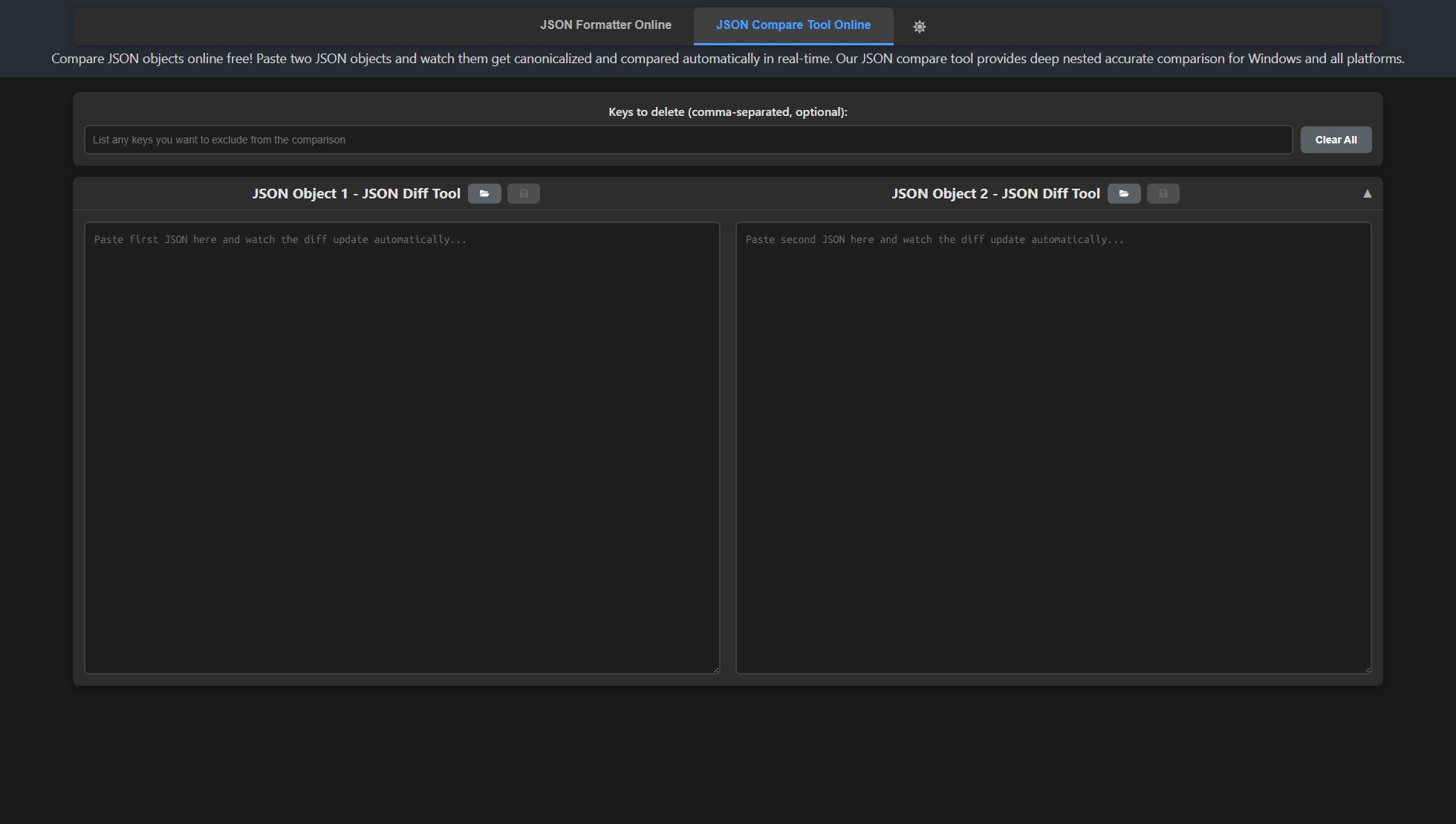

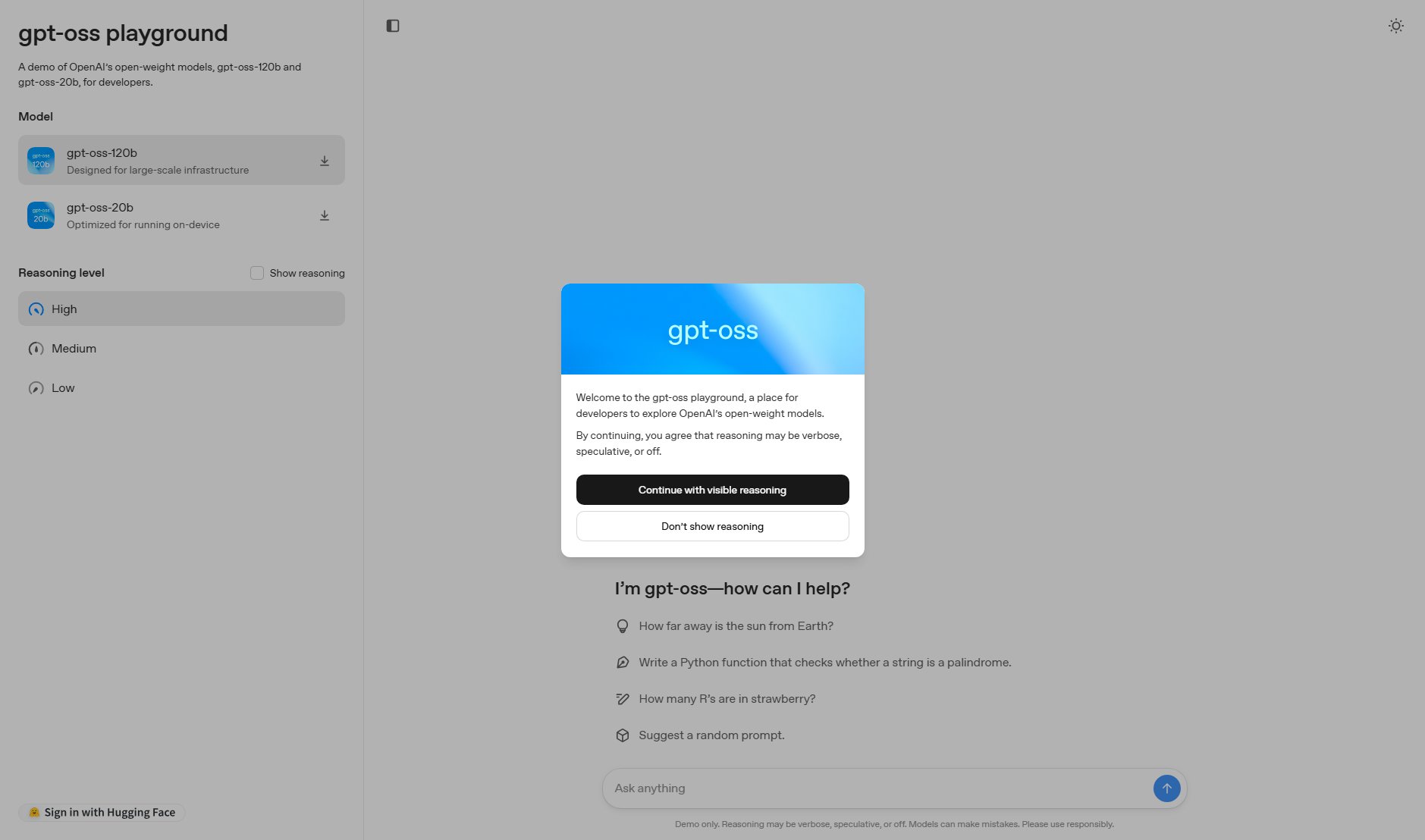

The LLM Workshop Interface & Screenshots

Official screenshot of The LLM Workshop interface

What Can The LLM Workshop Do? Key Features

Multi-Model Comparison

Test your data simultaneously across 50+ leading AI models including GPT, Claude, Gemini, and many others. Get side-by-side comparisons of responses to objectively evaluate which model performs best for your specific use case.

BYOK Pricing Model

The Bring Your Own Keys approach means you use your existing API keys from providers like OpenAI, Anthropic, and Google. You only pay for actual API usage directly to these providers, with no additional platform fees or markups.
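Because BYOK charges come only from the providers, the cost of a comparison run is roughly the sum of each model's per-token charges. The sketch below illustrates that arithmetic. The model names and prices are hypothetical placeholders, not real provider rates, and the helper names are invented for this example; check each provider's current pricing page before relying on any figure.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in USD (placeholder values, not real rates)."""
    input_per_million: float
    output_per_million: float


def estimate_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Estimate the provider charge in USD for a single request."""
    return (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    ) / 1_000_000


def estimate_comparison_cost(
    prices: Dict[str, Pricing], input_tokens: int, output_tokens: int
) -> Tuple[Dict[str, float], float]:
    """Estimate the cost of sending the same request to every model.

    Assumes, for simplicity, that every model sees the same token counts;
    in practice tokenizers and response lengths differ between models.
    """
    per_model = {
        name: estimate_cost(pricing, input_tokens, output_tokens)
        for name, pricing in prices.items()
    }
    return per_model, sum(per_model.values())


# Hypothetical models and prices, for illustration only.
PRICES = {
    "model-a": Pricing(input_per_million=2.00, output_per_million=8.00),
    "model-b": Pricing(input_per_million=0.50, output_per_million=1.50),
}

if __name__ == "__main__":
    per_model, total = estimate_comparison_cost(PRICES, input_tokens=1000, output_tokens=500)
    for name, cost in per_model.items():
        print(f"{name}: ${cost:.5f}")
    print(f"total: ${total:.5f}")
```

With no platform markup, the total here is simply what the providers would bill for the same requests made directly.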

Text Generation Support

The platform currently supports text generation testing across all available models. Upload your text prompts and compare how different models process and respond to the same input.

Future Media Support

The platform is currently text-focused, with plans to add support for audio, video, and image processing in upcoming releases, which would extend it to multimedia AI testing.

Beta Program Access

As a beta user, you get early access to new features and the opportunity to shape platform development through direct feedback to the development team.

Best Use Cases & Applications for The LLM Workshop

Content Creation Optimization

Marketing teams can test different AI models with their content briefs to determine which produces the most on-brand, engaging copy for blogs, social media, or advertising materials.

Technical Documentation Evaluation

Engineering teams can compare how different models explain complex technical concepts to identify which AI best understands their domain-specific terminology and requirements.

Customer Support Response Testing

Support teams can evaluate which AI generates the most accurate, helpful responses to common customer inquiries, potentially integrating the best-performing model into their support workflows.

Research Assistance Comparison

Academics and analysts can test how different models summarize research papers or answer domain-specific questions to identify the most reliable AI research assistant.

How to Use The LLM Workshop: Step-by-Step Guide

1. Sign up for an account on The LLM Workshop platform. The service is currently in beta and open for new users to register and begin testing.

2. Connect your API keys from providers like OpenAI, Anthropic, or Google. The platform uses a BYOK (Bring Your Own Keys) model, so you'll need valid API credentials for the models you want to test.

3. Upload the test data or input prompts that you want to evaluate across different AI models. The platform currently supports text inputs, with plans to expand to other media types.

4. Select which models you want to compare from the 50+ available options. You can choose specific models or run comparisons across all connected providers.

5. Review the side-by-side results showing how each model processed your input. Analyze response quality, creativity, accuracy, and other factors important to your use case.

6. Determine which model or models perform best for your specific needs based on the comparative results. You can refine your tests with different prompts or parameters as needed.
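The core idea behind these steps, sending one prompt to several models and laying the responses out together, can be sketched in a few lines. This is a minimal illustration and not the platform's implementation: the function names are invented for this example, and the stand-in "models" are plain local functions so the sketch runs without any API keys. In real use, each callable would wrap a provider SDK call made with your own credentials.

```python
from typing import Callable, Dict

# A "model" here is any callable that maps a prompt to a response string.
ModelFn = Callable[[str], str]


def compare_models(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model and collect responses by name."""
    results: Dict[str, str] = {}
    for name, call in models.items():
        try:
            results[name] = call(prompt)
        except Exception as exc:
            # Record the failure and keep going, so one bad key or
            # provider outage does not abort the whole comparison.
            results[name] = f"[error: {exc}]"
    return results


def format_side_by_side(results: Dict[str, str]) -> str:
    """Render results as aligned 'model | response' lines."""
    width = max((len(name) for name in results), default=0)
    return "\n".join(
        f"{name.ljust(width)} | {response}" for name, response in results.items()
    )


# Hypothetical stand-ins for real provider calls.
def upper_model(prompt: str) -> str:
    return prompt.upper()


def reversed_model(prompt: str) -> str:
    return prompt[::-1]


def broken_model(prompt: str) -> str:
    raise RuntimeError("invalid API key")


STUB_MODELS: Dict[str, ModelFn] = {
    "upper": upper_model,
    "reversed": reversed_model,
    "broken": broken_model,
}

if __name__ == "__main__":
    print(format_side_by_side(compare_models("hello", STUB_MODELS)))
```

Catching errors per model is a deliberate choice in this sketch: when many providers are involved, a failure in one should show up as a row in the results and leave the other rows intact.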

The LLM Workshop Pros and Cons: Honest Review

Pros

Considerations

Is The LLM Workshop Worth It? FAQ & Reviews

The LLM Workshop is an AI model comparison platform that allows you to test your data across multiple large language models simultaneously, comparing responses from 50+ models to find the best fit for your needs.

The platform uses a BYOK (Bring Your Own Keys) model where you provide your own API keys and only pay for actual API usage directly to providers like OpenAI or Anthropic, with no additional platform fees.

The LLM Workshop is currently in beta, with text generation fully supported across 50+ models. Audio, video, and image support is planned for future releases, and users may encounter occasional bugs during the beta period.

The platform supports 50+ models including GPT, Claude, Gemini, and many others. You can test any model for which you have valid API credentials.

Audio, video, and image support is planned for future releases, though specific timelines aren't yet available because the platform is focused on text generation during its beta phase.