GPUX

Deploy AI fast with serverless GPU inference

What is GPUX? Complete Overview

GPUX is a serverless GPU inference platform for deploying AI models quickly. It runs models such as StableDiffusionXL, ESRGAN, and Whisper with cold starts as short as 1 second. The platform is aimed at developers, data scientists, and enterprises that want to deploy AI models without managing infrastructure. GPUX also offers a peer-to-peer (P2P) marketplace where users can sell inference requests on their private models to other organizations. Its emphasis throughout is on performance and ease of use for machine learning workloads.

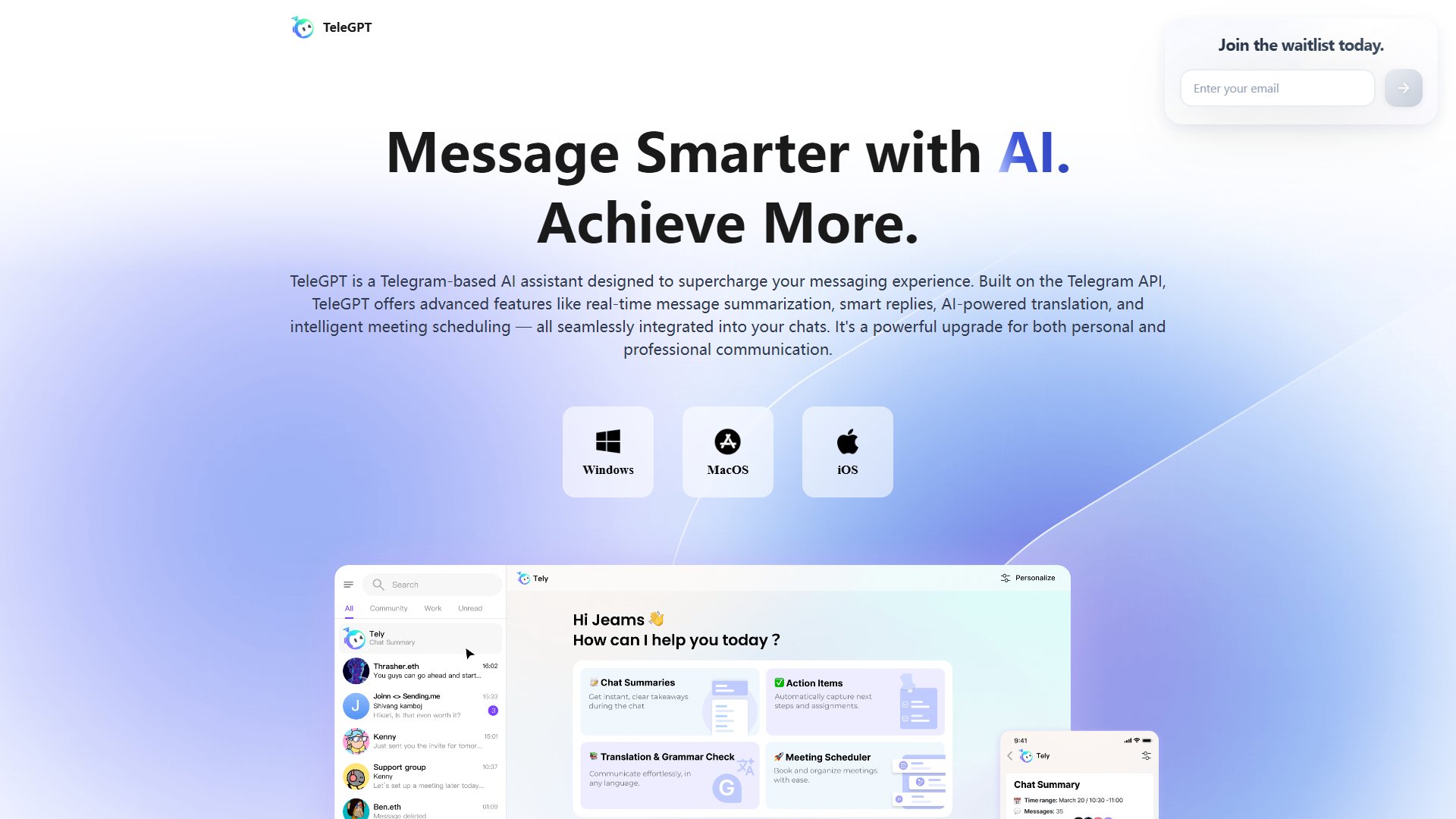

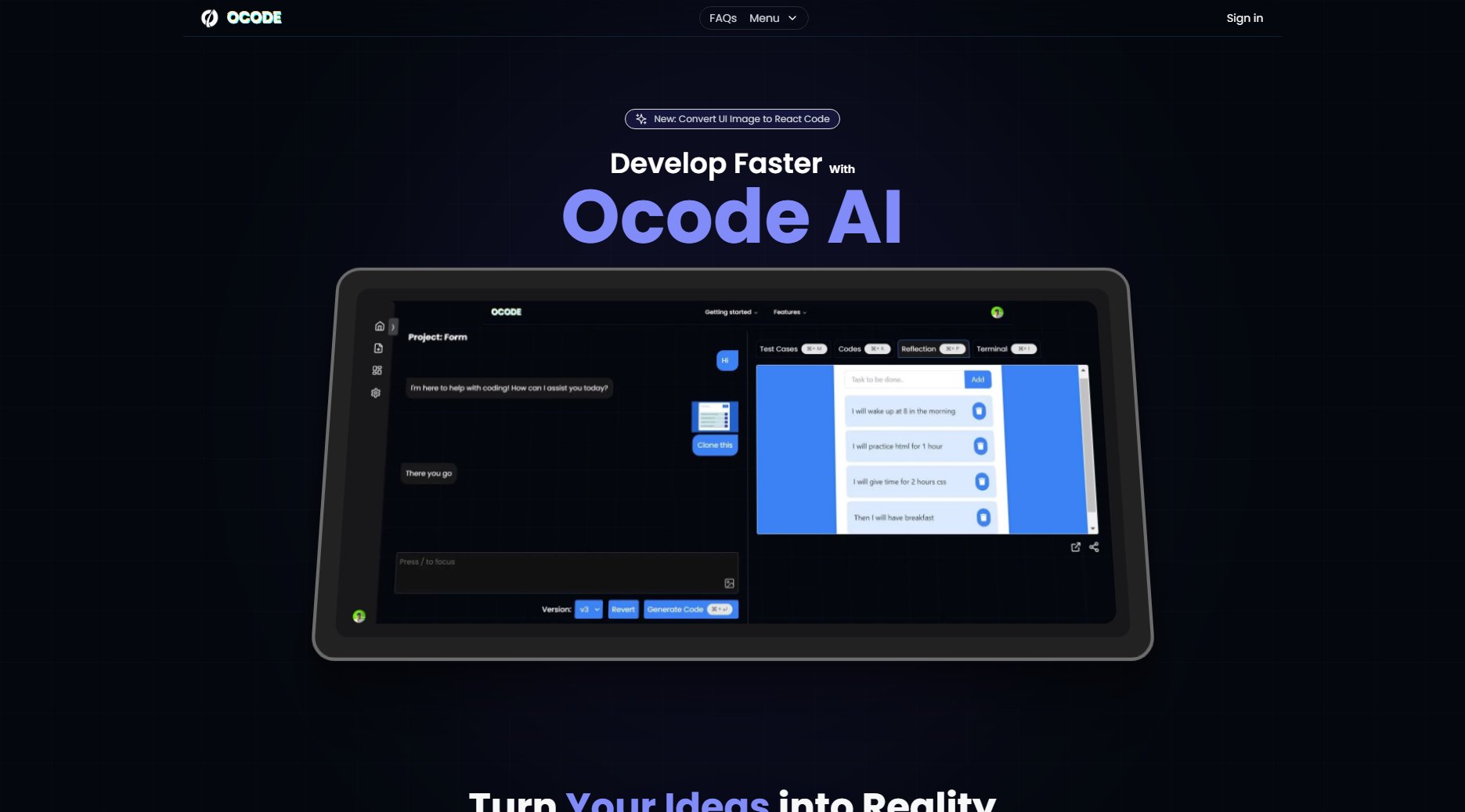

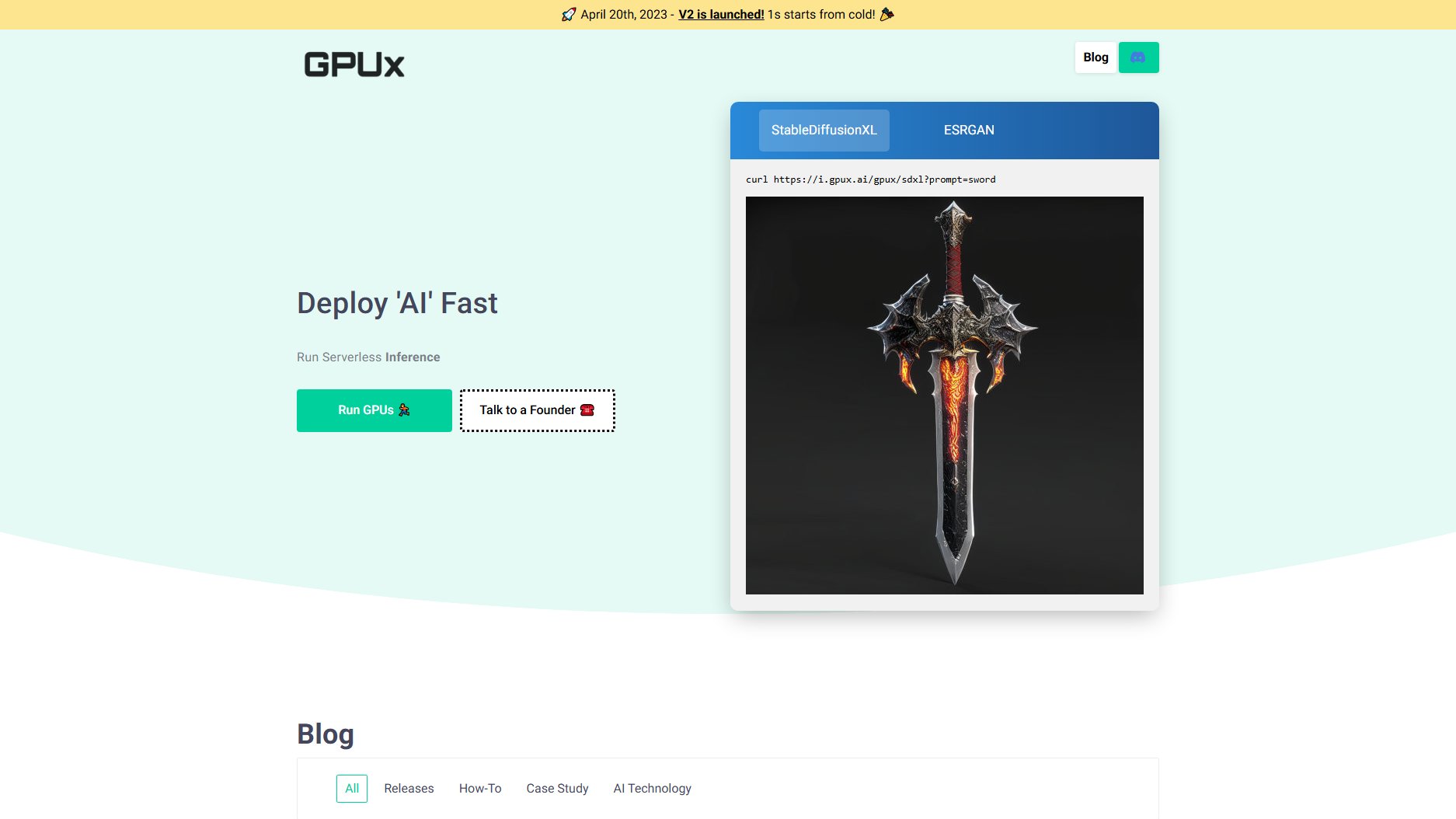

GPUX Interface & Screenshots

GPUX Official screenshot of the tool interface

What Can GPUX Do? Key Features

1s Cold Start

GPUX advertises a cold start time of about 1 second: a model that has been idle can begin serving requests roughly a second after it is called. This matters most for applications that scale up quickly or see bursty traffic, because new capacity comes online with little added latency during peak demand.
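Cold-start time can vary with the model and with how long it has been idle, so it is worth measuring for your own workload. A minimal sketch, assuming a hypothetical `send_request` function that performs one inference call (for example, a Python wrapper around the API request shown in the how-to guide): time the first request to an idle model and compare it with the warm requests that follow. Nothing here is part of a GPUX SDK.

```python
import time
from typing import Callable, List


def measure_latencies(send_request: Callable[[], object], n: int = 3) -> List[float]:
    """Time n consecutive calls to send_request and return each latency in seconds.

    The first call after a period of inactivity is the one that may include
    cold-start time; later calls should reach an already-warm worker.
    """
    latencies = []
    for _ in range(n):
        start = time.perf_counter()
        send_request()  # hypothetical: any function that performs one inference request
        latencies.append(time.perf_counter() - start)
    return latencies


if __name__ == "__main__":
    # Stand-in for a real request: the first call simulates a 0.2 s cold start.
    state = {"warm": False}

    def fake_request():
        if not state["warm"]:
            time.sleep(0.2)  # simulated cold start
            state["warm"] = True
        time.sleep(0.01)  # simulated inference time

    cold, *warm = measure_latencies(fake_request)
    print(f"first call: {cold:.3f} s, warm calls: {[round(w, 3) for w in warm]}")
```

The first measurement only reflects a cold start if the model has actually gone idle beforehand; how long GPUX waits before scaling a model down is not stated here.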

Serverless GPU Inference

The platform provides serverless GPU inference, eliminating the need for users to manage underlying infrastructure. This simplifies the deployment process, allowing developers to focus on building and optimizing their AI models rather than handling servers.

P2P Marketplace

GPUX includes a peer-to-peer marketplace where users can monetize their private AI models by selling inference requests to other organizations. Model owners gain a new revenue stream, while buyers get access to models they could not otherwise use.

ReadWrite Volumes

ReadWrite Volumes provide storage that inference workloads can both read from and write to, giving models access to large datasets during inference. This is useful for models that process large amounts of data or need to update stored data as they run.

Multi-Model Support

GPUX supports a range of popular AI models, including StableDiffusion, SDXL 0.9, AlpacaLLM, and Whisper. Together these cover applications from image generation to text generation and speech recognition.

Best GPUX Use Cases & Applications

AI-Powered Image Generation

Artists and designers can deploy StableDiffusionXL on GPUX to generate high-quality images in seconds. Because cold starts take about 1 second, creative workflows are not held up waiting for a model to load, which suits real-time content creation.

Real-Time Speech Recognition

Developers can run Whisper on GPUX to build applications that transcribe speech in real time. Serverless GPU inference helps keep latency low, which suits live transcription services and voice assistants.

Monetizing Private Models

Enterprises with proprietary AI models can use GPUX's P2P marketplace to offer inference services to other organizations. This creates a new revenue stream while maximizing the utility of their AI investments.

How to Use GPUX: Step-by-Step Guide

Sign up on the GPUX platform and open the dashboard. The interface walks you through the initial setup, where you configure your account and preferences.

Choose the AI model you want to deploy from the supported list, such as StableDiffusionXL or Whisper. GPUX provides detailed documentation and examples to help you select the right model for your needs.

Upload your own model or use one of the pre-configured models available on the platform. Because GPUX is serverless, there are no servers to provision before deploying.

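Run inference with a single API call. For example, this cURL command asks the hosted SDXL model to generate an image for the prompt "sword":

curl "https://i.gpux.ai/gpux/sdxl?prompt=sword"

Quoting the URL keeps shells such as zsh from treating the ? as a wildcard character.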
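The same request can be made from Python using only the standard library. This is a sketch, assuming the endpoint accepts a plain GET request with a `prompt` query parameter exactly as in the cURL example; the helper names `build_sdxl_url` and `run_sdxl` are hypothetical, and authentication, the response format, and any other parameters are not covered because they are not documented here. URL-encoding the prompt matters as soon as it contains spaces or characters such as `&`.

```python
from urllib.parse import quote, urlencode
from urllib.request import urlopen

# Base URL taken from the cURL example; no other endpoints are assumed.
BASE_URL = "https://i.gpux.ai/gpux/sdxl"


def build_sdxl_url(prompt: str) -> str:
    """Return the SDXL request URL with the prompt percent-encoded (hypothetical helper)."""
    # quote_via=quote encodes spaces as %20 instead of '+'.
    return f"{BASE_URL}?{urlencode({'prompt': prompt}, quote_via=quote)}"


def run_sdxl(prompt: str, timeout: float = 60.0) -> bytes:
    """Send a GET request and return the raw response body (hypothetical helper).

    The response is returned unparsed because its format is not documented here.
    Authentication, if the endpoint requires it, is not handled.
    """
    with urlopen(build_sdxl_url(prompt), timeout=timeout) as resp:
        return resp.read()


if __name__ == "__main__":
    print(build_sdxl_url("sword"))
    # print(len(run_sdxl("sword")))  # uncomment to make a real network call
```

For example, `build_sdxl_url("a red sword")` yields `https://i.gpux.ai/gpux/sdxl?prompt=a%20red%20sword`. Encoding spaces as `%20` rather than `+` avoids depending on how the server decodes form-style query strings.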

Monitor and manage your deployments through the GPUX dashboard. The platform provides real-time analytics and logs to help you optimize performance and troubleshoot any issues.

Is GPUX Worth It? FAQ & Reviews

How fast is GPUX's cold start?

GPUX offers a cold start time of about 1 second, so models begin serving requests almost immediately.

Can I sell access to my private models?

Yes. GPUX's P2P marketplace lets you sell inference requests on your private models to other organizations, creating a new revenue stream.

Which models does GPUX support?

GPUX supports a range of models, including StableDiffusion, SDXL 0.9, AlpacaLLM, and Whisper, covering image generation, text generation, and speech recognition.

Is there a free plan?

Yes. GPUX offers a free plan with limited inference runs and basic support. For unlimited access and additional features, you can upgrade to the Pro plan.

How do I deploy a model?

Sign up, choose a model, upload or configure it, and run inference via an API call. The platform handles the infrastructure.