DeepSeek OCR

Next-gen document intelligence with 10× compression for 100+ languages

What is DeepSeek OCR? Complete Overview

DeepSeek OCR is a two-stage, transformer-based document AI: an encoder stage compresses high-resolution pages into compact vision tokens, and a high-capacity mixture-of-experts language model then decodes them. It reports near-lossless text, layout, and diagram understanding across 100+ languages while sharply reducing computational requirements. The tool targets professionals and enterprises doing large-scale document digitization, technical documentation processing, and multilingual dataset creation. Its Context Optical Compression Engine handles complex documents such as legal contracts, financial reports, and scientific papers with 97% exact-match accuracy at 10× compression, and processes up to 200,000 pages per day on a single NVIDIA A100 GPU.

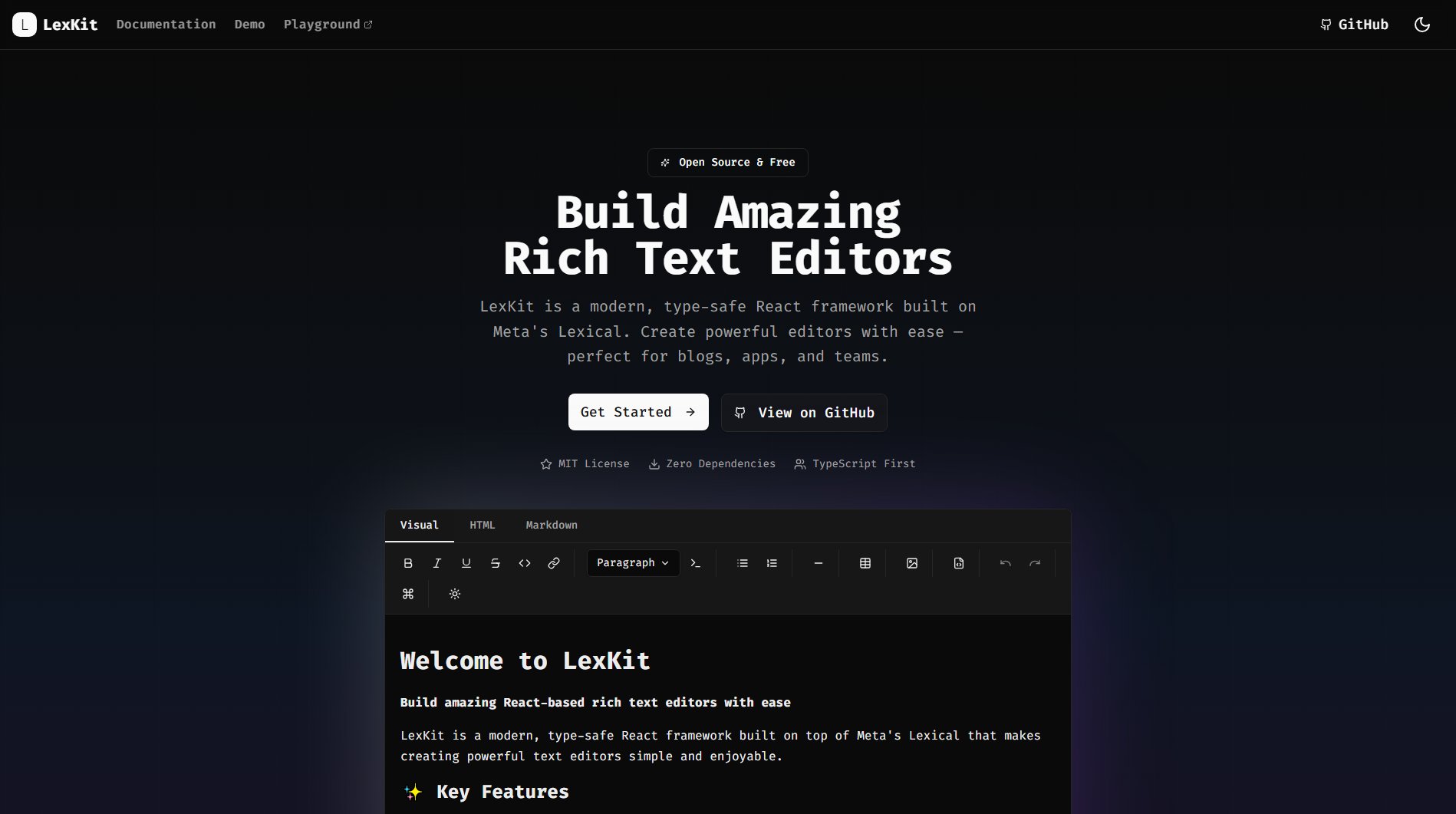

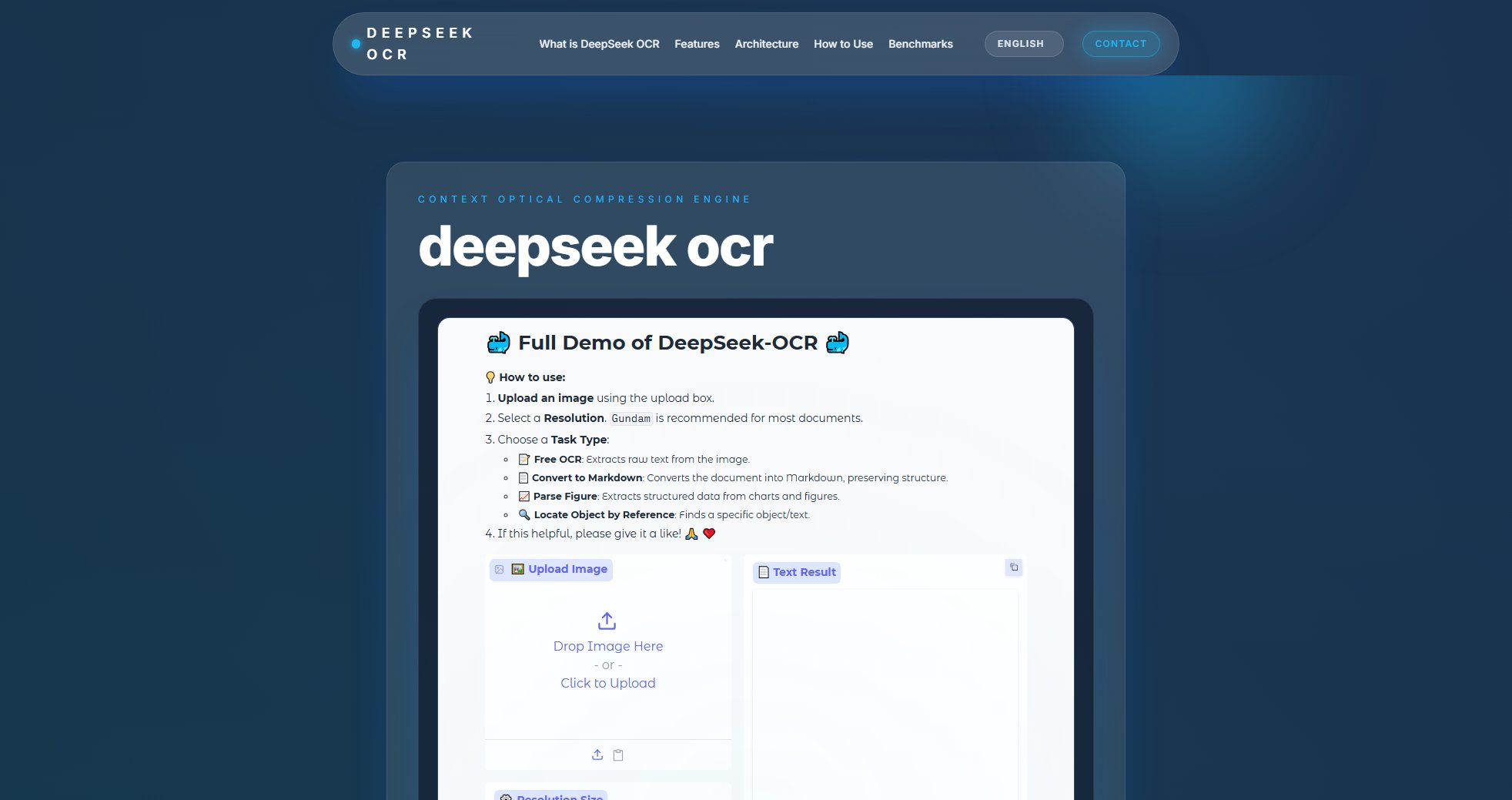

What Can DeepSeek OCR Do? Key Features

Context Optical Compression Engine

Reduces a 1024×1024 page to just 256 vision tokens while preserving layout and semantic information. This makes it practical to process long documents that would overwhelm conventional OCR systems, at 10× compression with minimal accuracy loss.
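The 256-token figure follows from simple arithmetic. The sketch below assumes 16×16-pixel patches (an assumption, not stated on this page) followed by the 16× downsampling described in the FAQ section; the function name is illustrative only.

```python
def vision_tokens(side_px: int, patch_px: int = 16, downsample: int = 16) -> int:
    """Estimate the vision-token count for a square page image.

    patch_px is an assumed patch size; downsample is the 16x token
    reduction applied before the decoder sees the page.
    """
    if side_px % patch_px != 0:
        raise ValueError("side_px must be a multiple of patch_px")
    patches = (side_px // patch_px) ** 2  # e.g. 64 * 64 = 4096 for 1024 px
    return patches // downsample


for side in (512, 1024, 1280):
    print(side, vision_tokens(side))
```

Under these assumptions, a 1024×1024 page yields 4096 patches and 256 tokens, and the 512 and 1280 pixel cases land on the 64 and 400 token ends of the quoted range.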

Multilingual Support

Supports 100+ languages including Latin, CJK, Cyrillic, and specialized scientific scripts. The extensive training on real and synthetic multilingual data makes it ideal for global digitization projects.

Structured Output

Produces HTML tables, Markdown charts, SMILES chemistry strings, and geometry annotations, enabling direct integration with analytics pipelines without manual reconstruction of complex document elements.
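As an illustration of feeding HTML table output into an analytics pipeline, here is a minimal sketch that turns a table into a list of records using only the Python standard library. The sample HTML is made up for the example; real output may carry extra attributes or nested markup that this sketch does not handle.

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the cell text of each <tr> as a list of strings."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None
        self._cell = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def table_to_records(html: str) -> list[dict]:
    """Treat the first row as the header and map later rows onto it."""
    parser = TableRows()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


# Hypothetical sample, not actual model output.
sample = (
    "<table><tr><th>Item</th><th>Total</th></tr>"
    "<tr><td>Paper</td><td>12.50</td></tr></table>"
)
print(table_to_records(sample))  # [{'Item': 'Paper', 'Total': '12.50'}]
```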

Mode Selector

Offers precision tuning between speed and fidelity with modes ranging from Tiny (64 tokens) to Gundam (multi-viewport tiling), making it adaptable to various document types from invoices to blueprints.
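One way to reason about mode choice is to pick the cheapest mode that keeps the text-to-vision-token ratio at or below the 10× compression figure. The sketch below is a heuristic, not the tool's own selector. The Tiny budget comes from the text; Base (256) and Large (400) are assumed from the token counts quoted elsewhere on this page, and `pick_mode` is a hypothetical helper.

```python
# Token budgets per mode. Tiny is stated in the text; Base and Large
# are assumptions drawn from the 256 and 64-400 figures on this page.
MODE_TOKENS = {"Tiny": 64, "Base": 256, "Large": 400}


def pick_mode(text_tokens: int, max_ratio: float = 10.0) -> str:
    """Return the cheapest mode whose compression ratio stays within max_ratio."""
    for mode, budget in sorted(MODE_TOKENS.items(), key=lambda kv: kv[1]):
        if text_tokens / budget <= max_ratio:
            return mode
    # Very dense pages fall through to multi-viewport tiling.
    return "Gundam"


for n in (600, 2000, 3500, 5000):
    print(n, pick_mode(n))
```

With these budgets, a page of about 600 text tokens fits Tiny, while a page of about 5,000 text tokens exceeds 10× even in Large and falls back to Gundam.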

DeepSeek-3B-MoE Decoder

Uses a 3B-parameter mixture-of-experts model that activates ~570M parameters per token to reconstruct text, layout tags, and captions. FlashAttention and CUDA optimizations keep throughput high.

Best DeepSeek OCR Use Cases & Applications

Scanned Books & Reports Digitization

Compress thousands of words per page into compact tokens for downstream search, summarization, and knowledge graph pipelines while preserving original layout.

Technical Documentation Processing

Extract geometry reasoning, engineering annotations, and chemical SMILES from visual assets to support scientific analysis and technical documentation conversion.

Multilingual Dataset Creation

Build global corpora across 100+ languages by scanning books or surveys to create training data for downstream language models with layout-aware output.

Document Conversion Applications

Embed into invoice, contract, or form-processing platforms to emit layout-aware JSON and HTML ready for automation, reducing manual data entry.

How to Use DeepSeek OCR: Step-by-Step Guide

Deploy locally with GPUs by cloning the GitHub repository, downloading the 6.7 GB safetensors checkpoint, and configuring PyTorch 2.6+ with FlashAttention. Base mode runs on 8–10 GB GPUs.

Call via API using DeepSeek's OpenAI-compatible endpoints to submit images and receive structured text output, priced at ~$0.028 per million input tokens for cache hits.
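A minimal sketch of what such a request body could look like, assuming the endpoint accepts OpenAI-style chat messages with `image_url` content parts. The model name and prompt are placeholders, not confirmed identifiers, and nothing is sent over the network here. The cost helper simply applies the cache-hit price quoted above.

```python
import base64
import json

PRICE_PER_M_INPUT_TOKENS = 0.028  # USD, the cache-hit price quoted above


def build_request(image_bytes: bytes, model: str = "hypothetical-ocr-model") -> dict:
    """Build an OpenAI-style chat payload carrying one PNG page image.

    The model name is a placeholder; check the provider's documentation
    for the real identifier and for whether image inputs are accepted.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Convert this page to Markdown."},
                    {
                        "type": "image_url",
                        "image_url": {"url": "data:image/png;base64," + encoded},
                    },
                ],
            }
        ],
    }


def input_cost_usd(tokens: int) -> float:
    """Estimate input cost at the quoted cache-hit rate."""
    return tokens / 1_000_000 * PRICE_PER_M_INPUT_TOKENS


payload = build_request(b"abc")  # stand-in bytes, not a real image
print(json.dumps(payload)[:80])
print(input_cost_usd(1_000_000))
```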

Integrate outputs into workflows by converting to JSON, linking SMILES strings to cheminformatics pipelines, or auto-captioning diagrams for bilingual publishing.

Optimize performance by scheduling latency-sensitive jobs on Base or Large modes and queuing archival batches in Tiny mode to maximize GPU utilization.
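The scheduling advice above can be sketched as a two-queue dispatcher: latency-sensitive jobs are assigned Base mode and run first, and archival jobs are queued behind them in Tiny mode. The `Job` type and `schedule` function are hypothetical names for illustration, and a real deployment would likely add batching and priorities.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    doc_id: str
    latency_sensitive: bool


def schedule(jobs: list[Job]) -> list[tuple[str, str]]:
    """Return (doc_id, mode) pairs with latency-sensitive work first."""
    interactive, archival = deque(), deque()
    for job in jobs:
        if job.latency_sensitive:
            interactive.append((job.doc_id, "Base"))
        else:
            archival.append((job.doc_id, "Tiny"))
    # Drain the interactive queue before the archival backlog.
    return list(interactive) + list(archival)


plan = schedule([Job("archive-001", False), Job("invoice-42", True)])
print(plan)  # [('invoice-42', 'Base'), ('archive-001', 'Tiny')]
```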

Is DeepSeek OCR Worth It? FAQ & Reviews

The compression engine slices each page into patches, applies 16× convolutional downsampling, and forwards only 64–400 vision tokens to the MoE decoder, retaining layout cues while cutting context size tenfold.

For hardware, an NVIDIA A100 (40 GB) offers peak throughput (~200k pages/day), while RTX 30-series cards with ≥8 GB VRAM can handle Base mode for moderate loads.
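For capacity planning, the quoted throughput converts into a rough GPU count. This is a back-of-the-envelope sketch that assumes the ~200k pages/day figure holds for your documents and mode, which real workloads may not match. `gpus_needed` is an illustrative helper.

```python
import math

PAGES_PER_GPU_DAY = 200_000  # peak A100 figure quoted above


def gpus_needed(total_pages: int, deadline_days: float) -> int:
    """Estimate how many A100-class GPUs clear a backlog by the deadline."""
    if deadline_days <= 0:
        raise ValueError("deadline_days must be positive")
    return math.ceil(total_pages / (PAGES_PER_GPU_DAY * deadline_days))


print(gpus_needed(5_000_000, 7))  # 4
```

At this rate, five million pages in a week works out to about 3.6 GPU-weeks of work, hence four GPUs.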

Table and chart structure is preserved: tests show near-lossless HTML/Markdown reproduction of tables and charts, enabling direct integration with analytics pipelines.

Local deployment keeps data on-premises, and the model is available under the MIT license. For API use, consult compliance guidance, since documents are processed on cloud infrastructure.

On complex documents, DeepSeek OCR matches or exceeds cloud competitors while using far fewer vision tokens, which makes it well suited to GPU-constrained operations.